|

|

|

14:09 |

|

|

show

|

4:27 |

Hello and welcome to the course Write Pythonic Code Like a Seasoned Developer.

My name is Michael Kennedy and we are going to be on this journey together to help you write more readable, more efficient and more natural Python code.

So what is Pythonic code anyway?

When developers are new to Python, they often hear this phrase Pythonic and they ask what exactly does that mean?

In any language, there is a way of doing things naturally and a way that kind of fight the conventions of the language.

When you work naturally with the language features and the runtime features, this is called idiomatic code, and in Python when you write idiomatic Python we call this Pythonic code.

One of the challenges in Python is it's super easy to get started and kind of learn the basics and start writing programs before you really master the language.

And what that often means is people come in from other languages like C++ or Java or something like that, they will take algorithms or code that they have and bring it over to Python and they will just tweak the syntax until it executes in Python, but this often uses the language features of - let's just focus on Java.

In Python there is often a more concise, more natural way of doing things, and when you look at code that came over from Java, we'll just take a really simple example- if you have a class and the class has a get value and set value, because in Java that is typically the way you do encapsulation, and people bring those classes in this code over and migrate it to Python, they might still have this weird getter/setter type of code.

And that would look completely bizzare in Python because we have properties for example.

So we are going to look at this idea of Pythonic code, now, it's pretty easy to understand but it turns out to be fairly hard to make concrete, you'll see a lot of blog posts and things of people trying to put structure or examples behind this concept of Pythonic code.

We are going to go through over 50 examples of things I consider Pythonic and by the end you'll have many examples, patterns and so on to help you have a solid grip on what Pythonic code is.

So what is Pythonic code and why does it matter?

Well, when you write Pythonic code, you are leveraging the experience of 25 years of many thousands, maybe millions of developers, these guys and girls have worked in this language day in and day out for the last 25 years and they really perfected the way of working with classes, functions, loops, and so on, and when you are new especially, it's very helpful to just study what those folks have done and mimic that.

When you write Pythonic code, you are writing code that is specifically tuned to the CPython runtime.

The CPython interpreter and the Python language have evolved together, they have grown up together, so the idioms of the Python language are of course matched or paired well with the underlying runtime, so writing Pythonic code is an easy way to write code that the interpreter expects to run.

When you write Pythonic code, you are writing code that is easily read and understood by Python developers.

A Python developer can look at standard idiomatic Python and just glance at sections and go, "oh, I see what they are doing here, I see what they are doing there, bam, bam, bam" and quickly understand it.

If instead it's some algorithm that is taken from another language with other idioms, the experienced developer has to read through and try to understand what is happening at a much lower level, and so your code is more readable to experienced developers and even if you are new will become probably more readable to you if you write Pythonic code.

One of the super powers of Python is that it is a very readable and simple language without giving up the expressiveness of the language.

People coming from other languages that are less simple, less clean and easy to work with will bring those programming practices or those idioms over and they will write code that is not as simple as it could be in Python even though maybe it was a simple as it could be in C.

So when you write Pythonic code, you are often writing code that is simpler and cleaner than otherwise would be the case.

If you are working on an open source project, it will be easier for other contributors to join in because like I said, it's easier for them to read and understand the code at a glance, and they will more naturally know what you would expect them to write.

If you are working on a software team, it's easier to onboard new Python developers into your team if you are writing idiomatic code, because if they already know Python it's much easier for them to grok your large project.

|

|

|

show

|

3:56 |

What areas are we going to cover in this class?

Well, we are going to start with the foundations and this concept called PEP 8.

So, PEP 8 is a standardized document that talks about the way code should be formatted, and even some of the Pythonic ideas and Pythonic code examples.

However, we are going to go way beyond PEP 8 in this course, and so we'll probably spend 15 minutes talking about PEP 8 and then we'll move onto other foundational items.

Then we are going to focus on dictionaries, dictionaries play a super important role in Python, they are basically the backing store for classes, they are used for data exchange all over the place, and there are a lot of interesting use cases and ways in which dictionaries are used in a language.

We are going to talk about a lot of interesting aspects and optimal ways to use and leverage dictionaries.

Next up are working with collections, things called list comprehensions and generator expressions.

And we'll see that Python has a lot of interesting flexibility around working with sequences, and we'll see the best way to do this here.

Next, functions and methods.

This will include the use of things like lambda expressions for small inline methods, as well as returning multiple values from methods and that sort of things.

There is a lot to look at to write Pythonic functions.

One of the great powers of Python is the ability to import or pip install a whole variety of packages, there is even a great xkcd cartoon about importing packages in Python, and we'll see that there are a lot of interesting Pythonic conventions around working with packages and modules.

Next up, we are going to look at classes and objects.

Object oriented programming in Python is a key cornerstone concept, even though it may play a slightly less important role than languages like Java and C#, still, classes are really important and there is a lot of idiomatic conventions around working with classes, we'll focus on that in this section.

Python has a lot of powerful ways of working with loops, one of the first giveaways if somebody is brand new to Python is they are not using loops correctly, so we'll talk about when and how you should use loops and we'll even talk about the controversial else clause for "for...in" and "while" loops.

Next, we'll talk about tuples.

Tuples are smallish, read-only collections that let you package up related possibly heterogeneous data and pass it around, If we go into a basic database queries and the built in DB API you'll see that the rows come back as tuples.

Some of the powerful techniques we'll learn about loops involve tuples and we'll see that tuples in general play a really important role, and there is some powerful and useful conventions around working with tuples in Python.

Finally, we are going to look beyond the standard library, with something I am calling Python for Humans; one of the great powers of Python is the ability to go out to PyPi and grab one of the over 80 000 packages, install them using pip or something like this and add amazing powers to your application.

People who are new to Python often skip this step and they look at something they have to do and are just like OK I think "I can implement it in these 20 lines of code".

It's very likely that there is already a package out there that you can use to do this, so we are going to study two packages one for HTTP and one for database access to really bring home this point of look to PyPi and look to open source first before you start writing your own algorithms.

Of course, over time, we may add more topics than what are described here, I am sure as more and more people take this class they will say, "Hey Michael, did you think about having this", or "I also consider this little bit to be idiomatic." Now I don't want to just grab every single detail that I can find, that is possibly Pythonic code and cram it in here, I want to cover the stuff that's most important and not waste your time, but of course, I am sure we'll hear about some new ones that are great and those may be folded in over time.

|

|

|

show

|

0:19 |

We are going to write a lot of code in this course.

And you want to download it and play with it and experiment with it and so on so of course I am going to put this into a GitHub repository, here you can see github.com/mikeckennedy/write-Pythonic-code-demos so I recommend that you go out there and star this so you have it as a reference.

|

|

|

show

|

0:18 |

Are you brand new to Python?

Well this course assumes that you have some basic knowledge on how to create functions, classes, loops, those types of things, and we focus really on the best way to do those.

So if you are brand new to Python, I recommend you check out my course Python Jumpstart By Building Ten Applications and you can find that at talkpython.fm/course

|

|

|

show

|

1:59 |

Before we get to the actual code examples, let's really quickly talk about setup, tools, versions, that sort of thing.

So, this course is built with Python 3 in mind.

If you don't have Python 3 installed, you can get it for your operating system at Python.org/downloads, or you can "Homebrew" or "apt get install" it.

That said, most of the topics will actually be quite similar between Python 2 and Python 3, so we'll talk about Python 2 whenever there are significant differences.

You may be wondering why are we talking about Python 3, when Python 2 is more widely used today in commercial applications.

Let's look at Python over time and I think you'll see why I made this decision; if we start at the beginning, back in 1989, Guido started work on Python.

1991, Python 1 came out, 2000 Python 2, and then, in 2008 Python 3 came out, and this was the first major breaking change to try to improve and clean up the language from all the years that had preceded it.

Well, there was limited adoption because people already had large working code basis, a lot of the PyPi packages were not updated and so on, now if we look at today Python 3 has default as much more common than it has been but more importantly, if you look just four years into the future, not far at all, you will see that Python 2 is "end of life", there will be no more support for Python 2 in less than four years.

And that means, whoever has projects, written in Python 2 have to not just start upgrading them, but complete the upgrades by then.

So I expect there to be quite a pick up for Python 3 and I think focusing on Python 3 is very important going forward.

Guido Van Rossum and the core developers feel this way as well, if you look at the last three keynotes, there has been something like this where Guido has gotten up at PyCon and said, "There is not going to be another version of Python 2, going forward it's all about Python 3." So, that's why this course itself is based on Python 3, even though like I said, differences are quite minor for the topics we are covering.

|

|

|

show

|

1:05 |

So you can use any editor you would like, however, I am going to use PyCharm.

One, because I think PyCharm is the best editor for Python, two- because PyCharm actually detects and warns you and sometimes even automatically corrects errors when you write code that is not Pythonic.

This has to do with naming, this has to do with structure, all sorts of cool things.

So I am going to be using PyCharm in the videos and I encourage you to get it as well.

You can get it at jetbrains.com/pycharm.

If we open that in our browser you can see here is the PyCharm page and if we go to download it, you'll see there are actually two versions, there is the PyCharm community edition which is 100% free, and there is the PyCharm professional edition and if you look at the place where the features are missing from the community edition, it's really around things like database, web and profiler information as well as some of their Docker support.

So, for this course you should be able to use the community edition, I love this tool, I pay for it so I am going to be using the professional edition.

It works great on OS X, on Windows and on Linux.

So whatever operating system you use, you should be able to use it.

|

|

|

show

|

2:05 |

Welcome to your course i want to take just a quick moment to take you on a tour, the video player in all of its features so that you get the most out of this entire course and all the courses you take with us so you'll start your course page of course, and you can see that it graze out and collapses the work they've already done so let's, go to the next video here opens up this separate player and you could see it a standard video player stuff you can pause for play you can actually skip back a few seconds or skip forward a few more you can jump to the next or previous lecture things like that shows you which chapter in which lecture topic you're learning right now and as other cool stuff like take me to the course page, show me the full transcript dialogue for this lecture take me to get home repo where the source code for this course lives and even do full text search and when we have transcripts that's searching every spoken word in the entire video not just titles and description that things like that also some social media stuff up there as well.

For those of you who have a hard time hearing or don't speak english is your first language we have subtitles from the transcripts, so if you turn on subtitles right here, you'll be able to follow along as this words are spoken on the screen.

I know that could be a big help to some of you just cause this is a web app doesn't mean you can't use your keyboard.

You want a pause and play?

Use your space bar to top of that, you want to skip ahead or backwards left arrow, right?

Our next lecture shift left shift, right went to toggle subtitles just hit s and if you wonder what all the hockey star and click this little thing right here, it'll bring up a dialogue with all the hockey options.

Finally, you may be watching this on a tablet or even a phone, hopefully a big phone, but you might be watching this in some sort of touch screen device.

If that's true, you're probably holding with your thumb, so you click right here.

Seek back ten seconds right there to seek ahead thirty and, of course, click in the middle to toggle play or pause now on ios because the way i was works, they don't let you auto start playing videos, so you may have to click right in the middle here.

Start each lecture on iowa's that's a player now go enjoy that core.

|

|

|

|

13:52 |

|

|

show

|

1:54 |

Are you ready to start looking at some Pythonic examples and writing some code?

I sure am, we are going to start by focusing on PEP 8.

And some of the very basic structured ways in which you are supposed to write Python code, and then we'll quickly move beyond that to more the patterns and techniques that we are going to talk about for the rest of class.

So one of the first questions about this idiomatic Python code, this Pythonic code, is "Who decides what counts as Pythonic and what doesn't?" The way one person writes code may very well be different than the way another person likes to write code, and this is one of the reasons that exactly, specifically stating what is Pythonic code and what does it look like is a challenge, but there is a couple of areas where we might take inspiration and pull together some sources and find some consensus.

One of them is just the community.

We can look at blogs, Stack Overflow, things like that and see what people are saying and what they agree or disagree upon.

So, here is a question that asked what is Pythonic code and there is some examples given.

Another area that people take inspiration from is something called The Zen of Python, and if you want to look at The Zen of Python, this is by Tim Peters you can just type import this inside of the REPL in any version of Python and you'll get this, and it's things like "beautiful is better than ugly, flat is better than nested, errors should never pass silently"; again, this is not super concrete but it does give some sort of structure about what is important and what isn't.

We also have PEP 8, Python Enhancement Proposal 8, one of the very first official updates to the Python language was this thing called PEP 8 which is a style guide for Python.

Mostly this talks about the actual structure of your code, like "you should use spaces, not tabs", those types of things, but it also has some guidance on patterns as well.

We'll look at the few of the recommendations from PEP 8 before we move on to the more design/pattern/style recommendations.

|

|

|

show

|

3:59 |

The first PEP 8 recommendation that we are going to look at is around importing modules and packages.

So let's switch over here to PyCharm and have a look at some not-so-great code.

You can see on line seven here, we are importing collections, the recommendation from PEP 8 is that all module level imports should go at the top, unless there is some sort of function level import you are doing kind of unusual, conditional things.

And notice there is a little squiggle under here, and that's actually PyCharm saying "here is a PEP 8 violation, a module level import is not at the top of the file." So we can take this and put it at the top of the file, now everything is happy.

So let's look at few other things we can do, we could say "from os import change directory, change flags and change owner." This is a good way to import a bunch of items from os and not have to state their name, we could do this a different way, using what's called a wildcard import, we could say from os import *, now that would import the three listed above, but it would also import every symbol defined in os.

Now what's wrong with this?

Imagine up here I imported another module, from my module import path, so if I write this code, line four and line six, path is not going to be what you think it is, because we are importing path here but then we are importing every symbol from os using what is called the wildcard import, and os also has a path so it is going to overwrite the definition of path here.

So it's why it's always recommended to use this style of importing.

You may wonder why this is gray in PyCharm, PyCharm is just trying to help us out saying "here are some unused imports you can actually remove", but if I write something like "c = chdir", then that part will go away.

But of course, because we are importing the same thing twice basically it's also saying, I'll move that.

Here we go, so now it says "you are using change dir, change owner but not change flags", down below it's using "change owner".

All right, now "change flags", if I do something with that, it also lights up.

All right, so there was never a problem with that import, that was just PyCharm trying to tell us "hey, look out, you are actually not using that import." Another mistake that people make is they might say something like this, "import collections, os" and let's say "multiprocessing", they may put multiple imports on a single line.

That also works just fine, however, again, PEP 8 recommends that you put one import per line so it's very clear line by line what you depend on here, so we could fix this by saying, let me just put a "no:", something like that, and we could of course fix this, like so, "import os", "import multiprocessing".

So this would be the recommended way to write what was on line four.

So let's look at that import guidance a little more clearly.

Here we have at the top two bad styles of imports, first line: "import sys, os, multiprocessing", on a single line; PEP 8 says "do not import multiple modules on a single line", and avoid "from module import star", these wildcard imports because you may accidentally, unknowingly overwrite other imports.

So we have our better set of imports, "import sys, import os, import multiprocessing", and these of course are going to allow us to use "module name.symbol" name so "os.path" for example, in this sort of namespace style, and that's really nice to know where the particular symbol that you are working with, like "path", where it came from.

If you don't want to use that namespace style, you can use the final import we have here, "from os import path, change mod and change owner".

PEP 8 also have some guidance on the order and grouping of your imports.

It says the standard library imports should go at the top, related third party imports should go in a little section below that and then finally, local app other models within your own code should be put last.

Of course, all three of those go at the top of the file.

Another thing that's nice about PyCharm - it does this for you automatically if you hit Command+Alt+L for reformat file.

|

|

|

show

|

2:47 |

Next up, code layout.

Let's see what PEP 8 has to say about it.

The most important part about code layout that PEP 8 talks about is indentation.

So, imagine we have a method called some_method(), it says that you should indent four spaces, and PyCharm of course, as you've seen, knows PEP 8 and indents four spaces for us automatically, so: one, two, three, four, there we are.

And, we can write something so we could define a variable, hit Enter, like an "if" statement, so something like "if x is greater than two", enter; Python does not like us to use tabs, PEP 8 recommends we use spaces and every indent is four spaces, but it even looks like if I hit tab and delete tab and delete, hit tab, back and forth, it's actually four spaces and PyCharm just treats blocks of four spaces like tabs for us so that it's easier for us to work with.

Next, PEP 8 also talks about spaces between methods and other statements, so for example here it says there should be two blank lines between this method and anything else, somewhere down here two blank lines, two blank lines, I can go and fix this, but if I hit Command+Alt+L it will actually fix all of those spaces for us.

If we look within a method, PEP 8 says there should be either zero or one blank line between any section of that method, and use the blank line sparingly but to indicate a logical grouping; so you can see maybe we wanted to define x and later have this if statement, that would be fine, but if we go farther you can see "hey, PEP 8 warning, too many blank lines", put that back.

Last thing that I'll highlight is around classes, so if we have a class called AClass here, you can see it has three methods, and just like functions, it should have two blank lines separated in it from other module or level things like the top of the file, the method down here, and so on, but within the class, there should be one blank line between methods, not two.

So that's different than functions, when you are looking at methods within a class, it's a little more tightly grouped as you see here.

So let's look at that in a diagram, here we have our method, you can see the little dashes indicate spaces, which have all of our symbols within our method indented four spaces, additionally four more spaces for loops and conditionals and more and more as you nest those things, right, there should be two blank lines between methods, classes and other symbols defined within your module, and finally, PEP 8 recommends that you don't have lines longer than 79 characters, although there is a lot of debate around the value of exactly 79, but you know, it's a guidance, basically try to avoid having super long lines but don't do so by changing your code structures so much that it becomes small, so for example don't, like, create all your variables to just be one character so that when you combine them in some expression they still fit on the line, you would be better off using the continuation, but the idea of not having really long lines of code that's a good idea.

|

|

|

show

|

2:08 |

Next, let's look at what PEP 8 says about documentation and docstrings.

Here we have our some_method, method that I wrote, and I added three more parameters, if I come down here and I say some_method, see there is sort of note help in the IntelliSense, and if I say "look it up", PyCharm does a little work to say what the type hints would be if it could look at the types the way the function is written, but it doesn't tell me really what a1, a2 or a3 mean, or what the method itself does, and obviously it's poorly named, so this doesn't help as well.

So what we should do is give it some docstrings, and docstrings are just a string by itself on the first line of the method, or module, if you're documenting the module or class, if you're documenting the class.

So we can just say triple quote and then I hit Enter and PyCharm will actually look at the method and help us out, so it knows it returns a value and it knows it has three parameters called a1, a2 and a3, so when I hit Enter, I get the sort of structured way of documenting my method so we'll say something like "some_method returns a larger of one or two", which is not actually what it even returns, it's just a silly method I threw in there to talk about spaces, let's say this is a meaningful thing, and let's say this will be the first value will be first item to compare I will say a2 is the second item to compare and a3 is actually whether or not it should be reversed which again, doesn't mean anything.

We'll say 1 or 2.

So if we write this we can come down here now and I say some_method, and look at it I could hit F1 or if I was in REPL I can type "help some_method" and I would see this- some method returns the larger of 1 or 2, a1, first item to compare, a2 second item to compare, a3 should reverse, 1 or 2.

All right, so that's helpful, this is the recommended way to write these docstrings, according to PEP 8, let me fix this, put a little space here, we'll go back and look at the picture.

So the recommendation from PEP 8 is we should write docstrings for all public symbols, modules, functions, classes and methods, and they are not really necessary for non-public items, that's an implementation detail and you can do whatever you want there.

|

|

|

show

|

3:04 |

Let's talk about naming conventions.

PEP 8 actually says a lot about how you should name your functions, methods, classes, and other symbols in your code.

Before we get to the specifics, I just want to give you one of my core programming guidelines around naming, and that is: Names should be descriptive.

Let's imagine you are writing a function.

If you write the function and then you start to put a comment at the top to describe what that function does, I encourage you to stop and think about just making the concise statement about what that comment is the name of the function.

I am a big fan of Martin Fowler's reafctoring ideas and especially the "code smells" that he talks about, and "code smells" are things in code that are not really broken but they kind of smell off, like a function that is 200 lines long, it's not broken, it just doesn't seem right.

It sort of smells wrong, right, so breaking that to a smaller bunch of functions through refactoring might be the solution.

And when he talks about comments he says comments are deodorant for code smell, instead of actually fixing the code so it's remarkably descriptive and easy to understand, people often put comments to say "here is this really hard to understand thing, and here is the comment that says why it's this way or what it actually does or maybe in my mind, what it really should be named." So my rule of thumb is if you need a comment to describe what a function does, you probably need a better name.

That said, let's talk about how PEP 8 works.

So here is some code that is PEP 8 compliant.

We have a module called data_access and modules should all have short, all lower case names and if needed for readability you can put underscore separating them.

Next you see we have a content that does not change during the execution of our code, it's called TRANSACTION_MODE and I forced it to be serializable.

These should be all upper case.

Classes, classes have cap word names, like this, CustomerRepository, capital C capital R.

Variables and function arguments are always lower case, possibly separated with underscores as well; functions and methods lower case, again, possibly separated with underscores.

And if you happen to create your own exception type, here we might want to raise a RepositoryError and it derives from the exception class, when you have exceptions they should always be named in error, so "SomethingError".

Here we have RepositoryError.

As you have seen in other cases, PyCharm will help us here, notice I renamed other_method, here to be Javascripty style with the capital M and not the underscore, you hover over it PyCharm will say "no, no, no, you are naming your functions wrong." It'll even let us hit Alt+Enter and give us some options about fixing this up, it doesn't quite understand how to rename it for us but at least it gives us the option to go over here and do a rename.

Now this is not this renaming in place, but this is a refactor rename across the entire project, so if we had hundreds of files, docstrings, that sort of stuff, this would fix all of those.

It'll show us where it's going to change, here we don't have anything going on really so it's just this one line.

Now, our warning is gone.

|

|

|

|

32:10 |

|

|

show

|

5:57 |

Now that we've got more or less obvious PEP 8 items out of the way, let's talk about a handful of what I consider foundational items.

They don't fit neatly into some classification like loops, or classes or something like that, but they are really important so I put them at the beginning.

The first thing I want to talk about is what I am calling truthiness, the ability to take some kind of symbol or object in Python and test it and have it tell us whether or not it should evaluate to be True or False.

So just to remind you in case you don't remember all the nuances of truthiness in Python, there is a list of things that are defined to be False, and then if it's not in this list, if it doesn't match one of the items on the list, it's considered to be True.

So obviously, the keyword "False" is False, empty sequences or collections, lists, dictionaries, sets, strings, those types of things, those are all considered to be False even though they are objects which are pointing to real live instances.

The numerical values zero integer and zero float are False, "None", that is the thing that represents pointing to nothing, is False and unlike other languages like say C where the null is actually defined to just be a sort of type def back to the zero, "None" is not zero but it's still considered to be False.

And if you have a custom type you can actually define its truthiness by in Python 3 defining a dunder bool, or Python 2 dunder non-zero.

All right, if you are not on that list, then whatever you are testing against is True.

Now let's just review, that's not Pythonic code per se, so let's see how this leads us to Pythonic behavior around testing for True and False.

All right, here I have a real basic method I call print truthiness, and let's just test it here, I can say print the truthiness of True and we could also test False I suppose, so if I run this, no surprise, True is True, False is False and you can see we are using this ternary statement here, "TRUE if" expression, "else FALSE".

Now we are not saying if expression == True, we are using the implicit truthiness of whatever it is that we are passing, here it's the True and False values, but it could also be a sequence, it could be some other kind of expression, all right.

So the recommendation for Pythonic tests on True/False like this is to do something along these lines, it's to actually use the truthiness inherent in the object itself, so you would say something like if I had a val, let's just say it's 7, I would say "if val" and down here I would do something, I wouldn't say "if value == True", or "if value is not equal to zero", I would just use the implicit truthiness that here is a number, if it's non-zero, which in theory I was testing for, it's True, otherwise it's False.

So let's see this for sequences, so if I have some sequence, let's make a list, it could be a dictionary or whatever, we could print the truthiness of empty list and we could have our sequence here, and you can see an empty list is False, but now if I add something and I run it again, and I test for the list with one item, then you can see, now it's coming out to be True.

You can see we can put in here numbers like zero, we can put in 11, or even -11, and zero of course is False, the others are True.

Now we can call this function print_truthiness on "None" as well, maybe leave a little comment here, we'll say "for none", if we pass None, it's going to evaluate to be False, this is not the best way if you are explicitly expecting None to test for it we'll talk about that as a separate item, finally we can define a class called a class or whatever you want to call it and maybe it's going to have some kind of internal collection, we'd like to surface that so we could use the instance of this class itself in a sort of truthiness way, so down here we'll give it some data like a list here, we'll give it the ability to add an item to its set and then we'll go over here and since it's Python 3, we are going to define dunder bool and here for bool we can define one of these ternary statements, we can say "return True if self.data" and just leverage the truthiness of data itself, "else return False".

And then once we do this, we can come down here and say "a = AClass()", we can print and of course if we run it empty, we would expect it to be False, and there it is, it's False, now if we add and item to it and we print it again, now it evaluates to True.

So the Pythonic expectation here or the Pythonic style is when you are testing objects, leverage their implicit truthiness, now we'll write something like "this is True if the length of data is greater than zero." Now, we don't want to write that, we just want to say "if data", it has an implicit truthiness and we are going to leverage that.

So here you can see we've got basically the same code, True if the expression evaluates to True, via its implicit truthiness, else we'll state False, we've got empty collection and it evaluates to False.

We add something to the collection and it evaluates to True, however notice we can't actually test the data equal to True so we can't say print me the truthiness of "data == True" because that's False, these are not the same things, you are basically comparing a list to a singleton True value, a boolean which never is going to be equal, so it's always going to say False, but we can leverage the truthiness of data and it will come back as True.

Finally, if we are going to create a custom type that is itself imbued with truthiness, we give it a dunder bool method and then we just return True or False, depending on how we want it to behave.

You can see below our empty version is False, our non-empty version is True.

|

|

|

show

|

2:23 |

Next let's look at testing for a special case.

Imagine that we are going to call a function and that might return a list and that list might have items in it or it might not, maybe we are trying to do some kind of search and there is just no results, and yet, if it was an unable to perform a search, we'd like to indicate that differently than if there is just no results.

In that case, we can actually change our algorithm just a little bit and test for something different.

Let's look at PyCharm.

So here I have this function called find_accounts and you give it some search text; it checks to see if the database was available and if it's not available, it returns None, which we know is False.

Otherwise, it's going to actually do a search against our database and return a list of account ids.

However, there might not be any matching results in which case that list could be empty, so you might be tempted to say "if not accounts", then maybe I want to just like print something out like this, "else", let's actually try to say we are going to print the accounts.

This part is going to work fine if there are results, we would list them.

However, it could be that our search just returns no results, in which case this list would evaluate the False, so we are going to change this, we are going to test actually if accounts is None, when you are testing against singletons, and None is a singleton, there is only one instance of it per process, we'll use "is" and that actually compares at the pointers, not just the inherit truthiness of the objects or some kind of overloaded comparison operator.

So now our code is going to work as expected, if we literally get nothing back because the DB is unavailable, then we can say oh, DB is not available, otherwise, we might print them out.

And of course, that set might be empty, we might want to put in additional testing here to say "well, if it's empty" like your search works but there is no results, whatever.

But the Pythonic thing here is to notice that we are using "is" to test against singleton and a special case of singletons of course is the None keyword, which is one of the most common.

So here we have this in a graphic, so we are calling find_accounts, giving it some search text and we are going to get some results back and we want to make sure that not only is it truthy, but it's actually worth specifically testing that there is something we got back rather than a None pointer, so "if accounts is not None", and notice, when you negate "is", you say "is not", rather "than not account is", which would also work but is less Pythonic.

|

|

|

show

|

6:18 |

Now let's talk about multiple tests against a single variable.

What do I mean by that?

Here is an example, suppose we have an enumeration of moves, North, South, East, West, North-East, South-West, things like that, and we would like to test is this a horizontal or vertical move or is it a diagonal move?

So somehow we have received a move called "m", from somewhere maybe this is in a game or something, and we want to check is it one of the direct, vertical or horizontal ones so we would say "if m is North, or if it's South, or if it's West, or if it's East, then we are going to match this case." So this is very common in many languages but in Python, we'll see there is a more Pythonic way of doing things.

So here we are in PyCharm and we have basically the situation I just described.

You can see we are in our moves enumeration and it has these various moves, the four horizontal-vertical ones and the four diagonal ones, and it even has a parse method here, parse static method and we are going through and we are actually checking hey if we are given a text and the text lower case version is "w" then we are going to parse that to West, if it's "e" then it's East, "nw" is North-West and so on.

Now we'll see if we can actually improve on this as well but that's not the point of this conversation, OK, so we are going to run our code and it's going to ask for which direction we want to move, North, South, East, West and so on and we'll use that parse method you just saw.

If it's something it doesn't understand it returns None and as we already discussed we check if it is None, then we bail or print it out so just you can see what the parse did as and and then here is this code that we have before.

So, let's first run this to make sure it works, OK, "which direction you want to move?" Let's go South-West, move South-West was parsed, hey that's a diagonal move; we'll try another one, let's try North.

North, that's a direct move, maybe the name is a little off but you get the idea.

So let's write in more Pythonic version here.

recall in Python that if we have a collection, like a set or something like that, if we say "s = {1,2,9,11}", we can check for containment and in this set using the "n" keyword, so if we have "v = 11", we could ask we could say "print v in s" and because 11 is in the set it should come back and say yes, so let's just say something here, True, yes it's in there.

We change this to 12 and run it again, we'll see it False, it's not in there.

So we are going to use this principle to make our test more Pythonic.

So we can make this shorter, less error-prone, easier to read and more Pythonic using that idea, so what we'll say is "if m is in the set of moves to the North, moves to the South, moves to the West or moves to the East", like so, we could use a list, we could use a dictionary, but set seems like the right things for what we are trying to express here.

Let's just run it and make sure that this still works.

So if we move North, it's a direct move, if we move South-East that's a diagonal move.

Let's just try, let's take West, boom, direct move.

OK, so that's pretty sleek, right?

So when we have a single variable and we want to test it against multiple conditions, use this "in" keyword, now this is extremely readable, but in fact, it would be a little bit slower, so most of the time, a little bit slower in something like this is you know, a millisecond here, a millisecond there, nobody cares, it doesn't matter.

But if this happened to be within a really tight loop, we could improve upon this by taking this set we want to test for and moving it outside the loop.

So we could do some kind of refactor and create a variable here and call this say a direct_moves, like that; where this gets put somewhere outside of our loop and then inside of the loop we can test it like this, again this should still work, just like before; North, yes that's a direct move, but sometimes you may want to avoid this for performance reasons.

Let's just do a quick little test to actually understand what the performance implications are.

So here we are going to do a "for...in loop" one million times and let's start out with the version that is not so Pythonic with the multiple tests, we'll just compute the boolean over and over and over and see how long that took.

So let's run this.

So it took 0.2 seconds, so 200 milliseconds for a million moves, chances are this doesn't really matter for you, but if we want to figure out how long that took for one, we could do something like this, so 10 to the -7, extremely fast but like I said, if you do it in a million times, hey maybe it matters, let's test a version that is more Pythonic but slightly slower.

Here 0.3 instead of 0.2, no big deal, however we wanted allocating the set each time to the loop, you'll see this is fairly slower.

So 2 seconds, instead of 0.2 seconds, so that's like 10 times slower, so you really have to decide how much does this performance matter you can see it's almost entirely negligible when you extract it outside of the loop, and you write it like this, it's still quite fast but it does put indent in when you do it this way.

So here I commented out a little test if you want to play with it, feel free to uncomment it, you should really thrive to do this, this sort of test here, and maybe I'll make it the most readable version until we have a performance problem, so we can inline this here, excellent, so here is a nice Pythonic way to test a single variable against multiple values in Python.

So this is probably the most natural way to write this code coming from somewhere like C++, Java, C# and so on, however, in Python we can use this set and the "n" operator to make this much readable, now if we want to see all the cases, it's very easy just look at what's in the set if you want to add or remove one easy to do and you won't miss an "or" with an "end" or drop a parenthesis or something weird like that.

|

|

|

show

|

3:32 |

This tip is nice and short but really helpful.

So let's talk about how we choose a random item.

Here in PyCharm, first I want to show you the bad what I am calling C-style but this appears in many languages.

So we have these letters, and letters and numbers, we'd like to randomly pick one and show it to the user.

So the most natural thing to do coming from a language like C is to create a random number that will be a proper index into that list, so we'd say "index = rand", we create "rand" and "n" be from 0 to the "len" of letters.

And we'll say "item = letters of index".

Now, this actually includes the upper bound so we need to take one away from it to make sure we don't have an off-by-one error, but of course, this is something you have to go look up in the documentation to see - is it including the upper bound and lower bound or just lower bound?

Something like this.

And we can just print out the item, if I run it, apparently "y" is the randomly selected one run it a few more times, "zero", "w", "k", awesome.

So what's wrong with this?

Well, there is a couple of things.

One, I have to go and calculate the length I have to know this is including the upper bound when I ask for these random numbers, so I have to do minus 1, oh and I forgot to check that there is actually an item in here that the index is not negative, something like that.

So let's write the Pythonic version.

You'll see it's easier to read, it's shorter, it's safer, everything you want.

So, I want a random item, and given a sequence, I can just say "random, choose a random item", from that sequence.

Done, I don't have to think about what the documentation says about upper and lower bounds, I don't have to verify anything, just print out the item, run it again, "c" was chosen by the C-style, and "d" by the Python style.

"b", "z", "4", "t", and so on.

Simple, sweet, but very effective and once you start using it, you will never want to go back to the C-style.

So here is that code in a graphic.

We have the bad style using the "random int", oh and you can see in my slide we actually have a bug about the upper bound, how interesting, huh?

There, I fixed it, but it was interesting that we had this place where we could introduce the bug and of course we get the index, we use the index to index into the letters, get the item and then we can print it out.

The Python one instead let's just go random that choice, from a sequence, boom, there you go.

In Python it's generally preferable to use declarative code rather than procedural code, and this is a little step in that direction.

|

|

|

show

|

7:07 |

Let's talk about string formatting and building up strings from fundamental values.

Over here in PyCharm we have two variables, a name and an age, Michael and 43.

We'd like to create the string "Hi, I'm Michael and I am 43 years old", given whatever values those variables have.

If you are coming from some languages like say C# or Javascript, you may try to go do something like this, you might say, "Hi", so if we try to run this, it's actually going to crash, you can see PyCharm is even pointing that out, it says there is something wrong with this age and this string concatenation here, boom, crash, cannot convert integer to string implicitly.

So this version is obviously not Pythonic, right, the deal is Python is not going to implicitly convert that to a string for us so we could say "crash, don't do this".

There is some other ways to do this, we could come down here and say if it's not going to implicitly convert this to a string, we can do that.

Now, this actually works.

We are done!

Not really, we are writing bad Python code, when we write this, so this works, but not Pythonic at all.

So, another way that we can do this is we can use a format string, so I can come down here and say, we are going to put a string and here we are going to put a number and I'll use "d" for integer, and then I can do a % format here and give it a tuple, so name and age, now format that in a little there, now if we run it, you can see exact same text.

So this is probably Pythonic, this is very common, but it's also an older format, it has a lot of limitations, so for example, if I was giving the age but the age was presented to me as actually a string, well, then this crashes.

What's on line 13 is not bad and is used quite widely, it does have some problems.

For example, you have to very carefully know what the type is, so I could do my "%d" with age because that was a number, but if it happens to be that it's just a string that represents a number, or could be converted to a number, well, again, a crash.

So there is a new, more modern style that works in Python 2 and in Python 3, I am going to decree this style to be Pythonic, so if we take the same code and I'm going to come here and say "I would like to just put whatever it's specified first here, whatever is specified second there, and do whatever you need to do to format them", here we go, we can do it like this, so "Hi I'm Michael, I am 43 years old", and it doesn't matter, let's comment this out for a moment it doesn't matter if this is a tuple, it doesn't matter if this is a string, it didn't even matter if it's an object, right, anything you want can go in there and you'll get the best string representation that you are going to get.

OK, so that's nice, really like that, but of course, with this style we do get more flexibility here, I didn't put numbers in here but you can say things like I would actually like the second, this is zero based, the second element to appear first, and the first item to appear second like this, so "Hi I'm 43, my name is Michael", I can even say something like "yeah, {1}" and repeat them, right, so that's excellent.

So I think that this style, which works well in both versions of Python is really the preferred way to do string formatting.

However, we can go farther, suppose I have a dictionary here and it has a day called Saturday and an office called home office, and I'd like to take those pieces of information and say: "On Saturday I was working in my home office." So we could go and do something like this, we could say "print" and let's just say we are going to put some kind of item here, and some kind of item here.

Now I could use the format you see above and just pull the items out of the dictionary but I can actually say "there is a key in the dictionary called day, and a key in a dictionary called office", now I would like to just project this dictionary into that string, and so I can say "format" and then do the keyword unpacking of the dictionary like so, on Saturday, I was working in my home office, so not only do you get more safety around the type you get a lot more flexibility.

You see, you can have additional data in the dictionary but if for some reason one of them is missing, if it's like this, well that's going to crash because it's going to say well, there is no office here, right, so you have to have at least values you are working within a dictionary but they can have more.

Now we can take this once step farther, and I can't show it to you running because I am running Python 3.5, but in Python 3.6 they have taken this idea and said this is a great idea, if we could come down here, let's take this version, so great idea, if I already have like a name, and an age I could come down here and use keyword values, like so and get this to run, right, "Hi, I'm Michael I'm 43", kind of like unpacking our dictionary, but in Python 3.6 they said you know, this is such a common thing, we would like to just grab the variable called name and grab the variable called age and put it in the string, without format.

So if we had just an f right here, then Python itself would actually pull name and age out of whatever namespace it happens to be in.

Now you can see this is an error because it doesn't work, in Python 3.5, but that's coming in the next release of Python, which is awesome.

All right, so let's review.

The first sort of naive version of printing out this string we are just going to concatenate them, that works well with strings but not with non-strings, so our age caused the crash, that was definitely not the way to do it, we could explicitly convert that age to a string, this is definitely not Pythonic, this is absolutely not Pythonic, but it does print put "Hi, I'm Michael I'm 43 years old", we can use the percent format style where we have "%s", "%d", "%f", that kind of thing, but it's restricted only formatting output for integers, floats, under the "%d" and then strings under the "%s".

Anything really that can be converted to a string for those.

But you kind of have to know the format, that's not perfect to my mind.

Some people say they prefer the percent version because there is fewer characters to type, and that somewhat depends on your editor I mean you do have to say ".format" instead of percent, in PyCharm you type ".f" and hit Enter, right, so you type about the same number of characters, so here are the more Pythonic versions in my opinion.

The first version, we used .format(name, age) and then we just put blank curly braces open/close curly braces, no numbers to say that the first item here, the second item there and so on, we have of course the extra flexibility to say "we'd like to reorder those and number them in the string", if we have a dictionary so we can actually project it by key into the string or we could even use keyword arguments on our format to accomplish the same thing, which is really nice because here we have a 1 and zero on a complex strings you might have many of these little indices or whatever, into your arguments and those are hard to maintain.

Whereas keyword arguments are really clear what goes into which part of the string.

Finally, in Python 3.6 coming soon, there is going to be what's called string interpolation, where you put an "f" (format) in front of the string that will automatically grab the data straight out of the variables.

|

|

|

show

|

2:49 |

The next Pythonic tip I want to cover is about sending an exit code to indicate whether your application succeeded or failed.

Let's have a look, so here I have a little utility app, meant to run on Windows and its job is to format the main drive C: and of course you don't want to just do that, just because the app was run, the script was run, you'd like a little confirmation, so here we ask the user "are you sure you want to format your main hard drive?" "Yes" or "no", you can see the default is "no" but if for some reason they type in "yes", and they come down here, we are going to simulate a little work, we are not actually going to format anyone's hard drive, and then we'll say "format completed".

So let's run this.

"Are you sure you want to format drive C?" Let's say "no", format canceled, now notice, exit code zero.

Let's come down here and say "yes", I would love to format drive C, so we do a little bit of work, you can't format it immediately, it's hard job here, and then boom, formatted successfully, enjoy the new hard drive space.

Now, we also got code zero, if I was trying to run this as a subprocess, or I was trying to orchestrate this by chaining it together, there is no way for me to know as a user of this script whether or not the user canceled or they actually formatted the hard drive.

We can easily come up here before a return, we don't technically need to return, because this is going to actually stop executing immediately; anyway, I can go over here and I can import "sys", now I was already importing "sys" so that I could use this little flush command that normally I wouldn't have been there, so I'll say "sys.exit" and we are going to exit here with let's say 1, down here, we can do this, we can say "sys.exit(0)" of course, you saw if we don't do anything at all, "exit(0)" is what is going to happen, so maybe I'll leave this one off.

Now, we also know that this is going to throw an exception so this "return" is actually unreachable, PyCharm told us that, OK so let's try again; "are you sure you want to format your main hard drive?" No.

Exit code 1.

Do you want to format your hard drive - oh please do, exit code 0.

Again, canceled, exit code 1, perfect.

So if there is any chance that your script is going to be called by other script or other applications you want to make sure that you indicate some kind of exit code so that they can use that information to determine whether or not they can continue whatever they are doing afterwards, whether or not your script succeeded.

So if we just let our app, our script exit, well it's always code zero, but we can use "sys.exit" and give some kind of code, typically the convention is if it's non-zero there was some kind of either failure or abnormal exit whereas zero is "all systems nominal".

Everything is good.

|

|

|

show

|

4:04 |

For this next Pythonic concept, let's go back to the Zen of Python.

So here I am in the Python REPL, and one of the core concepts is that flat is better than nested.

Now, it turns out this is one of my favorite items here and one of my favorite programming concepts, because a lot of people seem to do it in the reverse.

I call this sawtooth style of programming, we have lots of loops with "ifs" and conditionals and then more loops and so on.

So let's look at this, how we can apply this in Python.

Over in PyChram, we have a program that is meant to simulate downloading a file, and this might not be the best way to do it, obviously it is not the best way, but it really is a simple example to highlight this "flat is better than nested".

So what we want to do is we want to download a file and we are going to do a series of tests to make sure that we are able to download the file or at least we think we'll be successful before we actually try.

First one uses a little support module here, we are going to ask: "Is the download URL set?" if it is, then we are going to check the network status and then we are going to make sure that the DNS is working then finally we are going to check that we have permission to access the file and if all those things are true, then we are going to try to download it.

Otherwise, we are going to say well, this one goes back here so it looks like no access this one here, no DNS, this one here PyCharm even has little like tiny lines that are probably hard to see but I can follow back up, no network and then finally this one is bad URL.

This is a serious bad piece of mine, I hate code that looks like this, so let's write a different variation of this which I am going to call download_flat().

So let's just reverse these things, let's look at our conditionals here, instead of having these sort of positive checks, yes you can do this and you can do this and you can do this, we can return these "if" statements into what are called guarding clauses, you don't let the method run if one of them is failed.

So I can come over here and say "if not check url", then we'll say oh that's a bad URL.

And we can unindent, that's good, of course now that we are up here we want to return early, we'll say "if not check the network" then we want to say "no network".

And return, and again, unindent, it's better, again, "if not check DNS", do something and then return we'll print out that there is no DNS and then we'll unindent and finally we'll do this "if not this, return", and then if all the guarding clauses pass then write at the very edge of our method, not indented at all as far as this method is concerned, we can write our meaningful code and this makes it so much easier to maintain and write, instead of trying to do our actual work way down inside here.

So this is a very nice way that you can write these guarding clauses instead of this what I call sawtooth programming, so that you have nice, flat, easy to understand, easy to modify things, like for example down here, if I want to insert another test, I've got to make sure I've paired up correctly with the "else" down here and so on, but most importantly, you don't have to work in a hyper-indented way; this also works in loops, you wouldn't return out of the loop but you would just do a "continue" with the guarding clause instead of a test and then put the actual stuff inside the "if" statement.

"Flat is better than nested", let's see that in a diagram.

All right, here is the code that we wrote that was the sawtooth style with the positive checks, "make sure I can do this, make sure I can do that" and so on, and we rewrote that by inserting or converting those two guarding clauses this flat version is much better, we've converted all the positive checks to guarding clauses and it's much easier to add and remove those guarding clauses, see what the "else" clause is that goes with it because now it's right there and most importantly, at the end, we get to work in a non-indented way.

|

|

|

|

44:01 |

|

|

show

|

1:43 |

Now we are going to focus on a really important set of topics revolving around dictionaries in Python.

So, the first question you might ask is like: "Why should we focus on dictionaries in Pythonic code?" Well, it turns out that dictionaries are everywhere in Python, you'll see that dictionaries are the backing store for many types, so for example when you create a new object from a custom class you've created, every instance has its own backing store which is a dictionary for the fields and whatnot that you add to this class.

Dictionaries are isomorphic with JSON, so you'll see that there is basically a one to one mapping between Python dictionaries and JSON which is the web's most important transport type.

If we want to create a method, that allows us to use keyword arguments, one of the ways we can do that is to have the kwargs, **kwargs parameter and this allows us to not just pass a set of non-keyword arguments but actually arbitrary ones as well, and those come through as a dictionary.

As we'll see, dictionaries add incredible performance boost for certain types of algorithms, and we'll look at that in the section as well.

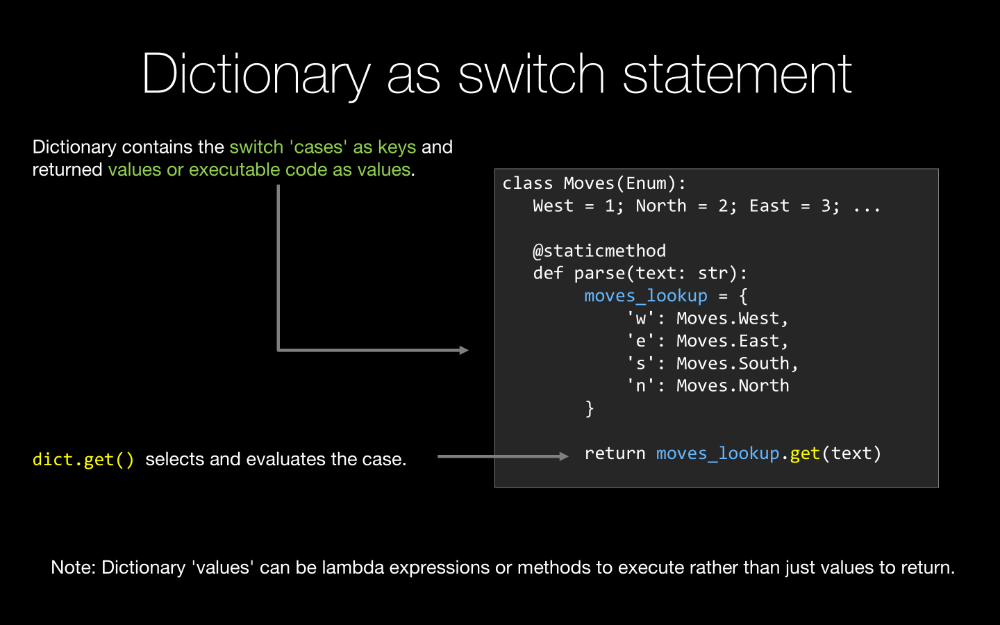

Python, the language does not have a switch statement, and we don't miss it too often but sometimes a switch statement is really nice and you'll see that we can actually leverage dictionaries to add switch statements, switch statement-like functionality to the Python language.

If you access a database and you are not using an ORM such is SQLAlchemy, typically the way the rows come back to you are each row comes back either as a tuple or a dictionary, preferably as a dictionary so you can lookup the columns by name rather than index.

So that's just a taste of why dictionaries are so important in Python, you'll see there is a lot of cool Pythonic techniques around working with them, so let's jump right in.

|

|

|

show

|

9:33 |

The first area I want to cover around dictionaries is using dictionaries for performance.

So when you have a collection of data, the most natural choice, the thing Python developers reach for first, has got to be a list.

It's super common, super useful and very flexible.

Let's look at two different algorithms one using a list and one using a dictionary and we'll compare the relative performance.

So here we have a bit of code and we'll look at this in detail in PyCharm in just a moment, but here is the basic way it works.

So we start out with a list of data, here you can see in our example we are going to be using half of million items, so we are calling a data list and it's going to contain some rich objects with multiple values or measurements - details aren't actually affected.

Then, suppose for some reason we have done some computation and we've come up with a hundred pieces of data we would like to go look up in our list, so we are going to go loop over each of those 100 and then we are going to find the item in the list, we can't just use interesting "item in list" in operator, because we actually have to filter on a particular value, what we did instead is wrote this method called find_point_by_id_in_list and it just walks through the list, it compares the id it's looking for against the ids that it finds in the list, as soon as it finds the match, it returns that one, it's assuming to be unique and then it appends it to this interesting_points.

So this is one version of the algorithm.

The other one is well, maybe we could do a little bit better if we actually used a dictionary.

And then we could index - the key for the dictionary could be the id.

So if we wrote it like this, here we have a dictionary of half a million of items, again the same dynamically discovered 100 interesting points, and instead of doing this lookup by walking through the items, we can actually map the id to the objects that we are looking for so we can just index into the dictionary.

Obviously, indexing into the dictionary is going to be faster, but possibly the computation, the building of the dictionary itself might be way slower, if we are going to do this a 100 times, we have half a million items, right, it's much more complicated to generate a dictionary with half a million items than it is a list.

So let's see this in action and see what the verdict is.

So here we have our data point, our data point is a named tuple, it could have been a custom class but named tuple is sufficient and it has five values: id, an "x y" for two dimensional coordinates, a temperature and a quality on the measurement of the temperature.

You can see I have collapsed some areas of the code because they don't really matter, these little print outs I think they kind of make it hard to read.

In PyCharm you can highlight these and hit Command+.

and turn them into little collapsible regions, so I did that so that you can focus on the algorithm and not the little details.

Here we have our data_list that we are going to work with, and we are going to use a random seed of zeros so we always do exactly the same thing, but randomly, so that we have perfectly repeatable results and then down here for each item of this range of half a million each time through the list we are going to randomly construct one of these data points and put it into our list.

Next, we do a little reordering on the list just to make sure that we don't just randomly access it in order, since we are using auto incrementing ids, next we are going to create our set of interesting ids that we are going to go search through our list, and then later through our dictionary.

Really we would use some kind of algorithm and we would find interesting items we need to go look up, but in this case we are just going to randomly do it, but there is a few Pythonic things going on here, one - notice this statement here with the curly braces, and then one item left to the "for", that means what we are building here is a set using something called a set comprehension and each item in the set is going to be a random number between zero and the length of that list which is half a million, so quite a large range there.

And we are just going to range across zero to 100.

The other thing to look at is we don't actually care about the index coming out of the range, we just want to run this a 100 times.

In Python, when you are looping across something like this range set here or you are possibly unpacking a tuple and there is only a few of the values, not all the values you care about, it's Pythonic to use the underscore for the variable name to say "I must put something here but I actually have no concern what it is." So, our interesting ids are interesting from a Pythonic perspective, but now we have the set of approximately 100 ids, assuming that there is no conflicts or duplication there, and next thing we are going to do is we are going to come along here, we are going to start a little timer, figure at the end what the total seconds pass were, and during that time, we want to go and actually pull out the interesting points that correspond to the interesting ids.

So we are going to go for each interesting id, remember, it's about a 100, we are going to say "find the point in the list like so, and then add it", and if we look quickly at this, you can see we just go through each item on the list and if the item matches the id we are looking for, we are done, otherwise, we didn't find it.

So, just to get a base line, I am going to assume that this is slower, let's go and run it and see what happens.

Remember, it's only the locating data in the list part that is actually timed.

All right, so this took 7.9 seconds and here you can see there is a whole bunch of data points it found, if we run it again, we get 8.4 seconds.

So it's somewhere around 7 to 8 seconds.

All right, so let's take this algorithm here and adapt it for our dictionary.

So I've got a little place holder to sort of drop in the timing and so on, you don't have to watch me type that, so the first thing we want to do is create a dictionary, before we had data_list, now we are going to have data_dict, we can create this using a dictionary comprehension, so that would be a very Pythonic thing to do and we want to map for each item in the dictionary the id to the actual object.

So, we create set and dictionary comprehensions like so but the difference is we have a "key:value" for the dictionaries where we just have the value for sets.

You kind of have to write this in reverse, I am going to name the elements we are going to look at "d", so I am going to say "d.id", maps the "d for d in data_list", right, so this is going to create a dictionary of all half a million items and mapping the id to the actual value.

So now, let's start a little timer, and next we want to locate the items in the dictionary, so again, we'll say interesting_points, let's clear that; "for id", we call it "d.id" so it doesn't conflict with the id built in, so "for d.id in interesting ids" we want to do a lookup, we'll say "the data element is", now we have a dictionary and we can look up things by ids, so that is super easy, we just say it like so, assuming that there is none of the id that is missing, something like that and then we'll just say "interesting_points.append(d)" Oops, almost made a mistake there, let's say "d.id" not the built in, that of course won't work.

All right, so let's run it again and see how it works, so we are going to run, it's still going to run the other slow version, I'll skip that in the video, wow, look at that, 8 seconds, and this is 0.000069 seconds.

So that's less than 1 millisecond, by a wide margin.

That is a non-trivial speed up, let's see how much of a speed up that is, then the other thing to consider as well, maybe the speed up was huge but the cost of computing the dictionary was more than offsetting the gains we had, let's try.

Wow, the speedup that we received was not one time faster, two times faster, or ten times faster, if this is data that we are going to go back into and back into, we would create this dictionary and sort of reuse it, where we get a speed up of a 128 000 times faster and an algorithm that is actually easier to use than writing our silly list lookup and it took literally one line of a dictionary comprehension, that's a beautiful combination of how dictionaries work for performance, bringing together these Pythonic ideas like dictionary comprehensions and so on, it made our algorithm both easier and dramatically faster.

What if we had to create this dictionary just one time to do this work?

Maybe we should move this down and actually count the creation of the dictionary as part of the computational time, so let's see what we get if we run it that way.

Look at that, 8 seconds versus 0.2 seconds, so even though it took a while to create that dictionary it still took almost no time relative to our way more inefficient algorithm using lists, we've got a 37 times speedup if every single time we call this function or we do this operation we would have to recreate the dictionary, it's still dramatically better and of course simpler as well.

Let's review that in a graphic.

So here we have two basically equivalent algorithms, we have a bunch of data we are storing in a list, half a million items, and then we are going to loop over them and we are going to try to pull some items out, by some particular property of the things contained in the list, well if you are in that situation, dictionaries are amazing for it and as you saw they are stunningly fast.

If we don't count the creation of the dictionary, we had a 130 000 times faster the bottom algorithm to the top algorithm.

So I am sure you all thought well dictionary is probably faster, but did you think it would be a 130 000 times faster, that's really cool, right?

It basically means that becomes free to do that lookup, and even if we had to recreate the dictionary every time, it's still 37 times faster, which is an amazing speedup.

|

|

|

show

|

6:15 |

Next, let's talk about taking multiple dictionaries and combining them into one that we can use to look up items that came from various places.

Here in PyCharm, I have a sort of web example for you, imagine that you are writing a web app, there is data coming from different locations into your action method, so on one hand we have routing setup that is passing data through the URL, we might be in that location, passing the id and the current value might be this, the title might be that, we also might have the query string and the query string might have some other value for id, like 1, it might have a separate value it's adding to the mix here called render_fast is True, then maybe we are also, as part of posting long URL which matches the route with the query string we are posting back a form and that form has email, a name and it is well.

So here I am just going to print out these three dictionaries just so you can see what they look like, and no surprise, here they are, they just look like basically they are written here, so what if I want one dictionary that I can just ask - "what is the id, what is the user name or what is the email address?" - and not have to worry about which one is located and we have our super non-Pythonic way here and I am going to use this dictionary called "m1", we'll go like this, and I'll say "for k in" and what we are going to do is we are just going to loop over each dictionary and stick the value in there.

The order in which we do this matters.

So let's suppose we want the query string that has the least value, so we are going to put those values into this combined dictionary first, so we'll say like so, and then we could just go to "m1" and we can just set the value for whatever the key is, to whatever the value and the query dictionary, here's like that.

And, we'll do the same thing for let's say the next thing we are going to do is the post, like that, and finally, we'll do it for the route.

OK, so if we run it, we should get a dictionary because the route has higher priority, with id 27, title Fast apps, render_fast is True and then that data in it.

Let's run it, all right, id 271, like we expected, Jef, Fast apps, perfect, so it looks like you combined it well, but this is a very procedural way, and there is better ways, so in Python down here we can actually sort of improve upon this by leveraging a couple of methods on the dictionary, so we can go like this, and remember we wanted the query first so we can say "query.copy" and actually create a copy of the dictionary and then we can go here and we can say update, I would like you to update your values possibly overwriting them with "post", and then I would also like to overwrite this with the route.

So now if I run it, we should have the exactly the same output but a little safer, less fiddly.

OK, you can see these are the same dictionary, now notice there is nothing about dictionaries that are ordered, so they are going to be out of order but they are the same value, down here this "no", I wrote some code that checks whether these four dictionaries "m1", "m2", "m3" and "m4" are the same.

We are not finished yet, so they are not going to be the same.

But in the end, we write these better versions here, we should be good.

We can actually do this in one line with the dictionary comprehension, it's not pretty but it does work.