|

|

|

15:26 |

|

|

show

|

3:23 |

Hello and welcome to Python for decision makers and business leaders.

I'm really glad that you're checking out my course.

Do you have to decide whether your organization or your team, needs to adopt Python or maybe even switch from one programming technology over to Python?

That's a huge decision and obviously it has large implications for your company but you've probably heard that Python is awesome and it's growing in popularity and it can do all these amazing things.

That's true.

In this course, what we're going to do is we're going to go through all the things that are good about Python what it can do for you, how it works and so on.

We're also going to look at maybe when Python is not the right choice and there are a couple of times unfortunately where Python is not the best answer and we'll be sure to call that out.

The idea is to make this course very fact-based.

You're going to see graphs, you're going to see numbers you're going to see trends and we're going to compare Python against the broader programming ecosystem and we're even going to write a little bit of code.

Now I realize you may not be a Python developer You're probably not.

You might not even be a developer at all and that's totally fine.

We're just going to see a couple of minutes here and there of Python in action because it's great to look at numbers and graphs and say Whoa, Python is great!

and see these people are adopting it and they have these wins or these successes but to really appreciate this and to make it concrete it's important to see Python in action.

Here's a really interesting problem that we solved in ten lines of code that maybe if you're not a programmer you couldn't have written but you're like Actually I could read this, this makes a lot of sense.

That's the idea we're going to go through, compare Python to the broader programming ecosystem talk about when it does make sense and when it doesn't, and we're going to back that up with examples, with code and with facts.

You might be thinking Well, this is interesting, but maybe my company is not really a software company we make tractors, or whatever it is you all make.

If this was 1980 that may well have been a true statement.

It could've been, We make tractors we're not a software company.

But as Satya Nadella said at the Mobile World Conference in 2019 Every company today is a software company.

If you want to compete, if you want a special edge over your competition, you really need to be a software company.

Not just any software company, you need to be a good one and one element of being a good software company is choosing the right technology to build upon.

You have a great foundation, with really poor cement.

You need something good.

It turns out Python is really good for many foundations but not all, as I said before.

I'm sure whatever your company does and I don't really know what that is it's still a software company.

Software and programming is something that will definitely give you an edge on the competition and this course will help you decide whether Python is the right thing to build that edge.

This choice is not simple.

Depending on what you need to do it might require one programming technology, or another or a mix of a few.

Choosing the right technology for your company or your organization, or even just for your team is tricky, and that's why we're going to compare Python with many different technologies, talk about its strengths and its weaknesses, so that you can make the right choice.

If you're a global insurance company, you probably have a different set of constraints than a film and animation studio.

Is Python right for you?

Well, we'll talk about its benefits and its drawbacks and then you can decide if those line up with exactly what you need.

|

|

|

show

|

0:44 |

Let's take just a moment and talk about the prerequisite knowledge I expect that you have as a student in this course.

Now, don't worry.

I don't expect you to be a programmer I already said that at the outset but I do expect that you probably have some experience working with technical teams.

You could be a programmer and that's great.

Maybe you're a project manager, or even you're a CEO but you work with, say, tech team leads.

If you have experience in giving some input into the software creation experience or the technical direction of your company that's really all I'm expecting.

So, no programming experience is required but I will show you a tiny bit of code so that you can see Python in action and make actual concrete choices about it.

|

|

|

show

|

3:25 |

Let's spend a minute and talk about the details of what we're going to cover in this course.

We're going to start out by talking about what you can build with Python and we're going to look at it from two perspectives.

What are the general types of applications that you could build with Python say, machine learning and artificial intelligence and what are some concrete things people have built with Python companies that you probably know.

I think it's very compelling to see a company in your industry has been successful with Python.

That could help make the decision that yeah maybe that's working out.

We're going to see that Python means three things not just one.

It's a language, it's a set of libraries and it's an entire ecosystem and it's important to identify which you're talking about when you're speaking with somebody comparing another technology.

If you say I think the Javascript language does this and the Python language does that, you need to know what they're talking about.

If they say, Should we adopt Python?

I heard it's really fast.

Well, what does that mean?

Does that mean it's computational fast?

It's fast to market?

It's got a lot of libraries so we can build things quickly?

What does it mean, right?

So knowing the three things that people talk about when they say Python and being able to identify which those are really helps with the conversation.

Then we're going to dig into a couple specific technologies.

We'll start with web development.

Python is great for web development.

There's a bunch of options there.

So we're going to go and check out some Python web apps and frameworks help you decide is that the right one for you is that the right space at all for you and if it is which of the Python frameworks are good choices.

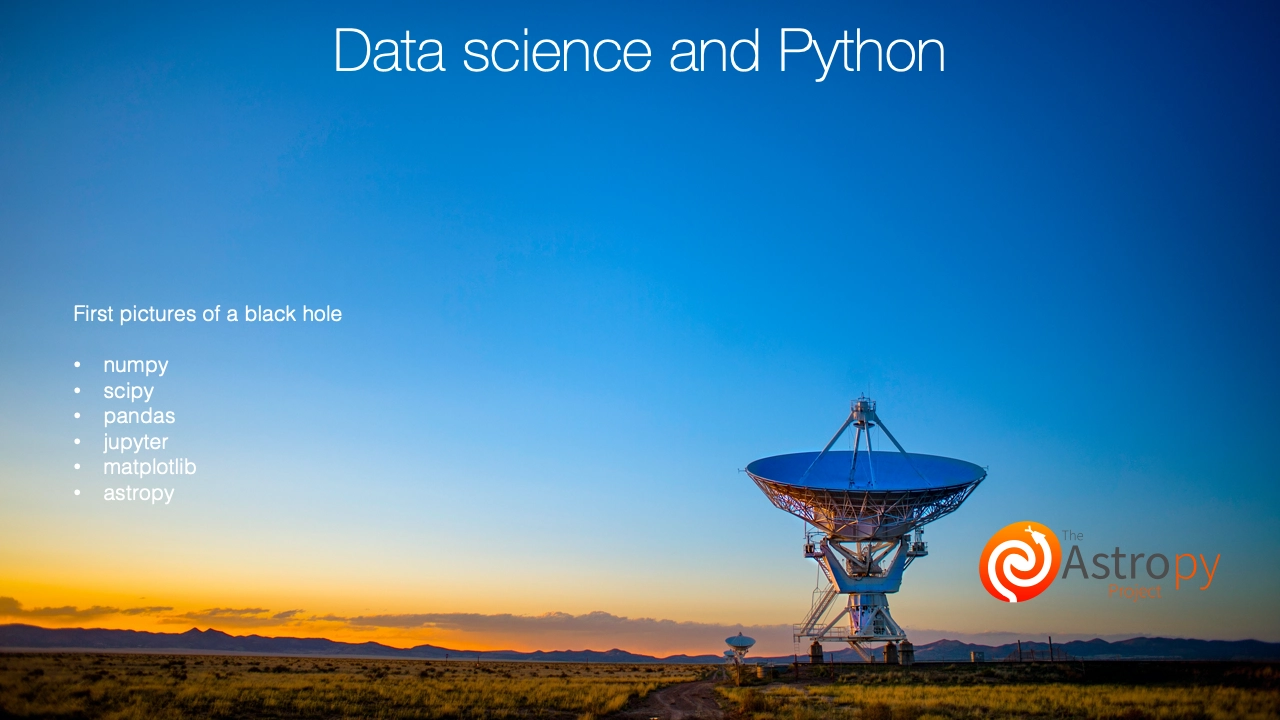

Data science is probably the place where Python has the biggest lead over other technologies so we're of course going to spend a little time talking about data science and Python.

Also see that Python is really good at testing not just testing Python code but testing hardware testing other languages so we're going to talk a little bit about testing with Python.

And then we're going to compare it against other similar ecosystems Python versus, say, .NET.

How do these compare?

What are the advantages of .NET over Python and what are the advantages of Python over .NET?

And .NET, by the way is probably a fairly decent stand-in for Java.

They kind of play in the same space.

I know Java developers are not necessarily .NET developers but the capabilities of those two are pretty similar these days.

In the scientific computing world MATLAB is really important.

Python has been making big inroads into that community.

Should you use some proprietary thing like MATLAB or some open source thing like Python?

We'll talk about that.

And finally, Python with C++ not necessarily versus.

So you might decide should I use Python or should I use C++ or should I use them together?

They actually go together pretty well.

If you're going to switch to Python you need to know that you can hire developers.

It's one thing to have a really cool language.

It's another to be able to say and there's a bunch of people I can just reach out to work with on this.

Can I find consultants?

Can I find capable full-time employees, maybe co-founders?

Whatever it is that you need knowing whether or not the job market and the number of developers out there is a good fit for what you're going to need well, we're going to talk about that.

And finally, we're going to finish talking about you've seen all this amazing stuff in Python but when not to Python.

Yeah, I'm making it a verb.

There are some areas and some situations where it doesn't make much sense to use Python.

We'll talk about that at the end of the course.

And that's it.

This is what we're going to cover.

It's going to give you a really great broad view of the Python ecosystem.

|

|

|

show

|

2:42 |

Let's take just a quick moment and have a very, very brief history lesson.

We'll talk about a brief history of Python.

Python was created back in 1990 by this guy named Guido van Rossum.

He's been involved in the Python space ever since and he's still involved today.

Let's look at a timeline.

So, 1990, Python was released.

Guido actually started on it maybe 2 years before that.

In 2000, we have Python 2.

This was a big improvement to Python coming out.

In 2005, one of the most popular web frame works, Django appeared, and really put Python on solid ground in the web development space.

Since then there have been many, many others such as: Flask, Starlette, Pyramid, and so on and we'll talk about those, but this was one of the first major, major web frame works.

In 2008, Python 3 came out, and this turns out to be more controversial than you might think and this is the reason I put those two timelines on there.

Many of the folks had built their code and their libraries, and projects on Python 2 and were reluctant to change.

The actual change was not that big but the consequence of those things that needed changed well, that turned out to be pretty huge.

For a long time in the Python community there was this divide of people sticking to the old stuff and the progress being done on the newer, modern Python Python 3, luckily that's solved.

That is basically a done issue, except for legacy code these days.

In 2012, as we'll see later, the data scientists moved to Python en masse.

For whatever reason, they just hit critical mass there and tons of data scientists already coming over and it became an incredible area of growth for Python.

There's many, many, many libraries and tools for data scientists, and it's one of the more popular areas where Python is used these days.

But there's a clear inflection point around 2012 when this happened.

I'm not actually sure what it was that triggered it or precipitated it, but, there it is on the timeline.

Finally, why is this Python 2/3 being not a debate anymore?

Because, in 2020, Python 2 will be end of life.

No security fixes, no updates, no more support, it's done.

There'll probably be places like Red Hat, potentially where you can go and pay for commercial extended support but it's kind of like saying, Hey, should we stick or maybe move to Windows XP or Windows 95?

No, and people are also not moving to Python 2.

It's pretty much settled.

Like I said, the reason I bring this up is if this is a hesitation for you, this 2 versus 3 thing it's gone.

Really, all the modern libraries are on Python 3.

It's Python 2 that's being left behind.

So, here's a quick history of Python.

|

|

|

show

|

3:01 |

Does it seem like Python is booming Python is everywhere?

Well that's probably because it mostly is.

Here's a cover story at the Tech Republic.

Python is eating the world.

How one developer's side project became the hottest programming language on the planet.

And I bet you can identify that guy in the background who created it after that previous video, huh?

Clearly here's an article but let's look at some graphs and some real data.

Maybe you've heard of Stack Overflow.

If you're not a developer, maybe not but like I said, if you work with technical teams you've probably heard the term Stack Overflow.

And this is a question and answer site that has somehow managed to become the definitive place that software developers go to ask questions and get answers and solutions to very specific technical problems.

Millions and millions of questions, and page reviews and all sorts of stuff.

So, very, very popular site.

Certainly comes right to the top of Google if you search for a specific programming problem.

So let's see what the data that they're making public says about Python compared to the other languages and ecosystems.

They have this cool thing called Stack Overflow trends and you can go there and put in different technologies and get graphs that look just like this.

And I put it in for the major programming languages C#, Python, Javascript, Java, PHP, C++, R Relevant in data science, Swift, or IOS and macOS C, and VisualBasic.NET.

And here's a bunch of graphs.

I think already you can tell one of these is unlike the others.

Like, really, really unlike it.

But let's make it more obvious.

Yes, Python is absolutely growing.

Where many of these languages are either flat or honestly, most of them are trending downwards giving up a lot of space to Python.

There's a lot of reasons why this is happening.

We're going to explore some of those but here's the numbers from Stack Overflow not just for some short little trend but this is over 10 years worth of data right here.

This is amazing.

They actually highlighted this is 2017 in the fall of 2017 article called The Incredible Growth of Python.

They cover a bunch of really aggressing things and here this data is in fact much more modern.

This is just almost 2020.

They have make a bunch of predictions and if you look at those predictions, it's surprising.

They actually about predicted this growth with Python projected out a few years but they underestimated the decline of the other languages.

It's amazing.

So if it feels like you're seeing Python everywhere or you're hearing from many people that Python is amazing when maybe a couple years ago you hadn't heard about it well that's because the momentum behind Python is growing so fast, the number of libraries that people can use is growing, and growing, and growing and making it even a more compelling place to be than it was not long ago.

Oh, remember that shift I talked about in 2012 about data scientists?

Yeah, also it's pretty obvious in the graph here that's not the only thing making this graph grow like this.

There's a bunch of factors, but certainly it helped kick that curve up there in 2012.

|

|

|

show

|

0:47 |

Before we get too far into this course I want to take a moment and just let you know all the slides all the presentation material you see as well as all the code that we're going to write or explore during this course, is freely available to you.

Even if you're not in this course, just visit github.com/talkPython/Python-for-decision-makers-course.

If you're familiar with GitHub you know how to get this code there's a couple of options.

If you're not, the simplest thing to do is just see that green button where it says clone or download if you click that, it'll expand.

There's a button that says download this as a zip file uncompress it and you'll have the slides and you'll have the code right there.

To remember anything you see on the screen you'll have it here as a record to take with you, to share with your co workers or to play around with the code.

|

|

|

show

|

1:24 |

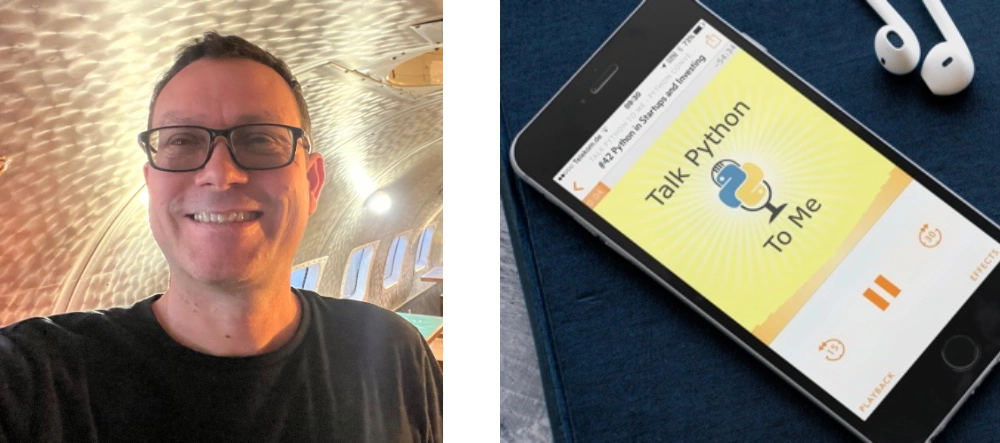

Last but definitely not least, let me introduce myself.

Here I am, my name is Michael, Michael Kennedy nice to meet you chat with me over on twitter where I am @mkennedy, why am I putting this course together?

Why am I passionate about Python and honestly why am I qualified to tell you about it?

Well, there's a bunch of things I've been doing I've been a software developer for like 20 years worked in many different technologies, but for the last four or five, I've been hosting the most popular Python podcast, Talk Python To Me.

Here I've interviewed an incredible array of people I've interviewed data scientists, astro physicists particle physicists, biologists, but of course people that work at Instagram running one of the largest Django websites in the world.

Through this experience, I've got a unique view at the whole spectrum of what Python is how people are using it and how they can be successful with it, also happened at co-host the second most popular Python podcast called Python Bytes, where we cover the news of the week in the Python space, so these are two complimentary different shows, and I'm also the founder and one of the primary authors at, Talk Python Training where your taking this course.

I've done a lot of work, 18 software developers and other folks about software development and of course I've put in a ton of energy into doing that for Python.

I'm so glad to meet you, I'm happy you're in my course and we're going to have a great time exploring Python together.

|

|

|

|

20:56 |

|

|

show

|

5:07 |

In this chapter, we're going to talk about what Python is, and what it is not.

Understanding what Python is, what constituent parts make it up, helps you consider whether those parts are valuable to you, and talk about the right level.

Are we comparing programming languages?

Are we comparing ecosystems?

Are we comparing libraries?

Is it matter that it's open source or not?

What is open source?

All these things we're going to talk about in this chapter.

Well, let's start off with a really quick and easy one.

Python, despite what you may have seen despite the logos, is not about snakes.

Yes, this right here is the official trademarked Python logo.

It is caricature of two snakes.

You can see little snake eyes a blue snake on the top, and a yellow snake on the bottom.

Despite that being the official logo Python was actually named after Monty Python the British comedy skit group, not Python the snake.

It doesn't really matter, but just so you know Python is really about Monty Python, and having fun.

Not so much about the snake.

More seriously though, Python is not 3 but in fact 4 things at once.

Here, this is Python, and this is our first bit of code.

We haven't even seen any programming yet and this one is really simple.

Like I said, I know you may not be a programmer but you can probably look at that for a minute and determine what would happen here.

So this is what we call a function.

If we call the function, we can pass it data.

Name, that's the purple thing there.

So I could say something like, Hello World, Michael.

And then what would happen, on the screen it would print out two different lines this is Python code, nice to meet you, Michael.

That's great, right?

So we would see that come out.

And this actually is the Python programming language.

So when someone says, I want to compare C# to Python they may be talking about the way you structure code and what you have to write down here.

But that's a very limited view of a programming language.

Some programming languages, that's mostly what they got but many of the popular ones, Python, Javascript .NET, and so on, they have much more.

So when you talk about them there's actually these layers that build.

The next layer up is a rich standard library.

You often hear in Python the phrase that it comes with batteries included, and when people say that they're talking about this standard library here.

And the idea is, there are many little libraries are utilities that you can use to greatly simplify the code that you have to write in the programming language here on the left.

So, in a language like C++, if I wanted to take a whole bunch of repeated data count how many times each one of them repeats and then sort them by how many times does it appear what one is the second most common how many times does it appear that might be like, 10 lines of code and you've got to think about the algorithm.

You could make mistakes, but you got to think about it.

In Python, there's built in libraries.

I could just go, create a Counter and say Counter, give me the most common grouping of this.

It will do that grouping, it'll do that sorting and boom, two lines of code, you have the answer you don't have to think about it.

That's what we mean when we talk about a standard library, or a base class library.

These rich little libraries that I can use to not do absolutely atomic building blocks and do everything from scratch, but in fact to build with much larger, more functional pieces.

Taking that idea further outside of what Python is delivered as, if I go to Python.org and download it, I get the first two things on the left.

But really when people talk about the power of Python and you can do so much with it they're thinking of third party libraries as well.

And Python has this thing called a package manager and the Python Package Index, which gives us access to literally hundreds of thousands of libraries some of which, you know, maybe just one of those is the Django web framework, or one of those is the Jupyter Notebook system.

Obviously those are actually built up of a couple libraries each, but you get the idea.

There's hundreds of thousands of extremely useful libraries.

We're going to explore those a little bit.

When they talk about Python, sometimes they're talking about this ecosystem, like of language plus the library but really all the libraries that are out there, open source or not.

And the fourth one, it's hard to overlook, the people.

Brett Cannon, one of the core developers of Python one of the guys who builds Python, creates Python and pushes it forward, has a great saying.

He says, I came for the language I stayed for the community.

And Python's community is fairly unique.

It's very welcoming, it's more diverse it's people who really, really want to be there.

Python often is a language of developer choice not something they're told they have to use but something that they rearrange their careers so they get to use.

So the community is very passionate it's built up of millions, literally of millions of programmers and other folks who program with Python.

So Python is these four things here the language, libraries, ecosystem, and the people.

When people say Python is valuable or I'd like to compare this against this other thing figure out what level they're talking about and make sure you're comparing apples to apples.

|

|

|

show

|

4:10 |

It's worth taking just a moment to look a little bit farther at this concept of the larger Python ecosystem the Python packages and so on.

Here we are at PyPI the Python Package Index and notice there are 206,248 different projects.

These are web frameworks.

These are data science tools.

All kinds of things.

It's really hard to appreciate the power of Python until you spend a little time seeing what is available in somewhere like this.

Let's suppose that you are a biologist.

Well, you type in biology and see oh, look, there are 800 different libraries for working with biology.

Maybe I want to be focused on genetics.

500 different projects on genetics.

What if you're into quantitative analysis as an economist or a trader?

Extra quant, 313.

The top one, enterprise architecture for quantitative analysis and finance.

If you need to build something around quantitative analysis you could start from absolutely scratch or you could come over here even dig in to this I'll show us what to do and come down here and it talks about what it is.

And it says how do you get started.

pip install quant and then off you go.

You start working with it and so on.

You got to read the documentation to figure out how that works.

What about web?

What can do with the web?

Oh, there's over 10,000, probably just, we're not counting anymore.

Though there's an incredible amount of stuff so Flask is one of the frameworks we'll work with.

We can come down here and it tells you a little bit about it.

It says the way you install it is you pip install flask.

This is something you type at the Terminal or Command line to install and manage these libraries.

There's other ways to do it but this is the most foundational one, I guess.

It says, Here's a simple example.

With those five lines of code literally just those five lines you can create a web server doesn't do much, you go to it, it says, Hello world!

But literally, that's a functional web application in Python.

You can even see them running, running down here you could go get it.

You also can get a sense of how popular these are, so number of stars.

This is really nice to know.

Is this highly used and highly maintained or is it kind of in the back waters of this area?

And maybe if you start using it you kind of have to, you know take over some of the development or at least there's not going to be that much out there to help, right?

So the more popular, generally the better but when you are over here if you have 800 choices for biology or you have literally over 200,000 projects it's hard to know if there are 10,000 of these things where should I start?

Which one's good?

You can't just spend your time working on that.

So I'll tell you about one more place, while we're here.

There's a place called Awesome Python and there's many awesome lists.

This is one of those types of things.

There's Awesome Python web frameworks and asynchronous frameworks but here's the most general.

So let's just look at this really, really quick.

So you notice over here on the right we have a bunch of different categories.

Maybe I'm interested in cryptography.

If I click on this it'll say Here are four highly popular cryptography libraries that you might use.

For example, Passlib is an awesome library for securely storing passwords.

If you create an account at training.talkpython.fm I super-duper encrypted hashed your password to store it in the database using Passlib.

Maybe I have to send some email.

Let's go over here and see what we can do.

So here's a bunch of different libraries you can use to send email.

And you click on it, you get some details, github repo and all of those sorts of things.

Places like awesomePython.com are really really helpful in determining what are the few stand-out packages or libraries for a given area.

Now that doesn't work perfectly because for example, I don't think genetics shows up here.

It does not!

It depends.

It works well if the category you are looking for appears on the right.

Otherwise, you're kind of out searching but still the idea is there are so many of these libraries.

And when people talk about Python they absolutely think about all of these hundreds of thousands of libraries available not just the foundational programming language that they type in.

|

|

|

show

|

5:00 |

We've already seen the incredible growth of Python.

We've seen how popular it is.

But you have to ask yourself, why?

Yeah, I talked about data scientists moving into Python and then actually bringing a whole nother group of not just data scientists but all the computational folks in all sorts of sciences and other disciplines such as economics.

But that's a result.

That's not why it's so popular.

That's other people coming along and finding it valuable.

I've been thinking a lot about this.

And the reason I believe Python is so popular is it fills a pretty unique space in the realm of software development in programming languages.

I've been a professional C++ developer Javascript developer, .NET developer, in Python developer and also worked with other languages more on the side.

And as I go through these different languages I found a special and that it's what I'm calling a full spectrum language.

Let me define that for you.

There are some programming languages that are really easy to get started with they're extremely productive for doing simple things.

At the very, very low end, we have things like the blocky Sketch programming languages they use to teach kids how to programme some of these visual programming languages.

But we also have things maybe like Visual Basic you know Visual Basic, it's kind of, can go pretty far but it's really easy to get started with.

But nobody would ever want to build an operating system with the Visual Basic.

So there are upper bounds what you might build with it they would never build YouTube or Instagram with Visual Basic.

There's these languages that are easy to get started with.

They're very appealing to people that are not really wanting to be full on programmers they just need to do a little bit of programming to get their job done.

You know, the computational side things like MATLAB definitely fill this role like I'm not a programmer but I need to make this stuff work so I need to visualize this graph or whatever so I'm going to do this little bit of programming in MATLAB and make that happen.

Again, MATLAB, you can't build YouTube you can't build desktop apps.

Right, you can just build simple visualization and analysis programs.

On the other end of the spectrum there are languages that are hard right from the beginning.

They're meant to build huge professional applications like C++ and C, or say, building Windows or Linux or Java or .NET, which are meant for building enterprise scale applications.

But how do you get started?

You get started by addressing all of the complexity and all the structures put into those languages from the beginning, just to do the simple things as well.

How do you get started in C#?

Well, you have a namespace, you have a class and you have all these qualifiers and all kinds of stuff just to get the thing off the ground and going and it's built that way 'cause it's meant for high end large scale software development but there's not like a simple cut down version of it.

Python on the other hand, is all about starting simple but for whatever reason, it's really well designed or its evolved this way.

There's not really a strong upper bound or a strong lower bound.

You've already seen two full Python programs.

The first one was at Hello, World thing where we passed the name that was three lines.

And even if you're not a programmer I suspect those probably made a lot of sense to you.

There's not a bunch of craziness and we haven't even talked about compiling and linking and all that weirdness.

The other one was the Flask web app.

And that was five lines.

There's probably one or two lines it was a little funky that you have to do in there but it was pretty simple.

Right, so you can get started incredibly easy as a scientist, as an economist I can look at this language and say actually, those five lines right there that's understandable, and that solves my problem incredibly, that, that is what I need.

And you don't have to learn all this computer science stuff to get started.

And yet, you don't grow out of Python, not usually anyway there's a few cases where maybe but just generally, you don't grow out of Python.

Python can scale up to build incredibly huge applications and infrastructures.

Much of the machine learning is done in Python.

YouTube is written in Python and it gets millions of requests per second millions of requests per second and it's implemented in Python.

Instagram, there's all these different things that are built in Python and these are large scale, serious applications maybe outside of the web space.

JPMorgan Chase has 35 million lines of Python running a lot of their internal important banking systems and software.

So you don't grow out of Python the same way you do some of these simple languages.

And yet, it's easy to get started.

And that's very appealing for a huge range of folks.

You have all the cool computer science techniques and infrastructure that you need to write real programs but you get to opt into it as you need.

That's what a full spectrum languages in my definition and Python is a standout among all of them for it.

|

|

|

show

|

1:07 |

No conversation about what is Python would be complete without mentioning that it is open source.

I'm sure you've heard of open source.

It's a trend in the software development space where the code is available and, typically it can be taken and adapted without permission without asking somebody, Hey can I make this change?

or, I saw there's this problem.

Could you please change your code because we wanted to do something else?

No, you could just go over here press this fork button and you are now the proud owner of a fully-functional version of Python and you could go do whatever you want rename it, make changes to it, and so on.

Typically, we don't want to do that because we want to stay part of the community.

We want all the changes everyone else is making to just come back into the thing that we're working on and make it so much better.

open source is really important these days and Python has been open source from the beginning.

You can get it right here on github.com/Python.

That's the organization.

There's a handful of things there and then /Python is actually the main Python runtime the library and the language that we talked about earlier.

So Python, open source, really amazing.

|

|

|

show

|

2:26 |

Let's just talk a little quickly also about the broader open source world.

Here's a report done by Red Hat on the state of enterprise open source.

You see the link here at the bottom Enter your information and download the whole report.

This is just what's off the public website there.

So I want to show you just a few pictures about it.

Now, you may say this is enterprise open source how is that different than open source?

Their definition, you can find it, on the Red Hat page is something like enterprise hardened open source or enterprise supported open source.

So this is really interesting.

It doesn't apply to all of it.

But I'ma show you one more thing to kind of of a retros-bit this enterprise term onto your other open source projects if you want.

But I think this is certainly totally relevant in terms of the trends we'll see.

They asked about 1000 IT folks who are in charge of things like this in their enterprise.

Does enterprise open source play a strategic role in your organization?

30% said it's extremely important, 40% said it's very important.

So we already have basically 70% of enterprises saying yes, it's very important or more than that.

And that's awesome.

And then you know, they're still important to the another 30% there.

Only 1% said, No, not for us, no, thank you.

There's a cool quote here as well says open source is increasingly used not because it's cheaper, though it often is.

But because it's genuinely better software.

That's for sure.

So many people with different ideas contributing to open source, at least the popular projects are really, really polished.

A lot of folks coming from other areas like say from Microsoft and .NET where Microsoft controls a language they control the libraries and a lot of the important frameworks like ASP.NET framework and so on.

It's a little uncomfortable to say we're just going to go and see what the community is done and like no one controls this and there's no SLA to go complain if something's wrong.

Who do I talk to?

How do I get them to fix it?

And yet, company after company are moving to open source because it turns out that it often is genuinely better software with way fewer restrictions.

So here's some other benefits as well lower total cost of ownership, higher quality access to Enterprise Level Support.

That's the enterprise side.

We'll talk more about that in just a moment.

But also access to the latest innovations.

You want to dig into more of this.

Just go grab that report from Red Hat and you can check it out.

It's pretty interesting.

|

|

|

show

|

3:06 |

Okay, so you've adopted one of these open source projects, and it turns out you need some help.

What are your options?

I guess it depends on what's going on.

Did you find a bug?

Are you trying to get a new feature or do you just need somebody to work on the project?

Let's just grab Flask as an example.

We're going to talk about this web framework later.

Let's imagine you're building a web application with Flask and you need to get some support in this broad sense.

I need a new feature, a bug fix or I need somebody to work with me.

There's a couple of things that you can do directly working with the project maintainers.

So David Lord, you can see David is the guy here who did the last commit.

So we could go over to the issues tab you can see there are 20 open issues right now and I could enter a message and say hey it looks like there's a problem with this code.

If there's legitimately a bug usually, you can come here, the project maintainer will address that and fix it.

If you have a new feature, you could file a bug and ask for the feature but remember you're asking for a favor, there's no reason that they need to take this and do this for you right?

So they may, if it's a good idea but they may not, if it's not in line with the project.

Another thing your can do is one of your developers or you yourself could fork this repo make a change and create what's called a pull request, which is, here's a change I've made to this software.

Here, I'm trying to give it back to you, let's have a discussion.

If you accept it, good.

I will automatically bring it back.

So that is also an option.

You could reach out to David Lord and offer to hire him as a consultant.

I have no idea if he does that type of thing.

You'll see down here, you can merger this pull request from gray li.

That is a fix that somebody else contributed back and David accepted to this project, but for popular, open source projects like Flask, you don't have to hire the maintainer.

There are literally thousands of people out there you can find at places like Toptal, Triplebyte, Hired, what not.

These various consultant market places and they can pair you up with Flask developers really easily.

So, because this project has 47,000 stars, that means it's very popular.

You can also see that it's used by 350,000 other projects.

You can bet there a bunch of people with Flask skills, if it's just a I need this thing built.

I need a consultant.

This works great for certain popular projects some of the time, but what if doesn't?

What if you need a broader solution?

You can check out Tidelift.

Now, Tidelift sponsors my podcast, but this is not a sponsored placement.

This is just me really liking what they're doing for open source And just want to give a quick shout out as an option.

So, Tidelift has this enterprise subscription.

So, it's managed, open source for your app developments teams.

You get things like security updates indemnification, maintenance.

So, what they do is they actually work with people like David Lord from Flask.

They work with the maintainers of those open source projects, and they group up all the enterprise users of those projects, and they all at once, one unified front, work with those projects to help get them maintained, security fixes done and so on.

So, definitely consider this.

It helps support open source, and Tidelift is doing a lot of good stuff for open source, so working through them is not a bad idea.

|

|

|

|

10:15 |

|

|

show

|

4:24 |

In this chapter we're going to focus on two important things which I think are very important to deciding is Python the right thing for you and does it fit for your use case.

The first one is what type of applications is Python really good at building?

Websites, API's, things like that.

Is it good for it?

We're going to find out.

The other one is who uses Python and how do they use it?

I think a really important case when you're thinking about is this technology for me?

If someone in your industry is being very successful with a technology, well, that probably should tell you.

Maybe we can make that work for you as well.

So, I'm going to show you a bunch of use cases of really interesting and well known companies doing cool stuff with Python.

Let's start with what you can build.

I mentioned websites, I said that YouTube was written in Python.

Also, Instagram and a bunch of other things.

So, building websites in Python, there are many many different web frameworks.

We're actually going to focus a little bit on that in this course.

But yes, you can definitely, definitely build websites with Python.

In fact, the Talk Python Training website would it surprise you to hear that it's built in Python?

And it's fast, isn't it?

It's faster than most of the sites out there.

So, yeah.

Python's pretty awesome for that.

Related to that are web services, HTTP API's, JSON.

So, if you need to build some way for applications to interact with your code rather than humans through a browser, then API's are the way to go.

In fact, at Talk Python Training we have mobile apps for taking our courses both in IOS and Android and those applications do all sorts of communication back to the server to get the videos, to figure out what courses you have, to get the transcripts to show and things like that.

All of those are, of course, written in Python and they work really really well.

So, if you need to build API's, there are many many options, as we'll see.

Data science is a huge part of Python.

We've already talked about the incredible growth of Python and how the folks coming from data science are finding it very welcoming cause it's a full spectrum language.

So, many of the things in the data science world were Python first and they start making their ways to other areas.

Think of iPython notebooks, maybe you've heard of Jupyter and Jupyter Lab.

This is a really cool interactive way to explore data that works with many languages.

It started out as iPython notebooks in the Python space.

So, of course data science is really really rich and powerful and Python.

Related to that is machine learning and AI image recognition, deep learning, all those types of things.

Most of the libraries that do deep learning or machine learning are Python first.

Yeah, they might work with other languages.

But, usually when these are designed, they're designed first to be consumed from Python and then other ways of working with them.

Maybe a C++ API or a Java API is built in.

But if you're doing some sort of machine learning it's extremely unlikely that you can not do that well with Python.

Robotics.

Python is very powerful for building and controlling robots.

Python is used to automate factories and build all sorts of amazing things.

Also, control little, smaller robots.

So if you do anything with robotics, you can use things like the ROS Operating System and work with that in Python.

Dev Ops.

Do you have a bunch of servers or a cloud infrastructure you need to manage?

You can use awesome things like Ansible or even through libraries such as Paramiko.

You can issue arbitrary SSH commands to remote servers all over the place.

So, in the Dev Ops world and infrastructure as code Hardware as code, Python is a really important player there.

So, if you're doing something Excel-like.

Putting a bunch of data into Excel and I'm doing a few calculations and I'm making a graph and so on there's actually a ton of functionality in Python in associated libraries either for directly automating Excel or working in similar ways, but better than Excel does.

There's actually an interesting Talk Python episode that I did called Escaping Excel Hell.

You can find it at talkpython.fm/200 and it talks about one, how to automate Excel.

And two, what are the libraries that are as programmatic equivalent of working with Excel.

And if you find you're doing a bunch of stuff with excel for your business, chances are you're kind of reaching the limits of it or it's getting challenging.

Python doesn't have those limits.

All right, so this is just a taste of what you can build with Python.

All the stuff on the screen here, it's very very good at these things.

So, if these are what you need to do, these types of apps or these types of capabilities.

Well, you're on the right track with Python.

|

|

|

show

|

5:51 |

As I mentioned at the outset, knowing who in your industry is using Python can be a huge help in deciding whether it's right for you or helping influence your team or your manager about whether or not Python is a good fit.

Let's talk about some really cool use cases.

SpaceX, one of my favorite companies I can't believe what Elon Musk and those guys are doing around finally starting to deliver on what technology can do for space.

Super, super cool.

I love to see those things land.

And you know what?

They have over 92 open positions for Python developers at SpaceX.

They're extremely secretive, so I haven't been able, yet to figure out what exactly they're doing with Python.

But if they have over 92 positions, not just jobs but maybe multiple openings in a given position for Python, you can bet that they're making serious use of it to get us into space.

Spotify, amazing radio music streaming service their website, built with Python.

Pretty awesome.

NASA, speaking of space, the other major space player.

NASA put together this cool documentary on all the steps that were taken to get back to the moon.

Man, I hope they really make that happen and it doesn't get canceled for whatever reason.

They showed engineers doing cool stuff, people doing tests and astronauts and whatnot.

In one of the sections they had in this promotional video was, and here are the software developers helping get us to space and writing the code to make this work.

You know what they had on the screen?

Of course it was Python.

Bitly, if you need your URL shortened and you want to share them and you wanted to have analytics real-time, quick analytics around who's clicking and sharing your data, Bitly written in Python.

Bitbucket, you've seen GitHub, Bitbucket is the other big competitor, maybe along with GitLab for that type of service.

And Bitbucket is written in Python.

JPMorgan Chase, maybe you're been thinking oh, all these other things are way out there.

We're like a way boring insurance company in Iowa.

Ain't nothing wrong with that but it's not SpaceX maybe so we don't get to use Python.

We use Java, or I don't know, whatever.

JPMorgan Chase has some of their most important systems written in Python.

They have 35 million lines of Python code and Python is typically short compared to other programming languages in terms of lines of code to get something done.

So that is a ton of Python they're using.

Not listed here, but also a massive, massive user of Python is Bank of America.

Quora, great Q&A site that you can go and ask deep questions.

Or ask questions and get fairly deep answers so Quora, does it surprise you to know that they're written in Python?

They're actually super passionate about Python.

They have an engineering blog where they talk about all the ways they're using Python and customizing it and trying to squeeze more out of it.

Super big users of Python.

Disqus, if you've ever gone to like a blog or certain sites that have a discussion section at the bottom Disqus may well be powering that.

There's all sorts of cool, real time web socket communication stuff going on there and much of what they're doing is Python.

They've written about it.

Instagram, Instagram may be the largest deployment of Django.

I can't remember exactly but they're doing incredible stuff.

They gave a keynote about how they're using Django at PyCon 2017, the biggest Python conference.

And that's on YouTube.

You can just search Instagram keynote PyCon and it'll come right up.

Yeah, huge, huge users and supporters of Python.

Reddit, the front page of the internet.

Reddit is interesting in a couple of ways.

They use Python, of course, to build their website and serve it up.

They also use a cool library called SQLAlchemy.

But what's even more interesting is you can actually find an open source version of the Reddit website out on the internet.

It's an archive version, it's not live like the Flask GitHub repo that I showed you.

But if you want to see what they did to build Reddit well, actually you can go get it.

YouTube, already mentioned YouTube was written and still runs in Python, handling millions of requests per second.

And that's a huge, huge scalability challenge that they're solving in Python and that's quite cool.

The Python Package Index, we saw all the cool libraries that you can get there.

Well, it's no surprise that would be written in Python is it?

Pinterest, if you've ever gone to the Pinterest website or used their APIs, written in Python.

PayPal, back to the financial space a little bit.

PayPal has a service internally whose job it is to say what the exchange rate is in money or how much should we charge as a percentage of this transaction and that gets called billions of times a day and needs near millisecond response time.

And they do that with Python.

Dropbox, Dropbox is a huge user of Python.

We talked about Guido van Rossum earlier.

He actually worked for many years at Dropbox and just recently retired.

It's time for him to do a little bit less corporate programming, but for a long time he was there at Dropbox doing cool stuff.

Even the client side Dropbox app, the little thing in your menu bar, in your task bar that looks like this icon, that is distributed as an application as Python.

And then of course all the back end stuff, Python.

Super cool.

Last but not least, Talk Python.

Of course our websites are built in Python our APIs are built in Python and it's been working incredibly well for us for many, many years.

And we have no intention to move away.

We have no complaints, millions of requests terabytes of traffic, beautiful.

Works like a charm.

If you want to read more about how some of these companies are using Python, like Quora, Pinterest, Spotify and so on, you can check out this link below.

There's actually an article that talks about a bunch of different sites, some of which I've highlighted here how they're actually using Python exactly.

And then others, like SpaceX or JPMorgan Chase have just pulled together from other places.

But here's a little bit of a reference for you.

These are just a few of the companies using Python.

It's a pretty diverse mix here and if it works for them, chances are it's probably going to work for you.

|

|

|

|

16:37 |

|

|

show

|

3:31 |

In this chapter, we're going to talk about web development with Python the whole spectrum of Python web frameworks how to pick one, and for the first time so far in this course, we're going to write a little bit of code.

That's right, we're going to create a web application a very simple one, and we're just going to hit the highlights of creating it.

But again, it's super important to see how effective Python can be by seeing just a little bit of it in action.

So, we're going to do that in this chapter.

Let's start by doing a quick purvey of the Python web frameworks.

In some technologies, let's say .NET there's almost no discussion of what web framework to use.

In .NET you use ASP.NET, that's the framework from Microsoft and so you use that one.

Yeah, there's some other ones you could pick but they're not really used.

ASP.NET, you don't think about it.

Maybe you think about variations but you don't actually think about the framework.

In Python, it's not like that at all.

There are many, many frameworks.

That's a great problem to have but it is actually a problem to decide where you should start.

Just by name recognition, probably Django is the most well-known framework.

Many of the examples I showed you before on who uses Python were actually also who uses Django.

Django is great and it's been around since 2005 very popular, gotten a lot going for it.

However, probably Flask is the one with the most momentum.

For example, last year, two years ago it was 15% behind in usage with Django.

It made up 15% in just one year and keep in mind, these both have been out for like 10 years so it's really starting to take off and you'll see its influence all over the place.

So these are the two big frameworks in the Python web development space.

There's also Pyramid.

Pyramid is a great option.

Actually our websites are built in Pyramid and it's really, really been great as well.

So these are all three really good options.

Masonite is a newer one that's got a lot of helpers to help you continue to add features to your site over time.

That's cool.

Starlette is one of the new foundational frameworks and I say foundational because it itself is a cool framework but there are many things built on it as well as you'll see.

So Starlette is one of the more modern frameworks.

It really, for Python Three, it takes advantage of some of the new language features about describing variable types and things like that and also supports asynchronous programming.

Built on top of Starlette is FastAPI which is a, maybe one of the best ways to build APIs these days if it's going to be an API that gets a ton of traffic.

FastAPI is on par, as fast as NodeJS, as fast as Go and it handles the scalability that those frameworks do as well so if somebody says, we can't use Python for this 'cause it's not scalable enough it turns out, actually, FastAPI totally does it.

To be honest, many of these other frameworks also completely do it.

You know, our site, running on Pyramid gets millions of requests per month and it does terabytes of data traffic and yet it runs just fine on this not so scalable thing right, but if you need extreme scalability FastAPI is good, Sanic is another one that's in that extreme scalability world.

It's another new framework that embraces Python's async and await.

The async programming capabilities that was recently added in Python Three and Tornado is one that's been around for quite a while and it has, also, this asynchronous programming model but it has a older traditional model that it's kind of built-in before Python itself had something for that.

Here's a whole bunch of frameworks and, actually we're leaving many, many of them out.

These are just some of the either new and exciting ones or the most popular older ones.

|

|

|

show

|

1:36 |

With all those framework options which one should you choose?

Well, it's not too hard.

If you want a traditional web framework then probably Flask is the way to go.

Maybe Django, probably Flask.

Like I said, Flask seems to have a little bit momentum and what you'll find is a lot of the more modern frameworks have adopted Flask's API but they make some slight variation on it.

Here are some survey results from The Python Software Foundation in conjunction with JetBrains, a developer tools company.

And they did a survey, the biggest survey of the Python dDeveloper space, in 2018.

They also did it this year but we don't yet have the results so we're going to look up the year before.

Notice how Flask is ahead and this call that I have on the right here is that surprisingly, Flask has grown by 15% usage among people who answered.

So, that's pretty awesome.

Django also is still very popular you can see my Pyramid is in here.

And there's some other things Hug, Falcon, Bottle and so on.

You don't even see Starlette or FastAPI, do you?

I believe those were either entirely too new and when they did this survey, they weren't even born yet they weren't created yet.

Or, if they were, they were very, very new and they weren't popular enough to make it onto this list.

So which one should you choose?

Well, if you want a traditional web framework like I said, Flask unless your organization is all about Django then just go with Django.

That's totally good.

If you need something scalable or you want something more modern look at things like Starlette and FastAPI, and possibly Sanic.

But, I'd probably start with Starlette.

It seems to be the foundation of so many of these things.

And, yeah, it's, it's really good.

|

|

|

show

|

1:51 |

Well, we've finally come to the part where we're going to play a little bit around with Python.

We've talked at the high level about why it's cool we've talked about how it's efficient the types of things you can build.

Let's just take a couple of minutes and actually build out something non-trivial.

We're going to build this website.

Looks pretty good, right?

Guitary, it will rock your socks off.

So here's a simple little Flask website.

And the idea is, we're a guitar company.

We're selling guitars.

Go here and, click that button and say, browse our guitars and you'll get a list you know, like a book store, or like any store that you would expect online these days.

Click that button, you get something like this.

Here's all the guitars, there's a cool picture there's the title, there's the price.

In a real app you'd be able to click on it and get all sorts of details and order it.

In our app we just have these two screens you can click those buttons, see all the guitars just the electric guitars, or the acoustic guitars.

So this is what we're going to build.

We're going to only take a super simple look at it we're not going to build every single detail from scratch.

For example, the web design we're just going to drop that in there.

But it's going to work with our Flask app that we're going to put together.

Now this is the first time we're writing some code in this course, and I did say, I totally understand that many of you may not be software developers.

Let me just set some expectations.

When you see what's happening here in this next couple of videos, don't expect to follow along every single step if you're not familiar with Python.

Don't expect to be able to recreate it.

It's not to teach you how to use Flask or the Python web framework; it's to give you a little bit of concrete sense of what it's like for someone, possibly you possibly someone else, to use these Python web frameworks.

So we're going to skip around a bit.

We're not going to cover every single detail.

It's more to inspire you with what is possible than it is to teach you every single nitty gritty detail.

Okay?

Here we go, It's going to be awesome.

|

|

|

show

|

2:44 |

Here we are in our Python IDE, called PyCharm.

There's a couple of good editors out there in the world.

PyCharm is one of them, my favorite.

There's also a Visual Studio Code and a couple of other options.

I've already set up a project by just creating a couple of folders and a file here.

To say that, we're going to use Flask.

And we're going to start by creating the Hello World Flask application and then we're going to extend that out, quite a bit.

Often, people think the Hello World app idea is to show you the simplest thing that you could possibly do to get Hello World on the screen.

But actually one of the important things of these, Hello World little demo applications is to show you the entire system is, kind of, hanging together and working.

Yes, I can run Python.

Yes, I can open up the web server and things like that.

So, let's do that, real quick here.

Want a new Python file, my convention is typically called app, and in order to use a library in Python regardless of whether it is one of these built-in standard library ones these batteries included or an external one that I have gotten installed you just type import and the name of it.

Here, you can see it automatically helps us out.

It says Flask, that's what we want to use.

So in here when I create a thing called app and we say Flask dot capital Flask and we give it the name of this file which you can get to like that.

Now we have to create a function which is called when somebody requests a webpage.

So I'll just call that index or something like that.

And here we're just going to say something like really simple return Hello world like that.

How about Hello Flask.

Now this itself is not enough to reformat that This itself is not enough to make it exposed to Flask.

We've got to do one more thing.

We're going to go over here and say app this is a route as in a destination URL, it's just forward slash.

Then finally down here we're going to say app.run.

Now there's a little convention in Python that allows us to import this into a production server or run it in testing.

So I'm going to do that here like this.

We'll go ahead and tell it it's in it's debug mode.

So in order to run it I just right click and say run.

There's our web server.

Look at that, it's already off to a good start.

What happens if I click on it?

Well, Hello Flask.

Whoo, make that nice and big, there we go way more dramatic when it's huge right?

Hello Flask, that's it.

So we've created our Flask web application.

There was no compile step, there was no installing things other than to get this installed into here.

Which I did before.

But there's no like messing with the system and things like that, it's just write these couple of lines run it, and we're off to the races with our Flask web app.

|

|

|

show

|

3:13 |

Hello World is fun, but it's not very realistic.

We want HTML pages.

These are the pages you see on the web, right?

Not this silly little string.

So let's do a quick thing to change this.

Notice I've added two directories static, where we're going to put some design things like images and style sheets and so on and this template folder.

So over here I'm going to add a file HTML file called index.html.

It has a title.

Let's call this Guitary.

That's going to be our little application.

And let's put a little header like this.

Welcome to our guitar store.

And what we want to do is we want to list out the guitars.

We want to have some data and show it.

First of all, we're going to need to change this around.

Instead of saying here's a string we have to go to Flask and say render_template and we have to give it the name.

And PyCharm knows about this convention so it's like, oh, here's one that looks like it might work.

If we rerun this and we check it out now woohoo, look, welcome to our guitar store.

We look at it, there's exactly what you would expect.

That's pretty cool.

However, we would like to pass some interesting data.

We're going to get a bunch of guitars.

How do we pass the guitars along?

Well, let's just say I've got a little bit of data here and it's a letter A, a letter B, a letter E, and a letter J.

So we can come in down here and just say values equal data or whatever.

We could just make up this name as long as we know what to refer to over on our template.

And we can come down here and we can have a little section.

We can have, like an unordered list that has a list item in it.

If you don't know HTML, don't worry about it.

We're just going to put some repeating HTML in here real quick.

So what we say is we say it'll be % for letter and value's what I called it and then down at the end, we'll have to say N4.

And the middle, we're just going to put out the value.

So let's put out the letter and let's say we want to make it uppercase so we can do that if we like.

Now, over here guess we'd better run it again.

If we click on this, look at that, what we get.

A, B, E, J.

And to be honest we're kind of done with Flask in a sense.

Like, this is mostly what Flask does.

And to give you a sense that's why I wanted to go through this of what web frameworks do in Python is we get then started, get 'em running we tell it what the URLs that it needs to process are what is the logic behind each one we're going to go and get some data and we pass it over to an HTML file which has a way to take this data and an HTML template like this and we didn't really look at the outcome, did we?

Let's actually look at the view page source.

And it turns it into just straight, static HTML.

So this is what Flask does.

We're going to do two quick more things just to make this work better.

We're going to go and get this data from a database or something like a database instead of just making it up here.

But this part doesn't change.

We're also going to put a little web design on here.

But again, that's just HTML and CSS.

That's kind of a separate thing.

If you understand what's happening here you kind of know what Flask does.

|

|

|

show

|

1:50 |

Well, this was fun.

But what we actually want is to have a way to browse the guitars.

So, let's come in here and define one more thing and then we'll put our demo app away.

We want all the guitars and it's going to look really similar to this.

Let's go down here and say we want the guitars to be somehow we're going to have to get those guitars from somewhere in a moment.

And we'll just come down here and say this is the guitars equal to the guitars.

This is our variable.

This is the data name in the template.

And this will be guitars.

We've already added this cool little service database thing.

So I can come over here and say catalog, service.

Pytrom knows about it and will write the thing to us it up at the top and then we just say all_guitars.

That will just say None.

So, that should return our guitars here.

And then we can just go and print it out.

We're going to need this guitar's file so let's do that real quick and then we're going to get it to be all guitars.

And each of these guitars has a name and a price and so on.

We're just going to put the name out for just a second.

Let's see if that works.

Go in your saved guitars.

Oh, whoops!

Looks like I forgot to put this part member that's required.

Here we have it.

Here are all the guitars!

Look how awesome that is!

Just those few lines, we've gone to the database as we've got all these.

That course is not nearly as beautiful as what we saw before.

so, the last thing to do will be to just drop in a little bit of web design and then we'll be actually done.

Kind of amazing.

|

|

|

show

|

1:52 |

The very last element to finish this incredibly simple but surprisingly decent little web application is to update our templates to use our static CSS that we put over here.

We look at this, we've made a small change.

We've put some buttons up here and we said For each guitar, instead of just putting the name put this little section and put the image and put the name, and put the price.

Other than that, we have not yet really changed anything.

Want to see the difference?

Let's find out.

Man look at that!

Isn't that incredible?

Look, we got this cool web design here and we go click on guitars.

Bam!

There they are.

We can see our guitars just like before listed on this page.

Wheezer Classic, Acoustic Black, Mellow Yellow but now with just a tiny bit of change in the instrumental and a little bit of CSS, we have what I consider actually a quite decent looking little website here.

Super, super cool.

We'd have to do one more step to make these buttons work right now.

They can't do their full train they just show all the guitars, but that's like one more line of code honestly, and then that's it.

So I'm just going to leave it here, because remember the idea is just to give you a sense of what this is like.

What do you think?

Incredible right?

I mean, look at how amazing this website is and then here's the totality of what we put onto that page.

That's the guitar listing there.

And over here, this is the entire flask site.

So when I say Python is easy to use it's fairly easy to read.

You don't have to do too much to get started.

Well, here it is.

We could of course make this super advanced; do all sorts of things, but the simple version this website is actually incredibly simple to put together.

With just a handful of minutes and here we have it.

Granted the web design was already done but we wrote all the logic of it from scratch.

That's amazing.

|

|

|

|

19:50 |

|

|

show

|

2:45 |

You should be aware by now that Python and data science go together so well and it's one of the fastest growing parts of Python.

In fact, in that survey I showed you in the web development side the Python software Foundation survey along with JetBrains they also ask, Are you a web developer a data scientist, or other?

Web developer and data scientist, those were about equal so this is a huge part of Python and I think this is one of the areas as I mentioned in the full spectrum section that is really unique for Python.

People can come from other disciplines from say science, or engineering, or economics and they can come into Python and they can do this type of work that we're about to talk about because Python is so easy to get started.

But they can do real honest to goodness work that has incredible implications as far out to the boundaries of science as you want to think.

How far out?

Well, what if we're using telescopes?

Yes, astronomers all over the world use Python to study all sorts of things.

To study stars, to look for exoplanets there's a whole bunch of libraries.

One of them, the most popular one, is called Astropy.

So this is a whole project for just doing astronomy with Python.

What was the biggest discovery in astronomy recently?

Well, as a non-astronomer, just looking in from the outside it was probably the fact that the very first picture of a black hole was taken.

This is not as easy as it sounds.

This doesn't just mean better cameras that can focus more.

There's all sorts of reasons that it's very hard to actually get a clear, true picture of what a black hole looks like.

So the people worked on this project they actually used machine learning to try to figure out what the real image looked like and tried to piece it back together from a bunch of parts with many interpretations but they taught the machine learning model what the most likely outcome was, and they recreated it.

They use a whole host of libraries that are from the data science side of Python NumPy, SciPy, pandas, Jupyter, Matplotlib and Astropy, of course.

This is just one discipline, and one huge discovery.

It would not surprise me if a Nobel Prize came out of this work done with Python.

But speaking of Nobel Prizes people did win the Nobel Prize for finding the Higgs boson at the Large Hadron Collider.

Yeah, Python was also involved in that as well.

Data science and Python, they go really well together and it's partly because of this full spectrum language.

It's so easy to come in as a non-developer pick up the little bits you need and just keep going, and going, and going until you've all of a sudden used machine learning and artificial intelligence to recreate the picture of a black hole.

Wow.

|

|

|

show

|

1:50 |

Most of this work that I just described was done in something called a Jupyter Notebook.

These use to be called iPython notebooks but now it's Jupyter because it actually runs many other languages.

Even though it started out being just Python now you can do things like R and Julia and even .NET and C++.

It's kind of everything.

So it's these notebooks that have this visual look here.

You can see the Lorenz differential equations.

There's a little description.

You can write a little bit of code and a little more description, a little bit more of code and oh, now you get a graph that you can interact with with these little widgets.

It's really really great for exploring data when you don't know exactly what you need to do.

You get the data and you start looking it at it and you slice it this way and that way.

And you ask questions, and you see it.

Very very different experience than writing the Flask web application.

In Flask we broke it into a bunch of little files we put them all together.

We wrote a little code here, called over to that thing passed it off to that.

Notebooks are these one thing here that you can work with and you just kind of explore it as you go.

You don't even necessarily know where you're going to end up.

At least the early stages of much data science is done this way.

You probably don't productize it.

You don't take this notebook and make it a web service that then can be consumed by an application.

You probably go and convert it over to that Flask type of story that we were talking about.

But at the beginning, the exploration and the explanation side, it's with these notebooks.

Oh, and this also happens to be similar to things like Matlab or R Studio.

Unlike say Matlab or Mathematica, instead of costing thousands of dollars, no.

This costs, well, nothing.

It's free.

I's supported by NSF, National Science Foundation in the United States and a bunch of other organizations as well as all the scientists working on this project as part of their research.

It's open-source, just like Python.

|

|

|

show

|

3:19 |

It's one thing to hear about Jupyter.

And Jupyter notebooks but to see them in action well that's something entirely different.

So the next demo that we're going to do in this course, may be the very last one actually is going to be working through a small analysis of real data out on the internet using Jupyter.

Over here, I've already set up an isolated environment with Jupyter.

All I have to do over here is type jupyterlab after installing those things.

Just going to get started and launch a browser here.

The browser's going to log my into my Jupyter server.

So what I want to do is I want to create a new notebook, and I have these different environments this is the one that I've set up for this course.

And it's all ready to go.

Click here, it creates this not named, which is kind of annoying so we're going to call this reference counter, something like that.

Now, what is this thing that we're in here?

So, what we can do is we can type in different types.

We can either type out raw stuff we can type in markdown, which is a way to write in formatted text.

If we go over here I can type something like this and what were going to do is, we're going to go to a site and were going to analyze what is the relative reference counter or authority of other sites, according to this one?

What I mean by that is let's take all the links to the site and figure out where it's looking out to in the world.

Figure out the domain names.

Maybe Github or Twitter, or Python.org.

How many times do each of those appear and then draw a graph, say we'll this one is the most popular, so it has the most authority.

We refer back to it the most.

So we can type markdown, that's in markdown.

We can type a little title here.

We're going to use a place called Python Bytes.

So, hit a button to execute that markdown and over here, on Python Bytes this is one of the podcasts that I run.

You maybe remember that from the beginning.