|

|

|

8:12 |

|

|

transcript

|

1:45 |

Hello and welcome to my course Excel, to Python.

We're so excited to have you here.

Have you been using excel a lot in your data work and feel like there has to be a better way?

Or maybe you have heard the Python is a good language to learn for data science and you will see how you could apply these concepts to your job.

If so, then you're in the right place.

We're going to have a lot of fun and get many ideas for using Python as an alternative to the task.

You might try to use Excel for today before taking this course.

You should have some experience with Python.

If you are not comfortable with the basic concepts of Python.

Then should look at our other course Python "Jump Start by Building 10 Applications" or "Python for the Absolute Beginner.

In this course, you will learn how to set up and use a robust Python environment form, organized and repeatable process that could be used to replace many of the cumbersome tasks you use excel for today.

The majority of the code in this class will be using the "Pandas Library" we will take a unique way to teach this powerful library by showing you examples and excel and translating them to the pandas version.

You will start to learn more about pandas and understand when it will be a good alternative to your current Excel solutions.

By the end of this course, you will understand how to incorporate Python into your daily analysis workflow.

You will also find ways to streamline and automate many of the boring and repetitive tasks use typically do in Excel.

Michael Kennedy frequently says that knowing Python is like having a superpower.

I think there's a great analogy, and I suspect that by the time you finish this course, you will have many ideas for how you can take this knowledge back to your job, improve a tedious process and look like a super hero for saving your organization time

|

|

|

transcript

|

1:28 |

before we go into Python, let's talk a little about some of the challenges with Excel.

Let's take a look at one really big financial disaster that had excel as a part of the problem.

Many of you may have heard about the massive trading losses that JP Morgan and Chase experienced in 2012.

In this specific example, JP Morgan and Chase lost over $6 billion.

There were many contributors to this error, but one of the compounding issues was that Excel performed badly is a financial modeling tool I'm sure you may have not made an Excel error that cost billions of dollars but if you have been around excel enough, you have probably seen people try to use it in ways that was not intended for Excel errors are not exclusively founding companies.

The government, not surprisingly, uses excel and makes some of the same mistakes in the next example, the British intelligence organization.

MI5 found, they had mistakenly bugged 1000 the wrong phone numbers due to an Excel formatting error in the spreadsheet.

Maybe you have not seen a financial modeling error, but I can almost guarantee that you have seen data issues in your spreadsheets when numbers and dates are not stored properly.

These examples highlight the widespread adoption of Excel and how many meaningful decisions are made based on the result.

An Excel spreadsheet in your own usage of excel.

You've probably seen some of these types of errors, hopefully as one of the reasons why you're taking this course.

|

|

|

transcript

|

1:46 |

Excel is widely used for many tasks.

Why does it lead to big and small errors and complaints from users?

I think part of the reason is that Excel is the only tool.

Many analysts know.

And they tried to use it for all their tasks.

The quote, If your only tool is a hammer then every problem looks like a nail is very appropriate in this context.

If you are an Excel user, you probably used it for many things.

A simple database, a task tracker, Project planner, Quick calculations complex modeling data cleaning you name it.

Some of these tasks are great for Excel, others not so much because Excel can only do so much.

People try to use it for everything data related.

Instead of alternative options like Python, that might be more appropriate for the task.

The next problem with excel is that all the flexibility makes it difficult to trace the flow of a spreadsheet Excel.

You can have formulas that reference other spreadsheets or have complex nested functions and visual basic behind the scenes.

The simple representation of a spreadsheet hides a lot of complexity that goes on behind the scenes.

Another related problem is that Excel formulas make it very easy to make subtle mistakes.

They're difficult to detect.

We already mentioned data formatting is one example.

I can almost guarantee you have had issues where you forgot to include the correct range, no formula, Or maybe your data included zeros or blank values that gave you erroneous results.

Buying these types of errors can be very challenging.

The final issue with excels performance, especially on large data sets.

In addition to managing Data, Excel stores information about formatting formulas and logic to analyze the data.

This overhead makes opening a large spreadsheet, a slow process with modern computers.

We have gigabytes of memory available, so this shouldn't be a limitation.

|

|

|

transcript

|

1:26 |

Python has been around since the late 19 nineties but has exploded in popularity recently This chart, from a Stack Overflow survey shows the surge in Pythons popularity over the past five years.

Popularity in and of itself is not a reason to use the language, however, popularity means there's a large pool of users documentation libraries to solve various tests.

Part of the reason for Pythons popularity is that it is easy to learn.

Python has many language characteristics to make it easy for brand new programmers or new to Python programmers to get up to speed and productive in a reasonable amount of time, one of the areas where Python has improved in the past few years.

Is installing it on windows, Python, has always worked on Linux and Mac and could work on Windows but sometimes challenging to get working.

However, in recent years, even Microsoft has taken a supportive stance on getting Python installed.

In fact, they have official documentation, describes how to use Python for development windows.

Finally, Python has always been considered a good glue language for automating tasks.

Within the past five years, the Python ecosystem has grown to include libraries such as pandas, which are excellent for many of the tasks Excel struggles with the pandas Library constrain line many of the data wrangling and analysis task that excel is not suited for.

In addition, pandas and other libraries like NumPy and Sci-kit-Learn our industrial strength data science tools that can grow with you as you progress your skills.

|

|

|

transcript

|

1:02 |

So what will we cover in this course?

We'll talk about how to get set up on your computer.

We'll talk about the basic Python concepts that you need to know before getting started.

We will focus on developing on Windows environment, and mostly ideas will also apply to multiple operating systems as well.

One of the things will talk about how to organize your environment so you don't have a mess on your hands.

We'll be using Jupyter during this course.

We'll talk about tidy data and the best practices for storing data, Pandas will be the core library we discuss.

We'll talk about reading in Excel files.

What is a data frame?

Viewing and adding rows and columns will talk about basic data activities like filtering, cleaning, grouping, emerging multiple files together.

We'll talk about different file formats and how to work with him, and then finally will close this out with a case study that will bring all of

|

|

|

transcript

|

0:10 |

Don't worry about getting lost following along with all the code, you will have access to all the materials on get up, including the notebooks and the example data sets.

|

|

|

transcript

|

0:35 |

So finally, you probably want to know who I am.

My name's Chris Moffitt, and I'm excited to be your instructor for this course.

I've been using Python for over 15 years to automate many of my day to day business tests.

In addition, I am the creator of the Blog Practical Business Python I have been writing articles for the past five years about using Python, pandas and other tools to effectively solve business problems.

Finally, I am an instructor data camp and have a course on Seaborn.

Thanks a lot for viewing this course, and I'm really excited to get started.

|

|

|

|

5:43 |

|

|

transcript

|

1:00 |

Now that you know a little bit about this course, I'm sure you're excited to get started before we go into some actual coding.

I would like to go through a little bit more detail about the concepts you'll need to understand before we continue.

If you do not understand any Python, I recommend you check out "Python, Jump Start by Building 10 Applications" or "Python for the Absolute Beginner", choose the one that best suits you will help you get you up to speed on Python quickly.

Since this course is not cover basic Python syntax or usage, I want to go through a couple of the key concepts you need to understand by presenting some example simple code snippets.

In this course, you will be installing Python libraries with pip or Conda.

You will be importing Python modules.

You need to understand dictionaries and lists and assigning values to variables Creating and calling functions as well as working with f-strings and pathlib with the code we will be using Python 3.8 and do the actual coding in Jupyter notebooks.

|

|

|

transcript

|

0:49 |

for this course, I will show all examples in the Windows operating system.

All the examples will work find on Linux or Mac, but I am assuming that many of the people in this course are in positions where they use windows as your day to day computer.

When it comes to installing and running Python, I prefer to use Mini Conda to install your environments and library it needs.

I also encourage you to use Conda to make working environments and install packages.

One of the reasons I recommend using the Anaconda is this more lightweight than the fully anaconda distribution, so you can install what you need.

In addition, Conda makes it much easier to install many of the scientific stack libraries like pandas, Sci-py and Numpy.

There's also performance gain because these libraries are installed with MKL optimization, which will ensure Python and the underlying libraries run as fast as possible on your system

|

|

|

transcript

|

0:12 |

Here is the screen where we can download Mini Conda.

Right now, the most recent version for Windows is the Python 3.7 version.

I'm going to go ahead and download that, and then we'll walk through how to install it.

|

|

|

transcript

|

0:37 |

Once you've downloaded the package, start the installation process.

For the most part, you're gonna follow the defaults.

There are a couple that I want to walk through in a little more detail.

The first one is just installed this for yourself.

You don't need to install this for all users.

Use the default location and then these advanced options air a place where sometimes people can get tripped up.

Leave it as it defaults here.

You don't need to add Mini Conda to your path.

and make sure you have registered Mini Conda as your default Python 3.7 environment.

Installation process is going to be pretty quick when we're finished there is no need to launch these other options.

So just go ahead and click finish.

We are done.

|

|

|

transcript

|

0:23 |

Now that your installation is complete, you have to actually launch a condo environment.

You can find this in two places, since the application was just installed.

It's going to be at the top of your start menu under recently added.

There will also be an option under the Anaconda 3 (64bit) folder.

Both of these options will have a power shell prompt and a regular prompt.

I prefer the regular prompt, but it's kind of user preference.

|

|

|

transcript

|

1:52 |

Once you launch your anaconda prompt, you show a couple commands on how to navigate and create new environments.

If you want to see a new environment or a list of all the environments that are installed, use "Conda info --envs".

As you can see here, I only have a base environment.

The base environment is a little unique.

You want to make sure that you keep that clean, and what I mean by that is, for the most part, don't install additional packages in your base environment.

You want to create a new environment where you do your work, use, conduct, create, give it a name, we'll call it work, and we're going to tell it to use Python 3.

And by just saying Python 3, it's gonna automatically choose the most recent version of Python.

As you can see here is using Python 3.8.

Installing a couple of their libraries, we choose yes.

It'll download the packages and install them once it's done installing.

You want to activate your environment so you do Conda activate work and the problem changes and now you can see that I'm working in the work environment.

You also want to install additional packages in this environment.

So we're going to "conda install pandas xlsxwriter xlrd notebook", mix LRT and notebook.

You don't have to install them all in one line.

You can do multiple installations, whatever makes sense for you.

But I think it's important that you understand have installed those packages and get used to doing that because having different environments is a really important part or an important benefit of using Conda.

Now we're done.

And if you get these kind of debug messages, you don't need to worry about that.

But the important thing is that now we have our two environments and we are in our work environment, and that's where you're gonna want to be for the rest of this course, actually doing the work.

|

|

|

transcript

|

0:50 |

now that you have your conda environment installed, want to give you this quick reference so you can refer back to it in the future?.

One of the things to keep in mind is that you want to keep your base environment clean, do all of your work in another environment and don't install additional packages in the base environment.

If you want a list your environment, use Conda info --envs and when you create new environment, give it a name.

And in this case, I tell it to use Python 3, which means just use the most recent version of Python.

You can also specify a specific point releases as well.

Once that environment is created, you can activate it and you could install one or more packages using the Conda install Command.

You can also use pip anaconda environment and I recommend use pip this way "python -m pip install <package>.

To make sure you're installing in the environment.

|

|

|

|

7:11 |

|

|

transcript

|

0:50 |

in this chapter will talk about how to organize your files and directory structures so that you can work most effectively with data in your Jupyter notebooks.

If you've worked with Excel for any period of time, you probably see a situation like this where you have a bunch of files in a directory.

It's very hard to tell which one is the most recent file or what you had to do to actually develop those files.

When working with Jupyter notebooks, you can, unfortunately have a similar situation.

We have a bunch of untitled notebooks in a directory which is very difficult to work with.

I really believe that having good organizational set up before you get started is critical for building a manageable process.

If you like cooking shows like I do, there's a concept called "Mise en place", which essentially means that you need to get organized before you get started working, you need to have a consistent file structure and naming convention that you use on every

|

|

|

transcript

|

1:00 |

I would like to walk through the file structure I'll use in this course and also think it's a good basis for your own projects.

I store all the files underneath the window directory and within that window directory have subdirectories for each individual project.

One of the important things is that I only store the Python notebook files in one directory.

I don't put any of the Excel or see SV files in that directory, The Excel and See SV files go into a subdirectory called Raw and put the original files there and make sure I never modify them.

If I do need to modify them, I do it programmatically and copy them into the process directory.

Once I'm done with all my work I stored in the Reports Directory.

If this seems like it's a little complicated to create maintained for every project, the good news is you can use the cookie cutter project, and I've provided a template that will create this directory structure for you.

|

|

|

transcript

|

0:21 |

once Mini Conda is installed, you have a couple options for launching your Jupyter notebook.

We talked about the to prompt options earlier.

There's also now an icon to launch.

The Jupyter notebook directly, but I prefer to use is the Windows Terminal, which you can download from the Microsoft store.

Once you install it, you can start a terminal session and activate your environment and launch the notebook server

|

|

|

transcript

|

0:43 |

If you choose to use Windows Terminal, here's a quick example of how you can configure it.

to launch Conda.

Once you launch the terminal, there's an option to update the Json settings File that control the various terminal environments.

Here's an example of the Conda environment that I have set up.

If you choose to use this, you'll need to modify to include a unique guid.

You'll also need to update the path so that the correct Conda environment starts once you launch the terminal.

And it's also a nice setting to configure a starting directory so that once you launch the terminal, you're in the right place in the file system so you can begin working.

|

|

|

transcript

|

1:05 |

Let's go through a quick example of how to install and use cookie cutter.

We've launched our terminal environment and we're in the base Conda environment.

Now activate the work environment, and now we need to install cookie cutter.

Once the insulation is done, you have a cookie cutter command that is available to you now and only need to pass to it is the url for the github repository that has the cookie cutter template.

Once it downloads the templates gonna ask you for a project name and it's going to take this project name and converted into a simple directory name.

You can accept the default.

And then I encourage you to put in description here to help you organize your projects.

So we're going to call this CFO, and once you're done, you can go that CFO report directory and you can see that we have to sample notebooks as well as the data and reports directory structure that

|

|

|

transcript

|

0:59 |

Now that we have our directory structure set up, let's launch the Jupyter notebook.

I prefer to do this from the command line so you can see if anything breaks in the process.

Now, by default, the Jupyter notebook is gonna open a browser.

But sometimes that doesn't work.

And I just want to walk through what you do there.

If your browser fails a lot, so you'll need to copy this URL.

Certainly not a requirement.

Like I said, most of time, the browser opens correctly, but if you need to, I want to show you this.

And when she pasted in there, you have the Jupyter notebooks and I want to show you an example.

Remember with Cookie cutter how we created information about the CFO report while it populated that in the notebook for us.

So this will help you keep yourself organized and understand a little bit of the history.

And as you get more and more experience, you can develop your own process that is repeatable for each of the types of analysis that you do on your own.

|

|

|

transcript

|

0:45 |

once you get into your actual notebook, it's also important have structure and certain guidelines so that it is easy to understand the analysis you did when you come back to it many months in the future.

One of the most important things to make sure you have a good descriptive name at the top of your notebook no more "untitled's".

I also like to put some free text in there that talks about Why am I doing this analysis?

Where did the files come from?

A basic change log.

I also include all my imports at the top and then make sure I defined my input and output files early using path lib.

And then finally, I tend to always save my results at the end in my

|

|

|

transcript

|

0:49 |

since we'll be working with Excel files quite a bit.

Let's talk a little bit about structuring data because Excel can do so much there.

Certain types of data that is really easy to read in to Python and other types Not so much.

So the first one is sometimes called wide form data, so you can see this example of where we have sales data for each month of the year and grand total.

That's easier read into pandas, tidy or narrow data is the preferred format for reading in data, and this is transaction level data is probably really good example of tidy data, the type of data that isn't really good for reading and a pandas unstructured data.

So if you have an Excel file that looks like this where you have just a bunch of data all over the place, that's probably not going to be a good candidate for you to read into pandas.

|

|

|

transcript

|

0:39 |

We talked a lot about how we're going to use Python to replace a lot of processes we use Excel for.

But I want to acknowledge the Excel is going away We are still going to use it as an input file.

It's also useful for an output.

summary as we're trying to understand the data and build out our analysis.

And then finally, When we are preparing and presenting Data Excel is ah is a very useful tool for that.

One thing that Excel really does well is ad hoc analysis and financial reporting and a common tool used across the organization.

So my advice to you is to learn where Python makes sense.

But don't try and replace everything that excelled us today.

That's just not a realistic goal.

|

|

|

|

25:12 |

|

|

transcript

|

0:49 |

in this chapter will talk more about pandas.

Pandas has been around since 2008 and is the primary library within Python for doing data analysis and manipulation.

Pandas has a lot of features and a lot of functionality.

And sometimes as people transition from Excel to pandas, it can be challenging to translate your Excel terminology.

to pandas terminology.

And that's what we'll be talking about in the rest of this course.

The other key take away here is that pandas has a lot of functionality You can think about it as a set of tools, and we are going to go through many of them to get you started.

But as you grow and develop and tackle more and more challenging problems, you'll start to use more and more of the tools in pandas to solve those problems

|

|

|

transcript

|

1:12 |

understanding the pandas data frame is core to working with pandas, and we'll compare to an Excel sheet just to give you a mental model for how to work with the data.

Here's an example of a fairly simple Excel spreadsheet, and once we read it in two pandas, this is the representation we get for the data frame.

It looks very similar to the spreadsheet, but there are some unique differences.

The first thing you'll notice that is that column names and excel don't always mean that much.

Frequently you'll access a column using the letters at the top, whereas when you read the column into pandas, the column name is gonna be very important.

The other thing is Pandas has an index in this case 0 through 999 that is somewhat similar to the road numbers in an Excel spreadsheet or the index in a sequel database.

If you're familiar with that, we'll talk quite a bit about indexes and columns.

The other concept is if you have a column of data or a row of data that's called a panda series, so that can be somewhat similar to if you select column "A" or Row "4" in this spreadsheet.

|

|

|

transcript

|

0:46 |

before we read an Excel file into pandas.

I want to walk through the file will be using and talk a little bit about how you would review this file if it was the first time you were looking at it in this simple file, you can see it.

There's only one tab you can count, how many columns you have, and then, if you want to see how many rows, so it gives you a basic idea of the data.

But most Excel users just kind of look at the file.

Don't look at the data in a whole lot of detail, will start to do the analysis right away and this contrast with the way you would do things in Panda's, where you would use some commands to understand the data in a little bit more detail before you start to do analysis.

|

|

|

transcript

|

4:13 |

Okay, now let's go ahead and read in the Excel file into our Jupyter notebook I'm going to go through the process of launching the Notebook one more time.

So let's "conda activate" our work environment.

The next thing we need to do is go into the directory where the files are So I placed them in a sales analysis directory, and now I'm going to run Jupyter notebook.

And here's my notebook.

The two files that are already there were created by Cookie Cutter, but I'm gonna go ahead and create a new one so we can walk through that process.

Click on New Python3 notebook and remember, one of the first things need to do is make sure to change the title.

It comes in as an untitled notebook, so you can see that here as well as in the URL.

So let's call this sales analysis exploration.

That's a really important thing to do so that you're in a good habit of organizing your data, I am going to create a markdown cell and press shift enter so that it gets rendered.

This is a good habit to get into so that you understand why you did this notebook and what the days sources were and how you wanted to use this to answer a business problem.

So now let's get into actually writing some Python code.

We put our imports at the top, and I'm just going to use pathlib to access the files and then pandas in a second to read in that file.

So what I've done here is referenced the sample sales file in relation to the current working directory.

And it is in a subdirectory called raw.

So I define that input file, and then I'm going to read that file in using the "pd.read_excel()" function in pandas and nothing happens.

But you can see that the number incriminated here.

So there was something that happened behind the scenes.

If we want to see what a variable looks like, we just type df (for data frame).

And now we see the data frame representation that looks very similar to the Excel file.

So let me go through a couple things that you will typically do the first time you read a file into pandas.

You can use the head command to look at the top five rows.

You can use df tail, see the bottom five.

This is really helpful.

Almost every time you read in the data, you're gonna look at what comes at the top and what comes in at the bottom Remember, we talked about columns, So if you want to look at what the columns are, type df.columns and you can see that has a list of all the columns they calls it and index, and that's gonna be important later for us to access our data.

The other thing that I like to do is the shape command - "df.shape".

And so this tells us how many rows.

So we have 1000 rows and 7 columns in the data.

So this is a really compact way to understand your data and really important thing to do as you go through and manipulate data to make sure that you are keeping all the data together, not dropping things inadvertently.

The other useful commanders DF info - df.info(), which shows you all the columns, how many different values are in the column and what data type they are.

This is really important as we start to manipulate the data because some of the analysis can't be done if the data is not in the correct data type.

The final command I'm gonna show is DF describe - df.describe() - which gives a quick summary of all the numeric columns.

So this is a really handy way to get a feel for the overall structure of your data.

It tells you how many instances of the data you have.

It does some basic math on the mean staring deviation the men Max and the various percentiles.

And this is all a very standard process that I go through almost every time I load in data and starts to get in my mind what the shape of the data is, what the structure is before I do further analysis.

|

|

|

transcript

|

0:31 |

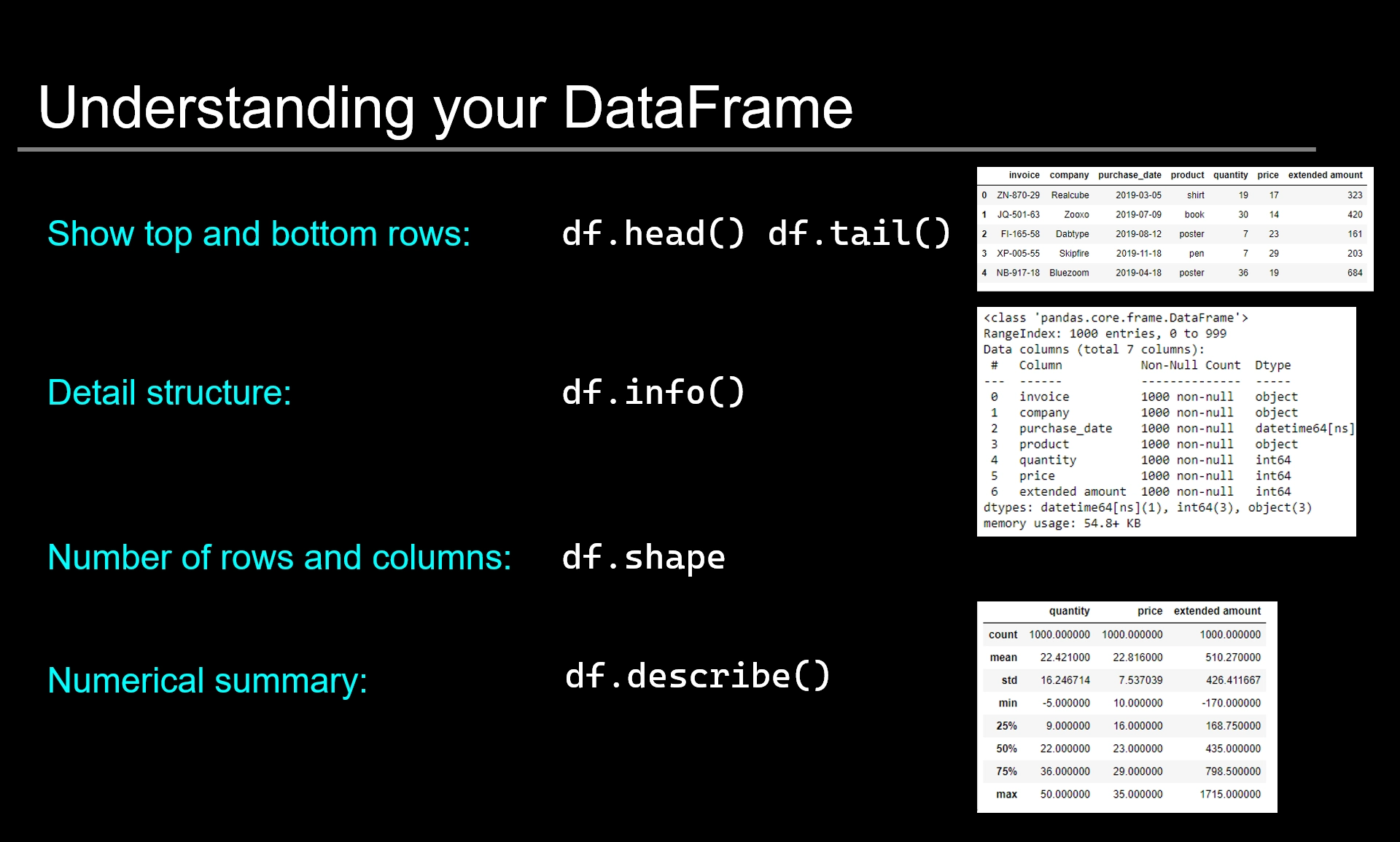

After creating your data frame in pandas, you want to use several commands to understand how it's structured.

If we have a data frame called DF and want to see the top of bottom rows, df.head() and df.tail() will show the bottom and top rows.

The info function will give more details on the columns, including memory usage and data types.

Understanding the number of rows and columns is important as well, so the DF shape function will tell us that, and finally, calling describe on a data frame will give us a numerical summary of the values in that data frame.

|

|

|

transcript

|

2:42 |

Okay, let's go through some more examples of how to work with Jupyter notebooks and pandas, and I've opened up my notebook and I want to walk through something that could be a little confusing to new users.

So if you look at this notebook, I've just opened it up and you can see that in this cell I'm showing the data frame by typing DF.

And so there may be a temptation to go in here and let's just take a look at the head and remember, shift + enter.

I press that and I get a name error df is not defined.

And the reason is I haven't actually run everything in the notebook, so it's really useful to hit this menu option.

Kernel, restart and run all, and you'll get this option to restart.

Run all cells.

You do that and what this does.

It runs through all of the code from top to bottom and makes everything live in the current Kernel.

So now if I make a change, everything works.

You can also see that the number has incriminated.

So went from 1, 2, 4 5, 6, 7, 8, 9 and then back up to 10 and 3 is gone because I reran in that cell.

So this points to some of the power of Jupyter notebooks, but also how it can be confusing sometimes if you get out of order.

So the thing I would recommend is that you frequently use Kernel Restart and run all And if you don't want to use the menu, this command here, restart the Kernel rerun, everything will do the same thing.

So once we've done that, we've taken a look at our data frame.

And now we want to actually look at some columns.

So the simplest way to do this, remember, we have.

If you ever forget what columns do I have, type df.head() and we have these columns called Invoice / Company / purchased_date.

So let's just say df.invoice and I see all of the invoice column all of the values in the invoice.

You can see each one it truncates if you are in the middle because it doesn't want to show 1000 rows, which makes sense.

It's pretty good.

That should be pretty intuitive to someone that has worked with Python before.

But what happens if we want to look at this extended amount where there's a space in the column name, you get a syntax error, and that's because Python doesn't understand what this space means.

So the syntax you need to use is put a bracket around it and quotes, and then you can reference the column and here you go, so you can see that.

323, 420, 161, 203, 684.

if I scroll appear 323, 420, 161, 203, 684.

So the reason I point this out is you have two options to access the columns, and sometimes you'll see code that has that period versus the bracket notation.

I encourage you to always use the bracket notation.

It will make your life easier when you have these types of situations and it's consistent with the other operations you're gonna want to do and pandas.

So the main reason I bring it up is so that you're aware of it, and you can keep that in mind when you are doing your analysis and doing your problem solving.

|

|

|

transcript

|

3:00 |

So the next thing we could do if we select a column will use this df["extended amount"] column again, and what we can do is if we want to take the sum of it, it's gonna operate on all the values in that column and added up.

We can also do things like, let's say, I don't want to even do a new cell.

I can go back edit it.

Press shift enter again and gives me the average, which is pretty helpful.

It can also do some other things that you may not think about as much that can be really useful in your data analysis.

So if we have a question of wanting to know how many invoices we have, we can use df["invoice"].unique().

So now we can say, Oh, well, we have a unique invoice in each row that's helpful.

The other thing you can do is all of the options that we've talked about our full data frame.

You can run those on a single calomn if we just want to see the product column and just want to look at the top by rows, you can do head to see that you can.

This one might be one where being able to see the number of unique products.

So if you were just looking at this data for the first time and want to know while I see I've got shirts and books and posters, how many unique values do I have?

You can do that as well.

So there's four different types of products, so that could be a pretty helpful function for you.

There's couple others that I wanna talk about.

Another one that is useful is the count function that you'll use in other settings.

So you get the idea that you have selected column and then you can performing operation on that column.

There's also some handy ones if you want to do a short cut.

So let's say I want to know...

Well, I have four different product types.

How many shirts?

How many books?

How many posters do I have?

You can use value counts for that which is really useful is a really common thing that I do.

On almost every data set is you take a look at your value counts, see how many you have, and then one of the other things you can do is you can chain operations.

So if we want to the value counts and want to maybe see what percentage?

Or maybe let's say divide those by 100.

You can use the DIV function to do that and the other.

The other thing to keep in mind is when you have these functions, you can pass arguments to it as well.

So this one bypassed normalize equals true, it gives me the percentage that each value shows.

|

|

|

transcript

|

1:11 |

So now the next thing might be Well, how do you work with multiple columns?

Let's get some summary information on the price and quantity columns.

So one way to do this if we want to work with multiple columns, is we define a variable and we need to use a list.

And then if we want most about columns and then if we want to get let's say, the average value for the price and quantity we can do df["summary_columns"] and this is going to calculate the mean we could ...

if we wanted to You could do -sum.

Sum may not really be useful in this context, but let's go and stick with mean.

So that's one way that you can combine multiple columns together and then let me show you that you can define a variable like that, and I think that's a good practice.

But if you choose not to define a variable, and I'm cutting and pasting on purpose so you can kind of see how this works.

I just defined that list of columns that I want to work on, and it can do that as well, so you'll see both methods when you are looking at your pandas data and looking at examples online.

I want to go over one Other example.

If you want to use the describe function, you can do that as well.

And remember, we ran that on full data frame once again have chosen just a small set of data frames and then the final thing.

Let's go through a real quick example of how we would add a new column.

So let's say we want to put a country column on here, and we know that everyone is in the US.

So think about how you do that.

If you had an Excel bio, you would probably put us a in a columm and drag it down.

Here.

You just assign the string USA to that country column.

And now if you say df.head(), it's gonna show country for all the values.

And if you want to check, you can look at df.tail() and see that country is everywhere.

So we can also do something similar if we want to do math.

So let's say we have a 15% or a, 1.5% fee.

We want to add we can, Say Okay, let's add df.['fee'] = df.['extended amount'] * .015 So what this does is adds a new column called Fee.

It is taking the "extended amount" times 0.15 and adding it as the entry in the fee column.

So we press enter.

And now if you look at the column, you can see the fee.

So this is "compare this" to how you would create a formula in Excel, where you would have to create that formula and drag it down for each row.

Here you just enter it once, and pandas takes care of making sure that everybody gets that value.

So it's a really compact and simple way to analyze things.

It also makes it easy to troubleshoot because you're only putting that formula in one location

|

|

|

transcript

|

7:46 |

Now let's summarize some of the column work that we did in the previous exercise so that she can reference this in the future.

If you want to do math on a single column, use the brackets.

Put the column name in there.

In this example, you can do this sum or the average on the extended amount column You can count or do number of unique values on a column.

If you want to do a frequency table and see how many products and how many examples or instances of that product there are, you can use value counts.

And then, if you want to add a new column in this example, if you want to do a mathematical calculation in this example, we multiply our extended amount times 0.15 or you can enter in a string.

And then pandas will make sure that everything is copied down, essentially to all of the other values in the data frame.

And then, when you want to work with multiple columns, you put a list inside the brackets, and then this example will show the average for the price and the quantity, and you can do this for as many columns as you want in your data frame

|

|

|

transcript

|

0:47 |

in the next section.

We're going to work with our column names a little bit and see how to access some data.

So remember when we open up our notebook, we need to restart and run all the cells again, which is pretty quick.

The other thing I want to walk through is kind of how I break up my notebook.

So now since we're gonna work on a new section, I am gonna put a break in here call this column names I'm using markdown and I selected the markdown type and you still press shift enter and it will render the markdown.

This is a good habit to get into so that you can organize your notebooks and know where you are and what you're doing.

So we talked about how important column names were.

If you look at the column names, property, you can see all the columns.

We can also convert that to a list if we want, which is gonna be helpful sometimes when you need to clean them up because we care about column names.

You'll notice some potential errors with the column names here, for instance.

We already talked about how extended amount has a space.

And then also, when I added those new column names of country and fee, I capitalized them.

But none of the other ones were capitalized.

So let's see how to clean those up.

This is a very common task that you're going to do when you're working with your data.

So we're gonna use some list comprehensions to clean up the spaces.

So let me type it out and then I'll explain what's going on.

So what this does we talked about the DF columns list, and I'm going to replace all the spaces with Underscores.

So we've converted our extended amount where it used to have a space to have an underscore.

So this is going make it a little easier for us to manipulate the data and keep our columns consistent if we want to assign that back so that that is the column name going forward.

Because if we look at DF head, we can see we haven't actually changed anything, so to change it, and I'm going to cut and paste to show you how I would normally do this.

Put that in there, and now when we run it and take a look at head.

You can see that we now have extended amount, the same way.

You can also do a similar sort of cleaning activity for the country and fee columns.

So let's go ahead, and I'm going to copy this instead of doing a replace, I'm going to lower.

So see now country and fee are lower case so we can do ah similar sort of activity.

And I purposely do the copy and paste is every retyping everything, because this is the way you work in a notebook.

It's an interactive process where you test out some code, see what the values are, and then use that to make your changes.

Okay, so now countries lower case fee is lower case.

Our data frame has all the column names that we would expect.

We can start to look at how to access the data in the data frames.

So we talked about columns.

We've talked about how to select an individual column, and in Excel, you would go in and use your cursor and click on a row or sell and make your changes.

You can't do that in pandas.

So how do you access a individual row or column.

Well, there's a command called loc for location.

So let's do df.loc[0,:] and what this does it says pick rows zero and show me all of the columns.

You can see that I've have ZN-870-29 Realcube.

This is an object that contains all of the values in the first row.

If we want to select multiple rows, we can just pass a list.

And then this colon here is kind of like, ah, wild card.

So that means Show me all of the columns.

Let's make sure to put a loc in there, and now we can see the first three rows with all the columns and let's say we wanted to maybe just look at a subset of columns.

So I am doing a copy and paste again.

And instead of selecting a certain number of rows, I'm going to put a list of columns here.

So let's just say I want to look at company / purchased / extended amount And we get error here?

Why is that so forgot a comma.

I want to go through the trouble shooting because you're going to get these types of errors when you do the work on your own.

So I want to show how to do that, that we all make mistakes and I'll make typos.

So now we have selected used that wild card yet again to select all the rows and only three columns, and you can combine this as well.

So, for instance, if we wanted to say, Well, let's just get maybe df.loc[1,2,3] So we've got the first three rows in just those three columns.

We can slice the data like we would on a typical pipe on list.

So instead of doing one through three, let's maybe do all.

And instead of having a explicit list, we're going to company colon extended amount, and it chooses everything between company and extended amount.

So if you forget, so you can see company their extended amount so it doesn't include invoice and drops the country and fee.

So this is This is the one of the main ways that you can select different rows and columns, and one of the things that I frequently do when I read in an Excel file is maybe it has more columns than I need.

So I want to sub select a certain number of columns.

So let me show you how it would do that and actually create a new data frame.

So I'm going to create one that's called Company only and use df.loc We want to copy all of the rows and we'll just do company through extended amount.

And one of the things you want to get in the habit of as well is to make an actual copy of it.

So I don't want to reference the original.

I want to create a whole new copy and here we go.

So we've got company only, you can see that has 1000 rows it just has a subset of columns that we care about.

So we talked about df.loc.

I want to talk about using iloc, which is for the index.

So it let's try something here.

It could be times where you want to look at the columns by numbers by the index.

If we do that, we get an error, so that's telling us that it can't do a slice.

So the way we want to do it, there's nothing wrong with this idea.

You want to use the df.iloc[:,1:5].

So if you do, I look then you can choose by index number.

So this is using one through five versus company through quantity.

So the important thing is you've got two different ways to select your rows and columns.

iloc and loc.

Let's do one more example.

Show how iloc works.

This example in of itself is not that useful, but it's really important to start to understand the row and column notation for loc and iloc, and we're going to go through a more powerful way in future lessons to sub select your data.

But this is the intro way to start thinking about it and get familiarity with this concept.

|

|

|

transcript

|

2:15 |

Here's a reference you can use to refer back to to make sure you understand how to use loc and iloc.

The first thing to keep in mind is that it's always rows then columns, and when you want to select a row, you can use a colon to select all the rows.

You can also provide a list, or you can slice the rows, and you could do a similar thing.

From a column perspective, you can select all the columns, a list of columns or slice the columns I loc is similar, but you can use indexes instead so you can select all rows or a subset of rows.

But when you select your columns, you can use a numerical value in future.

Sections will talk about Boolean indexing using these same formats, and it's really powerful way and the way that I normally will sub select the data in my data frames

|

|

|

|

21:55 |

|

|

transcript

|

0:31 |

In previous chapters, we talked about how to get data into a panda state of frame.

Now we're going to talk about what to do with it once it's in the data frame.

One of the most common task that Excel is used for his data wrangling.

When I talk about data wrangling, essentially, what I mean is modifying, transforming, mapping the data so that it is in the format so that we can do further analysis.

Pandas is a really great tool for this type of data manipulation and you can easily transition from data manipulation into data analysis.

|

|

|

transcript

|

0:59 |

it's really important to understand pandas data types when wrangling Data Excel doesn't manage data types in quite the same way.

In this example, I'm showing how you can use self formatting to change the way a date column is display in pandas.

The DFM Folk Command will show you the different data types that are assigned to each column, and for the most part, when you read in, data handles will determine the correct data type.

But sometimes you will have to convert to a specific data type, and we will talk about that in future chapters.

For now, here's a summary of some of the key data types that will be working with and when you want to use them.

The object data type is mostly used for working with text.

The date time is used for working with the date or time or a combination of both.

And if you want to numeric operations, the data type needs to be an end or a float.

There are also some more advanced options.

Such a category Boolean and string data types,

|

|

|

transcript

|

1:44 |

Before we do some work in pandas, I'm gonna walk through a couple excel examples of how we can manipulate a daytime data and then show how we would do that and pandas.

So I've opened up my sample sales details file, and there's a column See, that has the purchase date.

So what if during our analysis, we wanted to get the month associated with that purchase state, we would enter a formula that looks something like this and copy that down to eat row.

We could do a similar thing for the day.

We want to do that for the year, relatively straightforward.

And just for the sake of talking about data types, what would happen if we tried to do the same formula?

But maybe did I'll call him be, too, which is not a year we get evaluator.

So this starts to talk about how you when you're working with Excel, you intuitively know you can't call year on a calm that doesn't have a daytime value So we'll use that concept when we start to work on our hands data frame and then the final thing I want to do an example of is what if you wanted to understand what quarter you were in now, I had to look this up on the Internet.

But if I wanted to know that this first March 5th was in quarter one, here's a formula I would have to use.

I have to use round up and then put in the date and then divided by three been around 20 and the reason I show that form was it highlights how some of the things that you can do in excel with a formula are a little complicated and you typically have to do some Google searching.

But in pandas, there are some easier options, which we will explore in a second.

|

|

|

transcript

|

3:22 |

Now we're going to read in our sample sales data into our Jupyter notebook.

So we'll do the imports.

I went ahead and put those in here, and now you can see the data frame of that represents the Excel file.

And if we do DF info, it tells us that the purchase date is a date time 64 data type, which is good, which is what we had expected.

Quantity, price, extended amount and shipping costs are numeric values.

So everything appears to be in order here.

Here's how we might think about actually accessing the purchase date.

So if we know that we have a purchase state, maybe we could try typing month after that.

And we get an attribute error so Pandas doesn't know how to get at the month And so what penance has done is it has introduced a concept of an excess er and D T stands for daytime.

So now it knows that this is a daytime data type, and there is an excess er called D T, which enables us to get at the underlying data in that column.

And here we want to pull out the month we can do a similar sort of so year works as expected.

And there are some that you may not think of what's try like Day of Week Pandas goes in and Comptel, what day of the week each of those days is and assigns a numerical value to it.

So remember the example we had of trying to get the quarter and how we had to do a fairly, maybe non intuitive calculation for Excel?

Let's take a look at what if we just use quarter?

Ah, so that tells us that Pamela's knows the concept of quarter and can automatically calculate that force, which is really helpful.

And the recent one highlight This is there are a lot of options available once you have the correct data type to make your data manipulation just a little bit easier.

For instance, what if you want to know whether a current month has 30 or 31?

Or maybe it's a leap year.

We can look at days and month so we can see that it calculates a 31 and 30.

We can also see if something is the end of the month.

So none of these examples that are showing just the head and the tail.

But it is a helpful thing to keep in mind as you doom or data manipulation Now, one of the things that you really need to keep in mind is that I did all of this.

But there's been no underlying change to the data frame.

If we want to actually add some of these new columns to data frame, we need to make sure that we explicitly do so.

So what I've done here is I've created two new columns purchase month and purchase year and assigned the month and year to that.

You can see the data frame now has the purchase month and year.

So we are, replicating what we had in our Excel spreadsheet and if we wanted to add one more to the purchase corner.

Now we have our purchase quarter, and you can see that this is March.

The first quarter in this November,

|

|

|

transcript

|

4:22 |

now that we've done some data manipulation with daytime's let's take a look at strings or objects as they're shown.

So remember we have our DFM foe and we've got company We've got invoice.

We've got the skew in the product which are coming through his objects.

So let's say we wanted to turn the company into an upper case Once again, we get the attributes air because pandas doesn't know what we're trying to do.

There is no attributes for pandas Siri's to convert it to upper.

So we need to tell it that we're trying to do a string manipulation and now it works so similar to what we did with dot d.

T.

Now we use dot str and there's a bunch of string manipulations that you will likely encounter as you start to wrangle and manipulate your data.

So this you can lower case your values into title case s o.

Many of the string functions that you would expect to use just in General Python are available in pandas.

Another one that you might want to use is length and similar to what we did earlier.

If you want to actually make sure this gets incorporate any transformation To make it incorporate back into your data frame.

You need to make sure you re assign it, so call it upper company.

And now we have a new columns.

Has upper company that has the company name all uppercase.

Now we've already talked a little bit about how to do some basic math, and I want to just tie this back to the different data types.

So what?

What pandas knows is if you have a data type that in this case is an end or afloat, so it's numeric data type.

Then you could do mathematical operations so you can think about the mathematical formulas plus minus multiplication as access er's similar to what we did for the strings and the daytime data types.

So, for instance, if we have the extended amount and we wanted to multiply it by 0.9, so essentially give a 10% discount, you just use the standard math functions and pandas knows because it is a numeric value It understands what this operator is, so you don't need to use an excess.

Er Pan is smart enough to do that for you.

There is another way to do math in pandas, and I want to highlight it, so you're aware of it.

So instead of using the asterisk like you would just to do a normal math multiplication in Python, there are different operators on this one.

There is when a dot mole there's a division and add, and for the most part, we're not going to talk about those in the in this course, it's generally I would recommend using mathematical operations As you get started.

These types of functions will be useful form or advanced chaining of pandas operations, so I want you aware of it.

But I'm not going to spend a lot of time in the course talking about how to use them.

So to close this out, I want to dio a little more complex mathematical operation.

So let's say we want to create a new price and that new prices 5% higher than the old price.

And so then we've created a new price column that is 5% higher, and then we want to see what the new extended amount is, so we have to multiply that times the new price and the new quant and the old quantity.

If we do that and see this new price is going to be out here at the end 17.

85.

So the old price was 17.

We've added that 5% to it, and then this is the new extended amount.

And if you want to see what the actual total amount is, weaken dio simple formula on that as well.

So this tells us that the original extended amount was 510,000, and now we are at 535,000,

|

|

|

transcript

|

0:44 |

When I'm working with Excel, one of the most common things I use is the Excel Auto Filter.

This is a really useful way to sort and filter your data by multiple criteria.

Pandas conduce something very similar to this and in many ways more powerful using Boolean indexing and the local command.

So Boolean Index, in this example here shows all of the rows that have the company named Viva.

And then we could have another Boolean Index that tells us how many purchases are greater than 10.

And then we can combine these two using the local command to select all of the rows and all the columns where it is company Viva!

And they purchased at least 10 items.

|

|

|

transcript

|

4:39 |

Now let's go through some examples of using Boolean filtering.

So I am going to rerun my notebook and all it does right now is read in the same Excel file we've been using and show the summary data, Frank.

Now let's create our first example of a Boolean index.

So we see that we have a company name called Viva.

And if we want to understand all of the rows where the company aim is viva We can do this expression and what pandas does it returns and equivalent value of true or false, depending at the company.

Name is Viva.

So you can see here in row 9 95 and 96 there are Vivas, the company name and it returns a true there because this is Python We can assign that to a variable to make her life a little bit easier If we look at the Viva Variable, it's the same true false values that we had before Then If we choose to use DF Lok on Viva, then we have a list of all the invoices for company viva!

And what what's happened is this true false list has been passed to Lok and then on Lee, the true values are shown for each row.

There's another shortcut we can use that is pretty common.

I use a lot Instead of using Lok, we just pass a list of the criteria that we want to apply to the data frame.

So here I just say D f and then all those true false values and it returns the same value as look.

So the question might be Why would you want to use this?

The DOT lok approach versus just using the brackets and the reason you want to use DOT lok is If you want to be able to control the columns you return, then you need to use doubt.

Poke.

This approach of just using the brackets can essentially just filter on all the data.

Keep that in mind, and we will go through some more examples to drive that home.

Now we can also do mathematical comparisons, so let's say if we want to understand where we've purchased at least 10 items or more similar sort of results.

So we've got a bunch of truce, and false is for each row that has a quantity amount greater than or equal to 10 and what's really nice is you can actually combine these together.

So now we can see how maney times viva purchased at least 10 items or more We've got to transactions here, and we use the and operator, the ampersand operator similar to you what you would use in standard Python for an and operation you can do and or or just a single value here.

Let's show how we talked about with Lok that we could select multiple columns as well Let's see the purchase date through price and see the difference.

So instead of returning, all of the columns were just returning the ones between Purchase Day and Price.

And this is an inclusive list versus some of the other list approaches.

You might be experienced within Python.

Where that last item are.

The last index is not included.

Remember when we talked about string excess Er's?

We can use these as well to get Boolean lists.

Several of our companies have the word buzz in the name, not necessarily at the beginning or the end, and if we use string contains it will search and find all the instances of buzz and give us another Boolean Index or Boolean mask that we can use.

And let's take a look at some examples.

You can really do some very sophisticated analysis with Boolean filtering this way.

For the final example, we're going to use another string excess er.

Let's do a filter on skew and use the string excess er.

We can find all of the skews that start with F S.

And let's do show how we can do a little bit more analysis here.

Let's get the products as well.

So then we can see.

Okay, there's a skew there.

It starts with poster and combine it with value counts.

So now it's really easy to tell that we have two types of skews shirts and posters, and this is the number of occurrences of each one of those.

|

|

|

transcript

|

3:54 |

weaken.

Apply the same concepts when working with dates.

So here we can filter on the Rose, where the purchase date is greater than let's do rare than or equal to the first of December.

And what is really nice about this is notice.

I'm just using a regular string for the date because Pandas knows that purchase date is a date time.

It converts this to a day tight format in dozen filtering for us as we would expect.

So this is really handy when you're working with date and times and trying to sub select certain portions of a date range similar to what we did with strings.

We can combine these, so let's define a purchase date.

So what we've done here is we've defined a purchase date where the month is equal to 11 and then we want to Onley look at books so we can combine those again using the ampersand.

And now this gives us all of our purchases of books in the month of November.

We can also use mathematical comparisons greater than less than so.

If we want to look at the quantity greater than 12 we can do that as well We could also do comparisons across columns.

So let's say we define something called men order sighs.

It's five.

So we now have a men order size.

So let's assign that to a variable.

Call this small.

And if we want to get a list of all the transactions that were small orders, we can tell there are 176 transactions.

And these are the companies and the products that they purchase, where they didn't get at least five units in their order.

Boolean filtering is going to give us a lot of flexibility as we structure our data It works on strings, works on numbers.

It works on dates.

The other thing I wanted toe walk through quickly is another way.

You can filter the data and I want to highlight it.

So you're aware of it.

I'm not going to use a whole lot in the rest of the course I want you get used to using dot Lok for most of your analysis, and then you can use this other option called Query a little bit later as you get some more experience.

So if we want to query our data frame, we can say, DF dot query.

And if we want to understand quantity greater than 10 it's similar to the way low quirks.

And once again, this is just another functionality within pandas that can make some of the chained assignments that you want to do is get more advanced easier.

But I want to call it out now.

So you're aware of it and also mentioned that DOT Lok will do many of the same things and will actually make it easier in the future when we want to assign an update values.

So that's why I focused on the dot lok approach and would encourage you to do that in the beginning and then move to some of the other more sophisticated approaches as you get more experience.

|

|

|

transcript

|

1:40 |

in the previous examples, I've covered a lot of different content, so I want to summarize it for you.

When cleaning and transforming dates or strings, you'll use functions like year, month or quarter that actually return a value or string functions such as Lower Upper, which will transform a value to do additional calculations or add new columns to your data.

If you want to filter the data, you need to use functions that return a true or false Siris of values.

So for dates is month start or his quarter starter example, and then string has various search functions that you can use to determine if the value meet your criteria.

But the end of the day you'll need to refer to the pandas documentation for all the available methods.

And when you're working with numbers, they're similar approaches.

So comparison options such as greater than less center equal to you can use either comparing absolute numbers or other columns their equivalent functions, such as greater than less than or equal that you can use as well.

But I encourage you to use the numeric functions as you get started and get comfortable with Python, and then when you need to use the additional functions.

You can do that in the future.

And then, from a math perspective, it's a similar approach.

You can adds track, multiply and divide either whole numbers, floats or other values and columns, as well as using theme math operation functions.

But once again, I recommend that you use the standard math nomenclature until you get

|

|

|

|

26:02 |

|

|

transcript

|

0:59 |

in this chapter, we're gonna talk about aggregating grouping emerging data with pandas.

So what We mean when we say aggregating data, here's an example of what you would do in Excel.

You may not use the term aggregation, but what you're doing is getting a summary statistical view of a set of data.

In this example, we want to see what the average prices of column F or the standard deviation of that price.

And you use the standard Excel formula in this case, the average formula on all the values in column F.

If you'd like to do something similar and pandas, you can use the ag function on a column and pass it a list of different functions that you want to apply.

In this case, we compute the same mean and standard deviation on the price call This could be very powerful, with multiple functions and multiple columns, all called it once so you can get a really nice summary of your data using pandas Pandas approach encourages you to work on the entire data set using very powerful commands

|

|

|

transcript

|

1:05 |

Let's go through a couple examples and excel of how we would do an aggregation in this case, if we want to calculate the average of the price column, we would simply do average of all the values and f if we wanted to do this stair deviation and be a similar process and a little more complicated.

What if we wanted to understand how many shirts, books, posters and pens were sold?

One of the ways we could do that in Excel is to use thesis, if function, so we need to figure out the range.

This will be the range bacteria is shirt and what value we gonna Some were some of the equality, and then we can see that we sold 62 hundreds shirts and drag this down, and we get the total amount of products sold based on each of the different products.

This is fairly standard excel functionality,

|

|

|

transcript

|

8:44 |

Now let's go through some examples of how to aggregate in group data in Panis.

So Ivory started this notebook to read in my sample sales data and take a look at the top five rows, the data like we have been doing in the past So now let's try and do a simple aggregation of the Price column.

If we use the ag function and call that on the price calm, we can pass in a list of one or more functions to apply to that column and get the results.

So in this example, I did the mean function, which tells us that we have an average price of 22.8.

I can also do something like dinner deviation, or I can do men Max, and there are many different aggregations you can apply.

But this basic feature that we use on a column we can also use when we group data, which I will show in a second.

One interesting aspect about aggregations is that you can run it on an entire data frame as well, so we can say and pendants will then run a mean in a max on all of the columns that it can, and so you'll notice that it will run the functions on day columns, object columns, numeric columns and in those places where it can actually do a compass Correct calculation.

It returns this in a N, which means not a number function.

We can define a dictionary that has the key as each column name and then a list of all the aggregation functions we want to apply on those columns.

So let's go ahead.

And to that, just a show what I'm talking about.

So if we want to get the average quantity, or maybe let's just let's do the total 20 and then for price, we can pass our standard deviation in the mean we can all student invoice and maybe for this one I want to count.

So this dictionary says for each of these columns, we want to perform these operations on those columns, and then if we want to actually apply it, we two DF AG and add the AG columns to that so you can see here that it went through each column and it did various functions for each of the calm So I said, Let's get us some of the quantity, the mean and standard deviation price, the count of the invoice and the some of the extended amount.

And we have those in a in values there because pandas is constructing a single table or data frame and doesn't know what fill it in.

So it will just place the in A in there.

If you want to fix that so that you get a zero, we use fill in A.

And that function will fill all of your in a N values with zero so that we've talked about aggregating.

We're gonna talk about grouping because they really go together.

While you can aggregate on a data frame by itself most of the time you're going to group first.

So let's do a quick example.

Room in group.

Buy everything in the product column, and then we tell it the some.

So what this does is a groups, all the products.

So we have four products that has grouped together, and it's summed all the numerical.

so we know the total quantity, the total price and the extended amount.

But you think about this.

The some of the price really doesn't make a whole lot of sense.

So maybe what we really want to dio is just get the quantity was copied.

That and what we can do is tell it.

So now we've said group byproduct, but just run the some operation on the quantity column, and this functionality works.

But it's not the preferred way that I like to do aggregations in pandas.

And there's the structure that makes the most sense for dealing with more complex grouping and aggregations.

So I define a prod calls.

So I say in quantity column what some that.

So if you followed what I did on the aggregation, I'm going down that same path.

So now I say, group by product and now I ag broad calls.

So, combining what we've talked about earlier with aggregations and group buys, we defined the columns that we want to perform mathematical operations on.

In this case, we want to some all the values in the quantity.

We want a group by product and therefore we have be counts or our books, pins and posters, which, if you recall, is very similar to what we did with be some ifs function in excel.

So the groupie and aggregation that you've been doing an Excel.

This is just a different way of doing it, and it's really more powerful because you have a lot of flexibility and a very small subset of code to do at a lot of summary information and grouping on your data For instance, copy and paste this, but let's say we don't want just do the some.

Maybe we also want to see what the average size of each transaction is.

This starts toe give you a feel for how quickly you can iterated through analysing your data.

I just adding in the mean function, you start to get mawr information about what the purchase patterns look like, and we can go back in and out of state or deviation.

maybe we want to see what's the max.

Someone is purchased, and this gives us a really quick way to reiterate through our data and keep looping back and making adjustments and figuring out what insights we can get from our data.

And we can also do our group by on multiple columns.

So right now, up until now, we just grew by product.

But what if we want to see company in products Now we're gonna get more results.

So we're gonna see for each company what products they purchased.

The total amount of the average Mount Sandy eight deviation, the max in the men.

And once again, we have our Entei ends.

And what that's telling us is there are some negative values or some other values in there that are causing the standard deviation to not calculate correctly.

So if we want to, if we don't like, be in ends, we can fill in a was zero to turn those into zeros.

And one of the things you likely noticed is that once we do these aggregations, our indices and our columns look a little bit different.

And what what Pandas has done is put together a multi index force.

I'm not gonna go through the details of what a multi indexes or why you would want to use it.

But I am going to introduce this concept of doing reset index.

And once you call that you can see now that instead of having company and then product and book pin poster group together, it's reset so that there's one row for each value.

And I highlight that because sometimes some of the future summaries and analyses that you want to do are much easier when you've reset the index this way.

So I want to introduce that concept to you.

Now, for the final example, we're gonna introduce a concept called named Aggregations.

So probably the best way is just Ah, walk through this and then I'll explain what I'm doing.

Okay, so let me walk through this what we've done with our named aggregation here So we want to count the number of invoices that each company company has.

And then what is there?

Max Purchase?

And instead of just leaving the columns to the default naming convention based on the extended mountain invoice actually defined the column name.

So this says that the invoice total should be the name of the column, and Max purchase should be the name of the call.

Now, this is really helpful as you start developing these aggregations and want to present the results.

Other people you want to make sure that the column names are consistent

|

|

|

transcript

|

0:36 |

Here's a reference for the group by function.

This is the invoice level data we reviewed in our example.

And here's a group by function.

We want to perform our mathematical operations on the quantity and extended amount columns, and then we want a group.

The data based on the company and the products are resulting table.

Looks like this.

The some and mean of the quantity, as well as the some of the extended mount are shown here.

Here is a list of all the aggregation options that you can use.

I encourage you to try these out and get familiar with how they work.

|

|

|

transcript

|

1:33 |

within Excel.

The pivot tables probably the most common way that most people group and aggregate data and pandas has a similar functionality.

In this example, we have a pivot table that will summarize for each company and each product how much say purchased.

And we can use a similar Pandas command to do the same thing.

So here's the pandas command.

And instead of passing the Rose, which is the terminology that Excel uses, we use index.

And in this case, we tell it to index on the company.

And then we specify which columns to include across the top of the table.