|

|

|

20:21 |

|

|

show

|

0:53 |

Hey there, welcome to MongoDB for async Python with Beanie and Pydantic.

I'm so excited that you're interested in this course.

It is such a cool set of technologies that we're going to bring together here and it's really, really empowering.

In this chapter, we're going to introduce the course, talk about MongoDB a little bit, why people love it and care about it, some of the libraries and frameworks we're going to use, how do we use async Python to talk to MongoDB, how do we do that with Pydantic and Beanie.

And we're also going to talk about what you'll learn throughout this course.

Just going to get everything set up, set the stage, and then we'll be able to dive into actually writing some code soon.

I hope you're excited for this journey.

It's going to be a great time.

Come along with me.

|

|

|

show

|

3:06 |

When you think about databases, MongoDB is really popular and really loved.

Let's look at the stack overflow trends that shows the popularity of different databases over time on their platform, which is a pretty good proxy for general interest in a technology.

So you can see here that when MongoDB launched in 2010, it took off pretty quickly and caught up with Postgres.

Postgres has actually been growing quite a bit here, which is really impressive.

Postgres is definitely coming on strong as well.

There's Microsoft SQL Server, which was this brown line, used to be super popular, but it's certainly trending down and now is, I guess, depending on how you measure it, a little under or just tied with MongoDB, which is saying a lot given that database's usage inside large enterprises and medium to large businesses.

So MongoDB, while not the absolute most popular database, is certainly one of the most popular databases out there.

Which one's the most popular?

Postgres?

No, I would say the most popular is Excel.

Now, I mean, it's not really a server, right?

But people use it for one all the time.

But amongst the formal database servers meant to handle lots of traffic with concurrent users in a traditional ACID type of sense, well, MongoDB is certainly up there.

MongoDB is also very loved from the 2022 stack overflow survey, the most recent one we have results for now in 2023.

We can see that it is one of the most loved databases.

Again, Postgres is up there, right, but it's a relational database.

I know it has JSON fields, but it's not the same.

MongoDB is certainly the most popular document database and honestly, compared to pretty much any other database whatsoever.

It is one of the most loved out there.

They do have Redis here.

And I would say Redis is not really a database.

I don't know why they decided to put this in here.

So in my categorization, MongoDB is the number two most loved database as well, which is pretty awesome, honestly.

And finally, MongoDB from that same survey is also very much wanted.

When they ask, Hey, is there a database technology that you are not currently using that you want to use?

Well, again, as you might expect, the popular ones show up Postgres and MongoDB are the most wanted, again, even over Redis in terms of compared to how it was loved, I guess.

So MongoDB is a great choice, because many people want to use it, you'll be able to find teammates excited to work with you on it, be able to find people who are excited to take jobs or do consulting gigs to work with you.

And if you're on the other side, if you have MongoDB skills, you'll be very valuable to teams looking to invest in Mongo.

So it's not the only thing that matters about databases, whether or not they're popular and loved and wanted, but it's certainly not bad, is it?

|

|

|

show

|

3:44 |

One of the reasons that MongoDB is loved is because it's fast.

Not only is it fast, it's actually quite easy to build applications that are fast with MongoDB as well.

You don't have to think so much about joins and all of these types of things, as we'll see.

So you're taking this course here at TalkBython training.

Let's give you some numbers.

Let's give you some perspective on an app that I hope I imagine felt really quick to you and how that's working running on top of MongoDB.

Over here we have our courses page.

Here we've got quite a number of courses.

We've got which ones are new.

We've got which ones are free.

We've got all sorts of information about them, when they were released, their pricing and so on.

Now on this particular page, you can see it has account and logout, so it has to have information about who is logged in, as well as the courses and other things.

So on this particular page, there are four separate MongoDB queries, plus the page logic.

And if we measure the time of request hits the web server, not the database hits the web server, does all that logic and processing can it calls MongoDB four times, it takes those results back, it turns them into Python objects, then it turns that into HTML and HTML is then rendered back out so complete round trip, not just to the database, but actually through the web request and the end, those four requests, only 33 milliseconds on average for this page, 33 milliseconds and this is on a 10 or $20 server.

This is not on some insane, hugely expensive server running in the cloud.

It's a pretty standard one.

So if most websites out there had 33 millisecond response times, the web would be a better place, wouldn't it?

One more.

When you go to watch a course on our website, there's a whole lot of information that has to come back.

Maybe the course has 50 or 100 videos that are associated with it.

We like to keep our videos really short so you can jump around and use them as reference.

We need to know how long is this course, what is this repository, what are all of its chapters and lectures, do those lectures have transcripts, again are you logged in, do you have permission to access this, many things.

For this page, we actually have 10 MongoDB queries, which surprised me when I went to check that out.

That's fine.

10 queries plus the page logic and some of those collections, MongoDB's term for tables, have millions of records in them.

And we need to get many results, but you know, focus down to you and this particular course, but still many results back potentially from those millions that are in there.

How long does this take?

It must take forever, right?

It must just spin and spin.

Nope.

had it an entire four milliseconds for this particular page.

None of this is cached, not the example before, not this one.

These are live requests going through Python talking to the database every single time.

How awesome is that?

Such a cool system we got going here.

Not because we're awesome, but because we built on awesome technology such as MongoDB.

of the techniques I've used to make this page incredibly fast.

I'm going to teach you throughout this course.

Some are obvious, some not so much, and I think you'll really enjoy it.

|

|

|

show

|

2:41 |

Another important technology that we're going to focus on in this course is Python's asyncio.

When you see that async and await keywords in Python, that's what we're talking about.

Now, many of you may have preconceived notions about parallelism, about multiple cores and multi processing and threads and all those things.

And in Python, well, pretty much any of the asyncio frameworks, even outside of Python, they're a little bit different.

different.

So for example, if you were to go create a thread in Python to try to do concurrent work, first of all, you'd hit the gill, that's a bit of a problem.

But it would go and create an operating system thread that does something separate for that work.

And then you can sort of exchange data through shared variables.

Multiprocessing is like that as well, but it uses a separate process.

But asyncio, it's a little bit different.

By default, in asyncio, there are no threads, there's one thread, the main thread that you're working on, it does all that work, all that concurrent work on the same thread.

But it does it by saying, let's take instead of doing one big block of work, like let's query the database and wait for MongoDB to respond, it says, let's begin querying the database.

And then we're also going to have another part of that work, which is receiving the response and then you'll maybe deserializing it and so on.

We might be doing other work at sort of the same time.

So the idea is we're going to break up these jobs, like let's say this green one is querying MongoDB.

Instead of just one big long blocking bit, we're going to break it up into these pieces.

And where we're waiting, we can interleave other ones.

So for example, we can have some task like talking over a network for an API call might be happening here.

This one here, this one's MongoDB.

First we start the query, then we check on it, then we get the response, and then we deserialize it, so on.

So we're going to spend a whole chapter talking about how does async I work?

How do we do this with Python code and making sure your foundations for asyncio are really solid.

Now, a lot of times people say, concurrency is really hard.

Don't do it.

Parallelism is super hard.

All you're going to do is make mistakes.

Don't do it.

I don't really believe that it's not that hard.

The code that you write is almost identical.

There's a few new keywords, but they're pretty straightforward to use.

you kind of got to get your mind into a different space, but programmatically it's not that different, but there's a ton of advantages as we'll see throughout this course.

And what's really awesome is Beanie is going to allow us to do async I/O against MongoDB.

|

|

|

show

|

4:28 |

Speaking of Beanie, we're going to be using the Beanie ODM.

What is that?

Well, you look at their doc here, it says Beanie is an asynchronous Python object document mapper for MongoDB, and the data models are based on Pydantic.

Let's take that apart.

Asynchronous, that means we can use or I guess really have to use async and await to talk to it, which means Beanie will automatically break up interacting with the database into the hotspots where we're actually working with it, and allow us to interleave other work.

While we're waiting on MongoDB, or on the network, or on DNS, or you know, whatever's happening to complete that database query or insert, we could be doing other database calls also to that same database server.

Or we could just be processing more requests, we could be calling API's, who knows what but asynchronous like we just talked about, it's going to allow us to do that.

Object document mapper, ODM, you're probably familiar with the term ORM, but document databases, of which MongoDB is one, generally don't have relationships.

There are ways to model relationships, but they're strongly de-emphasized.

So what we get out of MongoDB are documents, not records, and so we have an object document mapper just a little bit more accurate acronym.

But think ORM, but for document databases.

And finally, Pydantic.

Pydantic is an incredible way to model classes.

It supports serialization from JSON, it supports transformation and validation.

It's type safe, really, really cool technology.

And if you're using things like FastAPI or other tools that use pydantic?

Well, guess what, it's really awesome that your database model, and maybe your API model can be compatible, or maybe even the same some of the time.

So beanie, a singers Python ODM, or MongoDB based on pydantic.

Really awesome.

Now before we dive into learning about MongoDB and some of the asyncio stuff and pydantic foundations, I want to give you a quick, quick glance, a little bit of a preview of what it's like to create a class that matches a MongoDB document and how we query that with Beanie and async and await.

So in this example here, we've got some code, we create an embedded part of our document called category that has a name and description.

And then we have a product that has also a name and description a price, this one is indexed.

And here you can see the embedded categories there.

Also in the class, we have a inner class called settings that allows us to configure how the ODM match it maps over to MongoDB.

For example, what is the name of the collection, what indexes are there, and so on.

Pretty clean way to create classes, right?

And once you have those, how do you query them?

Well, there's a bunch of cool examples on the documentation.

But let's just see how we might go to our product database and find all the ones that are in the category chocolate.

There's a few interesting aspects here.

Notice it says chocolates equals await product dot find.

So instead of just saying product dot find and blocking, we say await that allows us to break our code into these smaller tasks that we saw earlier.

So that's the only difference to do the async stuff, but it's awesome, right?

And then notice our product class had the categories field in the categories had a name and a description.

So we can automatically traverse that hierarchy within the document by just saying product dot category dot name, equal equal, the value we're trying to search for, as well as the price being less than $5.

So this query is going to give us all the chocolate items that cost less than five units, I'm guessing dollars, euros, something like that.

And then we can call to list to serialize that in memory into a list.

Alternatively, it could have been a cursor that we could work across, but to list is good if you want to have it all at once.

All right.

So really cool way to create classes that model documents, including the nested ones and a really slick way to query them, both from a how do I talk about the query itself as well as the async and await.

|

|

|

show

|

3:44 |

What are we going to cover in this course?

Well, we're going to have an introductory chapter that tells you about everything like this.

You are here.

You know what that's about.

We're going to talk about what are document databases and how do they work in general, as well as a technical overview of some of the MongoDB internals, how it works, how does it allow you to query things that are nested deep within some kind of document, as we just saw.

We're going to do a few foundational topics.

And we saw these important building blocks, asyncio, Pydantic and so on.

So we're going to have a few chapters that make sure you really understand those super well, because they're an important part to making all of this fit together.

First one of those is Pydantic.

Then we're going to do asyncio as well.

Then we'll take a couple passes about building with and learning about Beanie.

We're going to do a Beanie quick start.

Like what is the simple, minimal application and that we can get set up to just see all the moving parts and how it works.

And then we're gonna look at modeling a cool, well-known place, well-known dataset with documents or how that might look and what is the difference of modeling if you have a relational database and you're aiming for third normal form and foreign key constraints and all of that?

Well, how does that change in a document database?

We'll talk about that.

Speaking of a realistic data source, We're going to model the PyPI data, so pypi.org with packages and releases and maintainers and users, all of that.

We're gonna model that with Beanie and Pydantic classes.

And then we're gonna take that classes and the Beanie interaction that we've already created with the queries and collections and all that.

And we're gonna plug that into FastAPI and give us a really cool example of, Well, here's an end to end API talking about this pi pi data that we've modeled, but also how does it fit into a web framework, especially one that's pedantic friendly, let's say, when I told you that MongoDB was fast, there was a couple of techniques that I had in mind, because it's not always fast out of the box.

If you just throw a bunch of data in it, and you start doing queries, you might be really disappointed, could be really, really slow for a whole bunch of reasons.

So we're going to look at all the ways and knobs that we can turn, as well as programming techniques we can apply to make MongoDB go from who knows, maybe a one second response time to a 10 millisecond response time, you know, 100 times faster, maybe even 1000, who knows.

And finally, we'll talk a little bit about how do you deploy and host MongoDB.

There are database as a service or MongoDB as a service places that you can use.

And if you want to use them, great, you're kind of don't need this chapter.

But many people don't want to put their data in the cloud or for various operational reasons or even pricing reasons, you might decide to deploy it differently.

So if you want to self host MongoDB, either internally or in the cloud next year apps, we'll see how to do that.

And finally, in the performance section, we made our code faster, kind of in isolation, It would be cool to see, well, how fast is our API overall?

So we're going to do some cool load testing on not just MongoDB itself or Beanie queries themselves, but the entire system that we built to wrap up the class using a really cool load testing framework called Locust.

That's it.

I'm really excited about these topics I put together for you.

I think it's going to be a really fun sequence and you're going to learn a ton.

|

|

|

show

|

1:15 |

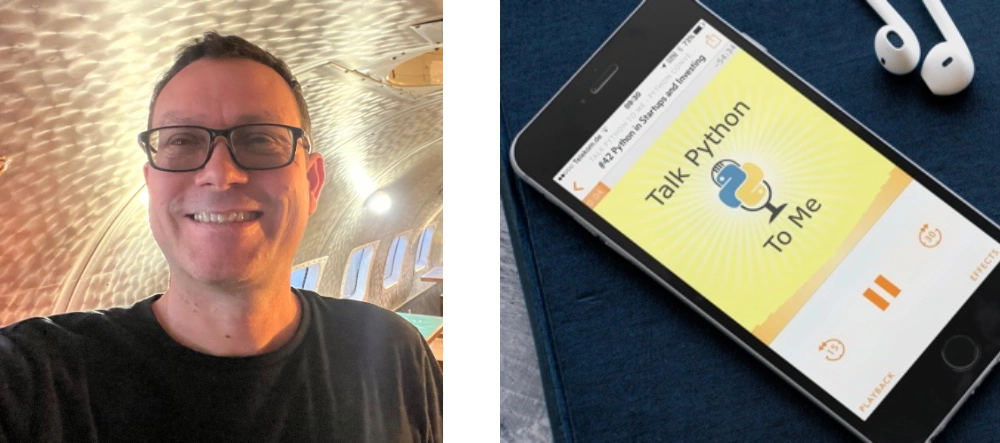

Finally, before we wrap up this introductory chapter, I want to just introduce myself.

You see me hanging out down here in the corner for a while now, but I haven't told you my name or anything about my background.

My name is Michael Kennedy.

I'm super excited about teaching this topic.

I've used MongoDB for a really, really long time.

Talk Python, all the sites, the Talk Python podcast, as well as training courses are powered by MongoDB.

And I was even for many years part of what they called the MongoDB masters group, which is kind of like an MVP external advisor group to MongoDB.

So if you want to stay in touch with me, you can find my blog over at mkennedy.codes.

Of course, I'm the host and founder of the talk Python to me podcast, co host of the Python bytes podcast, and one of the principal authors here at Talk Python Training.

I'm also a Python Software Foundation Fellow.

And finally, if you actually want to talk to me directly, find me over on Mastodon where I'm at mkennedy on fosstodon.org.

So great to meet you.

Thanks for coming the course.

|

|

|

|

0:30 |

|

|

|

10:57 |

|

|

show

|

2:22 |

It's time to get started with MongoDB.

Now, some of my courses, I don't bother to put a detailed setup chapter together because usually it's find a Python editor and start writing code.

But with this one, there are a lot of moving parts in terms of tools, none of them particularly hard to get or to set up, but you just need them in place.

So in this chapter, we're going to work through the various things that you need and how to get them set up on your platform.

Let's go.

First of all, does it surprise you that for a Python plus MongoDB course, you're gonna need Python?

Of course not.

But you do need a modern enough version of Python.

Now, in terms of the core features, for the most part, we'll not really be doing anything beyond Python 3.6.

However, there will be a few times we'll use Type-Ints, I'm sure, type hints were greatly improved, I think in 3.9.

So let's just go ahead and make sure that you have the latest version.

Instead of walking you through that, I put together a detailed article here at training.talkpython.fm/installing-python.

Go over there and check it out.

No matter what your OS is, it will walk you through the steps needed to see if you have Python, check out your version.

Hopefully you're using Python 311 or greater for this course, and then we'll be exactly level because that's what we're going to be using.

You're going to need to get some data files and setup scripts ahead of time before you can import the data and start using it.

We're using a well known data source, the PyPI data, and I've structured that in a special way you can import into MongoDB and that we can use.

So make sure that you go to the GitHub repository, a URL here at the bottom and star it and consider forking it as well and check it out, clone it.

If you're not into Git, if you don't do Git, no problem, don't worry about it.

Just click that green code button and there's a download it as a zip file too.

So however you get it, just make sure you grab it here so that you have these files to work with.

Right now at the beginning of this course, it doesn't have all the code we've written because I haven't written it yet.

We're doing that live, but when we do write that code, you'll see that it'll show up here as well.

|

|

|

show

|

2:14 |

Of course, you're also going to need MongoDB.

There are database as a service platforms where you can connect to MongoDB remotely, but I recommend you just install it locally for this course.

Keep it plain and simple, okay?

So if you visit this URL here at the bottom over on mongodb.com, it will take you through how to install MongoDB for your platform.

Whether you're on Linux, macOS, or Windows, they'll have you covered, okay?

Okay, so it's really important that you install MongoDB.

Many of the places you install it will suggest that it runs as a service or daemon that just constantly runs on your platform, on your OS when you log in.

You may not want this all the time.

You know, if you're not really doing MongoDB stuff frequently it's just taking up memory, a little bit of CPU time.

So you wanna turn that off.

But for this course where it talks about starting it as a service that just runs the Windows service or a macOS service, whatever it be, brew service.

Go ahead and do that just so that it's always running while you're going through this course.

And then you can tell that service to stop and not auto start if you need to.

So make sure you get MongoDB going, just follow the steps here.

This is important.

You'll also need a couple of command line tools to work with MongoDB.

MongoDB is queried through what's called the MongoDB or Mongo Shell.

And the command that you type to get that is mongosh or mongo sh.

Here, so you're going to want to download and install this in addition to MongoDB, the database server.

They're not included with each other.

So get MongoDB, the server and the MongoDB shell.

And finally, to import and export data and to look, if you care for things like the performance, CPU load, memory load, in real time in MongoDB itself, like monitoring tools, you can get the database tools itself.

Most importantly, we'll look at Mongo Restore and Mongo Dump, that is, import data and export data for the databases themselves.

So that's why you're going to need to get this third set of tools here.

|

|

|

show

|

1:16 |

So far what you've downloaded and installed will be the Mongo shell and another one, an older more legacy style shell to talk to and query MongoDB.

Technically you could do everything there, but I strongly, strongly recommend getting some sort of GUI tool to work with MongoDB when you're trying to get a quick overview or work with, You know, look at a table, see what its indexes are, and then try to explain those queries back to you in a nice concise way as we'll be doing in say the performance section.

So let's go ahead and download and install Studio 3T free.

So Studio 3T is a fancy paid tool that you can use to talk to MongoDB if you have it or want to get it, there's a trial for it, you're welcome to.

But we're not using that for this course, we're just using the free edition.

So here you can see I'm downloading Studio 3T for Mac.

Notice there is a drop down to choose either Intel or Apple Silicon.

Make sure you get the right platform there, but it also supports Windows and Linux as well.

So you should be good to go.

Just follow the URL here and download and install this.

It's a simple application, but I think you'll find it really valuable for working with the database and exploring our data when we're not writing code.

|

|

|

show

|

2:35 |

As I mentioned at the beginning of the course, we're going to be using data from PyPI.

Now the data we're using is actual real live PyPI data I've gotten from one of the APIs where you can query and export information about the packages.

So we're going to be not working with the website but just the data side of PyPI.

In order for you to do that, over here I've got a data folder in the course repository.

And there's a readme talking about how to do all these things.

So it says, follow the steps we've already discussed in the video.

So make sure you have MongoDB and the management tools installed and that MongoDB is running and the management tools are in binaries or in your path, okay?

Then we need to download the data.

Now I put this online in a MongoDB format for you right here.

So let's go ahead and download that data.

See, we got it in the downloads folder there.

And it's in this bson form binary JSON that MongoDB knows and understands.

Okay, so we're going to need to work in that folder.

Then it says you just need to run this command here, Mongo restore.

That's one of the tools that came with well the database tools you installed.

And it says --drop.

Be very careful that says if there is a database called PyPI, we're going to wipe it clean from your system.

And then we're going to import everything here as the complete representation of the PyPI database and the dot slash means this folder.

So you got to do it in the right folder.

Over here, I will say, open up a new iTerm window.

You can say new terminal if you don't have iTerm.

Here we have our files.

So I'm just gonna run this command.

First of all, we can ask which Mongo to restore just to make sure that it's in the path.

On Windows, you can't say which, you say where, basically the same thing.

But make sure this comes up somewhere.

Then we're gonna run this command.

You can see it did a bunch of work, reading the metadata for packages, release analytics and users, install those and notice it says no indexes.

I made sure that we started out with kind of a naive database here that doesn't have any of the performance tuning, extra indexes and things along those lines, set up yet, we're saving that for another chapter down the road.

But we've got 9,188 documents, zero failed to restore.

That sounds good to me.

So it looks like we probably have it imported into MongoDB successfully.

|

|

|

show

|

2:30 |

Now that we think we have our data installed, let's go ahead and connect Studio 3T and just have a look and see what's in there, okay?

Also, you can go ahead and completely delete that folder and download of the import data.

It's copied somewhere else in MongoDB's internal WiredTiger format, most likely.

So if you have installed Studio 3T, fire it up.

Now I have some older connections for various things hanging around.

let's just start from scratch, assuming you have none, you could paste your connection string here, or you could use the GUI, I'm just going to say use the GUI.

And let's give it a name, we'll just call it local.

And I'm gonna call it local to because I already have a local, but you just call it local.

And it's going to be a standalone connection type to the default MongoDB on localhost.

None of these other things need to be messed with in this simple early version, when we get to deployment, we're going to need to look at these other pieces.

So hit test looks like it's all going again, you must have MongoDB running for the import of your data as well as this here.

Hit Save.

And then let's connect.

Over here, you can see there's a bunch of databases can ignore most of them.

These are operational databases for talk Python and Python bytes and and various things.

This one comes, these here come with MongoDB.

But see this PyPI, that is the one that we're looking at.

So we got our packages, and we got our releases, and we got our users, you can double click it to see what is in here.

It says there's a bunch of data.

So it looks like you've got some data in here, you can also see how many packages there are you could type dot count, run it again.

4892 packages imported there and so on.

Alright, we'll talk more about this query syntax and so on.

But want to make sure you get connected and that you see a PyPI database with three collections.

Remember collections are what MongoDB would call a database table.

Because it's not necessarily tabular, they have a different name collections.

Yeah, and also you can just hover over these and get stats about how many items are in there.

For example, packages can just hover over it and see there are 4892 that got imported.

Excellent.

So it looks like we've got all of our data imported, and we've connected our GUI tool to work with it.

|

|

|

|

24:17 |

|

|

show

|

1:03 |

In this chapter, we're going to talk a little bit about how document databases work, how can you do queries into these weird structured JSON records and that kind of thing.

And then we're going to focus on MongoDB's native query syntax.

This is going to be mostly a sort of for your information type of thing.

You don't need to go in there and do complex queries in the MongoDB shell or in its native query language for your applications.

However, it will be very useful for you to be able to open things like Studio 3T or the Mongo shell and ask questions about your data.

And for that, you'll need to use this query syntax we're going to talk about here.

But programmatically for your Python code, we will be using pydanic and beanie and async and await.

So this is important, but not the main way that you're going to work with MongoDB.

Still understanding what's possible in and the query syntax is a really important part of getting good with MongoDB.

|

|

|

show

|

3:26 |

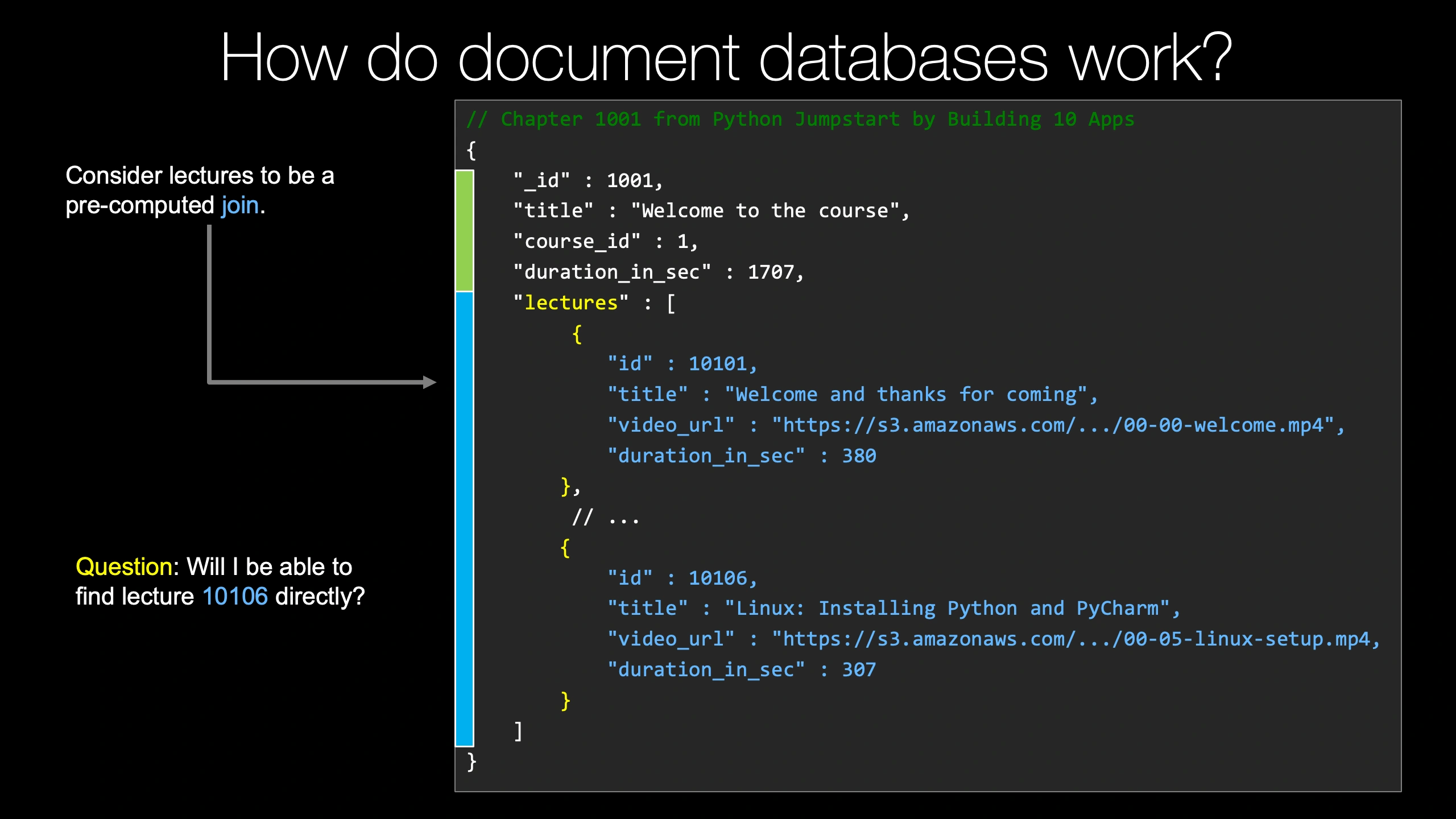

How do document databases work?

Well, let's look at a real world example from TalkByThun training.

Here is one of the chapter records that we store about our courses.

For our chapters, we have information about them as well as all the videos and details about those videos that make up that particular chapter.

So we're going to walk through this one real quick and see how we might understand it, how we might ask questions about it, and so on.

You can think of it as having kind of two different aspects, a traditional database aspect, this green, and something weird and unique, like a foreign key constraint or a join or something like that in a relational database for the embedded records.

So we have traditional columns, if you will.

They're not called that in MongoDB, but you know, from a relational database, that would be the analogy.

We have ID, underscore ID.

This is always the name of the primary key.

And then we have title, course ID, and duration in seconds.

All of those are standard things you could model in any other relational database such as Postgres or SQLite.

Then we have lectures.

And notice that lectures is not just a foundational type like a number or a string.

It is nested objects.

So we've got details about the different lectures, the welcome and thanks for coming, the Linux and installing, and so on.

So you can think of this part here as like a pre computed join.

And this is part of what makes document databases super, super fast.

At runtime, when you ask questions about the database, instead of going, well, first, we have the chapter and now let's go query stuff out of this big lecture table, bring a bunch of pieces together and compute something about that and then return those results, you just say, ""Give me something by ID.

Here is the answer.

And it already comes pre-joined with this lecture data because for us, if you need details about the chapter, you almost always need details about the videos in that chapter as well, the lectures.

So we modeled our data to say, ""Well, if you're going to need them together almost all the time anyway, when you get one, just return them all.

That's great.

I'm sure it sounds awesome in terms of performance, but there's an interesting question, like can you ask questions about this data like you would be able to if they were not mushed together in this pre-joined nested way?

So for example, what if you just want to know I need the chapter that contains the lecture with ID 10106?

Is it possible to even answer that question?

Is it possible to answer it quickly, say with an index?

And yes, absolutely, you can do those kind of queries.

You can ask questions about arbitrarily deep nested objects, and you can do that with an index.

So it would be just as fast to say, give me the chapter that has a lecture with this ID as it would be to ask for the chapter itself by primary key, for example.

And that's why this nesting is still productive and useful because you can still do high-performance queries about the nested data, not just the top-level data.

|

|

|

show

|

3:01 |

Let's highlight a couple of high profile users of MongoDB.

There are many more that are not in this list.

These are just the ones where write-ups have been done by MongoDB.

But if you go to mongodb.com/who-uses-mongodb, you get this page.

And over here, you can sort of peruse through your industry and see who else is using MongoDB and how.

This is really useful if you're considering adopting MongoDB and maybe there are people higher ups on your team or others you're like, I'm not really sure this would actually work for us.

Like we do e-commerce.

I don't know if you can do e-commerce with MongoDB.

Can you I've heard that's not a good idea.

And it's still it's a good idea.

But what's cool is you can look through here and find competitors or other companies like yours doing similar stuff and you can see well, how's it working for him.

So let's just hit a few highlights here.

Toyota said moving from a relational model to a document model and microservices with MongoDB Atlas.

So they're using MongoDB as a service, sort of, through MongoDB Atlas.

And Azure helps to create smart, autonomous, safer factories of the future.

So they're kind of automating their factories.

That's pretty cool.

This Sonoma company does 12 million learning exercises a day in MongoDB.

That's pretty awesome.

Forbes said they had a 20% increase in subscriptions and reduced total cost of ownership by using MongoDB Atlas, but on Google Cloud instead of Azure.

Presumably this is because their site is faster, right?

There's a certain amount of people who just decide to quit and go away if your site is too slow.

That number is really small, by the way.

So you definitely want it to be fast.

So here you can pick your industry or your region.

I'll just scroll through and see a few more that jump out.

GE Healthcare turned to MongoDB to manage their lifecycle of medical IoT devices, which is pretty excellent.

Volvo Connect is using MongoDB Atlas again, and MongoDB as the backbone of its interactive customer portal, that's cool.

One more here, maybe before we move on from this list, Shutterfly, they have like photo books and other photo sharing types of products.

They migrated their multi-terabyte dataset to MongoDB Atlas in minutes, bringing billions of photos to life for millions of people.

Pretty awesome.

And for all these, you can read the customer story.

I'll throw one final one here that's not on the list that I know of anyways.

Talk Python.

Talk Python training, the podcast, and all that.

As we already mentioned, the courses page, super, super fast.

It just kind of blinks there.

All of this is of course powered by MongoDB as well.

So if you're looking for a comparable to what you're doing, you know, have a look through that list.

Maybe it'll help with the adoption of MongoDB at your org or on your project.

|

|

|

show

|

2:12 |

In this chapter where we talk about the native tools and the native query syntax, we're going to use the Mongo shell for some of these examples.

So this is the mongosh or mongosh command that I had you install in the setup chapter.

Now in order to use it, we would just type mongosh or mongosh and it opens up.

If you type just by default, it's going to connect on localhost on the default port and all of those things.

You can also, of course, connect to remote servers with the right command line arguments.

You would say, ""Use your database.

So you connect in this way, in this case, ""Use training.

If you're not sure what the databases are called, you can always say, ""Show DBs,"" and it'll list them out.

Then you can say, ""Use one of them.

And then you write queries about the data in there.

So ""Find"" is basically the ""Select"" type of statement.

do, the way it works is you say DB, always always DB, this is directed at whatever the active database that we set in the line above.

And then you talk about the collections and then find so we have a collections collection called courses.

And then we want to find documents inside the courses collection.

So use this kind of dynamic programming style, db dot courses dot find.

And then what you pass defined is interesting, you pass a prototypical JSON object.

So in this case, we say we want to find the one where the ID underscore ID, the primary key is 30.

And you can see the result that we got back, obviously matches that.

But if you pass multiple things, we could say the ID is such and such.

And it has, let's say, a display order of five, that would be an and statement, there's ways to do ors as well.

But the more pieces you put in here the more specific that query becomes if you're not querying by the primary key.

We say the find, and normally you would get a, what would look like a minified result of JSON back, which is not the most amazing.

So if we throw this dot pretty on the end, we get this nice expanded good looking result set that is much easier on the eyes.

|

|

|

show

|

1:34 |

To look at a more complicated query example, let's connect to a different database.

This one doesn't come with the GitHub repo data.

This is just an example here in the slides.

So we can say use bookstore and it says switch to DB bookstore, show collections because what is in here?

We have capital B book, capital P publishers and user.

Something really important here, keep in mind, MongoDB is case sensitive, lowercase book and uppercase book, not the same collection, totally, totally different things.

Please don't have one and don't have both of those in there.

But you do have to realize that to talk to this one, you have to use the capital B.

It's not just a presentation style.

It's got exactly match.

All right.

So here we can say we want to go to our books and we want to find, give us all of them.

But what our goal is, is to show a set of them, five.

We want to do paging.

Like here's the first page of five, you want to say, ""Show me the next one,"" and then the next one, right?

Some kind of, ""Don't return a million records, but give a way to page through them.

So we can say two things in combination.

First, skip three times the page size.

So we're going to go to the third page, or fourth page, I guess.

Zero-based.

We're going to go to the fourth page, and then from those, we still want to show, well, the page size five.

So skip three times five and limit five.

That'll give us a list of these results of these books, five of them from the fourth page of page size five.

Cool, right?

|

|

|

show

|

1:08 |

As we've already discussed, the way that we query the data is to pass a prototypical JSON object.

So here we want to find a book by its title.

So we just say title, again case-sensitive, colon, and then the value.

In this case, it's a string from the corner of his eye.

So we actually get two books back, these two books by Dean Kunt.

Maybe one is like an audio book versus print or hardback versus paperback.

I don't know.

Doesn't show all the details of the records, but something like that, right?

Now maybe we want to be more specific.

So if you want to be more specific in your queries, we can pass two different things.

So we could pass the title and the ISBN.

And this query would say I want the book whose title is the one here as well as the ISBN also listed here.

So when you pass the values in like this, everything must match.

This is considered an ""and"" type of query.

The title is from the corner of his eye and the ISBN is 0553582747.

|

|

|

show

|

1:41 |

I open this chapter by saying you could query into these embedded objects.

In our imagined bookstore here we have this book and it has the details like the title and ISBN, but it also has things like ratings.

Which user rated this particular book and what value did they give it?

I guess zero out of ten or something like that.

So I wouldn't be able to answer the question with a native query.

I want all the books that are rated by the user with this ID.

Object ID is the default auto-incrementing, auto-generated primary key if you don't do anything specific in MongoDB.

So you'll get used to object IDs that are everywhere.

Now I want to know not just can I find a book, but I want to find all of the books in the database that have in their ratings a user ID field that matches this object ID.

How do we do that?

Incredibly simple.

So we just go over here and we say find ratings.userID equals this value.

Cool, right?

Now don't get confused that there's actually multiple values in the ratings that might have a value for user ID.

What it does is it looks here and it says we're going to go to the ratings list and And then in that list, we're going to see if any of the things contained in there, the user ID happens to match this object ID that we specified.

So in this case, we might have 21 books, all of which somewhere in its ratings collection had this user ID value.

I'm going to return that as a list.

Cool, right?

|

|

|

show

|

2:04 |

Now, some of you may really just love the Mongo SH, Mongo Shell tool.

I personally prefer getting a visual overview of what's going on, but I do really like to be able to actually type just like you would in the shell, ""Here is the query,"" and explore that.

And that's why I selected Studio 3T, the free version, for this course as the GUI tool.

There are others.

You can go and Google them.

But for all practical purposes, this one is pretty good and it matches this requirement that I have that I can type native shell commands and still get GUI results.

I think that's really, really awesome.

So here you can see what I did is I double clicked on the packages collection and it wrote this part here in the middle.

It says db.getcollection packages find and at first it was empty.

You know, just find everything.

That's why I came over here and said I want to find where the email is Samuel Colvin.

So S at, kind of clever there, emulcolvin.com.

And maybe I wasn't sure, is it email or is it author email or is it author, what is it?

So you can come here and type part of it and hit control space and it will pull up an autocomplete list.

Pretty awesome.

Notice at the bottom, by default, I get this hierarchical view that you can go and explore.

But if you want the true shell experience, you can flip this over in the bottom right where it says JSON view right here.

You can flip that to say, no, return the results, basically the equivalent of a dot pretty result that you would get exactly in the Mongo shell.

So hopefully you find this to be a useful tool to use throughout the course.

I'm definitely using it because I love the ability to type in this top text box area just like I was using a real CLI, but the results I get back are in GUI form where I can do things like edit them or explore the indexes and all kinds of stuff without bouncing around the CLI.

|

|

|

show

|

2:55 |

If what we pass for these queries are prototypical JSON objects like the ISBN equals this or the rating equals five or something along those lines, that's cool when you're doing an equals, but database queries often involve greater than, less than, not equal to, and these types of things, right?

I would like all the users who have registered in this time window from yesterday to today.

That's not an equal, right?

So how do we do that?

Well, there are these operators for querying that allow you to express things like greater than and less than and so on.

So we can have, they all start with dollar, the special operators.

We have dollar eq, that's just equal, that's kind of what we've been doing.

So usually don't need that, but there are places where you can use it.

The dollar gt is greater than or gte is greater than or equal to.

We have less than, less than or equal to, not equal to.

One that I find really valuable is in.

You might say, ""I've got a set of 10 objects, and I want to know if I want all the records where any of the values might match that.

I could say, ""Give me where the rating is in some number of rating values I want, like four, five, and nine.

I don't know.

Maybe you want the extremes.

You want zero and nine.

Our little bookstore example is not exactly lending a bunch of great examples to come to mind here.

But that's a really, really common query that I do all the time.

There's more of these as well and you can see examples at just follow the URL here at the bottom.

There's a reference for all the native operators, at least for querying here.

So you can go check them out.

This is one of the things that is not obvious when you get started with MongoDB because you can't just use the greater than sign or less than, like you might in SQL.

But there's not very many of them.

This is actually most of them that you get to use.

So you can check them out and use them appropriately.

Again, we're not going to do this in our application.

This is just when we're playing with the shell and maybe exploring the data a little bit.

And then we'll go back to writing Python where you actually use like the greater than sign.

Let's look at one real quick example, suppose we want to find all the books that have ratings of nine or 10, not all of the ratings, but they have at least one rating in that ratings list that's embedded in them that has either nine or 10.

And so we would write this we just say book dot find ratings dot value to navigate that hierarchy.

And then instead of nine, we say, pass in this this little query object, curly brace, greater than or equal to colon 9.

And that'll operate just as if we had said, you know, the greater than or equal to sign, and 9 in a traditional query syntax like SQL.

|

|

|

show

|

0:49 |

We also have a couple of logical operators.

Again, you can find them at the URL here at the bottom if you want to see all of them.

When we do our query passing multiple things into our find, that's always always an ""and"".

So what if you want ""or""?

Well, then you need to use a slightly different version.

You would use the $or and specify multiple things that go into those queries, kind of like we did with a greater two or equal than.

There's an ""and"", but I don't really ever use the ""and"" personally because you can just put multiple things within a document query, the little prototypical document.

Maybe you've got a list and you want to process them programmatically, but again, in Python that's also different.

We have not and nor as well.

So you can go see all of them here.

Really the one that I find I've most frequently used is the or version.

|

|

|

show

|

4:24 |

The final thing I want to talk about in this native query chapter, we're almost done with it, is projections.

Now, projections are super, super important.

Maybe you've done SQL before and people said, ""Never, never select star.

Always specify the columns you want back.

Well, you have a similar bit of advice in MongoDB and document databases, but it's even more important.

Why might people say, ""Don't select all the columns or parts of your data back""?

because you might only need two things.

What if you only need the ISBN and the title?

You don't care about when it was created, you don't care about the ratings, well you're still pulling all that data back across the network and off of disk, potentially on the database server, to just ignore it when you get to it.

So a good practice is to just ask for what you're going to use.

We get to Python, we'll talk about when you don't want to do projections or when you do and how to do so in Beanie.

But in the native query syntax, the way you do that is there's a second parameter you can pass to find.

So in this case, we only care about the ISBN and title.

So we say find whatever the query is, and then we project back ISBN title, ISBN title.

So we get just those back.

Notice we get one other thing.

We get the underscore ID, the primary key.

By default, that's always going to come back.

However, all the other fields you have to opt in by passing something truthy.

This case one will work.

This is basically JavaScript.

If you want to suppress the ID coming back, you could say _id colon zero and then you would literally just get back those two other fields, the ISBN and the title.

So this is really important because maybe we have a bunch of ratings and that's going to slow down the response and we're not going to pay attention to them here.

So forget them.

So projecting back a small subset of the document, it really helps performance.

I gave you some numbers earlier about talk by fun training, we have this number of queries and we have this amount of performance.

Very very carefully structuring the amount of data that comes back through projections on the server makes a huge difference there.

Let me just tell you a quick story about our apps.

So our apps on the client side, these are native applications for iOS and Android.

On the server side, though, it's all Python, APIs, and MongoDB.

Some of our APIs this app was talking to were taking as long as a second to respond.

Wow, what is going on with this?

These used to be really fast.

And I just don't understand why this part of our API started to slow down slower and lower.

It turns out that we were returning just the entire document for really, really small bits of information, but we needed many of them.

And so a slight change in how we ran our query through a projection, we said, actually, we only need these two pieces of data for this API response that we're querying here, not everything in the record.

And those went from taking like 900 milliseconds to respond down to 10 or 20 milliseconds.

Incredible.

So projections, super, super important, more important than in relational databases, much more important sometimes depending on your data model than relational databases.

Because if you go crazy and select star in relational databases, you'll get all the columns.

If you go crazy and select star in a document database, you might get whole chunks of other tables and relationships, right?

Remember, embedded objects, embedded records are kind of like a pre computed join.

If you don't want that part, project it out, and it'll be way, way faster.

So that's it for our quick overview of the native view of MongoDB.

This is a kind of a for your information for your reference as you need it.

Chapter of information, you're not necessarily going to have to go write these projections by hand.

necessarily going to have to use those logical operators by hand, but if you need them, you can come back and find them.

Mostly, from now on, we're going to focus on Python code for talking to MongoDB.

|

|

|

|

27:21 |

|

|

show

|

0:49 |

It's time for foundations.

Let's dive into Pydantic.

We talked about Pydantic just a little bit at the opening of the course, but Pydantic is a really, really important library and framework for building classes that map structured data with type information to whatever you want it to do.

This most popular use case would be with FastAPI on API data exchange with JSON documents.

However, there's many, many places that use Pydantic.

And so in this chapter, we're going to dive in and see how to work with Pydantic models and how we can parse data with them and even use some cool tools to generate the code for those models to start with.

|

|

|

show

|

2:11 |

You get started with Pydantic with just pip install pydantic.

And you can find out more about them over at docs.pydantic.dev.

Here you can see on the page.

Now, we're in a bit of an inflection point or a changeover in the Pydantic space.

And you can see right here this announcement on the website.

Pydantic v2 alpha is available.

So, Pydantic is getting completely rewritten in an almost year-long project from being based on Python to its core being based on Rust, which makes it about 22 times faster.

That is awesome and so many of these tools and frameworks that are built upon Pydantic, as we'll see in this chapter, will benefit just automatically from that.

However, in order for them to use the alpha stuff, we've got to wait for it to get a little long, a little more down the development pipeline so things like FastAPI and Beanie and others actually adopt those dependencies into their frameworks.

So in this course, we're only gonna be using version one of Bidantic.

V2 is not really much of a big API surface change, but it is a really big runtime change.

So that's big news that we have on the horizon coming, but things are not ready yet for that in terms of integrating those with the libraries that depend upon Pydantic.

So 1.10 is what we're using for now.

And I imagine over time, we'll update the course code to use version two when it goes for actual release.

If you wanna hear the entire story about this Pydantic version two update, I sat down with Samuel Colvin here on the left, the creator of Pydantic, as well as Sebastian Ramirez, the creator of FastAPI here in the center, and talk to them at PyCon about what's coming with Pydantic and how it will affect libraries that depend upon it like FastAPI.

So you can check out the Talk Python to Me episode at the bottom using that link there, which also links over to the YouTube video if you wanna watch the video version.

|

|

|

show

|

1:02 |

When you're working with Pydantic, these classes have a bunch of types and fields that have to be set and many of them are required unless you explicitly make them optional.

And yet, the initializer, the constructor for these classes is just *args, **kwargs, you know, the we'll take anything"" and it doesn't really give you much help on what types are required, what are expected, that sort of thing.

So you want to get the plugins for your editor.

If you're using PyCharm, grab the PyCharm plugin.

If you're using VS Code, there's one as well, an extension there as well.

If you're using mypy, which is a tool you run against your code to verify its type consistency across function calls and classes and that sort of thing, there's a mypy plugin.

Also for testing, generating test data, there's Hypothesis as well.

So if you're using any of those, do consider getting the plugin to make working with it a little bit better in your code editor or your CLI tools.

|

|

|

show

|

4:00 |

Let's survey the landscape of things that use Pydantic.

Pydantic is awesome on its own and you can use it in even say web frameworks that have no idea what Pydantic is and have no dependency on it.

Like a Flask website that exchanges JSON, you could manually use Pydantic.

But there are many frameworks out there that are Pydantic at their core.

So let's talk about a couple of those.

We have Beanie, obviously one of the centerpieces of this course, right?

That's how we're modeling our data in MongoDB and doing our queries.

All of those models are based on Pydantic.

So Pydantic is at the very center of working with MongoDB using Beanie.

We also have in the relational side, we're not going to use in this course for sure because MongoDB is not a RDBMS.

We have SQL model and SQL model is basically take SQLAlchemy and replace the SQLAlchemy models with Pydantic models and then that's more or less it.

But really really cool that you have these nice Pydantic models to model your data in relational databases such as Postgres or SQLite.

This is also created by Sebastian Ramirez, the creator of FastAPI.

Speaking of FastAPI, FastAPI is the most well known use case for pydantic.

And it plugs right into the API data exchange in super clever ways.

Here is a pydantic class modeling an item, I guess, and that's what we're calling it as a name, a description, a price and a tax.

We're going to get into this, but the name is a string and the price is a float and they have to be a float or parsable as a float in the data exchange for this to be valid.

If we want to say we're going to have an API endpoint, and that API endpoint accepts a JSON post body or something like that, that has a name, description, price and tax, and we want to automatically convert that, well, all we have to do is create a function here we have create item, and it's just a post operation to slash items.

And look in the parameter here, it just says we take an item of type item and FastAPI will say I see what's coming in.

This is the pedantic model.

And we're going to use that Pydantic model to parse and validate the JSON coming in.

So FastAPI, absolutely pedantic at the core.

There's many other uses for the Pydantic models here as well, such as response model for open API documentation, but not a class on fast API.

So we're not getting into that.

But right here, specifying the type as a Pydantic model is not just a hint to the editor, it changes how FastAPI processes the response that comes to it.

That was a bunch of awesome projects built on top of pedantic, right?

Well, let's Let's look at an awesome list to round out this small section here.

Over on GitHub, we can find github.com/cludexawesome-pydantic.

It's a curated list of awesome things related to, well, Pydantic.

You can scroll through here and there's things on machine learning that are based on Pydantic.

You can go down here and locate.

There's Beanie, but also Piccolo, which is an async query builder in ORM, which can auto-generate Pydantic models from database tables, or gigantic Pydantic model support for Django or HTTP runner, which is a HTTP testing framework, strawberry graph, and so on.

I'm not going to go through this whole list, just kind of scroll through it here.

There's a bunch more awesome things to find around Pydantic.

And if you know one that's not on the list, do these folks a favor and submit a PR.

|

|

|

show

|

5:11 |

Well, that's enough talking.

Let's write some code and play around with Pydantic.

And this is our very first chance to write some actual code in this course.

So let's just take an extra moment and get the repository all set up.

Over here, I've got the code repository as it would be checked out from GitHub.

What I want to do is open this in PyCharm and have it create a virtual environment.

Now PyCharm can do that itself or you could create your own virtual environment and work with whatever editor you like.

So I'm just going to go the PyCharm way.

Now on macOS, I can drag and drop this folder onto PyCharm and it'll open it.

On Windows or Linux, you have to say file, open folder, and then browser.

So drop it in like this.

Here our project is open.

You can see down in the right that it's detected the git branch and so on.

But the most important thing is what version of Python is running.

It says 3.11, that is great, but this is the global 3.11.

So let's quick add a local interpreter.

Now normally I would go here and just say, add interpreter, add local interpreter, but for some reason PyCharm like literally doesn't respond.

So I'll just go over here to the settings interpreter and say add interpreter this way.

a new virtual environment right in that location based on Python 3.11.

And here we go.

Now you can see the virtual environment has got the name, the MongoDB devs in it.

That means it's a isolated virtual environment, not just the global Python interpreter.

So while that's a lovely readme, we don't need that.

Let's go over to the code and we'll make a new directory.

And this directory is gonna be named after the chapter that we're on, we're on chapter four, Foundations of Pydantic, call it something like that.

And let's add a Python file just called first_pydantic.

This is gonna be our first exploration with Pydantic.

I'll let Git add that.

Now to work with Pydantic, we're going to need to import it.

And I'm gonna be super explicit here.

I'm going to use the Pydantic module as a namespace and not just imported the things from there.

So you can see exactly where these elements are coming.

So we'll say import Pydantic.

Now you can see that stopped auto completing there.

What we need to do is we need to add Pydantic as an external package.

And notice you can see this requirements.in that is not yet quite added to Git, but now it is.

And in here, normally we have a requirements.txt file, but I'm gonna use pip-tools to actually allow us to more carefully manage the dependency.

So into this requirements.in, we write only our top level packages without version.

So pydantic for the moment.

And then we're going to use that to generate a requirements.txt file.

So make sure your virtual environment is active here.

And this here, we'll first pip install pip-tools.

That's where the tools we're gonna need for this.

And also we want to run that.

Excellent.

And so now we can say pip-compile requirements.in --upgrade.

The upgrade doesn't matter the first time, but the second time it'll have an effect.

What this is gonna do is actually generate the requirements.txt.

And we can close that up and go here.

Let's add that Git as well.

And look what it's done.

It says you wanted Pydantic because you asked for it in the requirements.

And this is the latest version at the moment.

And Pydantic itself depends upon typing extensions.

And its current latest version is this.

This will right now, while it's quite simple, but it will grow in complexity as we build up working with things like FastAPI and Beanie and all of those, it's going to be much more interesting as well.

In PyCharm, we'll install these if we click here, you can click that if you want, or you can say pip install -r requirements.txt as you typically would.

Alright, looks like everything is happy.

And now we can import Pydantic.

Now let's define a method here, we could just write the code right into the just inline as a script without any functions.

But I kind of like to have some structure to this.

So we're gonna have a main method and it'll do whatever.

And then we'll do the dunder name thing.

And again, since this is only the very first one, let's go ahead and just make some output happen.

All right, I'm going to run this, right click, run Pydantic.

See, it's using our virtual environment Python and we get Hello World, fantastic.

From now on, we can press this button or on macOS hit Control + R or whatever hotkey you see up there as you hover over it.

|

|

|

show

|

6:20 |

So what we want to do is we want to work with some data.

This is the goal of Pydantic.

It takes some input data, and then it is going to convert that into a class.

Now in our world, we want to model some kind of API response.

Like we have here, we have an item ID, we have a created date, pages visited, and a price.

So we're going to go and model this with Pydantic by creating a class that's called an item.

And in order for this to actually be a Pydantic model, not a regular one, it can't inherit from object, but rather it has to inherit from Pydantic.baseModel.

And then we just specify the type.

So I see an item ID down there.

And what kind is that?

That is an integer.

Now, notice the quotes, it's submitted to us as a string, but really what we intend here is for that to be an integer in our API.

So we'll say that.

We have a created date.

This is a date time.

Not date time like this.

We have pages visited, which is a list of int.

Again, those are integers.

That's a string, but could it become an integer?

Yeah, probably.

This right here, by the way, instead of being capital L list, being lowercase list, there's your Python 3.9 dependency we talked about at the start of the class.

And finally, we have a price, which is a float.

So now we have a class that represents what we expect from our inputs to our API or to reading this data.

And we have data down here that's not exactly the right shape.

For example, this is not actually an integer, but it could be parsed to this is a list of integers, same thing here.

So let's go ahead and try to use pydantic to parse this.

So we come down here, we can say item equals item.

Now see this star star star kW args, come back to that in a minute.

What I want to do is I want to say item ID equals the value from data created date equals like this created date equals data of created date.

Now that's not fun, is it?

Comma, and so on.

So with a dictionary, we can write code that says for every key, write this expression by just saying star star the dictionary.

So those key value, key value, key value as parameters.

And then let's print out item.

So this tells pedantic to ingest that data using this model, and to parse it according to the rules that the types declare, for example, item ID must be an integer.

And it must be there because that's not an optional integer, it's a concrete integer.

Let's run it and see what we get.

Oh, look at that.

Here's our printout.

And a couple things to note that this is a actual integer, not just the string 123.

Because if we had a, let's put name, which is a string just so you can see the difference here, I'll put name is Michael, something like that.

Here you can see the quotes indicating that's actually the string type, right?

So this is not a string.

This is the number 123.

Here's our created date parsed correctly.

Here's our pages visited and notice that that three that was not accurate, actually also got parsed, as well as our price.

How awesome is that?

But there's more to it than just this.

Suppose here that we want to make sure there's an item ID.

If our data doesn't contain it, it says item ID field is required, it's missing.

Another thing that could happen is this could be ABC 123.

And up here in our data model, we say it's an integer, could that be parsed to an integer?

No.

So item ID now has this problem that its value is not an integer.

How cool is that?

Right?

All that is really complicated work.

Similarly, if this was A3, even better.

It says there's a problem trying to parse this data into item.

The problem is the pages visited the number two index, so third thing in the list, because it's zero based, is not an integer.

Look at that reporting.

How excellent is that?

So really, really cool.

Now if we wanted to say, you know, now if the name is missing, final one, it's going to crash and say name is missing.

If we want that to be something you could submit, but is not required, we could make this optional.

Now you can express optional in recent Python like string or none.

I don't really like that syntax very much because I don't really want it to be none.

I want it to be a string or maybe it's missing, right?

So I'd rather express this as optional, which you get from typing.

I know other people have different opinions and you're welcome to those.

You can do String Pipe None or you can do Optional String.

Either way, this is telling Pydantic if it's there it's got to be a string, but if it's missing, no longer is that an error.

It's okay, it's just None.

We can even set a default value.

Jane Doe if it's missing, or if you pass in a name, it's Michael.

really cool, right?

So excellent, excellent that we can create this model.

And this is our first pass one of many we have for working with pydantic.

We create a class, it derives from base model, we specify the types, those types have all sorts of meanings, what is required, item ID is required, because it's not an optional int.

We have default values, It does all the parsing if it can.

Really really great.

|

|

|

show

|

5:35 |

So we've seen that we can write classes that match a JSON document.

Then it will parse those using the rules encoded through Pydantic into that class.

What if you already have the JSON?

And what if it's complicated?

Our little order example was quite simple, so I didn't mind typing it out.

What if you have more ugly, complicated data that you need to work with?

Over here on the left, we have this code generation section, and it shows you ways in which you you can generate code from this using a CLI tool called data model code generator.

Really cool.

Now, we can use this and you can run with it if you like.

But let me introduce you to a website that will do the same thing based on that, that code.

JSON to Pydantic converter.

See on the left, we have a foo and a bar baz.

It will generate this over here on the right from Pydantic import base model.

Our model is that this is an integer.

That's a string.

Very cool, right?

Let's try to hit that with our order data here that we just wrote.

So item ID and then I guess we could add in name is Michael.

Remember in JSON, you can't have single quotes.

Look what we got over here.

Item ID is a string created date is a string.

We could do better than that, couldn't we?

So it's not exactly right.

This is detected as a list of strings.

And really, that's because we've kind of passed in failed bad data.

So let's let it do like that.

float in a string, we can make this optional, right.

But this is a pretty good jumpstart to writing these classes.

Okay.

Let's go with something a little bit more complicated here.

Let's take this weather service we have over We're at talk Python.

This is live real weather.

Let's get the weather in.

Let's say, where's that?

That's in Portland right now.

And notice how yucky this looks in Vivaldi or Chrome as well.

So Firefox to the rescue.

There we have nice structured data.

Now this is maybe too structured.

Let's go to raw data and pretty print it.

And there we go.

And look at that.

I would say this is just about like heaven.

75 degrees, 44% humidity.

And if you prefer metric, that's 23 degrees Celsius.

Over here, pretty print this.

Now look at this, it's pretty complicated.

So we've got this weather thing embedded in here, then we've got wind reports and then units and then the forecast and the location and the rate limiting.

So let's go and put that into our JSON to Pydantic model and see what we get.

A little more complicated here, but we've got our weather, which is this section, our wind, which is this section, and notice, integer and float.

Maybe you should upgrade that to an integer, make it a little more obvious.

Upgrade that to a float, rather.

And finally, you've got your model.

Let's go ahead and just make sure that we can parse all of this, make sure that it works.

Go back to PyCharm.

Let's call that weather for a second.

Paste all of the code and this will be, let's call it weather model, right?

This is the top level model.

It has some weather and wind and notice there's some really interesting aspects here.

So this itself is a pydantic model and the type of this is the pydantic model.

So that means we can have hierarchical, structured, hydronic models parsing and representing this, which guess what, for a document database like MongoDB, is exactly the type of thing we're trying to model.

So let's just go down here and say data equals and go grab our data we got here, like that.

And finally, say report equals weather model of star star data.

You want that even more when it's this complicated and then print the weather is now.

And let's run this one.

Look at how awesome that is.

Weather is, now we have our member, this weather.

The weather is this weather object with broken clouds and category cloud and the wind is like this.

Remember, even if this was off like that, when we run it again, it's still gonna parse it, not because of the top level model, but because of the nested model into a float.

And there you have it, right?

You could just print out the forecast if you want just the forecast, right?

Use that hierarchy to get just the pieces we're looking for.

Excellent, excellent.

So if you have complicated data, look and you already have it as JSON, you can go to the json2pydantic.com converter or use the CLI tool down here to run it with the input and the schema and so on.

All right, however you want, you can start with your data and generate these Pythonic models right away.

|

|

|

show

|

0:55 |

If you want to go deeper, here's some of the history and more details about Pydantic, some of the philosophies behind it.

I've interviewed Samuel Colvin, the creator of Pydantic, not one but three times on Talk Python to Me, back on episode 313, about automate your data exchange with Pydantic when it first came out, then about a year later when he said, ""We're going to do this big, massive rewrite and rust, interviewed him about Pytanic v2, the plan, like why is he doing this?

Where is it going?

What the community should expect on episode 376.

And very recently I interviewed him and Sebastian Ramirez, as I mentioned already about, well, now that you're basically done with this rust rewrite and you're looking at 25 times faster code, what are all the consequences of that?

So you can check out all of these episodes if you want to go deeper.

|

|

|

show

|

1:18 |

Let me close out this chapter on Pydantic with one more piece of information.

Pydantic has got a ton of support recently.