|

|

|

14:02 |

|

|

show

|

1:44 |

Welcome to the introduction to Ansible Course.<br>

My name's Matt Makai.<br>

I'm incredibly excited to help you learn this tool Ansible that I've been using for over five years which consistently helps me to solve technical problems every single day.<br>

Ansible is a configuration management tool which helps you to automate processes that a software developer or systems administrator would traditionally do manually such as standing up a new server modifying firewall rules, or deploying a web application.<br>

Ansible can automate steps, and do it in a way that is maintainable and easily readable by other developers.<br>

This course assumes that you've never used Ansible before or that you tried a different learning resource but that it did not quite click for you.<br>

You don't need experience in any particular programming language to use Ansible although familiarity with Python is occasionally helpful.<br>

If you're a Ruby, Java, C# or any other language developer though you will do just fine and Ansible will be just as useful in your projects.<br>

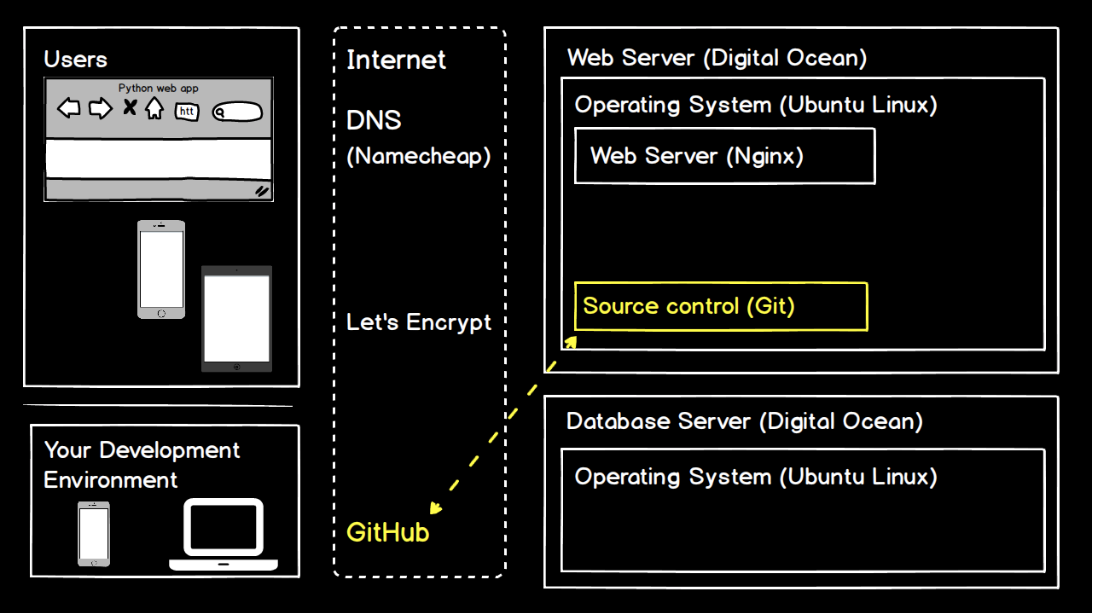

In this course, we will cover why Ansible is a great tool to have in your tool belt, what Ansible playbooks are and how you should write them as well as how to configure servers and secure your own data, such as passwords and other sensitive information.<br>

We'll touch upon source control because it's critical to every project and you can work with source control implementations such as Git repositories in your playbooks.<br>

As with any tool there's a learning curve so throughout the course I'm not just going to show you the happy path while live coding but actually make mistakes along the way and show you how to recognize what happened debug the issues, and fix the problems so that you can continue on with what you were originally working on.<br>

We'll learn about Ansible modules which provide the building blocks for almost everything we do in Ansible.<br>

Towards the end of the course we will deploy an entire web application together which will combine all the information you learn in each chapter so you can leave this course feeling really comfortable with using Ansible for your own configuration management and application deployments.

|

|

|

show

|

4:21 |

You may have already set your heart on using Ansible, and so you don't really need reasons why.<br>

But I want to get you even more excited, regardless of whether you've decided you're ready to use Ansible or you're evaluating alternative choices.<br>

First off, after years of working in the DevOps space, I've found Ansible to be the easiest configuration management tool to use.<br>

I had previously been working with Puppet and Chef, and just found that I couldn't keep up with what was going on with those tools.<br>

There is a reason why Ansible exploded in popularity once it was released.<br>

But rather than me just tell you about how easy it is, I want to show you a quick example.<br>

You can get a feel for what you're actually going to doing with Ansible.<br>

I've switched over into my own development environment, and I have three windows here.<br>

The one at the top left has a single Ansible playbook file with two tasks in it.<br>

The right-hand panel is where I've logged into a remote server that I've set up on DigitalOcean.<br>

It's a blank server that has not yet been configured.<br>

And then on the bottom, we're going to run our Ansible playbook.<br>

First, let's take a look at these two tasks on the top left corner.<br>

Both of these tasks use the same Ansible module.<br>

Don't worry if terminology like playbooks, modules, tasks are unfamiliar.<br>

We're going to cover all those in depth in Chapter 3 after you get your own development environment set up.<br>

For now, just bear with me as you take a look at what Ansible is able to do in just a few lines of the markup language, YAML, which Ansible uses for its playbooks.<br>

The first task we have here uses the file module, and we specify a path.<br>

We want to create a directory named matt.<br>

So we specify state of directory and the permissions we want for that directory as well.<br>

What will happen here is that if a directory does not exist, home/Matt, it will create one.<br>

And if not, it'll just let that one be, or it'll change its permissions to match the permissions that we want on our remote directory.<br>

The second task uses the same file module, but it creates a file named hello-ansible.md for markdown.<br>

And the state, instead of saying we want a directory, we want to use a touch command to create an empty file, and we give it some different permissions.<br>

So the gist here is, we want to create a directory, and we want to create a file within that directory.<br>

If we look over here on the top right where I've logged into my remote server, we'll see that no files or directories exist under the home directory.<br>

Let's run our Ansible playbook, and we'll see that that quickly changes.<br>

We're going to run the Ansible playbook command with a deploy.yml file.<br>

We'll specify a private key that is located in my .ssh home directory.<br>

And we'll specify an inventory file which contains the IP address for the remote server that we're working with.<br>

And the de facto standard is to call that inventory file hosts.<br>

We'll kick off our playbook, and Ansible has reported back that it was able to execute three tasks.<br>

First, gathering facts, so gathering all the configuration as it exists and then executing the two tasks that we specified above in our Ansible playbook.<br>

Let's see what happened on our remote server.<br>

We've got our directory named matt now.<br>

We can go into it, and we see we have a hello-ansible.md markdown file.<br>

So in just a few lines, we were able to modify a remote server, and we could actually do that with more than a single server.<br>

We only changed one server here, but we could've run the exact same file against thousands of servers.<br>

And we only used two tasks here.<br>

Tasks are the building blocks for entire playbooks that allow us to set up servers in the exact configurations that we want, do our deployments, and handle thousands of servers if we want using these maintainable playbooks.<br>

Throughout this course, you'll become completely comfortable with this very simple playbook and building much more complicated playbooks for real-world scenarios.<br>

I've used Ansible on applications ranging from side projects that I've built in my spare time to really large organizations, where dozens of developers are working on the same deployments.<br>

Ansible's ease of use allows it to scale, both in team size and in project size, and across many different projects.<br>

So what you're learning here, whether it's for a side project or for a large organization, it'll be relevant in many scenarios.<br>

Ansible's approach to configuration management is to automate what was previously done by hand using Open SSH.<br>

Other configuration management tools use an agent approach, which means that you have to have software running on all of your remote machines, and that can create an additional attack factor that your information security department may say, That's not going to happen.<br>

I gain peace of mind from knowing that Ansible is not creating a new paradigm.<br>

It's taking decades of system administration experience and making that much easier, whether you are a sys admin or a developer who is trying to deploy and configure servers.<br>

Finally, another huge thing for me is I've written playbooks, read them several years later, and immediately understood what they were doing.<br>

I wasn't the biggest fan of YAML when I first saw it, but I quickly became comfortable with it.<br>

And it has major advantages over other markup formats.

|

|

|

show

|

0:32 |

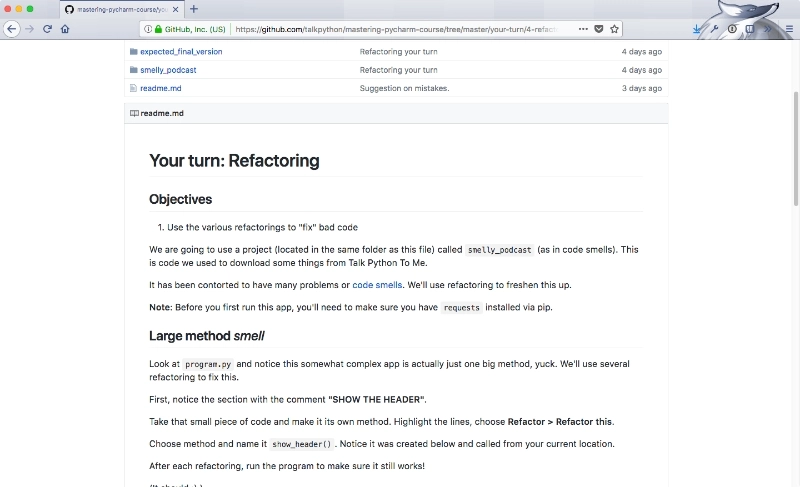

There will be a lot of live coding and working together on projects throughout these videos because I believe that's the best way for you to learn and get hands-on time with Ansible.<br>

However, you're not going to always want to type everything in by hand.<br>

Sometimes you just want to copy and paste or maybe you made a typo somewhere and you just want the code that works so this is the spot for you.<br>

If you go under the fullstackPython on GitHub look for the book-and-video-code-examples repository there's an intro Ansible directory.<br>

That contains all the code that we'll use throughout the entire course.

|

|

|

show

|

3:22 |

Here's some context for who I am and why I'm teaching about Ansible.<br>

My name's Matt Makai and I've been a software developer professionally for about 5 years, the first three of those in Java and then, since then, almost exclusively, Python with a little bit of Swift mixed in there as well.<br>

You can find me on GitHub, or on Twitter @MattMakai and I'm the creator and author of fullstackPython.com just read by over 100,000 developers each month so that they can learn how to build, operate and deploy their Python powered applications.<br>

Python's been my go-to language for both side projects and professional development for over 10 years and that's what originally got me into Ansible because Ansible is written in Python.<br>

It first took off in the Python ecosystem and then, grew from there into other programing ecosystems.<br>

As a Python developer, I first heard about Ansible in 2013 and my own growth as a software developer has paralleled Ansible's growth as an open-source project.<br>

Instead of just showing you a few slides with more about my background let's look at the history of the Ansible project and where I've been able to contribute and where I've been able to learn from it.<br>

Ansible is open-source so it can be found on GitHub at github.com/ansible/ansible.<br>

If you take a look back at the logs the earlier pieces of the project you can see most of the original ones are by Michael Dehaan, who's the creator of Ansible and also co-founder of the company that was eventually sold to Red Hat but still runs the Ansible project.<br>

I discovered Ansible around the 1.0 release back in the start of 2013.<br>

The project was still in really rough shape back then but it worked, and the potential was there.<br>

I must've played around with it on side projects and followed along as each incremental release built out new modules, and fixed bugs; Michael Dehaan and the initial community really built out the project and made it smoother from whence they had started.<br>

In mid 2013, Michael came up to Washington D.C.<br>

where I was living at the time to give a talk at a DevOps meetup about Ansible and this is where I really started to get engaged with the community.<br>

In fall of 2013 I was finishing up some consulting projects and was getting ready to start a new job at Twilio which I began in early 2014.<br>

I took a couple months off at the end of the year before I started my new job after my consulting projects had ended and hacked on Ansible full time to really understand how the project worked.<br>

I created a prototype based on my deployment knowledge having worked with Django for a long time created a prototype project called, Underwear which was a Django module that hooked into Ansible and made it possible to deploy entire Django projects to servers using a Python manage.py deploy command.<br>

In early 2014, I started speaking about using Ansible to deploy Python projects and I wrote my first Ansible module that is now included as a part of the Ansible project.<br>

The Twilio module shipped with Ansible version 1.6 in early 2014.<br>

It allows you to use the Twilio API in your playbooks if you want to send text messages while running your Ansible playbooks.<br>

Ansible put on our first conference in 2014 so I spoke at that then wrote another module to use the centigrade API so you could send emails and that was included in version 2.0 around late 2014.<br>

While all this was going on the open-source community in the Ansible quarantine frequently shipping releases making the project better and better and keeping that momentum in the community.<br>

I used Ansible to write my deployments book and as a part of the Python for Entrepreneurs Course that Michael Kennedy and I did together; huge upgrade to Ansible came in version 2.2.<br>

This is where the Ansible quarantine became much more serious about Python 3.0 compatibility.<br>

It's gotten better and better with each release.<br>

We are going to exclusively use Python 3.0 throughout this course as Python 2.0 is rapidly approaching its end of life.<br>

Ansible continues to evolve on GitHub every single day and as you become more comfortable with the project we'll spend a lot of time in this repository taking a look at the source-code so that you can better understand the how to use modules and how to build your own modules as you get more advanced with Ansible.

|

|

|

show

|

3:25 |

Like any software development tool Ansible pairs really well with strong reference material.<br>

I keep a few tabs open whenever I'm developing my Ansible playbooks.<br>

I'll show them to you now.<br>

You shouldn't need them while you're working through these videos, but if you want to take a breather or want to dive deeper into a subject find out more about a module it can be really handy to keep these open.<br>

We just looked at the Github repository for Ansible at github.com/ansible/ansible.<br>

I bring it up again now because there are a few directories that are more important than the other ones.<br>

Specifically, lib/ansible - this is where the majority of the source code for Ansible lives and in particular you'll want to look under the modules directory because these modules are going to be what's doing the work every time you write a playbook.<br>

For example, if you want to send notifications when you hit a certain step or something happens in your playbook take a look under the notification module and you'll see all the source code for every different type of notification and integration that you can apply in your playbook.<br>

I'm constantly referencing the Ansible source code not because the documentation isn't good but just because sometimes its easiest to read the source code especially if you're a Python developer.<br>

Speaking of Ansible documentation, it's fantastic and you can access it at docs.ansible.com.<br>

It's broken into many areas the one that can be most useful to you right now is under 'Getting Started.' You can read this to get some context about how Ansible works over SSH.<br>

It's handy just in case you want a secondary resource.<br>

Also under 'Documentation,' you can gain additional context about playbooks, inventory files, and developing your own modules.<br>

The one option that I always keep opened though is the module index.<br>

This is the master guide for everything you can implement with Ansible without having to write your own custom modules.<br>

We saw how to create directories and files with an example Ansible playbook.<br>

If you want to learn more about file module you go under 'Files modules,' and select 'File.' My favorite part of the Ansible modules documentation is that it follows a very clear boiler plate pattern you've got a quick synopsis all of the parameters that are available to you some notes just in case there are exceptions or things you need to be aware of with different operating systems and then he handiest bit of all I'm actually surprised every single bit of documentation for every project does not contain this example code.<br>

Example code, you can copy and paste by highlighting, copying, putting it into your playbook customizing it for your own purposes.<br>

If you scroll down a little further you'll see the status of the module.<br>

The main reason why this is important is because stable modules guarantee backwards compatibility.<br>

For most modules, you won't have to worry about this but if you see a new module in a new Ansible release you'll just want to check the status to make sure that it's not going to have some backwards breaking changes.<br>

One more bit of documentation if you want to get a high level overview of Ansible configuration management, deployments web server configuration take a look at the table of contents page at fullstackPython.com.<br>

The 2 chapters that'll be most applicable to you are going to be 'Chapter 5: Web App Deployments' and 'Chapter 6: DevOps,' and where possible I try to give plain language explanation along with diagrams to show you how deployments work or how configuration management tools like Ansible work along with the best resources that I've found to accomplish your objectives.<br>

So the two mandatory tabs github.com/ansible/ansible the second one docs.ansible.com and optionally, if you need a higher level overview the table of contents page on fullstackPython.com.

|

|

|

show

|

0:38 |

This course is sponsored by DigitalOcean.<br>

A big thank you to DigitalOcean for providing $100 in credit for their servers to every viewer who uses this link.<br>

That's do.co/fullstackPython.<br>

Go to this link now, or a bit later in this course when we start spinning up servers to use with Ansible.<br>

This free credit will make it possible to complete all steps in this course without having to pay any money for hosting and all of the videos for this course use DigitalOcean as the hosting provider so you will be able to follow along step by step in each video with your own DigitalOcean account.<br>

Thanks again to DigitalOcean for providing the credit and sponsoring the Introduction to Ansible video course.

|

|

|

|

11:14 |

|

|

show

|

2:53 |

If you're working on Mac then we'll get you to the exact point you need in order to run Ansible.<br>

If you're working on Linux or Windows feel free to skip this video and go to the appropriate video for your operating system.<br>

We're going to use Python 3 to run Ansible.<br>

So the first step is to go to Python.org in your browser.<br>

Click downloads.<br>

Download Python 3.6.5 or whatever the latest release is.<br>

Luckily, other than the Python 2, Python 3 split whatever the latest Python version is that's out there should be able to work just fine with this.<br>

And if not, let me know, and I'll update the videos.<br>

Click on the package and install it.<br>

You'll need to type in your password for root privileges.<br>

That's normal with installing Python.<br>

Once it's all done, click close and you can move it to trash, the installer.<br>

Now we need to test it out and install Ansible.<br>

Open up a new finder window.<br>

Click applications and then scroll all the way to the bottom to utilities.<br>

We're going to work on the command line so you need to open the terminal window.<br>

Assuming Python 3 has installed correctly we can type Python3 and we should see the appropriate version that we installed on our system.<br>

Don't just type Python because that will go to the default system installation.<br>

We really want to work with Python 3 so use the Python3 command.<br>

It's good practice to use a virtual environment that's included as part of the core Python installation.<br>

I have a directory called envs where I keep all of my virtual environments.<br>

And to create a new virtual environment type "Python3 -m venv" and then we give it a name.<br>

In this case, we'll just call it intro-ansible.<br>

To activate that virtual env type source and then the directory bin/activate.<br>

We can tell that it's been activated by the parentheses and the name of the virtual env that we're now working with.<br>

Now we can use the pip command, so P-I-P.<br>

pip allows us to install packages and if we type pip freeze right now we will see that we don't have any packages installed in this virtual env.<br>

Type pip install ansible, and this will go out and grab the Ansible package from PyPI.<br>

Give it a couple minutes and we should see successfully installed and then a bunch of packages.<br>

These packages are the dependencies that are used by Ansible.<br>

For example, Jinja is used for templates.<br>

Paramiko is used for the underlying SSH protocol.<br>

PyYAML is used for the YAML files in our playbooks.<br>

And of course we see the Ansible package itself.<br>

Now we can test out whether Ansible has been installed correctly.<br>

We'll run a command against localhost.<br>

This is an ad-hoc Ansible command.<br>

ansible localhost, for the system we want to run it against -a, for an ad-hoc command and we're just going to run an echo command that says hi.<br>

Press return.<br>

We'll get some warnings that tell us we don't have a host file, but that's okay.<br>

It's going to default to a localhost and then we'll see the output "hi" from the successful execution of our Ansible ad-hoc command.<br>

This tells us Ansible has been successfully installed and now we'll be ready to write our playbooks.

|

|

|

show

|

1:55 |

After installing and testing Ansible to make sure that it runs the next step is to create an SSH key.<br>

SSH keys are asymmetric keys.<br>

Which means that the public and the private key are different.<br>

You can share the public key with whoever you want.<br>

And, in fact, we're going to put the public key on remote servers.<br>

But a private key should never be shared.<br>

When a server only allows login via SSH keys it will use the public key to determine if someone is trying to log in with a private key regardless of the operating system that we're going to be using.<br>

We need to create an SSH key pair.<br>

Let's take a look at how to do that on macOS.<br>

Back in the terminal we're going to run the ssh-keygen command.<br>

If we type it without any parameters it'll just use default settings.<br>

We're going to use a few custom parameters with ssh-keygen.<br>

The first is to specify the type as RSA.<br>

The number of bits is 4096 which is the current standard that most people use.<br>

And we can specify a custom email address which in my case is matthew.makai@gmail.com.<br>

Now ssh-keygen will ask you where you want to save the public and private key pair.<br>

I'll store it in the directory that it's recommending but I'm going to give it a different name because I don't want to overwrite the existing public and private keys that I have there.<br>

I'll call this intro-ansible and press enter twice to not use a passphrase.<br>

And then we'll see a bunch of random art that represents the key's image.<br>

And our keys are created.<br>

So if we take a look under the .ssh directory and then we look specifically using the grep command for intro-ansible we'll see that we have the private key which is just called intro-ansible and then the public key which always has the .pub at the end after ssh-keygen generates those keys.<br>

intro-ansible.pub is the one that we're going to put on remote servers and intro-ansible is the one that is going to allow us to verify that we are who we say we are when we want to connect to those servers.<br>

Now that we have our public and private keys we can write and execute our first Ansible playbook.

|

|

|

show

|

1:29 |

Ansible was originally designed for Linux-based systems.<br>

And while Windows support has gotten much better for managing remote machines it's strongly recommended that you do not run Ansible under Windows as your control machine.<br>

There's simply no support by Red Hat or Microsoft in order to execute Ansible properly and not run into major issues.<br>

That said, a lot of people are running Windows machines.<br>

So here's the two possible routes you can take if you really want to get Ansible up and running and you have to use Windows as your base installation.<br>

My first recommendation is to use a virtual machine like VirtualBox if you want to go the free route or VMware works as well, in order to virtualize Linux on top of Windows.<br>

Right now, I recommend using Ubuntu 18.04 LTS which was released in April 2018 and will be supported for the next five years.<br>

If you download VirtualBox you can then install Ubuntu start that up and then follow the instructions in the next video for configuring your Linux environment to run Ansible.<br>

A much newer way to run Ansible on Windows is to use the Windows Subsystem for Linux.<br>

This is actually going to be similar to running a virtual machine, but it's a new edition of Windows 10 that allows you to install a Linux distribution of your choice and then use it from within Windows.<br>

Either one of these routes will get you to the same destination, which is to have a Linux system that is running within Windows that you can use as your control environment for Ansible.<br>

Once you either have VirtualBox running with Ubuntu or the Linux sub-system running with Ubuntu, take a look at the video for configuring your Ubuntu Linux development environment.

|

|

|

show

|

3:12 |

Whether you're running Ubuntu Linux as your base operating system or your virtualizing it on top of Windows or macOS.<br>

Ubuntu Linux provides a great environment to control Ansible.<br>

Let's first get Ansible installed, test it out and then in the next video we'll create an SSH key that we'll use for the remainder of the course.<br>

I strongly recommend that you use the latest version of Ubuntu which is currently 18.04 LTS as Bionic Beaver.<br>

This release will be supported for the next five years and it comes with Python 3 pre-installed.<br>

So we can even skip a step because we will need Python installed on the system in which we are going to control Ansible.<br>

Although Python 3 is`pre-installed we do need to install the venv package so that we can work with virtual environments.<br>

There's two ways we could install Ansible on the system.<br>

We can install it site-wide or we can use a virtual environment which is what I tend to prefer and what we're going to use in this video.<br>

But before we can use a virtual environment also known as a virtualenv.<br>

We need to install the Python3-venv package.<br>

Select 'Yes' that you want to continue.<br>

Then depending on the speed of your internet connection it should quickly install the package.<br>

We can test out that everything worked by typing 'Python3 -m venv'.<br>

Let's create a directory to store our virtual envs.<br>

Go into it.<br>

Then use our new venv package installation to create a virtual env called 'intro-ansible'.<br>

Then we can activate it.<br>

That'd be source intro-ansible/bin/activate.<br>

Now we can see here by the change in our command prompt that we're in the virtual env and it's been activated as our current Python installation.<br>

We no longer need superuser privileges in order to install the Python package.<br>

I always prefer to run with the least amount of privileges on Linux when possible.<br>

Just remember whenever you open a new term on a window you will have to run source intro-ansible/bin/activate in order to re-activate the virtual env.<br>

Now we can use the pip command to install the latest version of Ansible.<br>

If you haven't installed any other dependencies on Ubuntu you may see some errors about failing to build wheels for the Ansible dependencies.<br>

That shouldn't affect our ability to use Ansible.<br>

But if it bothers you, there are a few development packages that you can install to get rid of that.<br>

For now as long as you see 'successfully installed' and then the list of packages including Ansible we're good to go.<br>

Let's test it out though to make sure that it's properly installed.<br>

We'll run our first Ansible ad-hoc command.<br>

So type 'ansible'.<br>

Then we're just going to have placeholder for localhost.<br>

It's not actually going to use localhost it's going to fall back to localhost when we try to run this ad-hoc command.<br>

-a for an ad-hoc command.<br>

We're going to run echo and just say something like 'hi'.<br>

for 'hello world'.<br>

Make sure to have the single quotes around 'hello world' and then double quotes to end the ad-hoc command.<br>

We can test this out and we should see success and 'hello world'.<br>

What this has done is it executed via Ansible the echo command on our localhost machine as an ad-hoc command.<br>

This tells us Ansible is working and we'll be able to start creating our playbooks as soon as we create our SSH key.

|

|

|

show

|

1:45 |

We need to create an SSH key so that we can properly control from our computer, all other computers that we're going to configure using Ansible.<br>

On Ubuntu, we create SSH keys using the ssh-keygen command.<br>

Type ssh-keygen and then the type of key that we want to create is RSA.<br>

We're going to have 4,096 bits and you can also customize with the -C what you want your email address to be for this particular key.<br>

So I'll just put mine as matthew.makiah@gmail.com Now I typically save.<br>

ssh-keygen will ask you where you want to save both the public and the private keys.<br>

I don't use the default value what I do is give it a specific name that allows me to later reference and understand what that key is being used for.<br>

So for this one, call it intro-ansible and store it under your home directory within the .ssh subdirectory.<br>

Don't specify a passphrase.<br>

And now we'll have two files that have been created when we take a look at that directory that we just specified.<br>

The first one is our private key.<br>

The private key should never be shared publicly.<br>

This is what is going to authenticate you that you have access to a server.<br>

The public key can be shared widely in fact it can even be uploaded to GitHub if you want.<br>

We're going to put this on remote servers because we are the only ones with access to the corresponding private key.<br>

Occasionally we'll also even need to just copy and paste our public key.<br>

And what I do for that is that I use the cat command.<br>

And then you can copy and paste directly from the terminal.<br>

You'll never want to do that for the private key but for the public key, you can share that freely.<br>

And later what we'll see is we'll copy and paste the public key when we're provisioning servers.<br>

Now that we have our environment setup we can dig into the core concepts of Ansible.

|

|

|

|

21:34 |

|

|

show

|

4:44 |

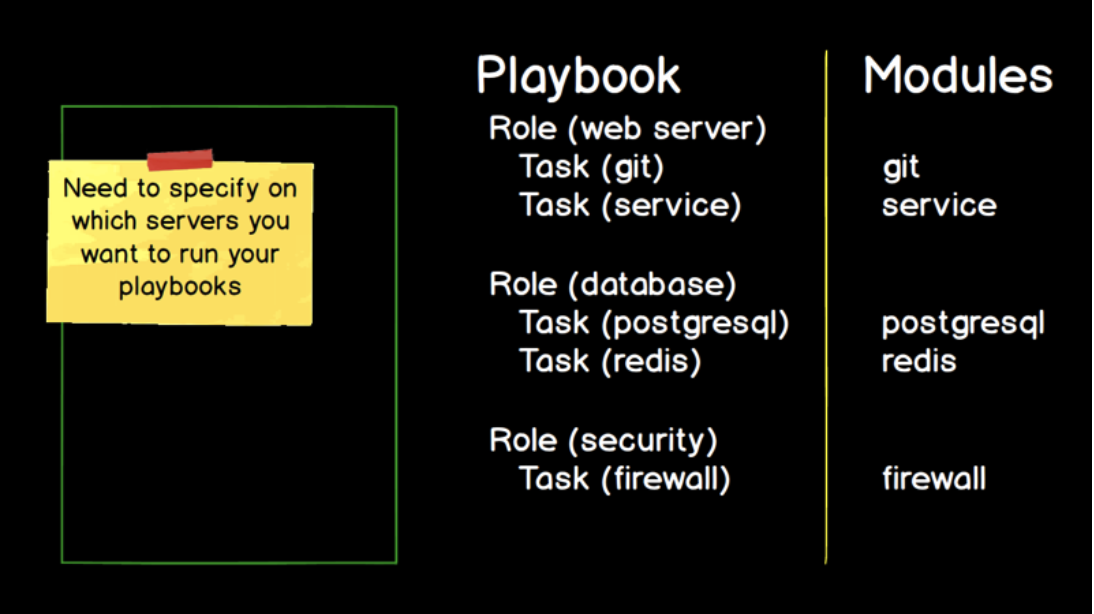

Ansible has a set of terminology and core concepts that are going to be crucial for you to learn and understand because we're going to use them throughout the rest of the videos in this course.<br>

This video is designed to introduce you to those concepts and show how they relate.<br>

Then we'll explain in further depth each of these concepts in turn.<br>

If we think about how a configuration management tool operates, there has to be some way for us to execute actions against the servers that we want to configure.<br>

With any configuration management tool that tool contains some code, and it will expose a way for you to use operations so you can execute your actions.<br>

In Ansible's case, these are modules and most modules contain a familiar name.<br>

For example, if you're working with a Git source control system, you're going to be working with a Git module.<br>

If you're trying to bring services up and down on a Linux system, you use the service module.<br>

The database back ends like Postgres or the in memory data store, Redis, likewise.<br>

They have modules named after them.<br>

There's a laundry list of hundreds of core modules that come with Ansible, which we saw in the documentation.<br>

And Ansible makes it easier for you to write your own modules if for some reason what you're trying to do is not covered by the existing modules.<br>

So modules are our first core concept.<br>

This is functionality that Ansible exposes to us but we need some way to use that functionality.<br>

We need a mechanism to specify the modules that we're going to use.<br>

Another way to put this is the modules are the code that Ansible already has, and you need a way to write code or some markup language that will let you use that Ansible code.<br>

In this case, we have tasks.<br>

A task is a specific implementation.<br>

If we need to use the Git module where we will specify a particular Git repository that we want to clone or push our code to, we'll write a specific task like restart the Nginx service.<br>

We'll modify or recreate Postgres or Redis database and we'll enable and disable rules on a firewall.<br>

Tasks are the bridge from the Ansible code that's contained in modules to what you are writing to use Ansible for configuration management.<br>

With any non-trivial configuration or deployment we're probably going to have dozens, or hundreds maybe thousands of tasks.<br>

So we need some way to organize and group these related tasks.<br>

Ansible has two more core concepts here that we're going to use, roles and playbooks.<br>

Roles are a flexible way to express what tasks to apply to one or more types of servers that we're working with.<br>

Think about roles as either being horizontal so they'd be cutting across every single server that you'd have as a part of your deployment.<br>

So if you have common security settings you would specify your security settings as a role.<br>

Or they can be verticals like a web server configuration or a database back end.<br>

Roles are one of the most flexible, conceptual ideas so they often take the longest to wrap your head around.<br>

And many roles are contained within a playbook and we can have one or more playbooks.<br>

Playbooks are the overarching way to organize all of your tasks and all of your roles so that Ansible can execute them.<br>

While it is possible to run an ad hoc task with Ansible most of the time you're going to use the Ansible playbook command, which will combine many roles and many tasks within each of those roles to complete a deployment or orchestrate your server configurations.<br>

The big missing piece here is the specific servers that we want to execute our playbook against.<br>

You wouldn't put specific host names or IP addresses in the playbooks because they wouldn't be reusable.<br>

Instead we have a different concept to describe which servers we want to run which roles on.<br>

This core concept is known as the inventory.<br>

The inventory maps the roles, such as a web server or a database server, to IP addresses and host names that you want to configure.<br>

The separation between what needs to be accomplished that is specified within your playbook and where to run those configurations goes a long way with making Ansible playbooks reusable.<br>

And its also a huge help when you're trying to deploy to a dev environment, a staging environment a test environment, production environment.<br>

You only need to change the inventory.<br>

You don't need to change the playbook itself.<br>

Finally, YAML, or confusingly known as YAML ain't markup language, is how we write our collection of tasks within the roles.<br>

We can also write our inventory in YAML although, as we'll see in a future video we can either us an INI format or YAML and I typically use the INI format.<br>

But just know that YAML is how you're going to write your tasks.<br>

So these six core concepts, the modules, which is the code that is a part of Ansible that we're going to execute.<br>

The tasks which allows us to describe which modules we want to use and how we're going to use them.<br>

The roles, which allow us to group related tasks together for purposes like setting up a web server or a database server.<br>

Playbooks are typically the overall unit that we're going to be executing, and we have one or more roles that we're going to execute as a part of our playbook.<br>

The inventory describes where we are going to execute our playbook against and YAML, which is how we write our tasks and is one way we can write our inventory files.<br>

We've seen a little bit about how these concepts interrelate.<br>

If you're still really confused by what each one means that's why we're going to dive into each one right now.

|

|

|

show

|

4:16 |

We see how each of the Ansible core concepts relates to each other and fills a specific need for how to handle configuration management.<br>

Now, let's take a look at each of these concepts individually, give them a clear concise definition so that we know what we're working with and to take a look at some examples so that in the next chapter when we write our first playbook we at least have enough of an idea of why we're working on each step.<br>

The first concept is modules.<br>

Modules are code provided by Ansible.<br>

Now, they're typically written in Python and in fact, the core Ansible modules that are provided with the Ansible project when you install it, are all written in Python.<br>

So, more advanced topic but they don't actually need to be in Python.<br>

You could write a module in Java or a module in JavaScript, or Ruby.<br>

There's a standard way to call Ansible modules.<br>

But for our purposes in the introduction just think about this as Python code that Ansible provides you to perform a specific action you want to take.<br>

What do we mean by specific action?<br>

Well, for example, if we want to clone a Git repository.<br>

For working on a web application we want to clone it to our development server where we're going to do a dev deployment.<br>

There is a Git module for handling that task.<br>

If we want to enable an operating system's firewall and configure various settings on it there's a module for working with firewalls in various operating systems.<br>

If we want to send notifications let's say, e-mail or text message, or Slack message there are notification modules.<br>

The creators and maintainers of the Ansible project have done a fantastic job of providing modules for almost any purpose you can think of.<br>

And as you can imagine, that's a whole lot of modules.<br>

So, if we look at the Ansible modules documentation it's listed by category.<br>

It can get overwhelming very quickly.<br>

And as I mentioned, there's notification modules.<br>

So, if you click on notification modules in the Ansible documentation it's going to list them all out for you.<br>

If you're ambitious enough to list all of the modules you will be inundated with everything that comes with Ansible.<br>

However, I would say this is a really bad place to start.<br>

I have never used all of these modules as we scroll through we're barely past the modules that come with Ansible that start with C.<br>

I strongly recommend against trying to memorize all the modules.<br>

Instead, throughout the course we'll introduce the most important modules and then you can branch out from there depending on how your deployment needs and your configuration management needs require various modules.<br>

At least you know you can search for all modules on the all modules page.<br>

For know, let's say we want to accomplish an action.<br>

Typically, what I do is go to the specific module.<br>

If we want to send a message in Slack/Slack notification we can click on the Slack module it'll give us any required parameters and it will give us examples.<br>

This is typically where I copy and paste start playing with the module that I've never used before and start using it.<br>

We'll cover how to use the modules when we go over tasks.<br>

But I have to commend the Ansible core developers and contributors for all the great documentation that they provide.<br>

I strongly recommend keeping the documentation up so we know that when we want to accomplish an action in Ansible there's a documentation list by category.<br>

There is also the code on GitHub.<br>

Everything about Ansible is open source and reading the source code is really helpful when you're working with modules.<br>

So, on GitHub, github.com/ansible/ansible we've got the code for Ansible and you're going to want to look under lib/ansible and this is where the modules directory lies and all of the modules underneath.<br>

So, when we took a look at the notification modules if we click on notification this is where the source code to each one of the modules lives if we look at slack.py, we can reference the source code for sending messages to Slack.<br>

So, we've got Ansible documentation so as we start writing our first playbooks we're going to want to accomplish one or more actions we'll use the module's documentation and the module's source code how to use each module properly and philosophically, Ansible modules work well because they abstract all the low-level complexity the previous automation systems like Bash Shell scripts and have really hard time of containing.<br>

One of my first jobs out of college before there were any configuration management systems we used Bash scripts to automate our whole deployment and those scripts were littered with conditional statements if we're deploying to dev, take these steps if we're deploying to staging, to test, to production for deploying one version or another version Ansible modules work well because they abstract that low-level complexity.<br>

So we know where to look when we want to use a module but how do we actually use them?<br>

They are invoked by writing Ansible tasks.<br>

So, let's take a look at how to create our tasks.

|

|

|

show

|

2:09 |

Tasks are Ansible's building blocks for accomplishing your objective.<br>

Tasks are written in YAML and they are in the instructions that invoke Ansible modules to execute an action.<br>

We took a look at how these concepts related in the first video and we saw that there are modules for all sorts of actions we want to take like working with Git or installing and configuring Redis and we needed a mechanism to specify how to call those modules.<br>

That's where tasks fit in.<br>

Here's what a task looks like.<br>

We start out with a - and then a space and keyword name.<br>

And we start with a human readable name.<br>

Think of this as a comment.<br>

Technically you don't have to start your Ansible tasks with name but as these tasks are running having a comment on every single one to explain what it's doing and why is really useful.<br>

So the first line that we start out with on the task is a name what this task is doing, and why.<br>

In this case, we're making sure that Git is installed on the system as this can do one of two things.<br>

First, it's going to check if Git is installed.<br>

If it's installed, it's going to say hey, we're good.<br>

Move on to the next task.<br>

If it's not installed, it will handle installing it for you.<br>

It does this by using the apt module.<br>

So assuming we're on an Ubuntu system or a Debian based installation that has the aptitude package manager we're using the apt module and we're passing it three parameters.<br>

The first is the name of the package that we want to install.<br>

That would be git-core.<br>

The state that we want it to be in.<br>

In our case, we want the git-core package to be installed.<br>

If we said state=absent that would mean we do not want the git-core package to be installed and it would remove the package if it was installed.<br>

And the third parameter that we're passing in is to update the cache.<br>

The cache contains a list of all the packages that can be installed and their version numbers.<br>

And we always want to grab the very latest version of the git-core package.<br>

So this ensures that the cache is updated equivalent to running the sudo apt update command before we check to see if git init is installed and install it.<br>

And finally, become true specifies we want to use our super user privileges because apt requires super user privileges to install our package.<br>

That's what a task looks like written in YAML and there's two ways we can execute this.<br>

The most common is going to be in a playbook where you have a bunch of tasks grouped together.<br>

And the other way is we can run ad-hoc commands.<br>

So let's run a couple of those just so we can get comfortable with how to invoke modules using tasks.

|

|

|

show

|

2:34 |

Let's use this example Ansible task and run it using the Ansible Ad-Hoc mode.<br>

Move over into your Mac or Linux environment where you're planning to work with Ansible.<br>

First we're going to test and were going to see that Git is not installed on our system.<br>

We do want to use Ansible to install it.<br>

Remember that you need to keep your virtualenv activated.<br>

So, use the source command point it to your virtualenv, then activate.<br>

You can use the pip freeze command to make sure that Ansible is actually installed.<br>

In this case, yes it is we're using version 2.6.2 Now let's run a couple of Ad-Hoc commands.<br>

We're just going to run these against our localhost system.<br>

And the first one is just to gather the facts.<br>

Now what do we mean by facts.<br>

A fact is a piece of data that Ansible gathers on the system before working with it.<br>

Ansible gathers a wide range of information.<br>

So that it knows how to take the actions specified in an Ad-Hoc command or in a Playbook.<br>

And we can see all the facts that Ansible gathers.<br>

Use this command: ansible localhost -m setup.<br>

This isn't going to apply any action.<br>

It will only gather the facts about our system.<br>

Now I put in localhost as a placeholder.<br>

We don't have a hosts file.<br>

Ansible will tell us that you couldn't find a host file.<br>

But it will gather the facts for our localhost system.<br>

When you execute that command, you're going to see a whole bunch of information about your current system.<br>

Ansible will do this every time it runs.<br>

For our next Ad-Hoc command type in ansible localhost as we just did, in the previous command and this time with -m specify the A-P-T apt module.<br>

Specify -a and pass in, name=git-core state=present, update_cache=yes specify -b, which explicitly states we wana use our superuser privileges in order to execute this command since we're installing a system wide package.<br>

Specify -K, which will force Ansible to ask you for your superuser password and then one final argument, which is -e where we set an additional variable which is the ansible_Python_interpreter=/usr/bin/Python3 explicitly set as /usr/bin/Python3.<br>

In this case, this is to prevent Ansible from using the default Python 2 installation which does not have Ansible installed.<br>

Once you've got all that typed in, go ahead and hit execute.<br>

Now we're good to go.<br>

We have Git installed, we can use it on our system.<br>

So we just ran a couple of Ansible Ad-Hoc tasks.<br>

For the remainder of these videos we're going to run tasks through Playbooks.<br>

That's how you can run individual tasks if you want to test out a module or just do something quick from the command line.

|

|

|

show

|

2:18 |

Ansible Roles are another core concept that are closely related to Tasks.<br>

Tasks, which we just went over are an atomic unit of instructing Ansible to take some action.<br>

Roles are a way of taking those grouped Tasks and any corresponding variables and using an Anisble-defined, file naming convention and directory structure convention.<br>

So that those grouped Tasks can be reused for more than one server or as part of more than one playbook.<br>

It's much easier to understand this with an example.<br>

In the following directory structure we have two Roles: Common and Web Server.<br>

As well as two variable files: All and Web Server.<br>

The first Role, common, has two files that specify Tasks.<br>

main.yml and git.yml that are stored under the tasks directory of the common directory.<br>

There are also other subdirectories such as handlers and templates that we'll take a look at in future videos.<br>

The subdirectory you'll most frequently use as a part of Roles, is the tasks subdirectory.<br>

Ansible defines that the directories contained within the roles directory are the names of your Roles.<br>

And the directories, and the files within a Role directory such as common or webserver are accessible when you run the Ansible commands that understand this directory and file grouping structure.<br>

In a couple of videos when we run our Ansible commands you'll see that Ansible will automatically pick up Common and the Tasks within it.<br>

The second Role, webserver uses the files, with variables in them stored under the group_vars directory.<br>

Both all and webserver.<br>

And it also has multiple YAML files with Tasks stored within those YAML files.<br>

Those tasks can reference the variables stored under the group_vars directory.<br>

So why would we want to use roles?<br>

The most important part is that they make what we're creating, reusable.<br>

Rather than just having a one-off bash script to handle the configuration of web server we can reuse a web server configuration across many servers and many playbooks.<br>

And when we want to make some changes whether large or small Roles make it easy for us to re-run those Tasks and iterate on our configuration.<br>

Once we get into the meat of creating our playbooks you'll understand how important Roles are.<br>

And if you don't use Roles you're really killing power of Ansible's implicit runtime configuration.<br>

Ansible defines a file and directory structure for Roles to make things easier on the developer.<br>

That structure will become second nature to you after you create a few playbooks.

|

|

|

show

|

1:18 |

Playbooks are the highest level concept in Ansible and playbook is likely the most frequent term that you will hear used when people are talking about Ansible.<br>

Playbooks are the top level collection and every playbook contains one or more roles typically many tasks within those roles associated variables and all the other information necessary for execution such as which servers are we going to execute this playbook against.<br>

Playbooks are run by using the ansible-playbook command.<br>

Just as we had roles to group related tasks together to accomplish various actions we also needed a way to organize and group the tasks, roles and variables.<br>

And that is what a playbook is.<br>

A simplified example of a playbook would look like this, with the following file names and directory structures.<br>

As we saw in the roles video in this case we have two roles common and webserver.<br>

We also have a couple of new files.<br>

The first one, deployment.yaml is the playbook instruction file we'll reference when we use the Ansible playbook command.<br>

Deployment.yaml would contain references to the groups of servers that we'll run our roles on.<br>

So deployment.yaml contains the bridge between our roles and the servers that we want Ansible to manage or handle a deployment on.<br>

There's also a hosts file.<br>

The hosts file contains the list of servers that we want to execute our playbook against.<br>

Hosts is also known as the inventory file which we'll discuss next.

|

|

|

show

|

1:37 |

Let's talk about what servers Ansible knows how to execute your playbooks against.<br>

Inventory is a core Ansible concept where a file specifies all of the servers that you're going to run your playbook against and it's typically grouped by role.<br>

There's a default location for the inventory file which is, by convention, named hosts and Ansible will look for that in the Ansible subdirectory of the etc system directory.<br>

However, I always recommend that you set which inventory file you want to run against with the -i flag.<br>

The -i flag is useful for two reasons: one, if it can't find your inventory file it'll stop and give you an error message so you know you've immediately done something wrong rather than it work off of the default inventory file.<br>

That might be the one that you want to use.<br>

And second, getting comfortable with the -i flag will allow you to use different inventory files for development, staging, local, production however many environments that you have.<br>

Let's take a look at a playbook example with a single inventory file.<br>

We already went over the structure of how a playbook would look with our roles directory in group_vars/ and our playbook file deployment.YAML.<br>

We would also have a host file.<br>

And the host file would look something like this.<br>

We'd have the name of our roles so we had web server and common as two of our roles.<br>

And we might have other roles listed as well.<br>

We can list out one or more servers.<br>

And servers can also be listed many times under different roles.<br>

So for example, 192.168.1.1 is listed under both web server and common so both web server and common roles will be applied against that server.<br>

So the Ansible inventory shouldn't feel like a complicated subject.<br>

It's really just a list of the servers that we're going to be working with explicitly specified in your host file.

|

|

|

show

|

1:56 |

YAML is a recursive acronym that stands for YAML Ain't Markup Language.<br>

That's probably just going to be trivia for you hardly anyone ever says anything other than YAML when referring to this markup style.<br>

This is the last of the core concepts we're going to introduce for now so that we can get started writing our playbooks and getting comfortable actually using Ansible.<br>

There will be other concepts that will be introduced along the way but this is the last of them that we need in order to get started because these are the key words we're going to be using constantly to describe what we're doing.<br>

We've already seen an example of an Ansible task written in YAML Here's what it looks like again.<br>

Let's highlight the important parts when it comes to the YAML format versus the task itself.<br>

Now, on the first line, we have a hyphen and then a space and then a key.<br>

The key in this case is name with a colon after it and then a value and the value in this case is ensure git is installed.<br>

And thinking in key-value pairs is basically the core idea with YAML.<br>

Even on this next line, which looks more complicated the key is apt, and the value itself is just three key-value pairs.<br>

The key of name is equal to git-core that's the name of the package that we want to install.<br>

The key of state and the value of present indicating that we want the get core package to be installed.<br>

The key of update_cache and the value of yes which tells apt that we want to update the versions package in the package manager before we install anything.<br>

And then, on this last line, become true key of become and the value of true indicating that we want to use our super user privileges in order to execute this task.<br>

One thing to note is that spacing is required for the syntax.<br>

It's not just to make our tasks easier to read which it certainly does.<br>

There are two spaces before apt.<br>

There are two spaces before become.<br>

The spacing indicates that the second and third lines are grouped together with the first line.<br>

If you were to indent or remove the indentation from the second or third lines it would no longer be valid syntax for Ansible.<br>

So that's what YAML looks like.<br>

That's why the spacing is as you see it in this example.<br>

And we're going to be writing a whole lot of YAML in all of our playbooks to configure servers and play applications.

|

|

|

show

|

0:42 |

We just covered the core concepts for Ansible that we need to know before we can start writing our playbooks and using Ansible for configuration management.<br>

We took a look at how these concepts relate to each other and how we would take a look at the documentation to see which modules are available for the task that we want to perform.<br>

We'd right tasks in YAML and we'd group these tasks by roles and each of those roles and all the tasks would be put together into a reusable playbook that would use the inventory file which is typically named hosts and the inventory file would have corresponding roles.<br>

They would group the servers we're going to do work on.<br>

Now there will be other concepts that we'll introduce along the way.<br>

These are the ones that we needed to get started.<br>

Come back to these videos and use them as a refresher as we're working through our playbooks in the next few chapters.

|

|

|

|

18:26 |

|

|

show

|

1:01 |

Time to match those Ansible concepts with a specific example, and we're going to write our first playbook together.<br>

This playbook will contain several directories and files and here's the structure we're going to create.<br>

I'll have a project directory named first playbook.<br>

We're going to have an inventory file named host that'll contain the list of IP addresses.<br>

In our case, only a single IP address for server that we want to work with.<br>

We'll have out top level playbook.yml file that will tell Ansible what users should be executing which tasks mapped to the servers.<br>

It's specified in the host file.<br>

We'll have a directory and a few subdirectories roles/common/tasks that will contain YAML files with the tasks that we want to run against the server specified in the inventory file.<br>

main.yml is the entry point for a role so we'll include other files in main.yml like ping.yml and other files that we'll create along the way with tasks in them.<br>

So, we'll build up main.yml by including other YAML files as we go along.<br>

That's the overall structure of the directories and the files we're going to be creating in the next few videos and the purpose of these should become apparent as we start writing our files and then running them against our server.

|

|

|

show

|

1:49 |

Now that we're ready to write our Playbook switch over into your development environment.<br>

I'll be writing this code on Ubuntu 18.04.<br>

If you're working on a Mac you should be able to follow along with the same commands.<br>

And again, unfortunately on Windows, Ansible is not set up to run on Windows as a command machine.<br>

You can control Windows machines from Ansible but executing Ansible Playbooks really doesn't work even for basic purposes on Windows.<br>

First thing we're going to want to do is create a directory for our first Playbook.<br>

Switch into that directory and, of course, it'll be empty.<br>

We need to write a first YAML file.<br>

Throughout these videos, I'm going to be using Vim but if you're more comfortable in Sublime Text or another editor, feel free to use that instead.<br>

Start out with a little note and you can put whatever comments to yourself that'll help you remember what this file is for.<br>

It just is, these are high-level instructions that tell Ansible which tasks to apply to which hosts.<br>

Now we're going to write some YAML.<br>

This YAML instructs Ansible to take every host in the inventory file that we'll write.<br>

We're going to use the root user which, typically, we would not do when we configure a server.<br>

We'll learn how to lock down a server later.<br>

But, for simplicity's sake we're going to use the root user on the server that we provision.<br>

We're only going to have a single role and that will be common.<br>

So what we've said here in this Playbook with high-level instructions, take every host we're only going to have a single host on that hose, use the root user and apply the common role to all those hosts.<br>

Here's how we know which host to apply it to.<br>

Create a host file.<br>

In the host file, specify the role named common.<br>

Now we need to say which server we're going to apply this to.<br>

And we need the IP address, but we don't have a server yet.<br>

So let's go to DigitalOcean and provision a server and then we'll know what to put in here.

|

|

|

show

|

1:08 |

To know what to fill in here we need to provision a server.<br>

The easiest way to do that is to use a hosting company.<br>

Switch over into your web browser and if you've already got a hosting company that you use, that's awesome.<br>

I've been using DigitalOcean for a few years now and I found it really easy, and they have a few features like uploading a public key to lock down your server that are super helpful and we're going to actually use in these videos.<br>

Not all the hosting providers use that but you can go to the following URL that's do.co/fullstackPython, and this will give you $100 credit so that you don't have to pay anything in order to use DigitalOcean servers.<br>

So throughout these videos, you shouldn't have to spend any money on servers as long as you use that promo code.<br>

So you should see a screen like this which will just ask you for an email address and password.<br>

And then you just need to confirm your email address.<br>

Now as soon as you click on the link in the email it'll change the screen, and then you'll be prompted for some billing information.<br>

Pop that in.<br>

This helps DigitalOcean protect against or spambots.<br>

You won't actually get charged.<br>

There'll be a temporary authorization on your card to make sure it's valid, and then we can proceed to provisioning our server.

|

|

|

show

|

1:33 |

First time you sign up for DigitalOcean you're going to see this screen, which is for onboarding.<br>

So let's give this a first project name.<br>

If you have a project name you want to punch in there, go for it, otherwise just call this Ansible Test.<br>

And they're going to ask you some basic information about why you're using DigitalOcean.<br>

I typically put development and, of course, select Ansible.<br>

Feel free to check any of these boxes that are related to what you're working on.<br>

My usual stack is Python, use GitHub Django, or Flask, some Redis, Postgres, Nginx and of course, I always use Twilio in my projects.<br>

And then when you're ready, hit the start button.<br>

So DigitalOcean has a bunch of concepts such as projects, that allow you to organize your servers by application.<br>

We're not going to be using any of that right now.<br>

We just need a single droplet so you can go ahead and click okay and get started with a droplet.<br>

Our case, we're going to bump up the version to 18.04, we want the latest Ubuntu LTS release.<br>

And one gigabyte of memory is plenty for us.<br>

Of course, you got $100 free credit on the account so feel free to bump up to a bigger server.<br>

Especially if you're using DigitalOcean for most Python projects, getting started with a one gigabyte server is usually enough.<br>

So for me, I usually switch the data center region to New York one, just 'cause I'm on the East Coast.<br>

And then this is crucial, we want to add an SSH key.<br>

Now if you created one earlier, awesome.<br>

We can add that here.<br>

If not, let's walk through how to create an SSH key.

|

|

|

show

|

2:24 |

So we want to create an SSH key so, when you click "new SSH key" what they're looking for is the public key.<br>

So this was on earlier videos every SSH key pair has a public key you can share that with whoever and you've got your private key which you never want to share with anyone and your private key is what authenticates you instead of a password, or together with a password.<br>

So switch back over into the command line and we'll save this host file for now.<br>

Reuse the ssh-keygen command, so RSA key we want it to be 4096 bits and then depending on your operating system version it's good to try out "-o" flag.<br>

And that's a lowercase "o".<br>

And some versions of ssh-keygen don't have the "-o" the -o indicates that ssh-keygen should save it in an OpenSSH format that is more secure.<br>

There were previous versions of Ubuntu and other Linux distributions that were saving SSH keys in more insecure formats, so try the "-o".<br>

If it doesn't work for you, remove that from the command and then specify your email address, with "-C".<br>

We'll save it as "first playbook" and we won't use a passphrase.<br>

Okay, so now we've got first playbook and first playbook pub, we want to use "first_playbook.pub" copy and paste this over into digital ocean.<br>

Paste that in as long as there's no error message, should be good if there is an error message that may indicate that you've pasted in your private key by accident.<br>

Click "Add SSH key", and that'll actually be saved for future use whenever you create a new droplet.<br>

All right, scroll down, and if you want to, optionally you can change the host name.<br>

I usually like to change it to something a little simpler and go ahead and click "create".<br>

Now, it might take a few minutes but the droplet will be revisioned and then we'll be able to click into it and get our IP address.<br>

Go ahead and copy that, if you just click on the IP Address it should copy it.<br>

Back over into our inventory file and paste in that IP address.

|

|

|

show

|

4:11 |

Okay so we've now got our SSH key we've got a server provisions we have a hosts file and the beginning of our playbook.<br>

But our playbook needs tasks that are stored in roles in order to have something to do.<br>

So right now we have the following files: we got our private key public key, host file, playbook.yml.<br>

We're going to create a directory within our project directory.<br>

We'll call this roles and we'll store every single role that we create for this playbook.<br>

Now in this playbook we're only going to have a single role and we'll just call that common.<br>

So when you go into the roles directory create another role called common.<br>

And common is typically used for roles that cut across every single on of your servers.<br>

You'll usually want to give your roles more descriptive names like web server or data base server.<br>

When there expected that there only going to apply to certain types of servers.<br>

But I typically use common when I know a task is going to apply to every single server that I want to use that playbook for.<br>

All right so within roles/common create a directory named tasks.<br>

And this will store our yml files with each of our tasks.<br>

Now there is a specific file naming convention we need to use here.<br>

And that is, there needs to be a main.yml file so that Ansible automatically picks up what tasks need to be executed.<br>

Think of main.yml as the starting place for the role.<br>

So create a new file named main.yml and we can give this just a brief comment.<br>

And then we're going to import other files that have individual tasks.<br>

Unless your role is only going to execute one or two tasks you're typically going to want to split out your tasks into multiple files.<br>

The way that you'll handle that is with the include syntax.<br>

In our case, we're just going to have our first file be ping.yml.<br>

So that's it for main.yml so far.<br>

Only one usable line, that is the include line for ping.yml.<br>

But of course we need to create ping.yml So create a new file named ping.yml And the ping command just tries to connect to a remote server and sees if its operational and we can actually connect to it and its responding to us.<br>

And this is going to ping whatever servers that we have listed in our inventory file.<br>

And save ping.yml and we're going to try and run this playbook.<br>

Now you can't run it from within the task directory.<br>

So go back into the base directory for first playbook.<br>

Should be at the top level directory of the project directory.<br>

Now we're going to try to run our playbook.<br>

The way we do this is with the ansible-playbook command.<br>

So if you just try to type in ansible-playbook it'll tell you all the options the arguments we can pass in.<br>

We are going to pass in a few arguments.<br>

We'll specify ansible-playbook and then an inventory file.<br>

That inventory file is the one that's in our current directory and that's hosts.<br>

We're going to specify a certain private key and that is first_playbook.<br>

And then we have our playbook.yml file.<br>

Question is whether this will work just the first time we try to execute it with the single ping command that we've put in place.<br>

And unfortunately it failed on the first run.<br>

Now I actually wanted to show this error because this is something that will pop up frequently if you are working with new servers.<br>

And here's what's happening if you see this issue where there was a module failure specifically, you see this particular key which is usr/bin/Python not found.<br>

What's happening here is that there is no Python version 2 installed on that remote server.<br>

So Ansible is trying to execute commands on a remote server using the Python command but only Python 3 is installed not Python 2.<br>

So we need to tell Ansible don't try to use Python 2 we explicitly want to use Python 3 on remote servers or on specific remote servers that we would specify in our hosts file.<br>

So here's how we do that and how we can fix this problem.<br>

Open up the hosts file and right next to the IP address were going to specify the particular location and file name of the Python interpreter that we want to use on that server.<br>

So by default it was trying usr/bin/Python but we want to specify ansible_Python_interpreter=/usr/bin/Python3.<br>

Save that file.<br>

And lets give our playbook a try again.<br>

Okay this time it was able to execute using Python 3 on the remote server and it looks like everything ran properly.<br>

We were able to ping that remote server.<br>

And this is a good start to our Ansible playbook.

|

|

|

show

|

1:51 |

Ansible told us everything went well when we ran our playbook, after we added the explicit instruction to use the Python 3 interpreter.<br>

What if we want even more output?<br>

Surely there's a lot more happening under the covers, than just a few lines of, okay this was done.<br>

If you're running into an error or you just want to see very detailed information when your playbook is running.<br>

Use the -vvv argument.<br>

This is for the most verbose output.<br>

Now instead of just a few lines of output we're going to see a whole lot more this time.<br>

We got a whole lot of information about the connection, the specific versions of OpenSSH that were used.<br>

Which modules, that output really flew by so one thing you can do, is you can also redirect the output from standard out which is currently sent to your console, into a file.<br>

So if you want to save the output somewhere the way you can do that, is rerun your command.<br>

When you're running your command, redirect the output to a file named, for example ansible.out and when it's finished executing be able to open up that file.<br>

Can open up in our text editor, or we can use the typical Linux commands like grep.<br>

We already saw the four Vs for the most verbose output but there is a middle ground where we can see more information about what Ansible is doing without getting too overwhelmed with what's out there.<br>

And in that case, you can use one -v, two -vv three -vvv, or obviously we already saw four -vvvv So if you take a look at two -vv when we execute our playbook, we see more information about what Python version we're using and which files are being included in our playbook.<br>

So two -vv can be a nice middle ground between getting overwhelmed with all the output and not really being able to see enough.<br>

So as you're working with Ansible if you're running into errors, use fully verbose output, and as you get more comfortable with Ansible, figure out what level of verbosity you're most comfortable with.

|

|

|

show

|

3:34 |

Our playbook isn't doing much at this point so let's enhance it so it's executing useful commands on the remote server.<br>

We have a fresh server where we're logging in via a root user.<br>

Typically, that's not a good safety practice.<br>

Let's create a new user vr_playbook and we'll log in to our server using that new user.<br>

Go into the roles Directory, common/tasks and we're going to modify our main.yml file.<br>

We'll include a second file named new_user, and save that.<br>

Now we'll create the new_user.yml file and we're going to write three tasks.<br>

But first, we'll create a new group, the non-root group then we'll create a user and we'll add it to that group that we just created.<br>

And then third, we'll add a public key for this new user so that we can log in.<br>

For our first task, let's create a non-root user and do that with the group module and the name of this will just be deployers.<br>

In our next task, we'll add the deployer user to the deployers group.<br>

And we want this to exist so the state will be present.<br>

Now if we write re-run our playbook multiple times Ansible will check to see if deployers has already been created.<br>

If so, it will simply skip over the step.<br>

Or if later, we change the state from present to absent it will remove the Deployers group.<br>

In our case, we want it to be present.<br>

The second task is to create a non-root user.<br>

We will use User Module for this task.<br>

We need to call the new user deployer place into the deployers group that we just created and we can set things like the default shell otherwise it's just going to default to sh the old school shell rather than Bash.<br>

And we want the state of this user to be present.<br>

Okay, one more task and then we can try to run this.<br>

We're going to use our public key and when someone is trying to log into this deployer user that they need the private key that matches this public key.<br>

And we'll use the authorized key module to add to the deployer user and at present, we want an authorized key to exist.<br>

And this will be slightly trickier.<br>

What we want is, we want the contents of the public key to be saved in the authorized key file.<br>

So we're going to use some more advanced Jinja which is the templating engine, Jinja syntax to look up the contents of a file and that file in my case is stored under /home/matt/first_playbook/first_playbook.pub In your case, that's going to be wherever you saved the public key for your first_playbook wherever your working project directory is.<br>

So this value instructs Ansible to go look up the contents of the file for first_playbook.pub and we want to save that in the authorized key on the remote server.<br>

All right, I'm going to save this file.<br>

Now let's give our playbook a try.<br>

Move back up to the top level directory.<br>

Looks like everything looks good with these three new tasks.<br>

And now we should be able to log in on that remote server with our new Deployer user.<br>

Let's test this out.<br>

With SSH, first_playbook, we'll use our private key to deployer@, and then you're going type in the IP address of your remote server.<br>

And now everything looks good.<br>

We didn't need a password because we had our private key.<br>

And now we're logged on to our remote server using the new user we just created with our playbook.

|

|

|

show

|

0:55 |