
Hello and welcome to Full Web Applications with FastAPI.

Applications: that's the key word for what we're gonna build in this course.

FastAPI, well, it has API right in the name, and it's really, really good at building web APIs.

But, as you will learn during this course, it's also really, really good at building web applications.

Many of the cool, modern features of FastAPI can, with a very small amount of effort, be applied just as well to web applications, those that generate HTML for browsers and humans, as to APIs that return JSON, which is intended for computers and computer software to exchange data with each other.

So let's start with this really important question here.

Should you be focused on building an API or a web app?

With an API, we're going to build applications that exchange JSON. They deeply leverage HTTP verbs like GET, PUT, POST, DELETE, and so on, and they exchange data that is generally unseen.

Just a little progress bar spinning in your app, and then data appears. Or do we want to build some kind of web application?

Users go somewhere on the Internet, type in an address, and show up on your page, and they've got really cool dynamic HTML.

They can maybe create an account.

They log in, they interact through their web browser.

Now, this is a challenge that basically everyone has to deal with if they're building a rich application, say a mobile app, along with a website that corresponds to it.

So often this is presented as APIs versus web apps.

Maybe I want to use an API framework like FastAPI so that I have a great way to build APIs, and then go find another way to build the web app. Or maybe we've already got our web app.

How do we add an API to it?

Do we have to completely start over?

Good news: what you're going to see in this class is that it's not "or" or "vs." or anything like that.

No, it's "together".

I will show you how to build APIs in FastAPI and then mostly we will focus on building fantastic web applications.

We have another course that focuses purely on building the APIs, but the interesting point here is how do I take this really powerful and modern API framework and allow it to also serve web applications, web UIs out of the exact same data models, out of the exact same code base?

So you don't have two things to deploy, two things to manage, two things to run.

It's not like, well, let's use FastAPI for the APIs and then completely start over and use Django for your web app.

No, it's FastAPI through and through, and you're gonna be able to blend them together within the same application, with the same versioning, the same deployment, everything.

What comes out is one of the best web frameworks, not just API frameworks, that you can use for modern Python web applications.

You're gonna see how to unlock that power in this course.


Now let's compare FastAPI to some of the other popular Python options that you might choose.

Here's FastAPI's home page, its documentation, and so on.

The documentation is really thorough and really good.

So what else might you use instead of FastAPI?

Well, there's what I think of as the big two: Django and Flask.

These two combined represent about 80 to 82% of the current Python web application framework mindshare, deployments and so on, especially looking at stuff that's been built recently.

Between them, they are the two ways that people often think about web applications in Python.

Django has somewhat larger building blocks that you click together to build your app, whereas Flask is all about its microframework lifestyle.

You get to pick every little thing and there's zero help for you.

You want a database?

Okay, go get a database.

You want to talk to it?

OK, go figure that out.

Right?

Do you decide on SQLAlchemy? Do you decide on MongoDB?

Do you?

What do you do?

So it's all about picking the little pieces and putting them together however you like in Flask.

We also have Pyramid and Tornado.

I'm a fan of Pyramid, I like that framework quite a bit.

It's very fast. Tornado is one of the very first asynchronous-style frameworks.

At least in the early days, it didn't use the async and await style of programming that's popular today, because it actually existed before that style of programming. But these are two good options.

And if you're looking at just APIs, there are frameworks like Hug or Django REST Framework and so on that you might consider.

So which way are you leaning?

Mostly APIs that also need a little web, or mostly web that may also need an API?

So if we compare these, you might say, well, how does this compare to FastAPI?

One way to think about it is popularity.

We're gonna talk about the features that make FastAPI awesome as well.

But one is popularity.

If you look at Django and Flask, they're quite popular.

54k and 53k stars on GitHub.

This represents their very nearly equal split in the mindshare.

We have Pyramid at 3k.

Tornado at 20k, Hug at 6k.

What's FastAPI at?

26k.

Now wait.

Maybe it's not as good as Flask or Django.

Here's the thing.

Django is like 10, 15 years old.

At this point, FastAPI is less than two years old.

Django has had all this time to build up a following of people who are part of it, and FastAPI is really, really coming on strong.

As far as I can tell, it is the most popular, relatively new framework, and being relatively new is an advantage.

It means that it supports async and await right out of the box without jumping through a bunch of hoops.

It does really powerful things with type annotations.

It works with Pydantic data models that do automatic conversion and validation and on and on and on.

So because it's new, it's awesome.

It has all the modern Python features you would hope for, and you can see it's already ahead of several of these older frameworks and closing in on the two most popular ones in the Python web landscape.


Briefly, let's dive into the big ideas that we're going to cover in this course, so you get a sense of what you're gonna learn throughout the whole adventure that we're about to embark upon.

We're going to start by building our first FastAPI site and at this level it's just gonna actually be an API that exchanges JSON with some data that we compute in our API endpoint.

So we'll see what it takes to go from an empty Python file to something we can interact with, running on the Internet, or at least on our local server, which could just as well be on the Internet.

So we're gonna start out and see what is the essence.

What are the few moving parts that we must have for a FastAPI site? Then we're going to move on to the goal of this course: serving HTML.

Take this cool API: how do we also let it handle the HTML, the web application, the interactive browser side of what you also need to build?

Sure, you could build some APIs and those are great.

But in addition to that, how do you also build your web application?

So that's what this next section is gonna be all about.

We're going to see how we can return, first of all, basic HTML, and then how we can use a template language like Jinja or Chameleon to create dynamic templates that actually generate the HTML.
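As a taste of what a template language does, here's a minimal sketch using Jinja2 on its own. This standalone usage and the `render_packages` helper are illustrations, not the course's FastAPI integration code.

```python
import jinja2

# {{ ... }} inserts values; {% ... %} runs logic such as loops.
TEMPLATE = (
    "<h1>{{ title }}</h1>\n"
    "<ul>\n"
    "{% for package in packages %}<li>{{ package }}</li>\n{% endfor %}"
    "</ul>\n"
)

# autoescape=True escapes inserted values so data can't inject HTML.
env = jinja2.Environment(autoescape=True)
template = env.from_string(TEMPLATE)


def render_packages(title, packages):
    # Produce an HTML string from the template and the given data.
    return template.render(title=title, packages=packages)


print(render_packages("Top packages", ["fastapi", "uvicorn"]))
```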

We're going to talk about this idea of view models.

If you're familiar with FastAPI, you may have heard of Pydantic. Pydantic models are a really cool way to exchange and validate data at the API level.

They're great, and I absolutely adore that technology, but it doesn't make sense for working with HTML, as you'll see when I talk about why that's the case.

But it turns out that a similar but not exactly the same design pattern is what's gonna make the most sense here.

So we'll use that pattern to cleanly separate the HTML, the validation and data exchange, and the actual logic of our web app.
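Here's a rough sketch of the view-model idea under my own assumptions: a plain class that gathers a form's data, validates it, and hands a dict to the template. The class name, fields, and rules are hypothetical. One plausible reason this fits HTML better than a Pydantic model is that an invalid form should be re-rendered with the user's input and a friendly message, rather than rejected with an automatic error response.

```python
from typing import Optional


class RegisterViewModel:
    """Hypothetical view model for a registration form."""

    def __init__(self, form: dict):
        # Gather raw form values, tolerating missing fields.
        self.name = (form.get("name") or "").strip()
        self.email = (form.get("email") or "").strip().lower()
        self.password = form.get("password") or ""
        self.error: Optional[str] = None

    def validate(self) -> bool:
        # Record a human-readable error instead of raising, so the page
        # can be re-rendered with the user's input still filled in.
        if not self.name:
            self.error = "Your name is required."
        elif "@" not in self.email:
            self.error = "A valid email address is required."
        elif len(self.password) < 5:
            self.error = "Your password must be at least 5 characters."
        return self.error is None

    def to_dict(self) -> dict:
        # Values passed to the template; the password is deliberately left out.
        return {"name": self.name, "email": self.email, "error": self.error}
```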

We're going to work with the database, of course.

So we're gonna use SQLAlchemy to map Python classes to the database and we're gonna do this in two passes.

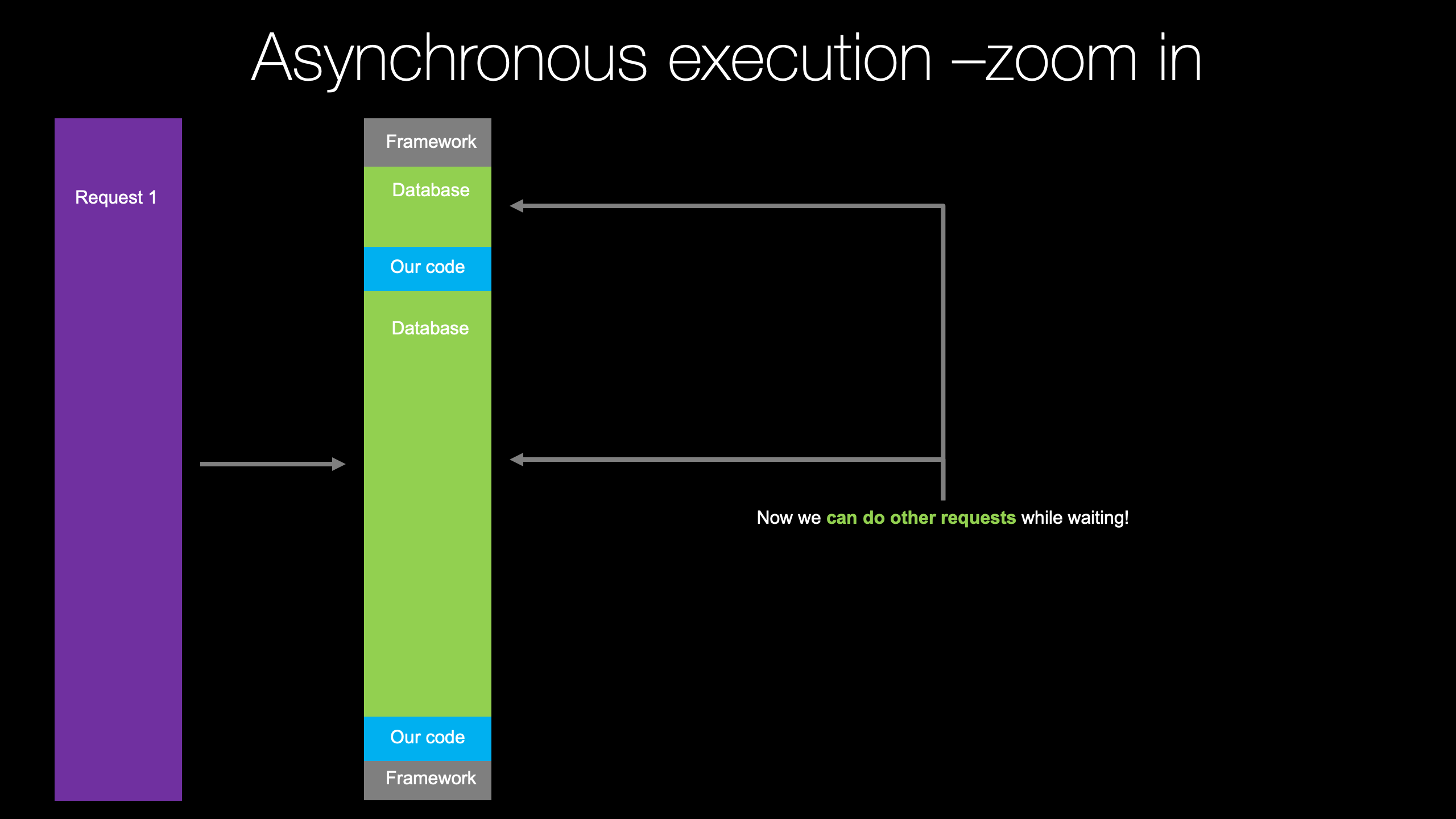

First, we're going to use the traditional SQLAlchemy API, which does not support async and await.

But there's a new API, starting in SQLAlchemy 1.4 and heading toward version 2, that absolutely supports async and await, which is going to be a really important aspect of working with FastAPI and making it fast.

So we'll do this in two passes: start out with the synchronous version that you're probably familiar with, and then upgrade it to this new async version.
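For orientation, here's a minimal sketch of the synchronous style: a mapped class, an engine, and sessions. It uses an in-memory SQLite database and a hypothetical `Package` model, and it assumes SQLAlchemy 1.4 or later (for `orm.declarative_base` and sessions used as context managers). The async version swaps in the engine and session classes from `sqlalchemy.ext.asyncio`, which isn't shown here.

```python
import sqlalchemy as sa
import sqlalchemy.orm as orm

SqlAlchemyBase = orm.declarative_base()


class Package(SqlAlchemyBase):
    # Maps this Python class to a "packages" table.
    __tablename__ = "packages"

    id = sa.Column(sa.String, primary_key=True)
    summary = sa.Column(sa.String, nullable=True)


# In-memory SQLite keeps the example self-contained.
engine = sa.create_engine("sqlite:///:memory:")
SqlAlchemyBase.metadata.create_all(engine)
Session = orm.sessionmaker(bind=engine)


def add_package(name: str, summary: str) -> None:
    # Synchronous: this call blocks until the database finishes.
    with Session() as session:
        session.add(Package(id=name, summary=summary))
        session.commit()


def package_count() -> int:
    # Run SELECT count(*) FROM packages and return the scalar result.
    with Session() as session:
        return session.scalar(sa.select(sa.func.count()).select_from(Package))
```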

And once we have an async database in place, we can now convert our entire web application to run fully asynchronously.

This can mean many times more scalability on the same hardware, because the server isn't stuck waiting while the database does its work.
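The scalability argument comes from not blocking while waiting. Here's a standard-library-only sketch, with `fake_query` as a hypothetical stand-in for an async database call: five 0.1-second waits awaited concurrently finish in roughly 0.1 seconds instead of the 0.5 seconds they'd take one after another.

```python
import asyncio
import time


async def fake_query(query_id: int, delay: float = 0.1) -> int:
    # Simulates waiting on a database; while this coroutine sleeps,
    # the event loop is free to run the others.
    await asyncio.sleep(delay)
    return query_id


async def run_concurrently(count: int) -> list:
    # gather schedules all queries at once and returns results in order.
    return await asyncio.gather(*(fake_query(i) for i in range(count)))


start = time.perf_counter()
results = asyncio.run(run_concurrently(5))
elapsed = time.perf_counter() - start
print(results, f"{elapsed:.2f}s")
```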

We don't have to write software that's as complicated, with different tiers and caching and all these different things.

Our application is gonna be super fast right out of the gate, and finally, once we get this cool app built and running, we're going to put it out on the Internet.

We're gonna actually go out, create an Ubuntu server, and deploy this to the Internet, where people can interact with it through the browser, even over SSL.

These are the major ideas that we're going to cover in this course.

And when we get through all of them, we're gonna have a fantastic web application that you can use as an example for whatever it is you want to build.


What do you need to know to be successful as a student in this course?

What do we assume that you know and we don't go into while we're going through it?

Well, we assume that you pretty much know Python.

You don't have to know all the advanced features of Python.

You don't have to be able to create weird, dynamic meta classes or anything like that.

But basic things like variables, functions, loops, and classes: that's the kind of stuff we expect you to know. We also expect that you have a little bit of familiarity with the web, HTTP, and HTML, because we work with some HTML, some CSS, and some dynamic templates in this course.

So we don't go through and teach you what this part of HTML means or talk about how CSS actually works.

We just use the CSS and use the HTML.

If you're somewhat shaky on it, you're probably OK; you can pick it up along the way, but it's not a focus of this course.

So we do assume that you at least can pick it up on the way or you know it already.

So with those two things in place, you're ready to take this course.


So what application are we gonna build during this course?

If we go over to PyPI and search around, we'll find all sorts of different projects, and maybe we could build one of those.

But no, in fact, what we're gonna build is pypi.org itself.

That's right.

We're gonna create a clone of pypi.org.

This entire site.

Well, much of the site, anyway. We're going to build a UI that looks like this, with data coming back from the database like that.

We're gonna have featured packages like these, and the ability to log in, to register, to have accounts, all that kind of stuff.

So that's the application, the web application that we're gonna build.

We're going to create a clone of pypi.org.

It's a great example because it talks to a database, it has a decent number of pages, it has HTML forms, it has validation, and it has accounts.

Many, many of the things that almost any web application that is meaningfully large or meaningfully real will have.

You wanna build a bookstore, it'll be similar.

You wanna build a forum site, it will be similar.

So I think this is gonna be a great example.

We're all pretty familiar with it from our experience with Python.

It's not too complicated, but it's not too simplistic at the same time.

And we're gonna build an awesome asynchronous version of it with FastAPI.


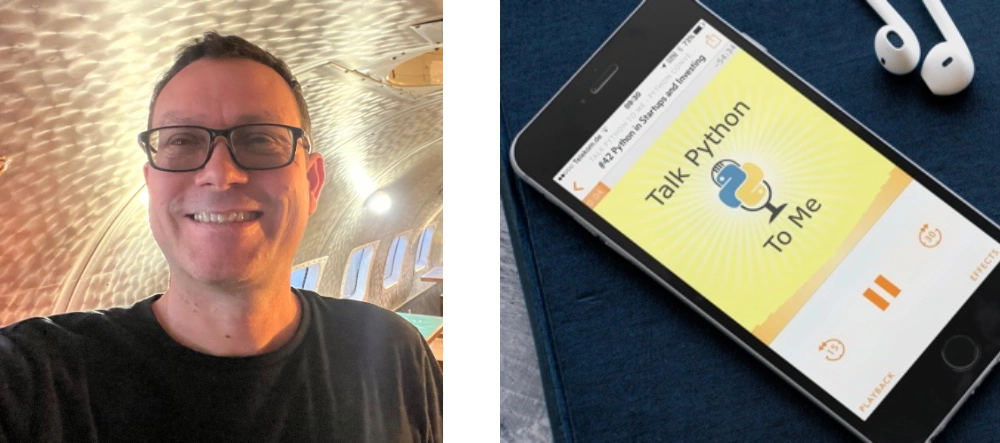

You might be wondering, who is this disembodied voice talking to you?

Well, here I am, Michael.

My name is Michael Kennedy, @mkennedy over on Twitter.

Thanks for taking my course.

I'm really excited to teach it to you.

You might know me from the Talk Python To Me podcast, which I founded and host.

I'm also the co-host of Python Bytes, which I co-founded with Brian Okken.

Maybe even more relevant for this particular situation is I'm also the founder and principal author at Talk Python Training.

I've had exposure to a lot of different kinds of Python, and I'm going to try to bring all that experience to this course and share it with you.


Now, if you're really diving into FastAPI, you might want to take an hour while you're doing the dishes or out for a walk and listen to one of my Talk Python To Me episodes.

In particular, episode 284, where I interviewed Sebastián Ramírez.

He is the creator and maintainer of FastAPI.

He and I had an awesome chat about why he created it, some of the design choices, where some of the inspiration came from, and how to use it.

If you want to go a little bit deeper, feel free to check out this episode where I interviewed the creator of FastAPI about his creation on that show.


Before we start writing code and just jump right into our editor, let's make sure that you have your machine set up and you can follow along, and you can build these applications with the course.

It's really important that you follow along.

So when we do something in the course, at the end of the chapter, stop and go back and add it either to the same application you're building along with me or to a parallel but very similar application.

In this super short chapter, we're just going to make sure that your machine has all the requirements and tools that you need.

The first question is, do you have Python?

And importantly, is it the right version?

FastAPI has a minimum requirement of Python 3.6, and we're also using features like f-strings in our code that require Python 3.6 or later.

So you need 3.6, though we're actually gonna be using a higher version.

But make sure you have at least Python 3.6.

Want to know whether you have Python?

It's a little bit complicated to tell, but here's a couple things we can do.

If you're on Mac or Linux, you can type `python3 -V` and you'll get some kind of answer.

Either Python 3 doesn't exist, in which case you need to go get Python or make sure it's in your path.

Or it might report a higher or lower version, whatever.

You need to make sure that this runs and that you get 3.6 or above. We're actually gonna be using 3.9.1 during this course.

On Windows, it's a little bit less obvious.

There's a few things that make this challenging if you're not totally sure.

So on Windows, what you usually type is `python`, not `python3`, even though you want Python 3.

So you say `python -V`.

If you get output like Python 3.9.1 or whatever, as long as that's 3.6 or above, you're good to go. But here's where it gets tricky: your path might be set up to find, say, Python 2, while you actually have Python 3 on your system, just later in the path definition.

You're going to need to adjust your path or be a little more explicit how you reference that executable.

And here is the super tricky part.

Python is not included with Windows 10.

But there is this shim application whose job is to take you to the Windows Store and help you get Python.

If you don't have Python yet, the shim will still respond to `python -V`, but with no output.

It won't tell you that Python is not actually installed that you need to go to the store and get it.

It will just do nothing.

So if you type `python -V` and nothing happens, that means you don't actually have Python.

You just have the shim, which, if you took away the `-V`, would open the Windows Store for you to get it.

So just make sure you get actual output when you run `python -V`, or follow the instructions coming up on how to get it.
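Once `python -V` prints something, you can also ask the interpreter directly, which sidesteps confusion about which executable comes first on your path. A minimal sketch; `python_version_ok` is a hypothetical helper name, and `MINIMUM` encodes the 3.6 floor this course states.

```python
import sys

MINIMUM = (3, 6)  # minimum version stated for this course


def python_version_ok(minimum=MINIMUM) -> bool:
    # sys.version_info compares element-wise, like a tuple.
    return sys.version_info[:2] >= minimum


print("Interpreter:", sys.executable)  # which executable is actually running
print("Version:", sys.version.split()[0])
print("OK" if python_version_ok() else f"Need Python {MINIMUM[0]}.{MINIMUM[1]}+")
```

Run it with the exact command you plan to use (`python` or `python3`) so you're checking the right interpreter.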

Speaking of getting Python, if you need it, go visit realpython.com/installing-python/.

They've got a big range of options for all the different operating systems, the trade-offs, and how to install it for your operating system.

And they're keeping this up to date.

So just drop over there, get it installed on your machine, and come back to the course, ready to roll.


What editor are we gonna be using for this course?

Well, my absolute favorite is PyCharm.

I think PyCharm is by far the best editor for Python applications, and the larger and more diverse they are, as web applications tend to be, the better PyCharm does.

Now, you don't have to use PyCharm, but if you want to follow along exactly, I recommend you get PyCharm.

For this course, you're most likely going to need the Professional edition for some of the features. You could get away with the free Community edition, but a lot of the functionality, like the autocomplete on the HTML and CSS side, won't work.

You'll need PyCharm Professional for that, and I'll give you some other options if you don't want to get it.

But if you're a student at any university or high school or whatever, you can get it completely for free, even the Professional edition, and there's also a trial, so those are some options.

I recommend it.

I think it's absolutely worth it.

Go visit it over here at jetbrains.com/pycharm/. Now, the way I put it on my machine is with the JetBrains Toolbox.

This auto updates it for you.

It lets you jump easily between different versions, and it lets you know when there are new versions. I think that's really the best way to manage JetBrains tools and products on your OS.

So if you do go with PyCharm, get it this way.

Now, if for some reason you don't want to use PyCharm, the other really good editor that I would recommend is VS Code.

I don't know that I like VS Code as much.

In fact, I'm pretty sure I don't like it as much as PyCharm.

But I do like it.

I think it's good.

It has a lot of great features.

It supports HTML, CSS, and all the different languages that we're going to be touching during this course, so that's cool.

You go download it for free.

And if you have an M1 Mac, there's even a native M1 version over here, which, by the way, is also available for PyCharm.

If you do get VS Code, you're going to need to install a couple of things.

You want to make sure that you install the Python extension or it won't have all the Python smarts.

On top of that, you also want to install Pylance, which gives it better autocomplete and an even better understanding of your code.

To get this dialog to come up, you press that little box icon there, and then you get the marketplace.

Python should be right near the top.

It's by far the most popular extension for VS Code.

So this is a really good choice if you don't want to use PyCharm.

Either way, get one of these two, or pick your favorite editor that you think will work well for this course, and we'll be ready to work on the code together.


Last, but definitely not least, you want to make sure you have the source code for this project.

Now, we are going to come and just create a new folder and create a new file and start writing code from scratch.

But there are a couple of things that you're going to need if you want to follow along. For example, see where it says data/pypi-top-100? Once we get to the database section, we're gonna load up the database with a whole bunch of data in JSON format that represents the actual live data on pypi.org.

To get that, you need the GitHub repository. Also, if you want to just jump into, say, chapter five and start working from there, the repository has the code that we started with and finished with for chapter five.
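As a rough sketch of reading a folder of JSON files like data/pypi-top-100, here's a loader that assumes (my assumption, not something the course states) one JSON document per `.json` file. `load_json_folder` is a hypothetical helper; the course's real import code belongs to the database section.

```python
import json
from pathlib import Path


def load_json_folder(folder) -> dict:
    """Load every *.json file in folder, keyed by file name without extension."""
    data = {}
    for path in sorted(Path(folder).glob("*.json")):
        with path.open(encoding="utf-8") as f:
            data[path.stem] = json.load(f)
    return data
```

After cloning the repo, something like `load_json_folder("data/pypi-top-100")` would give you one entry per file to inspect before the database chapter.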

So make sure you get this repo.

You're going to clone it for sure.

If you're not a friend of git, you can just click the green button that says Code and download it as a zip file. But if you do have a GitHub account and you use git frequently, be sure to star and fork this so you have permanent access to it.

Once you get this downloaded and cloned or unzipped, you'll be ready to follow along with the course.

That's it.

If you have Python, you've got a decent Python editor and you've got the source code repo, you're ready to take this course.

Let's get going.


Hey there.

Now that we've got all that positioning and motivation out of the way, it's time to start building.

We're going to begin by building our first FastAPI site.

In fact, what we're gonna do is take the site and build it up and build it up and build it up over time.

First, it'll be just a really simple site; then it'll start having things like dynamic HTML templates and static files.

And we're gonna add a database and all sorts of cool layers to make it realer and realer until we end up with something that is very similar to what you might consider a full professional web application.

And the question is, well, what are we gonna build?

We're gonna build something that I'm pretty sure you're familiar with already.

We're gonna build a clone of pypi.org. For those of you who don't know, pypi.org is where you go to find Python packages and libraries that you can install with pip. Anything you install with pip through the standard mechanism, rather than from some URL or something like that, comes through this central package index here.

So what we're gonna do is we're gonna build an application that looks like this.

It's gonna have similar elements on the page.

You'll be able to log in and register and get help and do other things as well.

You'll be able to go to a package and see the details.

Many of the things you would do with pypi.org you're gonna be able to do with our application, and we're gonna build this with basic FastAPI functionality plus a few cool libraries we'll add on to make working with HTML in FastAPI even better.

So I hope you're excited.

We're going to start small in this chapter and build and build and build until we have something that looks very similar both visually and functionally to what we have here at pypi.org.


It's time to start writing some Python code and creating our FastAPI project: not an API, but a web application.

Our Python project for a web application.

Here we are in our GitHub repository and you can see we have a bunch of empty chapters of what I think the structure is going to be.

We're going to start by creating our project here and evolving it.

Then, when we're ready to move on to templates in chapter four, we'll make a copy of what we did here over there.

That way you could always just jump in at any given section throughout the course in case you didn't follow along exactly, or you just want to quickly jump into a section and see what it was like there.

We want to go over here, and we're going to create that project.

We're gonna open this in PyCharm, but first I'll show you how to get started just in a terminal.

So I have this cool little extension called Go2Shell.

That'll let us jump in here.

But you could obviously open a terminal or command prompt and just cd over into this directory.

If we look here, there's just this placeholder text so that git would create the project structure.

Now, there are a couple of things that make up a FastAPI project.

We're going to start by just having a main.

We're going to create a main.py file.

We're also going to need some requirements.

And the easiest, most common way to do that is to use a requirements.txt.

Yeah, we could use Poetry or Pipenv or whatever, but I'm still a fan of a plain requirements file.

We're going to create that as well, and we'll put in things like we require FastAPI, and we require uvicorn to run it and so on.

And then I wanna have a virtual environment.

So I'm gonna come over here and type `python -m venv venv`. You may need to type `python3`, depending on which version you've got.

We're not gonna do this from scratch for every chapter.

I'm just gonna walk you through it once and now we have our virtual environment.

But if we ask `which python`, or `which python3`, it's still the system global one.

On Windows, `which` is not a command, but `where` is a command that will tell you basically the same thing.

So we need to make sure we activate it.

On macOS or Linux, we say `. venv/bin/activate` (or `source venv/bin/activate`), and notice our prompt changes.

If this were Windows, we would just run `venv\scripts\activate.bat`; you could drop the `.bat` and it would still run.

But over here, I got to say this.

Now, if we ask `which python`, it's this one here, all right?
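You can also ask the interpreter itself whether it's running inside a virtual environment. A small sketch for environments created with `venv`: there, `sys.prefix` points at the environment while `sys.base_prefix` keeps pointing at the Python it was created from. `in_virtual_environment` is a hypothetical helper name.

```python
import sys


def in_virtual_environment() -> bool:
    # venv sets sys.prefix to the environment directory; sys.base_prefix
    # still points at the base Python installation.
    return sys.prefix != sys.base_prefix


print("Interpreter:", sys.executable)
print("Virtual environment active:", in_virtual_environment())
```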

Any time you create a virtual environment, it's almost always got an out-of-date pip.

So let's fix that real quick.

`pip install -U pip setuptools`, where `-U` means upgrade.

Now if we do a `pip list`, you see we've got the latest versions.

Now that we have our virtual environment up and running, let's go ahead and open this in PyCharm; we no longer need the terminal. On macOS, you can drag the folder onto PyCharm and it'll open.

On the other operating systems, you go to File > Open and pick the directory.

Same basic idea.

You can see PyCharm's found our virtual environment.

Surprisingly, this sometimes works and sometimes doesn't.

If it doesn't, you can always go to Add Interpreter and pick the existing environment, which usually finds it, or if you have to, you can browse to it as well. But it looks like we're good over here.

These files are red because they haven't been added to git yet.

So our project is over here.

Let's just do a quick `print("Hello, web world")` to make sure that we can run everything, then right-click and say Run.

Perfect.

It looks like everything is running just fine. So we've got our project created.

Obviously, it's not a FastAPI project just yet, but this is the process that we're gonna go through for each one.

I won't walk you through it again.

I'm just gonna say, "Hey, I did this setup to get our project running," and start with the existing code at each chapter.


Now that we've got our Python project set up in PyCharm, let's make it a FastAPI project.

The first thing we need to do is go to our requirements file and list our requirements.

So to get started, we need just two, and we'll keep adding onto this list as we start to bring in things like an ORM with SQLAlchemy, as we bring in static files support with aiofiles and so on.

But we can start with FastAPI, and we can also start with Uvicorn.

So Uvicorn is the web server, FastAPI is the web framework.

PyCharm thinks this is misspelled.

It is not.

So we can go over here and hit Alt + Enter and say Do not tell me this is misspelled.

They're actually working on a feature to understand these packages and not flag them as misspelled or whatever, but there we go.

Now we need to install these.

So we're gonna go to our terminal and make sure the virtual environment is active here.

Then I'll say `pip install -r requirements.txt`.

Looks like everything installed just fine.

I'm not a fan of the red.

Let's go ahead and commit these to git, and notice over here, we've got our source control tool.

We can do this to commit.

It shows us that Command+K is the hotkey on macOS.

But I've also installed this tool called Presentation Assistant.

When I click this, notice at the bottom we get this.

But also, if I just hit Command+K, this would come up.

So you'll see me do a lot of things with hot keys as I interact with PyCharm in general, in the code and so on.

So if you're not sure what just happened, keep an eye on that thing in the bottom.

Now, down here, we've got these two files.

Let's call this the starter code for our project.

Here we go.

Now things don't look broken with red everywhere.

But how do we create a FastAPI project?

Well, there's a really simple, what I would call the PyCon talk tutorial style where you just put everything into the main file and, hey, you have a whole app.

Look how simple it is.

And there's the realistic one where you break things apart, isolating the views into their own parts and making the data exchange between the views and the templates testable, and you've got the templates folder and all that.

We're going to start with the simple one, and throughout this entire course we're gonna move toward what we might call the real-world one, where you actually organize a large FastAPI web application.

We're gonna start by saying `import fastapi`, and while I'm up here, we're also gonna need `import uvicorn` to run it.

Now, if you've ever worked with Flask, working with FastAPI is very similar.

It's not the same, but it is similar to what you would do with Flask.

So we're gonna create an app.

The way we do that is we say `app = fastapi.FastAPI()`. And then we're gonna need to create a function that is called when a page is requested.

We're gonna call that `index`. That's just the forward slash, the root of the site. And what are we gonna do?

Let's just return something really simple.

"Hello, world".

It might not do exactly what you're expecting, but we'll see.

Okay, and then we need to decorate this function to say this is not a regular function, but a function on the web.

We'll say `@app.get`.

Notice all the common HTTP verbs here: get, put, post, delete, and so on.

And then we specify the URL.

You can see there's an insane number of options.

We only care about specifying the path, so this will be fine.

Bring that up.

And how is everything looking?

It looks pretty good, but there's one more thing to do.

We need to actually run our API.

So we say `uvicorn.run(app)`, giving it the app, and that's it.

As you'll see, what we've built so far is a FastAPI web API.

Let's go ahead and run this.

Here it is running down there like that.

Hello, world.

Wait a minute, look carefully.

What is all this JSON, this raw data?

Why does it say JSON?

It says JSON because FastAPI is, most natively, an API framework.

It's here to build APIs that exchange data.

So, for example, if we return something different here, like a dictionary where the message is "Hello, world", run it again, and look at the raw data, you can see it's returning JSON.

Okay, so one of the things we need to do for this course is to tell FastAPI, for certain parts of the app (maybe much of it, maybe just a little part), whichever part we want to be an HTML, browser-oriented web application: don't just return JSON.

No, no, no.

Return HTML and ideally, use a nice structured template language like Chameleon or Jinja or something along those lines.

But you can see we've got our little FastAPI app up and running here on localhost.

All we have to do is import fastapi, create an instance of it, use its HTTP verb decorators to indicate which functions are web methods, and then run it. That's it.


Now, before we call our little basic structure finished, I do want to show you one quick thing.

Notice I was able to go over here, right click and say run.

And I can also press this button and everything looks golden.

But the way that we actually run FastAPI in production is not to just run this file with Python.

Instead, we're gonna set up Gunicorn, which is going to oversee a farm of Uvicorn worker processes, and each one of those is going to run this application.

And that way, if something goes wrong with one, it can be restarted.

You can do scale out.

There's all sorts of cool advantages to that.

And that's generally how Python web apps run in production anyway.

And when we do it in that mode, the chain I talked about happens implicitly, behind the scenes: we're gonna say uvicorn main:app.

First, what module is it that we're working with? That's main.

And then what is the name of the application object in it, which is app in our example.

So we're gonna go to the main module and find the app instance and run it.

Watch what happens if I try to run it here.

It says whoa, whoa.

You're somehow like double running it.

I'm not really sure what's going on here.

And that's because this is really just for testing, and we need a different way to run it in production.

So we're just gonna quickly fix that and say: only if you run it directly here in dev do we want to do that.

Notice this live template expands out to if __name__ == "__main__".

This is the Python convention for saying this file is being run directly rather than imported. And then there's the else branch.

That's what we're gonna end up running in production.

There are actually some things we'll ultimately need to do here.

But for now, there's just nothing else happening.

If I run over here through this mode, you can see it's running fine.

And if I go into the terminal now and I try to run it like this hey, look, it runs fine as well.

Okay, so you'll see that as this gets a little bit more complicated, with more things going on, like setting up the database at startup and so on,

there's gonna be an interesting balancing act we have to do around this. But I wanted to focus on this specifically, because it's easy to just call uvicorn.run, see that it seems to work, and then have it not work in production.

And that's why: this is fine when we press the run button, but in production we're running through a completely different chain of events that uses uvicorn module:variable_name.
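To make the module:variable_name idea concrete, here is a simplified sketch of how a target string like main:app can be resolved: import the module, then look up the attribute. The load_app name is hypothetical, and Uvicorn's real loader does more (error reporting, nested attributes). The key point is that the module is imported, not run, so inside it __name__ is "main" rather than "__main__".

```python
import importlib
from typing import Any


def load_app(target: str) -> Any:
    """Resolve a "module:attribute" string, roughly like `uvicorn main:app`."""
    module_name, sep, attribute = target.partition(":")
    if not sep or not module_name or not attribute:
        raise ValueError(f'expected "module:attribute", got {target!r}')
    # The module is *imported*, so inside it __name__ is the module name
    # (e.g. "main"), never "__main__": the dev-only branch is skipped.
    module = importlib.import_module(module_name)
    return getattr(module, attribute)
```

Using the standard library as a stand-in, load_app("json:dumps") returns json.dumps, just as main:app would return the app object defined in main.py.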

So I want to make sure we get this set up right from the start.


53:10 |


1:13 |

In our previous chapter, you saw we built a simple FastAPI app, more or less an API, that returned JSON.

And our goal now is actually to exchange HTML.

We may also want to build APIs with FastAPI, I mean, that's one of its main purposes.

But if we're gonna have web pages that talk to browsers, we need to return HTML.

Now, the last thing we want to do is write static HTML in strings in Python and then return it.

No, no, no.

We want to use one of these dynamic templating languages like Jinja or Chameleon or even similar to what you have in the Django templates, where we write HTML and we put a little bit of scripting in the HTML and the scripting varies by templating language.

But the general idea is we put some scripting or some conditional stuff into our HTML.

In FastAPI we're going to create a dictionary and hand that off to this template and say: render all these items and here's the pieces of data that you're going to need to work with.

So that's what we're gonna do in this chapter.

We're going to start really simple and just see how to return HTML.

Then we're gonna leverage a cool library that lets us do basically what I just described: create a dictionary and hand it off, in the simplest possible way, to one of these underlying templating languages.


4:07 |

Here we are in chapter four and in our GitHub repo I just want to give you the lay of the land.

We completed all of our code in chapter three, and now I made a copy of that into chapter four.

So the way this is gonna work is the final code for chapter three is the starter code for four.

The final code for four is the starter for five, and so on.

I've already done all the set up.

Like I said, we're not going to go through that again.

So let's just jump into it over here.

Now, you would be forgiven for thinking that FastAPI is really just for building APIs.

And that if you wanna have a proper web app that has HTML and Bootstrap and CSS or Tailwind CSS, whatever you want, you need another framework like Django or Flask or something.

Because remember, when we run this, we look at it, what we get back is JSON.

And if we look in the browser's network tab at what we get back when we make a request, the response's content-type is application/json.

Of course, it seems like, well, what this does is it returns JSON and that's true.

But what we can do is we can actually change how it works.

Let me introduce you to the HTTP responses.

So over here in FastAPI, we have a whole bunch of other things we can do than the default.

The default, of course, is to return JSON.

But if we look under fastapi.responses, we have an HTML response, a file response, a redirect, JSON (that's what we're getting now), plain text, streaming content and so on.

What we're gonna do is create an HTML response, and we're just going to say the content is some local variable.

Notice all the other defaults are fine, the header defaults are fine, the status code of 200 is fine and so on.

I'm gonna come over here, and for the moment, just for a moment, I'm gonna do something bad and type some inline HTML.

This is not the end goal.

But in here let's say we want our page to look like this.

Here's some HTML: here we'll say "Hello FastAPI Web App", and we'll have a <div>, and we wanna make sure we close our </div>, and it's gonna say "This is where our fake pypi app will live!" Instead of returning this dictionary, we're going to return the response, and the response tells FastAPI: you know what, this is actually not JSON, it's an entirely different thing, like a file or, in this case, HTML.

We can actually just inline this right there, like so.

OK, let's run it again and see what we get.

Look at that.

"Hello FastAPI Web App This is where our app will live" And if we go to our network and we look again at our content-type down here somewhere, there it is: text/HTML.

Like all friendly HTML pages, it's text/html with utf-8.

Pretty cool, right?

And if we go to View Source this time, it's, well, exactly what we wrote.

Is it valid source?

Not really.

We didn't put a body and a head and all that kind of business in there, but this is enough to show you how we can return HTML from FastAPI.

That said, this is not how you should be returning HTML out of FastAPI.

We're gonna do what all the other major frameworks do: we're gonna use dynamic web templates, put those over there, and pass a dictionary, like we did before, off to that template, and the template engine will generate our HTML, with our static content and our dynamic content, all those sorts of things.

So we're not going to do this, but this is the underlying mechanism by which we're gonna make that happen.

We're gonna use another library that's super cool, in my opinion, I really, really enjoy working with it, and it makes it super easy.

We're just gonna put a decorator up here that says use a template, and magically, instead of being an API, it now becomes a web view method or web endpoint here.

All right, so this is what we're gonna do for this chapter: we're going to start like this, but we're gonna build up into moving into those templates and serving static content and things along those lines.


1:30 |

With Python, we have a choice of different template languages.

So what I'd like to do in this section is talk about three of the main choices you might make for the dynamic HTML templating we're gonna do, look at the trade-offs, and then pick one and go with it for the course.

So we're going to do that, and I'll give you the motivation for doing so.

But let's go through three popular ones.

If you've ever done Flask, you've done Jinja.

So, Jinja is really the one templating language that is deeply supported by Flask.

There are many other frameworks that use Jinja as well.

A lot of other, maybe smaller web frameworks that are not quite as popular also happen to leverage Jinja, so Jinja may very well be the most popular, best-known choice.

Mako is another template language and looks quite similar to Jinja.

I think I actually like Mako a little bit better. You'll see there's a little bit less of the open, close, open, close you have to keep writing; it's just: here's a template line, and then you write some Python.

That's pretty nice.

And then we're also going to talk about Chameleon.

Chameleon is unique because it doesn't lean on directly writing Python as much; it uses a more attribute-driven way of working with the HTML, so you might pass some data over.

There might be an attribute that says: if this condition is true, show or hide this element that has the attribute, whereas Jinja and Mako would actually have an if statement directly in their HTML, so there's drawbacks and benefits to each one of these as we will see.


6:22 |

I think the best way to get a feel for these three different template languages and which one might be the best to choose for our application, would be to compare them with a simple example.

So here's the setup: say we're given a list of simple dictionaries or simple objects, each of which has a category and an image.

We would like to show these in a simple, responsive grid.

So this comes from a bike store and would look something like this: would have comfort bikes, speedy bikes, hybrid bikes, folding bikes and so on.

And what we want to do is generate this HTML result, and we're going to do it with these three different template languages, given this data on the left here.

So how would this look in Jinja?

Well, the first thing we have to address is what if there's no data?

So either the list is not there at all.

It's None, or it's just an empty list.

In that case, we want a simple <div> that says "No Categories".

So that's the first block, and what you should notice here is you have {% if ... %} and then, annoyingly, {% endif %}. There's a lot of this open/close:

if this, endif; for that, endfor; and so on.

So you see a lot of that in Jinja.

Alright, so we've got this categories test up here, and this is standard Python.

If you can read Python, you can read this, right?

Then, if there are categories we want to do a for in loop over them and repeat a <div> that has two things: it has a hyperlink that wraps an image and it has a hyperlink that wraps just the name.

So we do {% for c in categories %}, and inside the for we have the HTML. Wherever we have a dynamic element from our loop, we use c: for the link, we go to the name and lowercase it, just in case, because that's how our routing works, let's say.

Then we have the image, and then just the name, properly cased, shown there.

OK, so this is what it looks like in Jinja.
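Here is a runnable sketch of the Jinja version, assuming the jinja2 package is installed. The category names, image paths and the /bikes/ URL scheme are made up for illustration. Jinja lets you write c.name even though each category is a dictionary.

```python
from jinja2 import Template

CATEGORIES_JINJA = Template("""\
{% if not categories %}
<div>No categories</div>
{% else %}
<div class="categories">
  {% for c in categories %}
  <div class="category">
    <a href="/bikes/{{ c.name.lower() }}"><img src="{{ c.image }}" alt="{{ c.name }}"/></a>
    <a href="/bikes/{{ c.name.lower() }}">{{ c.name }}</a>
  </div>
  {% endfor %}
</div>
{% endif %}
""")

# Made-up sample data: simple dictionaries with a name and an image.
categories = [
    {"name": "Comfort", "image": "/static/img/comfort.png"},
    {"name": "Speedy", "image": "/static/img/speedy.png"},
    {"name": "Hybrid", "image": "/static/img/hybrid.png"},
    {"name": "Folding", "image": "/static/img/folding.png"},
]

html = CATEGORIES_JINJA.render(categories=categories)
```

Rendering with categories=[] or categories=None produces only the "No categories" <div>.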

It's not terrible.

If you know Python, you know it pretty well.

Here's what it looks like in Mako, and the only real difference you'll see is that instead of having {% ... %} blocks, you just start the line with a %.

And honestly, Mako is not nearly as popular as Jinja, but I think it's actually a pretty neat language, because it's got the same functionality but you write fewer symbols, which I think is kind of the Pythonic way.

One difference is the way you get strings from objects.

So instead of saying {{ variable }}, you say ${variable}.

So that's how it looks over here.

One thing that's annoying is that dictionaries don't get dot-style traversal.

You have to, you know, call get on them and so on.

Right.

So this is Mako.

Third one is Chameleon.

Now again, we're gonna have these two conditions.

The top condition is we want a <div> to be shown if there's no categories.

The thing to take away from Chameleon, the Zen of Chameleon really, is that it has this Template Attribute Language, T-A-L, or TAL.

And the way you program it is to not write Python, but to write small, small expressions as attributes.

So here, if we wanna have a <div>, that's only shown when there's no categories, we say <div> no categories, and then we put this attribute that says tal:condition.

And here we put basic Python, not categories. You can call functions and do all sorts of Python things in here.

That's how we do that part.

Then the next part, we're gonna have the opposite condition.

So we just say tal:condition="categories", and then we want to do a loop like before. So we say <div tal:repeat="c categories">, and it's gonna repeat that entire block, the <div> and the two hyperlinks, once for each element in categories, writing it out each time.

Here we use dollar curly brace like we do in Mako, but it does get the dot traversal of even dictionaries.

All three of these, they're pretty decent, right?

It turns out, Chameleon is really by far my favorite language.

There are two primary reasons I think this is a much, much better language, even if it's not as popular.

I think it's much better than Jinja.

One, you don't write symbols over and over and over again.

You don't write {% if test %} and then {% endif %}, with that stuff all over.

But the other drawback of writing code like that is that what you get is not proper HTML, the way this is.

This is proper HTML, there might be attributes that don't make any sense to a standard web browser, but if I were to load this up in some kind of tool and I try to look at it or I hand it to a designer, they would be able to look at it straight away and go: yep, I know what this is.

This is HTML.

So I think the fact that it's basic HTML and you don't put a bunch of code in there, I think that makes it really quite nice.

The other one, and I think people could be split on this one, is that you cannot write arbitrary Python here, whereas over in, say, Jinja or Mako, there are ways to put much more Python logic into your template.

Some people might see that as a drawback, like I can't do all this cool stuff in my template.

To me, it's a feature.

It means I can't put logic, complicated Python logic into my HTML.

Where does that belong?

I don't know.

Not here.

It belongs somewhere else.

We're gonna talk about where it belongs in our web application we'll build later.

But limiting the amount of Python code we can write over here is, to me, a feature, because it means you have to have more professionally, more properly factored applications.

You can decide to use whichever one you want; for this course, we're going to use Chameleon because it's proper HTML and it doesn't let you write arbitrary Python throughout it.

It doesn't have all these symbols everywhere in terms of the open close and all the Python code that's interlaced and so on.

I think this is a great language, we're gonna use it for our course.

For what I'm going to show you, there's a very, very, very small change to make it work with Jinja instead. So if you prefer Jinja, you can use that; we're gonna use Chameleon for the reasons I just laid out here.


6:28 |

Well this was nice here, to render this, but that's not how we want to write our code, is it?

We want to write our HTML in an HTML file and then write our Python in a Python file and then put those together.

That's how all the common web frameworks work.

So let's go and create one of these templates over here.

We're gonna start by creating one.

This is the whole HTML page, and then we'll work on some nice shared layout.

I'm gonna create a folder directory called templates.

And then in here, I'm going to create an HTML file. What I like to do is name my files the same as the view methods.

So if the view method is index, I'm gonna name the file index and this will be Fake PyPI like that and let's just put something similar, but not exactly the same.

We have <h1> now we've got a nice editor for working with HTML, beautiful.

Say, call it "Fake PyPI" for now and <div>, we'll just put, actually, let's put an unordered list of the popular packages so we'll have a <ul>, it's gonna have an <li> it's gonna have three popular ones.

We could do this short, cool little expansion thing in PyCharm if you hit Tab.

So let's say we wanna have fastapi, we have uvicorn and chameleon.

And let's also add one other thing here, let's say we wanna have a <div>.

Let's put the user name and this is gonna be something I'm gonna pass.

A piece of dynamic data is gonna be passed.

We'll just have user_name like this.

And in Chameleon, the way you output a variable, turning it into a string, is dollar curly braces: ${user_name}.

If you're familiar with Jinja, then it would look like this: {{ user_name }}.

But in Chameleon, you do it like that.

All right, so we want to render this.

How are we going to do it?

Well, it turns out Chameleon is not built into FastAPI.

You wanna work with Jinja?

There's actually some built-in support, but I'll show you what I think is a better way anyway.

So let's go look at an external package here.

Let's go over here to GitHub.

Here's a cool package I created. I don't like it because I created it; I created it because I really wished it existed and it didn't, so I created it for FastAPI. It's called fastapi-chameleon, and what it is, is a decorator you can put onto your view method that takes a dictionary plus a template and automatically turns that into HTML.

We've got to install it, and right now it's not yet on PyPI.

Check back here when you're watching this course, there's a good chance I'm going to publish it to PyPI, but for now, just install it this way.

So the way it works is you've got a template directory, and I actually like a little more structure here.

We'll get to that in a minute, but you've got some template file.

We're gonna set this overall path of where those live and then all we gotta do is just say, Here's a template and return a dictionary and we're good to go.

If you return any form of response like an HTML response or redirect response, it just ignores the template idea and just says, return that response directly.

So let's go and use that over here.

We're gonna install it like this.

And PyCharm says, oh, you'd better install it. OK, super.

It's installed.

And then when I go over here and I'm just going to say @template and we need to import that from fastapi_chameleon.

I like that up there.

Now I'm gonna first type out the template file to be index.html and we don't need this and we don't need that.

All we gotta do is return some piece of data.

Maybe this comes from the database or something.

We'll say user_name is mkennedy or something like that.

And that's spelled OK.

And we try to run this.

It's not gonna love it, I don't think.

So when I click on this, it gives us an error.

That's unfortunate, what happened?

It says you must call this initialization thing first.

So before we start using it (and we're gonna organize this better in just a minute),

we need to import fastapi_chameleon directly as well.

And we'll go to this and we'll say global_init() and we have to pass along the folder "templates", that's what we're calling it.

And I think that should do it.

If we have the working directory right.

If the working directory is not in the same place, you probably need to pass a full path here.

Let's try it again.

Fingers crossed.

Look at that.

How awesome is this?

So here's our HTML and this is the dynamic data that was generated and we passed this over.

We could actually do something like this.

We could say this has a user which is a string.

OK, come over here and say it's going to be user if user else "anon", something like that, OK.

And we try this again.

Ah, yes, it needs a default value, I guess that's the way that works, I forgot, equals, just do it like this.

"anon".

There we go.

So if there's nothing passed, it's anonymous.

But if we say user=the_account, it'll be whatever you want.

So this part is totally dynamic, as our dynamic HTML is.

And this is our static stuff that we can write. So cool, so super cool.

This lets us come over here and just specify a dynamic template to render, a Chameleon template in this case.

If for some reason you don't want to use Chameleon and you prefer Jinja.

Check this out over here, scroll down a little bit.

This friendly guy over here, cloned this project and created a Jinja version.

So all you gotta do to use it is put a decorator for your Jinja template on instead.

So use one or the other, depending on the template language that you would like.

But I really like this style of let's just put this here and return a dictionary and let the system itself put the pieces together and build the HTML.


11:16 |

Let's pause building our application for a minute and actually do a little bit of organization.

What's going on here?

We have got our one file where everything is in here and right now it's only 20 lines of code.

It's no big deal.

But as this grows, it's gonna get larger and larger and larger.

We'll have to read configuration files or secret files, potentially holding passwords for things like our database connection.

We've got to set up the database connection.

We're already setting up the templates.

Maybe we're setting up other things, like routing and so on, as you will see, and it's gonna be a real mess for a real application.

So what I want to do is first sketch out what we might expect to build here.

So we're gonna go over here.

I want to say @app.get; they're mostly gonna be GETs, but not all.

I'm gonna go over here and have an about, let's just have these be empty for a moment.

We're gonna have this about function and like these are the sort of the homepage overall type of things.

But we're also gonna have, say, account management.

So let's go over here and have a an index for the account.

Although in this case, because it's in the same file, we'll maybe call it something other than index, and you'll see that we don't really want to do that.

For the url it's gonna be "/account" And we're gonna have a way to register for the site.

So this could be "/account/register".

This will be register.

We're actually gonna have one to accept a get and one to accept a post.

Talk more about that later.

Also gonna have a login and we're gonna have a logout and so on.

As you can see, this has started to get really not so nice.

And this is just scratching the surface.

Also in our templates over here we have a big pile of dough.

So what I want to do is just take a moment and talk about reorganizing these for a larger application that looks like this.

What I'm gonna do is create a folder for all of our views and call it views.

And then I'm gonna further organize this.

Say well, over here we're gonna have the home views and over here we're going to have Let's say the views that go with account.

We're also gonna have stuff about packages like package details, package list, search package and so on.

So I'll call that packages like this and so on.

And then what we're going to do is move some of these over.

So, like these two right here, this should be about.

That's it.

Let's move those over into home.

You're gonna need to import some stuff like that.

Notice this is a problem.

We'll fix this in a second.

We have our account things.

So here are the three about account, like that.

We're also gonna need that same template thing.

So I'm gonna go ahead and just rob that from up here.

So we got it.

Put that at the top of account as well, and in packages we'll put some stuff eventually.

OK, but this is a problem, isn't it?

If I try to run this, I don't think it'll crash or anything.

It's just gonna give me 404.

That's weird.

Why?

Well, remember how it worked: we loaded up this main file, and everything that was listed in it before we called run got registered.

(Oops, I left my logout in there.)

Well, now we're not doing that anymore.

So what we need to do is use something different and FastAPI has a really great way to deal with this.

We need to import fastapi, and then we create this thing called an APIRouter.

So we say router = fastapi.APIRouter(), like that.

And what this lets us do is it lets us build up the various routes or roots for my British friends.

Do basically whatever you would have done with app.

You just do that here.

So we've got our get, we've got our post and so on.

And then later we say, well, router, everything you've gathered up, install that into the application.

So we're gonna do that everywhere we were using app. Perfect, those are all good.

And let's go ahead and do this for packages as well.

OK, these are all done.

If we run this.

Nothing magical has happened.

It's still going to be that same "404 Not Found" that we saw right there.

Until we go over here and we say from views import home and account.

You can put this all on one line if you want.

I kind of like to have it separate.

We're gonna import this and then somewhere along the way, we need to go to the app and say include_router and the order here might matter.

So make sure you do in the order you want.

We do home, then account, then packages, and clean that up.

Not gonna need this template business over here anymore.

Now, if I run it, it should be back to good.

Let's try.

Tada!

perfect.

We got our anonymous user sort of passed in.

If we go back and pass user=abc, now we get our abc user right there.

Okay, So what this is gonna let us do is this unlocks the ability to build much, much larger applications.

We can put all the stuff we need in the home here.

We do all the complicated account stuff over here, and it doesn't all get jammed into the same file.

Now, corresponding to this, let's go over and organize this a little bit.

Because when you see index, well, is that the index view for home?

Or is that the index view for help?

I don't know.

So I'm gonna make subdirectories here, and what I'm gonna do is I'm gonna name them exactly the same as the view module.

So home, account and packages.

Perfect.

And I'm gonna take this and I'm gonna put it in home.

Now, PyCharm may have fixed this.

Maybe not.

No, it didn't.

So what we need to do is go over here, say home/ like that, go templates and then whatever goes there, let's run this one more time and make sure everything's hanging together.

Perfect, still works.

So this gives us a lot more organization.

Now, for the home views, we know exactly where all the dynamic HTML is going to live. Let me make one quick change.

Here, let me rename this to .pt.

I renamed it in here, and PyCharm found that and renamed it over there. Because of the way we're naming things, we can take advantage of a convention in the template decorator: it looks at the module name, home, and at the method name, index.

And it says, Where's the template folder?

Well, let's go look for home/index if you don't specify anything here.

So we can actually omit that in this case.
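The lookup convention can be sketched as a small function. This is a hypothetical helper that mimics the behavior described above (last part of the module name, then the function name, plus a .pt extension); it is not fastapi-chameleon's actual implementation.

```python
from typing import Callable


def default_template_path(view: Callable, extension: str = ".pt") -> str:
    """Hypothetical helper: derive "<module>/<function>.pt" from a view."""
    # "views.home" -> "home": only the last part of the module path is used.
    module_name = view.__module__.rsplit(".", 1)[-1]
    return f"{module_name}/{view.__name__}{extension}"
```

For an index function defined in views/home.py, this yields "home/index.pt", relative to the templates folder.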

And look, it still works.

Cool, huh?

So very, very nice that it will go and find that over there for us.

One final thing to address over here is this.

I don't really like the way this is working here.

So what I like to do is make this a little bit more clear.

Because, as I said, it's going to get more complex and more complex and so on.

I want to define an overall function here.

I'm gonna say define a function called main and main is going to run this.

And I want to define a function called configure; at the moment, configure can just do all of this, and here we'll call configure.

So the idea is: there's a bunch of different pieces that we're gonna be putting together here and it'll make a little more clear where to go find those pieces.

For example, the Talk Python Training equivalent of this file only does the kind of stuff you're seeing here, and I think it's something like 200 or 300 lines long.

You want some organization, in the end.

So one thing to be aware of: I told you that in production, what gets run is the else branch.

This part.

We wanna make sure that we still call configure over here.

Otherwise, in production you have no routes.

Nothing.

It won't be good.

The other thing is, I wanna have more partitioning here.

So I wanna make this a function and that a function.

So we come over here, say extract method, and call it configure_routes, and this one:

I know it's simple right now.

But let's go ahead and make a method out of it configure_templates because, you know, maybe you've got to do a little more work to find this thing.

And there's some path juggling and whatnot.

This feels to me a lot cleaner.

I could come in and say OK, well what do we do for the main?

When you run the app, you say I'm gonna configure it.

I'm gonna run it with uvicorn.

We could even go and be more explicit.

Say the host="127.0.0.1". I wish you could say "localhost".

I don't think you can.

And then I want to configure the templates and configure the routes, and then either run it directly or, for running in production, just set it up but don't actually call run.

Let's just make sure everything still works.

Should be unchanged with that refactoring.

And it is. Everything is still working.

OK, so this gives us a really nice convention for the way that main works and using the routers we're able to partition out the different pieces.

You very well may have APIs as well.

So you might have an API directory like this and then a views directory like that.

That's what I typically do.

But I don't think there's any reason for us to write an API in this example course because I'm keeping it focused just on the website.

But in a real one, you probably have both.

I think PyCharm has special support for templates, and for some reason I think it already marked this folder up there.

If we go and unmark it, you can see it grabbed that one automatically, just because of the name.

We can come over here and say mark directory as template folder and it says, hold on, hold on, hold on.

For this project, we don't know if you want to use Jinja or if you want to use Chameleon or Django templates.

So let's go set that.

Now, what would be nice is if you said yes and it took you to where that's supposed to be.

For some reason, it doesn't do that.

So you've got to go over here, type template language, and wait a second.

Notice over here it says None, and it gives you all these different template languages.

I'm gonna say we're doing Chameleon, so that will give us extra support for Chameleon syntax.

But now, if I go over here, I can type things like tal:repeat, whatever, right?

This is the syntax we'll be working with for Chameleon.

And now you can see we get support in the editor for it.

To me, this is a much, much more professional-looking application that's ready to build a real, complicated app that's easy to maintain over time.


2:24 |

One really important thing you want to keep in mind when building web applications is how are you gonna have some shared look and feel, some common look for your application.

Let's take the Talk Python To Me podcast website for example.

Here's the home page. You can see we have the navigation across the top.

We have this Linode sponsorship bar and some general styles.

There's, in fact, a bunch of other stuff going on that you don't even see here, like CSS, JavaScript, analytics and whatnot.

But obviously the common look and feel, it's the same, right?

Over here, if we go to the episode page: similar navigation along the top, similar style sheets and whatnot.

Go to a particular episode and, surprise!

Same banner, same navigation, same style sheets and so on, maybe with some extra ones for this particular page.

But in general, it's the same.

So having this one look and feel for your site is really important. What we want to do is write that shared HTML once, put that code in one place, and use it throughout the entire site. So when we make a change, let's say we want to change that banner or add a new navigation element, we change it in one place and everything changes.

So there's all sorts of benefits to having these layouts that are shared.

It's not just the look and feel.

That's the obvious thing.

But you also get other things to be consistent, like the title.

Or if you've got a meta description or other meta info in the head of your document, that will all be the same and shared, and if you want to make a change, you do it in one place.

You have consistent CSS and JavaScript files: not just the same ones, but in exactly the same order every time.

Consistent analytics.

So say you want something like Google Analytics tracking people on every page. I don't use Google Analytics on my sites.

But if you want something like that, you could add it, say, at the bottom of your shared layout, and every single page is always gonna have the same analytics.

This one's slightly less obvious, but it's really important.

You have a structured way for every other page to bring in its extra CSS.

So imagine that episode page had an extra CSS file or an extra JavaScript file I wanted to bring in.

You could have certain little spots in that shared layout that say: here's where this page can bring in its extra files.

You can do that in a really consistent and structured way, so these are super useful and we'll see how to add them now.
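As a rough illustration of those benefits outside of any particular template engine, here's a toy model in plain Python. It is not Chameleon and not the course's code; the markup, the slot names (title, extra_css, content) and the render_page helper are all hypothetical. The layout is written once, and each page supplies only what's specific to it, including extra stylesheets that always land in the same spot, after the site-wide CSS.

```python
from string import Template
from typing import Sequence

# The one shared layout for the whole site (hypothetical markup and slot names).
LAYOUT = Template("""<html>
<head>
  <title>$title</title>
  <link rel="stylesheet" href="/static/site.css">
$extra_css</head>
<body>
  <nav><a href="/">Home</a> <a href="/about">About</a></nav>
  <div class="main_content">$content</div>
  <footer>Thanks for visiting</footer>
</body>
</html>""")


def render_page(content: str, title: str = "PyPI site", extra_css: Sequence[str] = ()) -> str:
    """Fill the shared layout; a page provides only what is specific to it."""
    # Page-specific stylesheets always go in the same place,
    # right after the site-wide CSS, so ordering stays consistent.
    css_links = "".join(f'  <link rel="stylesheet" href="{href}">\n' for href in extra_css)
    return LAYOUT.substitute(title=title, extra_css=css_links, content=content)


home = render_page("<h1>Welcome to PyPI</h1>")
episode = render_page("<h1>An episode</h1>", title="Episode page", extra_css=["/static/episode.css"])
```

Changing the banner, the nav links or the footer means editing LAYOUT only; every page picks up the change on its next render.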


So we just talked about how important it is to have a shared common layout for the entire site.

You wanna add a CSS file to the whole site? Put it in the layout.

You want to change the title?

Change it in one place.

You want to add some JavaScript?

Put the includes over there.

So that's really, really important for shared navigation and a common look and feel, as well as those other things.

So let's go and do that here.

So what I'm gonna do, I'm gonna start out first by creating a real simple version that we can see.

And then I'm gonna drop in the actual version that we're gonna work with.

So I'm gonna create a folder called "shared" over here.

And in here I'm gonna create a file, an HTML file called "_layout.pt". My convention is that a leading underscore means you're never really supposed to use this file directly; it's meant to be used as part of building up something larger.

Then I'll call this "PyPI site", and then we wanna have some content here.

This could also have a footer: "Thanks for visiting".

Now in here, let's have some content in our site.

This is just a CSS class that lets us maybe style it or add padding or something like that, because we could also have, like, a <nav> section or whatever.

And in here, I would like to say: other pages, you can stick your content right here.

Everything else will stay the same.

But you control what goes in here.

So the way we do that in Chameleon is by defining what's called a slot.

So let me just change these names.

They have nothing really to do with each other, other than, like, conceptually, you're styling this thing.

We've got our main content CSS class, and the slot is gonna be called "content" as far as the other pages are concerned.

And if they don't put anything here, it's just going to say "No content".

All right, but if they do fill it, this whole <div> is gonna be replaced with whatever that page wants to put there.

So let's go and change our home page over here.

Not do all of this stuff up there, not worry about this, not worry about that.

It's just going to do this one thing here.

So what we're gonna do is have a <div> and put that stuff into it.

That's the way Chameleon likes to work.

It always likes the template to be valid HTML.

Now, in order to use the layout, we need to go over here and use this template language.

Notice there are two namespaces here: tal and metal. The metal one is the macro side of things, for these layouts and reusable templates; tal is the Template Attribute Language.

So what we're gonna do is say metal:use-macro, and the easiest way to do this is with load:, giving it the relative path from this file over to shared/_layout.pt.

Why did it get named .pt.html?

Let's rename that.

There we go.

_layout.pt. It could be .html, it could be .pt, but ".pt.html" is kind of weird.

OK, so that's there. Then we need to go over here and say there's a section that has this content, so it's gonna be metal:fill-slot="content", and we don't want this tag itself to be part of the output.

We'll say tal:omit-tag="True".

So just this content is going to go into that hole.
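To picture what Chameleon does with metal:define-slot, metal:fill-slot and tal:omit-tag, here's a toy model in plain Python. It uses regular expressions and is not how Chameleon works internally; it only handles this simple case (a single <div>, no nesting) and always behaves as if tal:omit-tag="True" were set on the filler. The class name main_content and the sample page markup are assumptions. A filled slot's whole element is swapped for the page's content, while an unfilled slot keeps its default text.

```python
import re

# Hypothetical layout markup, mirroring the _layout.pt described above.
LAYOUT = """<h1>PyPI site</h1>
<div class="main_content" metal:define-slot="content">No content</div>
<footer>Thanks for visiting</footer>"""

# A page that uses the layout and fills its "content" slot.
PAGE = """<div metal:use-macro="load: ../shared/_layout.pt">
<div metal:fill-slot="content" tal:omit-tag="True">
<h2>Home page content</h2>
</div>
</div>"""

# Toy patterns: they only handle a single <div> with no nested <div> inside.
SLOT_RE = re.compile(r'<div[^>]*metal:define-slot="(\w+)"[^>]*>(.*?)</div>', re.S)
FILL_RE = re.compile(r'<div[^>]*metal:fill-slot="(\w+)"[^>]*>(.*?)</div>', re.S)


def render(layout: str, page: str) -> str:
    # Keep only the inner body of each filler; dropping the wrapper <div>
    # models tal:omit-tag="True". Anything outside the fillers is ignored,
    # because the page as a whole is replaced by the layout (use-macro).
    fills = {name: body.strip() for name, body in FILL_RE.findall(page)}

    def fill(match: "re.Match[str]") -> str:
        name = match.group(1)
        if name in fills:
            # Filled: the whole slot element is replaced by the page's content.
            return fills[name]
        # Not filled: keep the layout's element and its default text,
        # minus the metal: attribute, which never reaches the browser.
        return match.group(0).replace(f' metal:define-slot="{name}"', "")

    return SLOT_RE.sub(fill, layout)


filled = render(LAYOUT, PAGE)
unfilled = render(LAYOUT, "<div></div>")
```

Here filled keeps the heading and footer from the layout with the page's <h2> in the middle, and unfilled still shows "No content" inside its styled <div>.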

Let's tighten this up a little bit.

All right, that should make no real difference.

Our code should still run.

It's gonna find this index.pt, and when it renders it through Chameleon, Chameleon will read it and go: well, we've got to go get the overall look and feel from over here. And just to make it, I don't know, feel like it's got something going on, let's put a little style in here.

body background.

Let's make it a really light green.

Something like that, just so you can see some sort of common look and feel being specified here.

We run it.

We should get this new view.

Unless I made some kind of mistake... and I have not.

Awesome!

We've got our PyPI site and we've got our footer and then this comes from that particular page.

Let's go to View Page Source (not that option, this one) and view the whole source.

There we go.

That was cached or something.

So this comes from the overall layout page.

This also comes from the layout page, and this is what our index had to contribute.

Well, with that in place, let's do a real quick navigation thing.

Actually, here, let's just do a <div> that has two hyperlinks.

One is going to go to /home and one is going to go to /about.

We'll do better navigation in a minute. Now let's add one of these for about, over in our views.

It's down here.

We're gonna add another template.

And if we call it about.pt and put it in the home folder, which we will, it'll find it automatically.

Now, the easiest way I've found to create new pages is to find a simple one of these and copy and paste it, because the top part is always the same. We'll say about, and this will just be "About PyPI", something like that.
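The "it'll find it automatically" part comes from the template helper used in the course, and the transcript doesn't show its code. Assuming the convention is "templates folder / last part of the view's module name / function name + .pt", a hypothetical helper that computes such a path could look like this; the function name and the templates folder name are illustrative only.

```python
from pathlib import PurePosixPath


def default_template_path(module_name: str, view_name: str, templates_dir: str = "templates") -> str:
    """Hypothetical helper: map a view to <templates_dir>/<module>/<view>.pt.

    Only the last part of a dotted module name is used, so a view
    function `about` in views/home.py (module "views.home") maps to
    templates/home/about.pt, right next to templates/home/index.pt.
    """
    folder = module_name.rsplit(".", 1)[-1]
    return str(PurePosixPath(templates_dir, folder, f"{view_name}.pt"))


about_template = default_template_path("views.home", "about")
```

With a rule like this, adding a page is just a matter of adding a view function and a same-named .pt file in the matching folder.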

If we run this again,

we should now be able to jump around.

If we go to home, we're here.

If we go to about, "About PyPI".

You can tell the formatting is totally wonky.

We need spacing and whatnot, but check that out.

We've got our common look and feel. We've got our styles coming across.

We've got our footer. Very, very cool, right?

So this is how we're going to create this common look and feel over here in our FastAPI web app.

Well, really, we're just leaning on Chameleon.

So we define this overall common look and feel here.

And then within one of these, we just say, here's the overall layout and then we're gonna fill various slots.

You can have multiple slots, so, for example, we could let pages set the title and so on.

But we don't really need to go into that right now.

This should give you more than enough to know what to work with here.

So I think this is a really, really good idea for building maintainable apps because you define your layout once, and then you're good to go.


You might be thinking: hey, this belongs in a CSS file so that you could maintain it separately, just like we did with our HTML and Python.

Maybe you wanna, I don't know, show an image on your website or use some JavaScript.

Well, those would be files in a static folder that gets served directly, not through some sort of processing that FastAPI might be doing.

Some frameworks come with this built in or on by default. With Flask, for example, you can automatically go to /static and serve stuff out of there.

FastAPI doesn't work like that.

You've got to explicitly enable static files, in two or three steps.

So first we're gonna create a directory called static.

And in here, let's just create something simple.

We'll do a "site.css".

Into site.css, let's move the contents of this <style> block over here.

And let's change the color just slightly.

So you get a sense of, you know, here's something different.

Let's make it like a light blue, maybe purple.

Anything different is all we need.

And then let's go over here and try to include it.

So we have a stylesheet link, and check this out.

If I type /static, I'm already getting some completion here.

You get even better completion if you go over here and say Mark Directory as > Resource Root.

But it seemed like that worked pretty well for us.

And if I run this, let's go and see what happens.

Well, where did our green go?

Green is gone because I took it out of the page.

But why isn't it purple?

Well, because this is not working.

If I try to click on it, this is cached again.

But if I click this: not found, it can't serve that.

So the fix is easy, but not obvious.

Let's go over here. I told you we'd be doing more and more things in these various stages, which is why I broke it out.

I want to go to the app, and I need to mount the static folder.

And then what I need to do is pass along this thing called StaticFiles, and that comes out of Starlette.

Not FastAPI: FastAPI is an API framework built on top of Starlette, so a lot of times you'll see things like the request and response classes, or this one, actually being provided by Starlette.

In here, we're going to give it the name of the directory as well,

"static", like that.

I think we've gotta pass it explicitly, as directory="static".

These are all keyword arguments.

And then, if that wasn't enough static, we're also going to say name="static" like that.

All right, you would think this might do it, and it will get us part of the way there.

Check this out.

It says if you're going to use static files, we would like to be able to serve them asynchronously as fast as possible without blocking the rest of the requests.

A really cool way to do async/await file access is with this package called aiofiles.