|

|

|

8:51 |

|

|

show

|

2:43 |

As you know, it's a services world out there, almost anything you need to interact with has an API and if there is no API, there is probably a webpage and I am going to show you that the combination of APIs and web pages allow you to connect your app to almost anything.

So we might want to talk to github to work with source code, repositories, automated builds, maybe we want to use Twilio for adding telephony and text services to our app, of course we want to update people through their Slack channel, send and subscribe people to our mailing list via MailChimp charge them money over their credit cards using the Stripe APIs, query some backend business data using Salesforce, send out some emails with SendGrid or even manage and work with our projects offline through Basecamp.

So if we want to connect our app to these types of services, and many more, we are going to need to learn how to work with services in Python.

And that's what consuming HTTP and Soap services in Python is all about.

So, whether you want to talk to github, Stripe, Basecamp or even that old fashioned Soap service that runs the backend of your business, you are going to learn how to do that here, And by working with all this services, you'll see that you can give your app super powers.

I hope you are very excited to explore the world of services from Python with me.

What are we going to cover in this course?

Well, we are going to talk primarily about transports that run over HTTP, Rest, Json over Rest or XML, or a variety of other things, and we are even going to talk about Soap.

So we are going to use Requests, we are going to use urllib2 and we are going to use urllib.requests.

Now, if you are unfamiliar with these, Requests is the most popular way to write Python clients for services, however, sometimes we want to use stuff that is in the box if you will that comes with your Python distribution that everybody already has, because Requests you have to install separately.

So we are going to talk about Python 2's urllib2 and Python 3's urllib and associated submodules like Requests and error and so on.

Now, many of these services are going to require us to authenticate, to log in, so we are going to see how we can do username, and password authentication to access restricted services, we are going to work with a variety of different data formats.

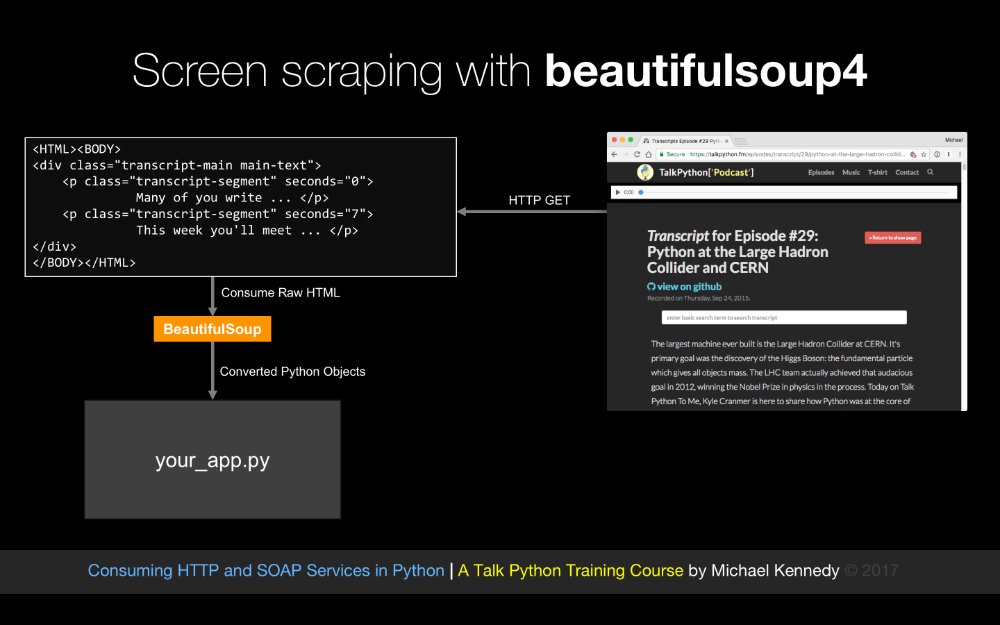

When we talk to these HTTP services, they might want to send us data back in the form of Json, or xml, or super complicated xml in the form of Soap or even binary data, so, we are going to look at how each one of these data formats is managed and worked with in Python and how to use that kind of data from a service, and finally, if there is no service, we are also going to talk about screen scraping.

Often we'll have a direct end point that we can contact and use a well known API against, but if the data is on a webpage and there is no API, what do you do?

Well, you do screen scraping, and screen scraping will let you go to a standard HTML page and more or less turn that HTML page into an API or series of HTML pages, so at the end of the course, the final chapter that we are going to cover is doing basically adding services to websites that don't have services by making their webpage themselves the thing that provides data to our app.

So that is what we are going to cover, and who am I anyway?

Well, my name is Michael Kennedy, thank you so much for taking my class, hey, that's what I look like, it's nice to meet you, you can follow me on twitter at @mkennedy and why should I be teaching this course?

Well, I've been working with Python for quite a while, I run Talk Python To Me, the number one Python podcast with over three million downloads, so I've had the unique opportunity to interview hundreds of people who are doing amazing things in Python and many of them have written the libraries that we are actually going to cover here, so when it makes sense, I am going to actually give you a look behind the scenes, at the people behind some of these technologies that we are actually going to cover in this course.

I am also the creator and founder of Talk Python Training, I've written many of the courses and I have over ten years experience teaching hundreds of in person and online courses throughout the world, so I am super excited you are at my class, it's great to meet you and we are going to have a great time working with all of these services.

|

|

|

show

|

1:34 |

The first thing you are going to need to take this course is Python of course, and I am sure many of you are happy to hear it's Python 3, not Python 2, and you might as well use Python 3.6 but if you have 3.5 or something like that installed, that's totally good, if you are on windows, there is a good chance you have no version of Python and you'll need to install Python 3; if you are on OS 10 or macOS as it's called these days, you probably have Python 2 which ships with it, but not Python 3.

If you are on Ubuntu, chances are that both Python 2 and Python 3 are there, so check your system, see what it is that you need, but we are using Python 3 and we are not using neither of the 3.6 features, so if you have 3.5 or 3.4 that's totally fine.

Now, there is a very small segment where we do talk about using Python 2 because the way the built ins work in Python 2 and Python 3 are different, so we do touch on Python 2 just for a moment, if you are on Windows, you are going to need to install that as well, but only if you want to do that little piece, you don't technically have to do it.

Some of you may be thinking oh should we do this on 3, should we do it on 2, I think the debate about Python 3 and Python 2 is pretty much at the end.

If you look at the last several PyCon keynotes done by Guido Van Rossum he always opens up with there is going to be no new versions of Python 2, no Python 2.8.

So I think certainly going forward Python 3 is the place where it's at, that said, the actual differences except for the built in sections are extremely small, so really, it's either way we'll work but the code we are writing is technically Python 3 so you want to have that installed.

|

|

|

show

|

0:59 |

The editor and the IDE that I am going to be using throughout this course is called PyCharm.

In my opinion, this is hands down the best tooling for writing Python applications; you don't have to use PyCharm, if you do want to just go to jetbrains.com/pycharm, they have two basic versions, you can get the community edition which is free and open source, or you can get the pro version which costs some money, it's not super expensive but does cost some money.

For this course, I don't believe there is anything in there that is required that is only in the pro version, so the community edition should be totally fine, for taking this course, if you have the pro version, hey you have some other cool features and that's great.

If you don't want to use PyCharm, that's fine, the code we are working with is not that complex, it's pretty self contained, you can use Sublime text, you can use Visual Studio Code with the Python plugin or extension, you can use Emacs, you can use Vim, but the demos I am doing are going to be in PyCharm so if you want to follow exactly along- get PyCharm.

|

|

|

show

|

0:32 |

We are also going to be using a API exploration tool called Postman, so Postman is a cross platform gui for interacting, analyzing and just generally recording and playing with APIs.

We won't make heavy use of it, you don't have to install it if you don't want to play with it, but I find working with APIs sometimes is great to get a good understanding and play with is and then implement it in code, and you'll see me do that a few times in the course, and we are going to be using Postman so Postman is cool, and free, so it's worth checking out.

|

|

|

show

|

0:58 |

Finally, we are going to write a lot of code in this course.

Well, I am going to write a lot of code in this course, and hopefully you follow along, it would be great when you see me doing these demos, for you to say okay, I am going to take basically the idea that I saw Michael do there, and I am going to write that, all of the APIs in fact that we are working with are public and out in the open.

So, even the ones where we have to modify data are publicly accessible, I've created some special ones for this course, so you should be able to write the code and follow along in examples.

So I strongly encourage you to have your own little projects, your own set of Python apps that is more or less replicating what I am doing here, but I do have the source code of exactly what I typed on the screen available to you here at github, it's consuming_services_Python_demos on my github account, and I encourage you right now to go star and/ or fork that repository so you have it as part of your record.

|

|

|

show

|

2:05 |

Welcome to your course i want to take just a quick moment to take you on a tour, the video player in all of its features so that you get the most out of this entire course and all the courses you take with us so you'll start your course page of course, and you can see that it graze out and collapses the work they've already done so let's, go to the next video here opens up this separate player and you could see it a standard video player stuff you can pause for play you can actually skip back a few seconds or skip forward a few more you can jump to the next or previous lecture things like that shows you which chapter in which lecture topic you're learning right now and as other cool stuff like take me to the course page, show me the full transcript dialogue for this lecture take me to get home repo where the source code for this course lives and even do full text search and when we have transcripts that's searching every spoken word in the entire video not just titles and description that things like that also some social media stuff up there as well.

For those of you who have a hard time hearing or don't speak english is your first language we have subtitles from the transcripts, so if you turn on subtitles right here, you'll be able to follow along as this words are spoken on the screen.

I know that could be a big help to some of you just cause this is a web app doesn't mean you can't use your keyboard.

You want a pause and play?

Use your space bar to top of that, you want to skip ahead or backwards left arrow, right?

Our next lecture shift left shift, right went to toggle subtitles just hit s and if you wonder what all the hockey star and click this little thing right here, it'll bring up a dialogue with all the hockey options.

Finally, you may be watching this on a tablet or even a phone, hopefully a big phone, but you might be watching this in some sort of touch screen device.

If that's true, you're probably holding with your thumb, so you click right here.

Seek back ten seconds right there to seek ahead thirty and, of course, click in the middle to toggle play or pause now on ios because the way i was works, they don't let you auto start playing videos, so you may have to click right in the middle here.

Start each lecture on iowa's that's a player now go enjoy that core.

|

|

|

|

11:51 |

|

|

show

|

2:49 |

Let's begin our exploration of services and consuming services in Python by taking a survey of the various types of services that we might encounter out there in the world.

We are going to do this by focusing on the lowest level services and moving up in abstractions.

So the lowest level service that we could have, the way that we could talk to the service is with using just raw network sockets and just sending bytes on the wire.

So we would open up a socket with bidirectional communication and we would send 00101 over the service and it knows what that means, they will say great, 1101 and so on.

This is the fastest, lowest level, lowest latency way to interact with services, so there are benefits here, it's super fast and it's responsive because we can actually send custom messages tailor made for the types of messages that these two apps exchange, it can be extremely low overhead in terms of bandwidth, do you need to send four bytes as an integer?

Well, maybe you just send four bytes and it just knows an integer is coming, so literally, there is no wasted sort of packaging on the messages, so why aren't all of our services like this, well, there is usually way more drawbacks than there are benefits.

many time this means there is special protocols, you have some app, in order to talk to it you have to use an entirely unique protocol just to speak to that.

And often those don't have standards around them, often those are brittle and just not easy to work with, not easy to get started.

Another problem is proxies and firewalls, especially firewalls.

So packet inspecting firewalls really hate letting traffic out of the firewall, if it's some sort of binary blob.

They would much rather see some kind of HTTP traffic where they can inspect it and make sure that it's all legit and good.

Also, these often run on non standard ports and there can be problems with firewalls as well.

There are some platform specific issues you run into as well, like just above raw sockets, but still more or less sending raw bytes are things like on the Windows platform DCOM or .NET remoting, on Java we have RMI, remote method invocation and these might be sending like in memory representations of some kind of object from one .NET app to a .NET server for example, and that means it's extremely fragile and really inaccessible to Python or Javascript or other things like that, it's like you have to basically have the same technology on both sides.

And also, if you look at the network traffic, can you make sense of it?

I can't, not usually anyway, it's very hard to read just binary blobs, it's also hard to debug if things go wrong because you cannot read it, okay, but we do have low latency, low bandwidth, high speed raw sockets, and that is possible of course in Python.

|

|

|

show

|

5:42 |

Another thing that we might run into, especially little bit older services, especially inside enterprises, inside these big companies, they built a bunch of internet apps that they can connect together, it's very likely that what you are going to run into is a Soap web service.

Now, in the early days of the 2000s, 2001, 2002, Soap services were fairly ubiquitous for intranet communication.

And, of course, as Javascript grew and the internet grew in popularity especially with regard to services, the HTTP services and Json Restful services more or less edged Soap out, but it's very likely that you are going to run into a Soap service if you are working with older systems inside of a company.

So it's really important that we talk about them here because you don't want to have to switch technologies just because you are talking to a old service, right, so what's the deal with these Soap services, how does that work?

Well, it's basically HTTP traffic, the thing that is kind of an unusual is every request, regardless of whether you are getting something or you are updating something, every request is a post which indicates some kind of action, so here let's suppose we want to login and we are going to post to a single location and this action header actually tells the service what to do, and what we are going to send is a big blob of XML.

It looks like this.

And the service is going to respond with another big blob of XML, we are going to parse that apart back in our app.

Now, what are the benefits?

One of the things that is nice about these Soap services is they typically have some kind of UI describing them, and they also have UI that describes them for tooling, so they are really built with the expectation, it's basically required that at least one side of these, the fence, either your app or the Soap service have some really strong tooling support to generate the stuff.

I go so far to say these are the easiest services to create and consume if, and this is a huge if- if both sides of these arrows, your app and the service have excellent tooling support for Soap services.

that's not the case with Python, and these are certainly not the best services, that's why they are not very popular anymore, but they do have a button press generate the server side, and client side tooling support, and that way they make them easy but like I said, I don't think they are the best and I definitely don't recommend using them if you can avoid them.

So what else is a drawback about Soap services, we talked about post, and this post only behavior means that it basically breaks the architecture of the internet, the internet is built around get, post, put, various HTTP verbs, and only HTTP get is allowed to be cashed, and that makes proxies work better, that makes local cashing work better, there is all sorts of scaleability benefits, and other semantics around being able to support HTTP get the most common way to access resources on the internet but Soap services just entirely missed this, they just didn't even understand that HTTP verbs meant a thing on the internet when they were created, so everything is post which means a lot of the infrastructure benefits of being an HTTP service are lost.

It requires tooling, so things like Javascript and Python are the real disadvantage in working with these services, but that said, we are going to cover some pretty cool stuff on working with them from Python if you must, they are often focused on remote methods instead of remote resources, so here we have a log in method rather than accessing something about our user and that can lead to poorly designed services.

These Soap messages they exchange obviously they are bandwidth heavy and while you technically can read them, if you must, they are not really friendly to human beings, they are friendly to computers, and they can be read by humans, but they are not what I would call readable.

Let's look at this Soap exchange, in detail.

So here I have an operation, imagine this is going to be called on a web service that is a Soap service, so I want to take a number, and I want to take a number and I want to double it, I want to take an integer and double that integer, so how does this work?

Well, of course we want to send this as an HTTP post, so what we are going to do when we call this, the tooling that we would have had to use to somehow generate this service, would convert this action and this data into what is called a Soap envelop.

So the Soap envelop is an XML namespace laden bit of XML that is quite dreadful and you should stay away from it if you can.

But like I said, if you have to call these services, being able to do it in Python is cool, so here you can see we have a Soap envelop, and there is a Soap header and a Soap body, the Soap body contains some parameter, and that parameter's value you can see is 123.

So we are going to want to double the number 123.

It's going to go off to the server, via post, via HTTP, some parts you don't see here, the action is actually stored in the header, it's going to process that, and generate a response that comes back to us that is also a Soap envelope.

And this time the Soap body contains a double an integer response which also has an integer and that integer's value is 246.

So you can see, yes, awesome, we doubled the number and you can bet that the value returned from this function very much returned 246 when we gave a 123.

But it took almost a kilobyte of data exchange just send the four bytes over, the 123, and return the four bytes back, 246.

So, this is not very friendly to people, to the network, there is lots of problems with it, but this is how Soap works.

|

|

|

show

|

2:28 |

Alright, next up, the majority of services that you are going to see, are going to be HTTP restful services.

Be careful using the word restful because just certain people restful means very specific set of criteria, that most HTTP Json based services don't actually meet.

Maybe they are 75% restful or whatever, so I am going to try to use HTTP services but these are more or less restful services for their features, even if they are not entirely complete.

So how does this work, well, we have our app, and again we are going to do an HTTP request and again, this is going to go over HTTP but this time it's going to be a get request and it's going to be a get request against something that is a noun not a verb.

And this time we are going to request API/users/7 indicating we want the details about user 7 and what we are going to get back from the server, is going to be a Json message with the name Michael and id 7, presumably, we even get more information, but just keeping it simple for the slides here.

So what are the benefits?

Well, there are many.

This is universally accessible, this service is universally accessible, almost every platform has decent support for basic HTTP string exchange.

This is super easy to do from Python, this is super easy to do from Javascript, as well as C, ObjectiveC, swift, you name it, we can call this function from basically anything that will talk to a network.

Because it's HTTP and it follows the get post put standard HTTP verbs, it's firewall friendly, proxy friendly and for example this request is potentially cashable.

Reading this message name Michael id 7, is ridiculously easy, it's entirely human readable, and while it's not as light weight as the binary exchange it's dramatically more lightweight than what we would get if this was a Soap message, I've put this in the relatively light weight bandwidth story.

There is some drawbacks, it's often that you will find these services that are not self-describing, there is some tooling called swagger that you can add to the server's side and then use some tooling on the client side to help describe and generate proxies and things like that but, generally speaking, these services are not self-describing and like I said, if the thing you are absolutely maximizing is bandwidth exchange, well maybe raw sockets would be that, but if it's got to go over the internet, this is about as good as it's going to get.

|

|

|

show

|

0:52 |

Finally, let's talk about queuing.

We are not going to cover queuing in this class, but if we are taking the survey of the range of possible ways of communicating with services, queuing probably shouldn't be left out.

So queuing is typically done sort of behind the firewall, right, so I've got a set of apps they are running behind the firewall, one of them is going to get some sort of operation, it's going to add some kind of work or message to the queue later on asynchronously another app that might pick that up and do something, so for example, hey I am a web server, will you send an email to this person, and I am going to carry on serving at my web pages.

Some other sort of email work or process might grab that go yeah sure, I will start the outbound email campaign to this person.

That is queuing, we are not going to cover queuing in this class, but just to round down the spectrum there you have it.

|

|

|

|

11:44 |

|

|

show

|

1:27 |

The first type of services that we are going to focus on, not surprisingly are the most popular type, that is HTTP restful services, and we are going to use a very popular package called Requests for our first look at it and then we'll come back later and look at it through the eyes of the built ins.

So where are we in our overall course map?

Well, we are talking about HTTP clients and right now we are focused on Requests.

You may have heard of Requests, Requests or HTTP for Humans as Kenneth puts it is the most popular package for Python, not the most popular networking package, it's the most popular package, it's been downloaded seven million times a month.

So, it's really popular, and you can see that it has a very simple API and the reason this exists is the built in urllib and urllib2 have some really funky APIs and it's generally hard to work with, there is a lot of funkiness and it even gets less good, if you will, in Python 3, rather than Python 2, so the built ins are not super joyous.

There are reasons to use them and we will talk about what those are and when, but for our first look at HTTP services, we are going to absolutely use Requests.

Like most Python packages, we are going to install Requests via pip, so here you can see its projects page on PyPi, this is the newer version of PyPi at pypi.org/projects/requests and it says to install it, we are just going to pip install requests, that's pretty straightforward, so let's go do that now.

|

|

|

show

|

4:06 |

Now, we are going to need to install some packages, during this class, in particular we need to install Requests, we are going to need to install Beautiful Soup, and some of the other foundational or dependent packages there.

Now, one thing we could do is we could say pip install requests or something to this effect, however, if we do, this is going to modify our global system, and there is a couple of drawbacks to that, we don't want to modify our global system because it requires us to run these installers as admin, and this is kind of running arbitrary code off the internet as admin, not super recommended, it also means that we are going to use the same version of the library across every project we run, on our computer, so maybe we want to use the newest request for this, but some other projects it's old and it needs to use an older request because there is some kind of change, how do you manage those two environments?

So the answer of course is to use virtual environments, so let's just ask which pip or which pip 3 is really what we want, so we want to say, see this is obviously the global one, and if we ask it what is installed, you'll see that there is a bunch of stuff installed, so, let's actually create what is called a virtual environment that we'll use for the rest of this class, so I am in a directory called /pyhton/environments, there is a lot of conventions around this actually, there is different locations for different people, sometimes you put it in your project directory, sometimes you put these outside, I am going to put them in this folder in my user profile.

In Python 3, there is a built in way to create a virtual directory, so we can say Python 3, now on Windows, there is no Python 3 just be aware, you just have to have the path to Python 3 versus Python 2.

So that is a little tricky, but on Linux and OS 10 we can say Python 3 and that is pretty straightforward, and we want to run the module, venv, and we want to give it some sort of path, so let's say we'll go to consuming services venv.

Now, this will totally work, however, just one word of caution here- if your are on a Mac, and you are going to use PyCharm, both of these things need to be true, if you are on a Mac and you are going to use PyCharm, there is a problem with PyCharm understanding the type of basically following the symlinks too far when you set up a virtual directory this way, and so what you need to do for the time being, is add a copies flag here to say don't create symlinks, create just a copy of the Python file that we can link to directly in this environment.

So, because I am going to be using PyCharm, I am going to put --copies and I am on a Mac, it's up to you, but, I recommend doing the --copies for the time being.

Okay, great, we've created it, how does this change our pip story?

Not at all, because notice my prompt, it still says Mac Book Pro, just standard prompt there, so what we need to do is we need to activate this, we need to basically change this individual shell temporarily to know only about that Python, so I can come over here and say .

(dot) so apply this what I am about to do to this shell, and I am going to run ./consuming_svc_env/bin/activate on Windows, you don't need the dot and this is activate.patch.

Notice, my prompt changed, if I ask which pip, now it's this one, again, if I ask which Python, it's now this one from this environment.

Great.

So, most importantly, what we are after, we were asking for a clean environment, and here we have a brand new fresh clean virtual environment, with pip and setup tools.

So now we can start installing things that we are going to use for this project, here, so let's say pip install requests.

And it either uses a cash version or downloads it from the internet, and then installs it, and now if we ask pip list we can see, yeey, requests is installed, so let's just verify that everything is hanging together, we'll do a quick little thing here, we can come over and import requests, and because that didn't break, things are looking good, and then we can just make sure that there is actually something reasonable here, there we go.

So we've got cookies, sessions, get, post, those kinds of things.

Alright, so it looks like requests was installed successfully.

Now we are ready to use this environment, this clean environment which does not require admin rights on the machine and is just dedicated to our little project here, we can use this clean environment for the rest of the class.

|

|

|

show

|

1:53 |

So we've been able to create this virtual environment, that's great, however, let's get this into PyCharm, let's load up our project and let's actually register this environment as the one that we are going to use to execute all of our code.

So over here, I actually get cloned the service demos, these are the demos that you have access to on github, and I've actually just renamed them so the name isn't so super long.

And on OS 10 or macOS you can just drag and drop this into PyCharm or even Sublime text or Visual Studio Code, lots of these editors support that.

And it will open the project.

Okay, cool, so here we are, now how do we take our virtual environment which is over here in this Python/environments/consuming_svc/bin, this executable and make it the Python that we are going to use in PyCharm.

Well, we can come over here and we go to settings, and there is a project interpreter, notice right now, it's just the system wide 3.6.0 so what I want to do is add a local virtual environment.

If you want to do this entirely from PyCharm and not use the command line to create the virtual environment, that's fine, you can just use create virtual env, but because we already have one, we are going to go and do add local, now over here, I am already in this directory, because you can see here is the Python so I can just say open.

on windows it's start.

And I could take this over here and say okay, I want to use this, and let's go and drag it down here and say whatever that is that is the path.

So now you can see it's a virtual env at Python/environments/consuming_svc_env, and everything looks good, it looks like we technically could upgrade this here, so we can go ahead and upgrade setup tools and that will happen in a second, great, install a few other things.

Alright, now, when we run some code, PyCharm will now use our virtual environment.

|

|

|

show

|

0:38 |

So let's review installing requests, we had already created a virtual environment, but don't forget to activate it, so .

(dot, space) the path activate on Windows, you don't have to do the dot and this is just activate.batch.

So either way, our prompt will change and then we'll have access to the packaging tools for that environment.

Then of course, we are going to do pip install requests, that is going to download and install it and then just to make sure you can make a quick pip list to see what you've got, and here we can see that requests 2.13.0 that is the latest at the time of this recording was successfully installed and ready to roll.

|

|

|

show

|

2:41 |

Now that we're up and running with Requests, let's make a simple get to a web page.

So first of all, we are going to need the Python script to run aren't we, so let's go create a new Python file and we'll just call this simple get, and PyCharm is asking should this go into the github repository, yes, it should.

Okay, so what we are going to do is we are going to start by importing Requests and that is coming out of our virtual environment that we wired into PyCharm here, and let's just make sure that works, so we'll just do a little print here and we can make, over here there is no our little run button, it's grey, that's because there is no what is called a run configuration, so let's create that by right clicking here, and saying run, and notice it's using our virtual environment Python here, and it just says hello.

And so import, that worked well, our environment is working, everything is looking great so far, so the first thing we are going to need is a url.

And let's just go to talkpython.fm and do a download there, so what we are going to do is we are going to use requests we are going to do a get, we are going to issue that get to a url, we are going to capture that into a variable called response, or resp, now we can come over here and just print out and see what happens, see if this works, so there is a little pause, it actually did the download and we got a 200, that is awesome, so it looks like that worked.

HTTP 200, that is about as good as it gets.

So, let's actually add a little check to make sure this works, so suppose I had something wrong here, we should have a 404 or something to that effect, or even worse, something worse.

Okay, so it looks like the response came out great, so let's do a little test, we'll say if resp.status_code !

= to 200, then something went bad, we'll print error requesting url, maybe we'll put the little response code here, something like that.

And then, I like to return or break, I can do an else here, but let's go ahead and make this a little nicer, I am going to define a main method, which is this, and that is going to be the code, and we'll just run this main method, if and only if it's time, so here we can say return and we'll be out of here, so we'll cancel a lot of this execution if there is an error but if there is not, we are going to print out, let's just print out resp.text.

Now, that is going to show the entire page, you'll see it's screen by, maybe we just want the first 500 characters, just to see the title, so of course we can use a slice on the string, which is nice, here we go, so let's see the title here, talk Python to me podcast- alright, it looks like this works, so super easy, request.get, give it the url, check the status code and work with the text.

|

|

|

show

|

0:59 |

So that was simple, right, the whole promise of Requests is that it makes doing simple operations simple and easy and straightforward, I certainly think that that operation fit the bill.

We start by importing Requests, and then we just do get url to actually download the response, now this is a blocking call, it's not going to return it's we've actually gone to the server, looked up the dns for talkpython.fm done the get, got the response, entirely downloaded it so this is a blocking call, and we have response and it has both the status code set so we also have headers and cookies and things like that and we can access the text property, so here we are just printing out, here is the first 500 characters that we got, just see what this was all about, now this was really cool that we could go do this simple get against the website, but what we want to work with are actually services, things that are meant to talk, computer to computer, program to program, not program to humans or program to browser to humans, however you want to think about it, so that is what we are going to do next.

|

|

|

|

17:18 |

|

|

show

|

0:57 |

While HTML and text is really made for humans, that is not the focus of this course, a focus of this course is to consume data and formats meant for programs and computers.

So, what does that include, well, Json is the most popular exchange format these days, especially around HTTP restful services, back when Soap was popular XML was really important and we saw how XML being passed around or take things like rss feeds, there is a number of older services that still use xml so we are going to work with that, obviously binary data is very often something we want to access and download, think of a zip file or an image or something and then.

Soap, we are also going to come back and look at Soap, but for now, let's focus on Json, so we are going to look at how we consume Json services, obviously, but before we do that, I think the right place to start is for us actually to just do a little bit of work with Json in Python and leave the network out of it.

|

|

|

show

|

5:53 |

So here I have a little bit of Json text.

So, this Json string, if converted to Json or to other objects has a demo field and that says processing Json in Python, and the instructor is Michael and the duration is 5, presumably minutes.

So let's start working with this, let me just print this thing out for a second, now, notice this is still running the old one so I am going to right click and run that and it prints it out and it's kind of indistinguishable from say like a Python dictionary, which actually means working with Json from Python is super natural because the mapping between Json as something in Javascript or text mapped over to Python as dictionaries is nearly one to one, it's not exactly but very close, but let's just make sure that that is actually a string so I'll say what is the type of this things as well, now you could see, okay it is a str, it is, so how do we load this?

Well, Python comes with what is described as 'batteries included' and that means it has a rich standard library including support for Json, you could see that is coming straight out of the library, so we don't need anything external, we don't need to pip install anything, to work with this, so what we are going to do is we are going to come over here and we are going to parse this Json so we'll say data, so there is a couple of things we can do, we can load or we can load s, now, I think the naming choices here were super poor how about load from string, or load from file, or something to that effect, but load you can see fp for file pointer and load s, s for string so much for good variable naming, but the one we are looking for is we have some text in memory, we want to turn that from text in the form of Json into Python dictionaries in the form of data, so we are going to use this and this is the one you are going to use most of the time when you are doing services because you will make an http request and you will have text in memory, so text Json and then we can print this out again, we'll just print out data and if I run this, you could see it looks really similar, it's different it has single quotes instead of double, because that is the way Python dictionaries represent themself, and it's all in one line, again, because of that.

But if I gave you that string there, you couldn't really be sure that that didn't come from some sort of service, so let's go and do type again, here we go, str says it's string, now it's a dictionary and you can see that actually the duration is a number, it doesn't have quotes on it, and that is pretty cool, let's get a few pieces of information here, we'll say in structure= now we want to get information, we want to get something on this dictionary, so that is standard Python, you just do that by indexing in with the key so we'll say instructor and then I'll print your instructor is { }.format, maybe I'll even spell it right, how about that?

Now if we run it, you can see your instructor is Michael, now this is usually good, but sometimes this isn't so good, so for example if this doesn't appear we are going to get something super bad, we are going to get a KeyError, right, it sort of interspersed throughout here but somewhere in here, there we go, KeyError instructor, so we can use a different format, different style of getting the value, so that is probably recommended because the internet you never know, we can say get me an instructor like this and then it just says oh, your instructor is none, or we can even ply a default here saying substitute if there is not instructor presented or we are just going hey look, you are getting a substitute teacher.

Alright, right now it's substitute, so if I go and put that back, now, hey your instructor is Michael, very cool, okay, so that is great, final thing let's make a change to this data, let's say you know what, your new instructor is going to be Jeff, and somehow I want to let's go and just print out the data and see that is changed, oops, don't need that twice, do we, we have your instructor is Jeff, okay, so here we are processing this, instructor Jeff, but this is actually a Python dictionary, right, it's a dict, we want this back serialized as Json, so how do we get this back, we'll say new Json and then we have a reverse of load s to dump s, again, naming is really kind of unfortunate but it is what it is, you can dump Json to a file pointer, or you can dump s into memory for the same reason that we frequently use the load s in services, we are going to use dump s in services as well, and then let's just print out both the type of new Json and also new Json itself.

Let her run, boom, there it is at the end we have class str and now this is the Json, notice the double quotes and our class is still called the same but our instructor is Jeff, same duration.

So that is basically it, working with Json from Python, really it comes down to the Json module, load s, dump s, and then somewhere in the middle, just working with straight up Python dictionaries, one thing that may become some sort of hangup for you and there are ways to work around it, with the Json module is that is there was some kind of date in here, this would not work so for example, if we come over here, and this has a datetime like now, and we'll just put str time or something like that, if we try to run that, it's woow, datetime is not a serializable, so basically, you can register handlers for serialization and you could also just do like a string representation, so you could choose like what string or representation you want, and then we'll store it like that, okay.

But dates themselves are not supported in Json, just be careful of that.

Alright, now you know about Json and working with it from Python, let's go get it from somewhere way more interesting than an in memory static string, let's go get it from a web service, somewhere like gihub.

|

|

|

show

|

1:17 |

So you've seen Json parsing and serialization in action in Python, let's review the key concepts that were in play; so this is more or less the same code that we just wrote and remember we started with importing of the Json module, this is a built in thing in the standard libraries, this is a built in thing in the Python standard library so we didn't have to install an external package to get this support, we just import Json and we're off to the races, so we started with Json text which is just an in memory version of some Json string representation, and we want to turn that into something that we could actually work with in Python, so what we did is we called the load s, load from sting, and we passed the Json text in to be parsed, we got back data, which we saw was a dictionary, so we have this dictionary, we work with it however you work with dictionaries in Python using get, assigning to a key to change the values, things like that and then to get the data back out, to send it off to somewhere to save it to a file of course we just did a dump s, dump to string and what we get back is more text, now, it's worth noting that the thing that came out here is sort of somewhat minified Json, there is no like line breaks or indentation or anything pretty about it, you can set an indentation setting on the dump s method and it will be a little bit nicer text if you care about readability.

|

|

|

show

|

8:35 |

I bet by now you are ready to actually consume some real services, we've made some requests and we played with Json, but we haven't really put it all together and we are going to start by doing that with github, so github is a public API and we can use request issue a get to it and then what we'll come back is some kind of Json document that we can work with in our code, so let's switch over to PyCharm and do that now.

So, I've made a new file here first to work with and let's go ahead and just set that as he run configuration, notice we are running out of our virtual environment and we'll just start by adding a little structure, so we are going to import requests and then let's have a main method, and let's conditionally call this, we can use PyCharm's little template to help us out there, there are live template.

Okay, so we are going to need to get the url here for the API and then of course we are going to do something like this, maybe we are going to need to add some headers, maybe we are going to need to do some other things, but we are going to need to start by knowing what is the API we are going to actually work with.

So let's drop in on developer.github,com and check out through API so if you scroll down there is a little bit of a getting started guide, authentication, and so on, we are just going to go over here and let's suppose we want to work with repositories, so if we want to get information about like say repository here, we could go to twitter bootstrap/bootstrap, we could also go to the github repository for this project, so we are going to access https://api.github.com/repos/ let's get the repo for this course, so it would be mikeyckennedy that's the username and then it's going to be the repo name, so over here, let me paste that, okay so now we have our url, we are going to go to the repo which basically contains well, literally this source code, that is kind of meta isn't it, and we are going to go get our own thing here, now the question is what are we going to get back, we can start by exploring this in a real simple way, we already know how to do this request here and we can just print out the response.text okay, so this is kind of the lowest level, let's go ahead and run that and see if this works, cool, it looks like we've got something here, now I don't know about you, this is a little hard to read, if you look at this scroll bar it's huge okay, so that's not great, we technically could do this, import json.loads and we can give it this, and we could then say json.dumps, this might seem a little bizzare but then of course we could say indent=true, run this and we get at least formated Json, but, let's have a better way to explore this, shall we?

So let's jump over here and run postman.

Postman is great for exploring APIs, see I've got some saved ones, that we are going to work with later for more complex interaction when we get to modifying data on interesting APIs and so on, but we can just put this url in here and notice, we can do a get put post but this is just a get and we could set like headers, for authentication if we needed it but we'll just go and send this off, here we get what came back, so you can see we have information about the full name of the repository, its id, the number of teams, the number of forks, all sorts of stuff whether or not it has issues and so on, okay, so we could print out the get url, the clone url, so let's do this, let's say what we want to do is given the name of a repository and the user, we would like to go and actually get the clone command in order to clone it, or maybe get the url to open its documentation or homepage, okay?

So we are going to be doing that, by going and downloading this, converting to Json and then let's say access the clone url.

Okay, so we've seen we've already gotten the text and we can also parse it like this, but of course we should really check that this works, right, and let's also get some input here so we'll do this.

So we'll write a little function here called get_repo_info and we'll write that down here above the dunder main check, and this is going to get two things, this is going to get the user, it's going to be input, we'll just ask whoever is typing here, what is the username, and then we'll do the same for the repo, and then we'll return user, repo.

Okay, great, so I am going to put this in a comment up here so we always have it, in case you forget what the name especially this right here.

Alright, so we are going to do a little bit of formatting like so, okay so that again is going to get what we need, let's format that a little bit and tell PyCharm that no, my name is not misspelled.

Alright, so let's run it make sure this little step is working, what is username, what is a repo name, this is going to be a copy paste, and it looks like it still works, great.

So the next step is to actually check, because it could have gotten a 404 all sorts of things.

So, we'll do this, we'll say if resp.status_code != 200 then we'll print error accessing repo and then we'll just bale, so if we put in stuff that's wrong, error accessing repo 404, if we put in stuff that's right, everything is working, okay.

So this is great, we've got our information, and maybe we should check that the format is Json, but we are more or less guaranteed through the API that we are going to get Json, so we could avoid this whole step and we can actually avoid even using the Json module whatsoever, because requests actually already supports this, so what we are going to do is we are going to have a repo data and we are just going to go to the response and if we know that the inbound data is Json, we can just say Json and that will actually convert this to a Python dictionary so if I just print the type of repo data and we run this, and we put in something legitimate, we get a dictionary.

So the final thing, what we were trying to do the whole time is we were going to say this, print to clone this person's repo named let's just put a little bit info here, so we'll say user repo print the command is get clone and then whatever the clone url is here, so we'll say format and let's just store this for a moment, so what we need to do to get this if we go back over to postman, is we need to go to the dictionary and get the thing called clone url, so we can just go to the repo data and say either like this, this is a little bit dangerous because who knows what you are going to get, you might get a key error, so we could do a get and this will just return nothing, if it doesn't exist, we'll say error no data, something like that, so we are going to get this clone url, we can get rid of this and we are going to come down here and just say here is the clone url to go.

Alright, so let's put this all together, we are going to run this username is mine, repo name is the repo from this class consuming services Python demos and if I hit go, the clone command is get clone such and such.

Well, let's see if that is going to work.

Get clone that, oh it looks like it works.

I guess our service is working perfectly, so that is how we consume services that are Json based, HTTP services at least read only get pay services with requests, we just do basically construct the url we do a get we make sure the status code is whatever we expect, 200 is not always what is expected, sometimes if we were doing a post and creating thing, 201 would be expected but you check for what is the success code if you will, and then we can call just the Json function right on the response and it converts that straight to a dictionary, it's a not a huge step to save, that we are skipping over using the Json module, but it's just one less thing to think about how do you work with Json from this other thing, we just work with it straight from requests and then, once you have it as a dictionary in memory, well, it's no longer a web service it's a problem, it's just data in Python, go.

|

|

|

show

|

0:36 |

Let's review the core concepts about accessing Json services with Requests, of course we are going to start by importing Request, I have to have the url to the service that we are going to call, in this case we are just going to do a basic get so we say request.get given the url, we get our response back and we've worked with this already, but the thing that is new this time is we now can call .json and that will directly translate the text, string representation of Json into a Python dictionary and then we just work with the data in there in our example we got the clone url for the get clone command from wherever repository we were pointing at in the API.

|

|

|

|

21:02 |

|

|

show

|

0:29 |

Now let's focus on xml as a data exchange format, we are still going to be using HTTP clients, we are going to be using rest in particular although this equally applies to urllib once you get the text representation of xml downloaded, we are going to focus on how to work with xml for basic read only services.

Now this is not Soap, this is just straight up xml, things like rss feed and other xml data sources out on the internet.

|

|

|

show

|

9:37 |

Let's work with some xml data from Python.

So here at the University of Washington, they've got some xml data that we can go grab, and they happen to have something from the course catalogue at Reed College which is fancy university here in Portland Oregon, it happens to be where Steve Jobs went; so we are going to import this xml file and we are going to do something interesting, we are going to be able to answer some interesting questions about these courses using xml processing in Python.

Now, in the beginning, we are just going to read the file, we are not going to do any sort of web service, although technically we could sort of point directly at that xml file, later we are going to come back and do this sort of processing from Requests, calling services that actually return xml.

Alright, so let's go add a file here, process xml, and I happen to have already downloaded that Reed file, this xml data that we can look at it here, it's what it looks like.

So, we are going to just work with this locally, in order to get started with xml in Python, we are going to work with a particular class called Elementary, and it's going to come from xml.etree, okay and we are also going to need to work with the file system a little bit, to get to our file, let's get a little structure, alright, so the first thing that we need to do is actually find that file, so let's go and create a variable called folder, and this is going to be os.path.basename(__file__) of wherever this particular file is.

Okay, dunder file will say the name of this executing Python module, so process.xml is a full path, this will give us the folder where that lives, and then we can reach and sign xml data and lad up reed so we'll say file=os.path.join and we want to give it the folder, the xml data and reed.xml, so this works in a nice platform independent way for example this is going to be like /xml/ on OS 10 and on Linux, but on Windows it would be back slashes, perfect, okay, so then what we need to do is just load this up so we'll say xml text, let's go and just put this a width block, width open(file) as fin: and then we'll just say xml_text = fin.read() that is going to read all of the text, so now that we have that loaded up, we are going to want to load, start working with the xml, just like we used Json before, we are going to use the xml element tree, so we are going to say ElementTree.

now there is a parse, but, again, I don't know what the deal is with these sort of file format modules but they kind of suck in names, again, so parse actually loads like a file type thing, so what we want is ElementTree.fromstring, so it seems to me like there could have been a more clear way to name these things but it doesn't matter, xml text is what we want and then we could just print out the DOM just to make sure that this works, and notice we are running the github one from before so let's go ahead and run this one now.

Not a directory, yes, oh I said base name, I meant dir name, let's try that again.

There we go, element at route and so whatever this is element route if we look you'll see that's this, so what we want to do is we actually want to get these little individual courses here, so you see there is a course, more courses, lots of these courses.

So, we are going to read in these courses, and we want to answer questions like what course is running and Eliot, in this room at a given time or something like that okay, you can see this is not a huge file but it's decent, it's 13 thousand lines, so it's got a lot of data about these courses, so we've already loaded these up and the way that we can access these course pieces here is we can use what is called an xpath expression, so what I want to do is actually say courses, we can say dom.findall and we can just say course.

Now we could find, let's find the course titles, just to show you more interesting, so we have course and then we can navigate down to title, so I could do course/title and then I could do something like this, for c in courses: print (c.text), let's try this.

Alright, so there is all the course titles, we've done a search and we've lost some of the information like what room was that course in, I don't know, its title is Genetics and Molecular Biology, but that is really all I know, so we are going to take a step back and we are going to actually get that entire course node here like this little bit right there, little pop up and then we'll be able to answer questions about it, okay, so this is going to give our courses, and now it's cool that this is an xml thing, I'll call this course_nods, something to that effect but I want a richer container for this, so I am going to import something else up here, we are going to import the collections module and here I am going to create a thing, actually I'll do it outside this method call, although it's called once so it didn't really matter, I am going to create a thing called a course, and I'll create that as a collection.named collection, so normally what we get back is just an xml node and we could maybe stick in the dictionary but a named tuple is much nicer, so we have course and then we just say the variable, so let's say we want the title, the room and the building, those three things, so if we do that, and we come down here we can do something more interesting, I can say the courses are going to be equal to, now we can do this as a loop, I'll write it as a loop first, and I'll do a list comprehension in a minute so I'll say for n in course_nodes, and then I am going to create a course, I want to pass some stuff to it and then I'll say courses.append, of course, now we can just come down here print out the course is, okay, so what goes in here, well first the title, then a room, then a building, so the title like this, back over here we are going to need we are kind of working with an element at this level we are going to need to do another query to get this, one to go to the place and find the building one to go to place to find the room.

So those are the three things that we need, first thing is the title, that's easy, so n.find('title') and we can say text, and let's just print that out, and see if that works, oh it's missing its arguments, okay, no worries, we'll do that next.

The next thing we need to do is we need to find the place, then the room, and then the next one, the last one is building, let's see if they call it room, building room, they do.

So here you go, this is working perfectly, we've loaded up the xml, using elementary.from string, we've done an xpath query, a very simple one but you saw that we could do more interesting ones, like for example the place and then we got a bunch of nodes back and for each node we did a little bit of work to transform that node from just a bunch of xml nodes that we could still work with, down to actual almost a class to one of these named tuples and then afterwards, we can do interesting things like we can answer the question like what are the classes running in the building Eliot, okay, so this is pretty cool, but we still have a little bit more work to do, let's go over here and I'll just print it out, it seems like it's working, let's do one final thing, let's go and say building= and we'll do an input from the user, we'll say what building are you in, so maybe somebody sit in there like wait, what class is running in this room right now, and then we'll ask what room are they looking for, what room are you next to and then I could do some kind of query here so I could say room courses, and we'll just write this as a simple list comprehension, so I'll say c.title for so we are going to say for c in courses, and we are going to pull out the title, but only the c where the building, c.building=building, notice the incompletion here, that's beautiful, and c.room=room.

Okay, so these are the ones we are looking for, so then we'll say for c in room courses, print, let's do a little star like this, see that title, actually we just put in the title, so we'll go like that, alright, so let's go and run this and see if this works.

First of all, I'm in Eliot, I'm in room 414, boom, those are the rooms where this is, not that many, just ten or so, let's just double check, go to xml file here and just make sure if I go find this course that it's actually in that room.

And that there are courses that are in other rooms, so here, so those will be 234, let's try this again, I'm in that room, 234, boom, Topics in French Enlightenment, First Year Russian, very cool, right.

So that's all there is to it, we are just going to somehow magically get a hold of the text, later we are going to do this off of the web but right now we just got off the file system, we are going to create an element tree, we are going to parse it from a string, then we are going to run possibly as sequence or series of xpath expressions, one to find the course nodes and then once we have all the course nodes, to pull the individual pieces of data out of them, right, and then finally, once you have the data like from here on down, this is pure Python, once you have that loaded as a bunch of named tuples, well, it's just a matter or writing code against them.

I did say we can improve this a little bit here, so let's work on this, so I can take that and say okay for n in the nodes, what I want to create and send back is one of these, and that's that, right, so that will simplify that a little bit there, just see that it still works, Eliot, 414 boom- still working.

Okay, so maybe that is cleaner, maybe it's not, I don't know, it's up to you but here is just two nice little list comprehensions working across and in memory xml dom.

Next up we are going to apply this to some web services.

|

|

|

show

|

3:22 |

Let's review working with xml in Python.

We're not yet to the services part, but that's actually the easy part, it's working with xml itself that can sometimes be tricky; so here we've got a little fragment of the data that came from that Reed college course catalogue that was reed.xml, you can see we have a title, we have days, we have building, and room, and if we want to work with this, we go and import ElementTree, and just to make our lives easier, it wasn't technically necessary but it is better to create a named tuple which is more or less a class with fields only and no functions, which was all we really needed it was little data containers, and then we are going to parse that from the string representation of xml into the DOM, the object model, we are going to use the from string method on the ElementTree, and then we start writing xpath, queries against it, so here we are looping over the find all of the courses, and for each course, or each really node that we find in the xml document, we are going to run a set of subqueries on that document, so we are going to query for the title, for the place/building, place/room, and then in this example we're also getting the days that it runs.

Then if we want the courses that are held in Eliot hall, or a building, all we have to do is write a little list comprehension say give me the course for course in courses, these are the transformed named tuple types, where the building=eliot.

Boom, it's done, it's worth considering the trade-off of xml versus json and how it relates back to Soap services.

So Soap is a much more strict and honestly convoluted protocol than simple xml exchange.

So Soap has envelops and headers and a bunch of namespaces, and certain ways in which things must be structured, and xml is just much more general, there can be simple xml or complicated xml.

So what we are doing and what we are going to do, for this section, is all about just working with plain xml data, not Soap services, Soap services require tons of tooling and we'll work on that later, right, we'll see that Python actually has some decent answers to the Soap story if you must use those services, but in this case, we are just working with straight xml.

So, we are just focused on working with xml by itself, now, if you get the choice, if you get to decide whether you have an xml service or a Json service, choose, Json, it much more closely matches the way data is represented in Python and the way it's represented in Javascript, so if you are building a web app and it's got a Javascript front end and a Python back end, Json all the way, but, consuming xml is still not too bad as we've seen.

So, let's go look at another example.

|

|

|

show

|

6:57 |

Alright let's put this xml concept together with requests and work with some real live xml data on the internet.

Real canonical case for xml these days is rss, so we are going to go and get the rss feed from talkpython.fm, pull back and answer a few questions about the state of the podcast.

Back over here in PyCharm I've got a new file consume_xml_service and we are going to start by finding a way to get the data off the internet, you can bet that that is request.

We are also going to need to work with xml itself so we'll do a from xml.etree import ElementTree, now we are also going to do some interesting work with dates, and I am going to need to parse dates, and parsing dates is always super not fun, but, we are going to use this package called Python dateutil, so check this out, I can come down here at the terminal, and notice, that it already has the virtual environment activated, that is super cool, so then we can say pip install Python dateutil and that is going to install it for us and from that, we can say from dateutil.parser import parse and this is going to allow us to parse some dates in a little bit.

Okay, everything is set up, let's add a little structure, we'll have a little main method for now, we'll figure out what to do with that, and like so, there we go, so first we are going to start by getting the xml, and let's write a function this time, so we'll call it dom, say get_xml_dom and we are going to give it url, so let's put that up here, and this is just going to be https://talkpython.fm/rss, okay, let's write this function rather than have PyCharm write it, so here we are going to go and do a get, we will get a response=request.get_url nothing fancy here, and we probably should check the response code, so we'll say if resp.status_code !

= 200, return none, maybe you want to log it or something but for now we are just going to do that, and now we are going to say dom=ElementTree.fromstring, and we are going to give it the response.text and we are just going to return the dom, okay so this little function here, we can put it below, I like having the main method at the top, it kind of orchestrates everything, so we are going to get that, we could even inline this, like so, okay, so we want to get the dom here and then we would like to do some kind of query, and to be honest, this really so far actually this point right here is really whole service story and the rest of it becomes like an exercise in straight xml, let's go ahead and run this, rss element, excellent, okay, so the next thing we want to do is let's define an episode and we are going to do that with my friend collection.namedtuple.

okay so I'll just throw in fields title, link and pubdate, and then here we'll have an episode, we'll say get episodes and we'll give it the dom, alright so this function we are also going to write and what it's going to do is given a dom, it's going to go find all he episodes, so the way this works, we have rss and then inside there we have this thing called the channel and inside there we have an item for each episode, so in xpath you don't name the top element, and we don't need the star, we just going to get more than one back, so we'll get item nodes it's going to be dom a find all, with some parenthesis, with some quotes, and then let's just print out how many items we have, I think if this comes back correctly, we should have 97, let's see, do we have 97- server says 97, fantastic.

It looks like this is working, so now what we can do is we can just return a list of episodes, so we'll say episode of and for something, we'll say for n in item_nodes okay great, so now we are just going to need to do some queries, we can say n.find_title, we have link, and I think it's pubDate, it's the way they say it there and that looks good, let's go back and get our episodes and we'll just print episodes.

Now because these are named tuples we should see the data, bam, actually close but no cigar, so we actually found all these but what we gave as field values is the actual nodes, we just want the text from these.

Okay, very cool, look publish date looks reasonable, the length looks reasonable, the title looks reasonable, super.

So there is another thing we'd like to so is like if we look at the publish date, this is just a string in a certain format that is required in rss, it's not really time, so that is where our parser is going to come in, now normally parsing dates is super hard, because all of the various formats, there is like over 700 different ways to represent dates, it's insane, but this Python dateutils we'll try to parse them and it knows quite a bit of them, so let's just give it a parse and see if it will take it.

Oh sweet, it's a datetime, look at that, so we got the parsing of the date working, and yeah, that looks right to me, let's check the next one, yeah, these are good.

Okay, so here we have our episodes and technically, we have these episodes in reverse chronological order, if we wanted them in another order, we could now sort on this like suppose we want them oldest to newest, like in increasing order, so I'll say episodes = this and then we could return sorted episodes and then we want to give it the key it's going to be a function, a lambda function, that takes an episode and it return the pubDate and that will sort it the way we want it, so we should see one right here at the top, episode zero, here we go over, episode one, episode two, perfect.

Because we were able to turn these into real dates easily, we can actually run sort algorithms and not just do string sorting which wouldn't really help us, okay, let's put that character down here, do a little cleanup, okay, so now we have our episodes, let's just print out the first three episodes, so for e in episodes, and we are going to print out, let's print the number and let's print out the episode title.

Now, let's do an enumerate here so we can get the index and while normally the index would be off by one, we do want to add one to it, my show numbers also start at zero because it's a zero based podcast, come on, so the index itself will work perfectly and here we can say title, and this should give us some kind of report on all of them, let's just do the first five so we can see them on the screen.

Boom, number one, notice, number zero introducing the show, number one- Eve, number two- Python and Mongo and so on, and so on, how cool is that?

So, here we are, pulling live xml off of the site, and processing it in real time using these xml techniques.

|

|

|

show

|

0:37 |

So to review, working with xml from services is pretty straightforward.

Again, because it's the easiest way to do stuff on http, we are going to work with requests, and then, we are also going to use the ElementTree, and we are going to call request.get given url, that is going to get us the response, remember, check the status code, make sure everything worked out okay and then we can get a hold of the actual xml as a string, via response.text and then we are going to use ElementTree and parse it as fromstring, and once we have that, we are off to the races, we now have an xml dom in memory and we just do xpath queries against it as we saw in the previous section.

|

|

|

|

10:59 |

|

|

show

|

0:39 |

We've worked with a number of the text data types.

Now, let's talk about binary data, so again we are going to use requests, but this time we are going to focus on downloading and saving, or even a memory processing binary data, this could be things like zip files, it could be images or a whole bunch of stuff, In the demo, we are going to is actually mp3 files, those are fairly large binary files that we can work with.

So a lot of things with requests are really straightforward, this one is a little less straightforward, but not too bad once you see a couple of techniques that will make this much easier.

|

|

|

show

|

9:40 |

In this demo, we are going to go and access a binary resource off of the internet using requests.

So, I am going to get you a get request, but this time to some kind of binary resource, and what we'll get back is a bunch of bytes, we want to bring this back to our app and while we could work with them in memory, we are actually going to write them down to disk.

Okay, so let's see what's involved in making request do this whole round trip.

Now you've seen me create a number of these little starter file projects throughout this class so far and I decided we are just going to start from something that is kind of already structured, so we are going to have a function called get_episode_files it's going to take a random rss url, this could work for any podcast, I just happen to be picking on my own and we are going to say for file in these files here, maybe file url or something like that, we are going to call this function download file, so there is going to be two parts to this, we are going to go and work with xml, here, right, this is standard xml so we are going to need to add an import at the top, so we'll have our ElementTree of course we are going to use request to download it, ElementTree to parse it, and then we are going to use OS and some other modules we are going to talk about in a moment to actually put it on disk.

Okay, great, let's do it, let's write the download part, now this is not going to download the files, it's going to download the xml which will tell us where the files live, so we'll begin like this, so we'll again do a get request against the main rss url and we should check the status code but you guys know how that goes, we've done it a lot, so we are going to assume that the xml text is response.text and then we are going to load it up into a dom, ElementTree.fromstring(xml_text) and then we just need to write some kind of list comprehension here that is going to say given a dom get me all of the links, so remember, it went channel/item and in the item we had a link, we can just use that as our xpath query, so for link let's say link_node.text for link node in dom.findall('channel/item/link') like that and that should do it, let's just run this bit real quick and we can go ahead and print the file, so run boom, so that is actually not the right xpath query this while decent, this is going to show us the actual episode page what we want is the actual episode rss.

So, I need a different one, let's look at the xml real quick.

So what we actually want is we want this thing called an enclosure, not a link, so then in there you can see we have the url which is an attribute, so we haven't really talked about that, so we are going to do this, enclosure and let's call this enclosure_node here and instead of getting the text, we are going to get the attribute here, which is url.

So we'll do url, let's run this again, see if we got it right this time.

Oh, oops, this has to be attrib, so here we have all of the actual mp3 files, so there we go, now I got exactly what we were looking for, so channel item enclosure, get the attribute from the attrib dictionary called url.

Perfect, So now it's really down to the binary data part, how are we going to download these files once we have them, right, we are passing them off of this download file function, how does that work?