|

|

|

13:09 |

|

|

transcript

|

0:44 |

Hello and welcome to build an audio AI app with Python and Assembly AI.

In this course, we're gonna explore how we can apply machine learning through the Assembly AI platform to audio to build some really, really fun and insightful applications.

We're gonna build a cool website with FastAPI that lets us turn audio into text in all different ways, and then build on top of that information to provide rich details that you otherwise wouldn't be able to get.

So if you've ever wanted to work with audio in your Python application and create a cool service around it, follow along.

It's gonna be really fun.

|

|

|

transcript

|

2:24 |

We're all familiar with large language models and advanced machine learning that have really come on the scene in a huge way in the last couple of years.

Now, some of these features can be kind of frivolous.

Like here's a funny joke.

Imagine this, this cool guy over there, he thinks he's beat the system.

He's like, you know what?

I gotta send these business emails.

They gotta be real formal, but I don't wanna write a lot.

I don't wanna write those formal emails.

So here's what I'm gonna do.

I'm gonna write two bullet points, and then I'm gonna hit a button and say, Hey AI, flesh this out, make it sound real professional and send it off, okay?

Off it goes, lands over on someone's desk.

They come back and check their email.

They're like, ""Hmm, really?

All of this?

I gotta read all of this stuff?

You know what?

I don't need this.

I got AI, I'm gonna push a button.

It's gonna reduce this down to two bullet points and tell me exactly what I need instead of all this fluff, right?

Maybe we should just learn how to communicate better and no one has to go through this, right?

So a lot of AI features, they feel like this.

However, there are areas where AI machine learning really, really do work super well in constructive ways.

One of those ways is audio.

So imagine we've got some audio file like a podcast, for example, and we apply machine learning to it, maybe through Assembly AI's platform.

And what do we get back out?

Yeah, it's interesting.

Mark Shannon definitely has vision.

He's developed a plan as of years ago, but we finally were able to put him in a position where he could do something about it.

And we've all been pitching in.

A lot of it has to do with just applying some of the general ideas that are out there regarding dynamic language and optimization.

Things have been applied to other things like LLVM or various JavaScript runtimes and so on.

So here's a transcript encoded through Assembly AI in the Talk Python from the Talk Python To Me podcast.

That is word for word perfect of what was said on that show.

How incredible is this?

It truly is magical.

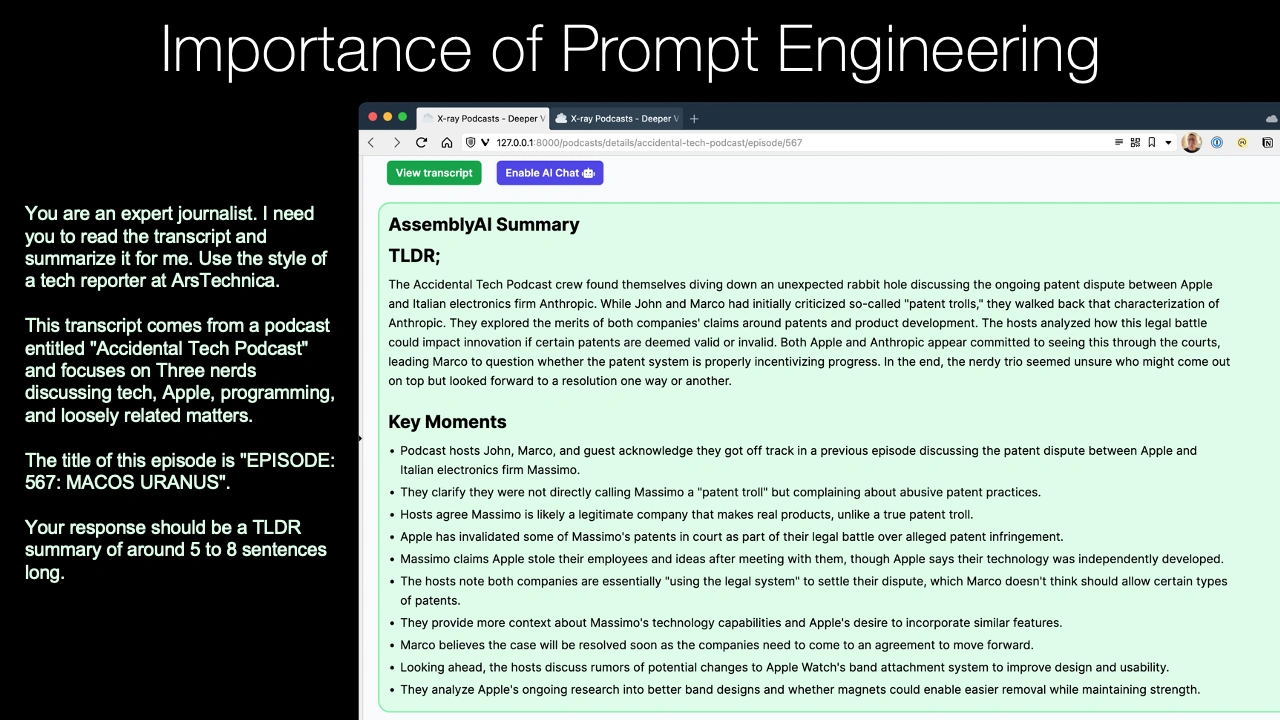

Now, if we take this, maybe mix in a little large language models, then we can start to do really interesting things with machine learning and audio.

And this is the kind of stuff we're gonna explore, these useful AI features in this course.

|

|

|

transcript

|

3:41 |

So we're inspired to work with audio and do something amazing.

What could we build?

Well, possibilities are pretty endless.

We can go this way or that, but I've got a really clear idea of something super fun that we can build for this course.

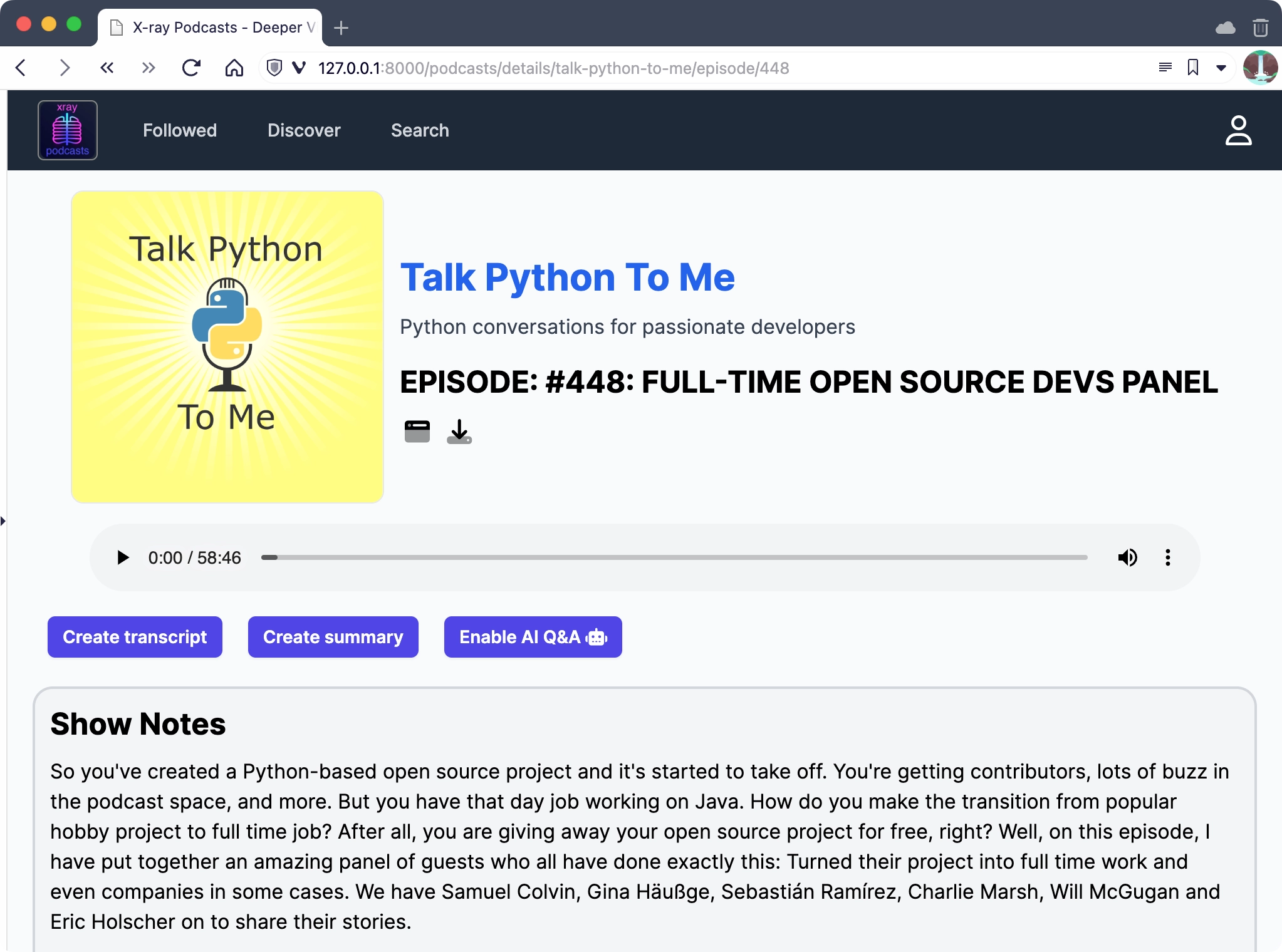

We're gonna build something I'm gonna call X-Ray Podcasts.

This is an app.

What does it do?

Well, as the name kind of indicates, it lets you look inside of a podcast.

It lets you look and see more about it.

So obviously I run a couple of podcasts, but I'm also an avid podcast listener.

Do you know what's really boring and not great?

Is if there's one paragraph for show notes, there's no links, not much about it.

Do you wanna listen to that one or not?

That particular episode?

I don't know.

You don't have enough information to make that decision, do you?

Well, with X-Ray Podcasts, you'll be able to get a TL DR, a little bit of a high action point summary type of thing.

What's happened throughout the show.

We'll be able to get transcripts.

We'll be able to search in them, all of that kind of stuff.

So that's what we're gonna build, this X-Ray Podcasts app.

And here's what it's gonna look like.

Starts out, you can just enter a podcast URL here.

The app is going to download, parse the podcast all through its RSS feed, import it into the system, save in a database.

And then it will start to apply its magic through the machine learning and assembly AI platform and some search engines and those kinds of things.

So here we can start by getting an app.

Then we can go into the details.

For example, here's one of the recent Talk Python ones.

You can see it's pulled in the show notes from the RSS feed, but more importantly, see those purple blue buttons there?

Create a transcript.

Don't have one yet.

And we actually supply one at Talk Python, but many podcasts don't have transcripts, but X-Ray Podcasts, it's gonna let you create them even if the podcast person, the host didn't provide them.

Create a summary, a TLDR and important action items and even have a chat, a Q&A with the podcast content itself.

That's amazing.

Click that transcript button.

We'll get really cool looking transcripts like this.

Press the summary button.

We'll get the TLDR and other things.

Here you can see Sydney Ruckel discusses her career journey in software engineering and offers advice to inspiring programmers, et cetera, et cetera.

That's not from me when I wrote the details for the show.

Sydney didn't exactly say that.

Assembly AI read this and gave us this summary for us.

Super cool.

And once we have all this extra information, then we can deeply search within podcasts.

If you search for a podcast today, you get show notes, you get the title, things like that.

Very, very limited information.

This app that we're gonna build, this X-Ray podcast is gonna allow you to search all of that extra information that we just talked about, surfacing through AI.

So this app is gonna be super fun to build.

We're not gonna build it from file, new project, completely from scratch.

That would spend a lot of time on things like web design and frameworks and database design, all those things.

We're just gonna start from an empty shell of an app and we're gonna add these features.

We're gonna add search, add the transcripts and those kinds of things.

So if you're not a web developer, don't let this scare you away.

We're gonna start with a pretty decently working model that you won't have to change a whole lot for the website and then just adding these cool features, but it's still gonna be cool to see how they surface in a real app.

|

|

|

transcript

|

2:11 |

What technologies are we gonna use, you know, frameworks and things like that in this course?

Well, there's two core ones.

We're gonna use the Assembly AI service, machine learning or AI as a service around audio, and that's gonna be really cool.

And we're gonna plug that into this web app that we just saw, which is built on FastAPI.

Yes, you didn't really see too many APIs.

You can build websites with FastAPI as well, and there's a lot of benefits to doing so.

So we'll see how to do that.

These are the two big pieces we're gonna be working with.

There's also a bunch of supporting technologies.

We have Pydantic, which normally comes with FastAPI, and we may use it with FastAPI a little bit, but primarily what we're gonna be doing is using it with MongoDB and Beanie.

So the database backend that's gonna allow us to do all the cool stuff, like save all that information, do lightning fast queries, and build a search engine, we're gonna build that all on top of Mongo.

We're gonna run that infrastructure through Docker.

So don't worry, you don't have to install anything on your computer other than Docker, and you don't need to know how MongoDB works.

Just one line of commands, boom, it's up and running.

But if you do care about exploring that, well, you'll be able to go a little deeper in the app and see what's happening.

And finally, we're gonna be using HTMX.

We're gonna make this a real dynamic, cool, lively app without going to things like React and all the consequences of going down one of these front-end framework sides of things.

And we're just gonna keep it real simple and use FastAPI and HTMX to create a dynamic and interactive site without actually reloading the pages all the time.

Kind of a several-page app, not quite a single-page app.

Now, you don't need to know most of these.

We're gonna focus on Assembly AI, and when we talk about those, they've got a really nice Python SDK, so there's not a lot we actually have to do in terms of understanding there.

And these others, they're gonna be in the background where you need to know a thing or two.

I'll teach you about them along the way, but they're mostly gonna play a supporting role for what we're doing in this course.

|

|

|

transcript

|

3:16 |

So what are we gonna cover in this course?

We talked about the app that we're gonna build, but specifically, what are the chapters and how are we gonna break up our time?

We're gonna start out by welcoming you to the course.

Congratulations, you're most of the way through that actually.

Then we're gonna be focusing a little bit on setup and making sure you can get this app up and running.

You know, make sure that you can get your editor configured to run the couple of commands that we're gonna need to, virtual environments, getting the database running so that it just can chill in the background and do its magic.

So we'll talk about that just to make sure everything's good to go.

Then I'll give you a tour of that starter code, that starter application.

Remember I said we're not gonna start from complete scratch because that would add a lot of time around something that's not really central to the idea of building audio AI apps, although important, not central.

So I'll take that starter code and we'll just spend a little time understanding how all the pieces fit together so that you can jump in and kind of make the code your own as we go.

Then we're gonna add four core features to this application.

Number one, the ability to generate transcripts using machine learning.

And we'll be able to do this from not just some files that we download, but anything on the internet that basically relates to a podcast is gonna be really cool.

So that's the first start.

Feature number two is gonna be adding deep search.

Once you have all the information about the podcast, plus word by word content of what is inside of that audio file, well, it becomes much more interesting to add search to that situation, right?

So we're gonna add search.

We'll see there's some really cool things we can do to make that really fast and also really user-friendly using HTMX.

So this is gonna be a super fun part.

Building on search, we were excited about what we got out of transcripts.

What about summarization?

So we'll bring in some large language model features from Lemur at Assembly AI.

And we'll be able to ask questions like, give me a TLDR summary of this podcast, or give me action items, or, you know, it's open-ended.

It's an LLM.

So we're gonna do a bunch of interesting things to get additional information on top of just the spoken word, the show notes, and those kinds of things.

And then we'll fold that, of course, back into feature two to enhance our search further.

Final thing, we're going to go and build a chat with the podcast episode.

So imagine this, there's a podcast episode that interviews one of your heroes or somebody you're a fan of, somebody who's really focused on some topic, you know, whatever it is out there in the world.

Wouldn't it be cool to kind of have a Q&A, open-ended conversation with them?

Well, we're gonna come up with something along those lines using LLMs and chats with all this information that we built up.

It's gonna be pretty awesome.

And that's it.

We'll just wrap up the course and make sure that you're good to go and build, you know, all set to go build whatever it is you want, armed with all this awesome knowledge.

So thank you for taking the course, and hopefully this roadmap looks exciting to you.

It's gonna be a lot of fun, what we're gonna build.

|

|

|

transcript

|

0:53 |

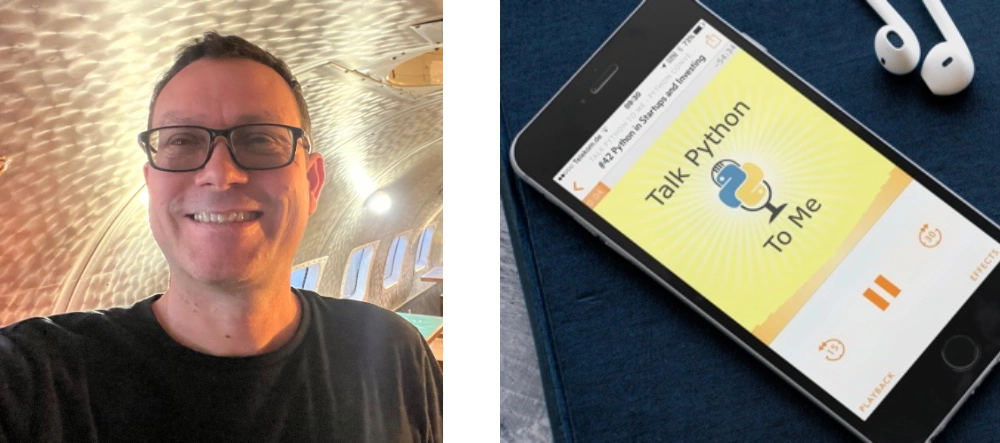

Finally, as we close out this chapter, let me just quickly introduce myself.

Hey there, I'm Michael Kennedy.

You may know me from other courses or from the podcasts that I run, but if you haven't, it's great to meet you.

You can find more about me, my essays that I write and links to many of my personal things over@mkennedy.codes.

I'm the founder and host of the Talk Python To Me podcast, co-host of the Python Bytes podcast, founder and one of the principal authors here at Talk Python Training.

And finally, I am a Python Software Foundation fellow, which is a really cool honor as well.

If you want to get in touch with me, find me over on Mastodon, where I'm there@mkennedy@fosstodon.org.

You can also find me on X, where I'm @mkennedy, if you wish, though I spend more time at Mastodon these days.

Great to meet you.

Welcome to the course.

Let's dive into some content.

|

|

|

|

8:05 |

|

|

transcript

|

0:48 |

In this short chapter, I'm going to show you everything you need to know to make sure that your system is ready to run the app and edit the code and all those different things so that in case you want to follow along, which I highly recommend, you'll be able to do so.

Of course, if you just want to kick back and watch, you know, feel free.

That's great.

That's totally great.

But if you do want to follow along, you'll get more out of it.

So I encourage you to, through each chapter, you know, watch what I'm doing for a while, pause the videos, go over and work on it on yourself, and then come back to the videos and carry on.

So if that's your goal, then you definitely want to pay attention here.

And if not, you know, you just see what it requires to run the app anyway.

|

|

|

transcript

|

0:55 |

The first thing is you're going to want to clone and probably star or fork the repo for the course.

Here you can see it's at github.com/talkpython/audio-ai-with-assembly-ai-course.

And just go over there.

You can also just click the ""Take me to the repo"" button in your video player here.

And this is going to have all the code that you see me write as well as the starter code.

The starter simplified, not AI enabled version of the app yet.

You don't see that yet because we haven't written that yet.

We're going to do that together throughout this course, but I'll put the starter program in here and we'll start from there and go.

So be sure to go over pretty much, I recommend you pause it right now.

go make sure you have the code for the course, and then we'll carry on.

|

|

|

transcript

|

1:25 |

Newsflash, you're gonna need Python to run the code in a Python course.

Actually, not too surprising, is it?

Well, there's a little bit more detail I want to give you.

You're gonna need Python 3.9 or higher.

Right now, 3.12 is the latest, so I recommend that you have 3.12, or if you're watching this later after I've recorded, of course you're going to, because I'm not gonna ship it instantly, it's not a livestream.

You might as well have whatever the latest version of Python is.

At a very minimum, you're gonna require 3.9.

There's certain things we're doing with Python type hints and those types of things that will not work on Python 3.8 or lower.

I've had people send me messages, ""Michael, you've written bad Python code, it doesn't work.

Look, it says that this thing is not understood, and I have Python 3, and I'm trying to run it and it doesn't work.

Guess what?

They had Python 3.8.

Python 3.9 is different syntax, and on and on.

Every version brings a little bit something new, but the backwards compatibility story is really strong with Python.

So get the latest one.

If you don't know if you have Python, if you don't know how to check, or you want to make sure that you're just doing it in a good way, you can go over here to Talk Python Training, and we have an article that is kind of a choose-your-own-adventure.

Are you on Mac?

Have you installed it this way?

Et cetera.

And it'll help you get all set up if you don't know.

|

|

|

transcript

|

1:07 |

Once you can run Python, you will need to be able to write Python and edit Python.

So we're going to need an editor.

I'm going to be using PyCharm for this course.

I'm a huge fan of PyCharm.

I will show you some awesome stuff if you're not familiar with it, and maybe encourage you to be a fan as well.

So get that over at JetBrains.com/pycharm.

There's a free community edition and there's a paid edition.

The community edition should be more than enough for this course.

So you don't need to pay any money for it.

So recommend that.

If for some reason you're on the other side of the fence, and these days the other side of the fence pretty much means VS Code, you're welcome to use VS Code.

This is a great app as well.

Just make sure when you get it, you go to the extensions, that little set of boxes clicking together, and that you install the Python tooling for VS Code.

Because without that, it doesn't really do that much.

It does suggest it, I believe, if you try to open up a Python file and it doesn't have it.

So PyCharm or VS Code, but if you get VS Code, make sure it has its Python capabilities clicked

|

|

|

transcript

|

0:30 |

Good news, I've worked with the folks over at JetBrains to get you a six-month free trial for PyCharm Professional.

It'll save you around $60 and give you a chance to use some of the paid features like support for the web frameworks, FastAPI, Flask, Django, and others like that, the JavaScript frameworks such as React, as well as the data science features.

Just use the link jetbrains.com/store/redeem and the code PyCharm for DocPython with the capitalization you see on the screen to redeem the code.

|

|

|

transcript

|

2:02 |

The final piece of infrastructure or tooling that you're going to need is you're gonna need to be able to run the database.

It doesn't really matter what database we're using 'cause a lot of that's abstracted.

You won't need to see it or know how to work with it, but we're using the free and open source database MongoDB.

Now to run it, if you already have MongoDB installed and running on the default port 27017, that's fine.

Just let the app create a database over there.

It will do that on its own and it's off to the races.

You don't have to worry about that at all.

But I imagine most of you out there don't have MongoDB just running in the background.

You're not like me 'cause I work with it all the time.

I recommend that we use Docker because with Docker, it's one line to install, i.e.

Docker pull, and then one more line to run MongoDB without it leaving any traces on your computer.

Just run it in the most lightweight, isolated way as we can.

So you can do that if you're on Mac or Windows by getting Docker Desktop.

If you're on Linux, you can just get real Docker and go to Docker and then it'll show you how to install it for that.

But if you're on Mac or Windows, download Docker Desktop, you can see right here.

This is a free app and long as it's running in the background, then the Docker commands will work.

One thing I've been playing with lately that you might wanna check out is something called Orbstack at orbstack.dev.

And this is also an alternative desktop environment for Docker containers and has some really cool features like you can see here that this container Mongo server is running and it has a little folder icon.

You can actually click that and open up, in this case in Mac, open up a Finder, just start exploring and editing the files and really getting a look inside similar things with Windows.

So one of these two things, if you're on Windows or Mac to run Mongo, don't worry about how we do that yet.

We'll talk about that just a minute.

But recommend this, unless you already have Mongo, then you don't need Docker.

|

|

|

transcript

|

1:18 |

So in summary, what do we need to run our app?

What do you got to do to get your system ready?

I know there's a few steps here, but I really wanted to put together an app that felt like a real app and not just a toy.

So that's a couple of steps.

Number one, you have Python 3.9 or higher, ideally 3.12 or higher, but you know, go with the minimum version if for some reason you have restrictions there.

3.9 is the minimum.

Go and star and clone the GitHub repo if you want to make changes and push those changes back, maybe fork it and then clone your fork so that you have permission to push changes back and don't change it for everyone.

Install a proper editor, an editor that understands the whole projects.

We'll see there's many moving pieces in web apps, like that is the nature of the web, and having a project structure-based editor like PyCharm or VS Code is invaluable.

We're going to need to run the database, so either have Mongo directly and be happy to run that.

And if you don't, install Docker Desktop, either the proper version or the Orbstack version.

You can check those both out and see what you like.

And that's it.

Once you get this stuff ready, you know, don't go on to the next chapter till you get these things done.

They're all super quick.

And then let's move on and get into the code.

|

|

|

|

19:30 |

|

|

transcript

|

0:44 |

You know what we're gonna cover in the course, and you know how to get your computer set up, at least what's required for it.

We'll talk a little more about that in this chapter.

It's time to dig into the code, isn't it?

So in this chapter, because we're starting from a somewhat complicated, 'cause I want it to be a realistic app, I wanna show you or walk you through a little bit of the code, some of the moving parts before we start adding features to it.

Again, take this as a FYI for your information.

You don't need to follow along.

You don't need to be an expert in say, FastAPI or whatever it happens to be that we're doing.

We'll work in little tiny pieces as we go.

I just wanna give you the big picture when we get started, okay?

So let's dive in.

|

|

|

transcript

|

6:27 |

Here we are in the GitHub repo, and let's just start where I recommended you start.

Let's go up here and clone it.

Notice down here, we now have a code 00 starter app, and there's gonna be more 01, this feature, 02, that feature, and so on.

But down here, it even comes with instructions on what to do, so this is what we're gonna talk through, but it's also written down right here in the readme.

Step number one, we're gonna go and clone it.

Now, I'm just gonna clone it to the desktop for now so you can see what's happening, then I'll move it somewhere else later that you don't need to worry about.

Also, I have a non-default terminal.

This one is warp, and I recommend you check out warp if you're on Mac or Linux.

Maybe later, they said they're working on a Windows version, but it's not out yet.

So sometimes people ask me, Michael, what is this weird terminal thing you've got going on?

That's what it is.

So we're on the desktop.

We'll just git clone, and we'll just put in the URL.

You don't need the .git, by the way.

You can have it, but it's not required.

And here it is over on the desktop.

It'll be called audio something or other.

So you can see we've got our readme.

That's just not, that's not the one I was talking about.

That just talks about the overall project.

We go into code, and we'll have the starter app here.

And then this is the place that we're gonna be working.

So for each one of these, we've got, I'll open it up in Finder here for you.

Each one of these has kind of got this top-level point-in-time name, so starter app, finished with transcripts, or whatever we end up calling these.

And it's got the node modules, and the source, and the requirements.

So I recommend that you open up this folder, or whatever section of the course, or point-in-time you wanna work with, into your editor.

And because of that, I'm over here, and we're gonna do a couple of things.

First of all, notice there's no virtual environment.

It does show there's this global one, but I run with a virtual environment active all the time on my computer in case I wanna blow it away.

It doesn't mess with System Python.

So what I'm gonna do is I'm gonna create a virtual environment.

That's the first thing that we wanna do because you can see we have some requirements.

In fact, quite a few that we're gonna need for this app to run, and that's just a good practice.

So we will say Python -m venv, venv.

Now, that would be fine, but I would like, see how this one is named global, not just venv.

We can say --prompt.

And when that creates this virtual environment, the name is going to be just venv.

But when we activate it, so this varies depending on your operating system also laid out in that readme right here.

On macOS and Linux, you say dot for apply to this shell.

Then you say venv/bin/activate, like so.

Notice how it's 00starterapp, so it tells me which one it's in.

If I was on Windows, I would do venv scripts/activate.bat, why, oh why, for all that is right, do these have to be named separately?

I don't know, but that's the way it is.

So that's one of the weird differences between Python on Windows and Mac, so we gotta do this.

Next, we need to get those requirements.

I use piptools, and piptools takes this file, which is just the top-level unspecified requirements, and compiles it, something like compiles it to, this more specific version.

So what we're gonna do is, you don't need to use piptools, you can just simply go here and pip install the requirements, but if you wanna mess with that and upgrade it, you're welcome to.

So I'll say pip install-r requirements.txt, virtual environment active, and as always, Python is always, always, almost always, except for one or two weeks a year, out of date with its pip, so I actually have a thing that normally creates virtual environments and automatically upgrades it, but it's not required, but go ahead and upgrade it, just so it doesn't complain to you.

Okay, and there we have it.

We've got our virtual environment created, we've got it activated, the things we need to run the app are here.

So we could come over here and just say Python main and run our app, but at this point, I kinda wanna put the terminal and the shell down and go over to a proper editor.

So this folder is what I want to be the base, so in PyCharm on macOS, macOS only, you can do this, drag and drop it, and it will open up this project.

On Windows, you have to say file open directory and browse to it, not a huge deal, but that's how it works.

All right, here we have it in our program, and notice, got our source files down here, this is where all the code that we're working with.

One more thing we have to do before we're able to run this is we need to set things like the assembly AI API key.

Should that stuff be committed to Git?

No, no, no, no, no, no, no, no, it should not.

So what I've done here is I've created this settings file that has a template default values for just local host server, but then here's where your API key goes.

So what we're gonna do is we'll talk later about the API key, but in order for the app to run, it just expects this file to be there, and later we'll go and enter our key in there.

If you already got it, go ahead and put it.

Don't put it into this template.

The goal is do what the action says, make a copy, save it to settings, and in here put, and this is no longer needed, and put my API key.

I'm gonna put my API key there, but you know what?

I'm not gonna share it with everyone because that one's mine, you put yours there.

So with all of that in place, we should be able to run this, although without MongoDB being here yet, it won't really work, so that's one more thing I'm gonna do before we actually go and run this code.

|

|

|

transcript

|

3:29 |

Now that we've got our virtual environment set, you can see, move out of the way here for a second, you see PyCharm down here says it's using this virtual environment, which is excellent.

The final thing to do is to set up MongoDB on our server.

And again, here are the steps.

So let's go back to our terminal here.

It doesn't matter where on your computer you do this.

So we'll say docker pull mongo, and that'll get the latest MongoDB.

It says, look, you've already got it because guess what, I've done this before.

It actually just updated a couple hours ago.

Then the next thing we're gonna do is say docker volume create.

I'm gonna say docker volume create MongoDB, and I'm not going to actually run this because I already have this volume, but run this, it'll say created a volume.

Again, the point of this is here's a persistent bit of storage.

So when Docker restarts or whatever, the container goes away, you upgrade it or something along those lines, this persists.

Otherwise the database files backing in Mongo will go away every time you restart your container.

Not great for a thing that's supposed to be a persistent data storage, right?

And then finally, I'm gonna go down here to the command so I can copy it, paste it in here.

We're gonna say docker run, don't block, and just run it in the background as long as I'm logged in until I do something in a mode that if I stop it, it cleans up after itself so that it listens on localhost to this port and forwards it inside the container.

So just forwards on all traffic using this new volume we just created, mapping that to data/db and give it a stable name so we can refer to it running this image that we just got.

So let's go and run that.

And look at that, it's up and running and now we can say docker ps, you can see it's sitting here running and its name is, if I zoom out, its name is a Mongo server.

It's just gonna always be named that, perfect.

So pretty much you don't ever have to do anything again unless you reboot your computer or for some reason stop Docker.

But if you don't do those things, just close this and you have a database server running.

All right, excellent.

So now our settings here, our MongoDB installed and it's incredibly lightweight and easy to get rid of.

Told you about Orbstack, we can go down here, you can see that it's running.

If you go to the volumes, you can see there's Mongo data.

I've actually loaded some other data into it for other apps that I have going and some other things just hanging around.

These are unused, we can clean them up if we wish, like so.

All right, so you can monitor what's going on with all these things, but you don't need to, just in case you're curious.

Finally, I want to run this code so I can right click on main and say run.

Excellent, see this stuff came from the database.

That's 'cause I ran it before.

You guys will see importing these various podcasts and then the next time you run it, you'll see this.

So we're talking to our local MongoDB and everything looks like it was great there and yeah, the app is running.

Let's just click this and see what we get.

Here it is, yes, the app is up and running.

I'll give you a tour around the app next.

|

|

|

transcript

|

2:09 |

So we got our app all running.

Everything's configured Mongo setup, virtual environment is set up, our settings are set up, run it one more time.

And let's just open this up.

So here's our app, you can see it has this little pretty simplistic landing page I built, I just wanted to have something for you.

And if we go over to let's say discover, it'll say here are podcasts that you can follow that you might be interested in.

So here's a really awesome one, talk Python underneath.

And there's darknet diaries.

And oh, look, there's also fresh air.

MKBHD is great.

And because we're using HTMX, we can do really cool stuff.

Like if I want to first go here and see followed, you can see I've got these four that I followed, I must have followed many by now I've logged in earlier and did this.

Now, if I click this watch right here, it goes follow just changes to following.

So it I have now that means I am following it.

And how cool is that?

So if I go back over here, you can see it's now following I can explore it.

See here all of its episodes, there's actually more we can just load those without a refresh right there because of HTMX.

Super cool.

Let's see what this vision pro thing is about.

It's Vision Pro Week.

Yay.

So we can play the episode.

There's going to be a lot of cool AI and assembly AI and audio work that I'm going to put right into that section.

And then, you know, just on down, here's the show notes, and so on.

Alright, so we can discover new ones, we can enter them in here if we want.

If you had a podcast URL or web page, put it in there.

If we can parse it and find a RSS feed in there, we'll include it in the library and save it that MongoDB that we just got running.

Yeah, so we can click around here.

And that's, that's what this app is about.

Okay.

Final thing, we'll be able to if you log out, you can log back in, come over and create an account.

I'll call this Michael to put in a password, we'll create an account and boom, we're back logged in.

But now we're not following anything.

It brought us back over here to discover.

That's pretty much it.

That's the app and how it works.

|

|

|

transcript

|

6:41 |

All right, let's get our bearings in this code and see how everything works, and then we'll start adding features.

Notice we have our virtual environment still active on the right.

I'll dive over there and cover it back up.

Get out of the way of the project view on the left.

We have a couple of important moving parts.

We saw that we're working with MongoDB based on Beanie and Pydantic.

We're working with FastAPI and Assembly AI SDK.

Most of the FastAPI apps start this way.

They have a main.py.

Close this up for a sec.

And it even includes the way to start it up here as well if you don't have the readme.

So there's a couple of things we do to configure our API app here.

Say configure routing.

We want to be able to serve web pages, not just APIs out of FastAPI, so we mount a static route for things like CSS and images and so on.

Then we have home views.

We have account views and podcast views.

This is a way to categorize and break up our code so it's not just one stupid big main.py or app.py with everything in it.

No, we're gonna have stuff to do with APIs and AI.

We'll have stuff to do with home pages and podcast views, all that stuff organized.

And these live over in the view section.

So for example, let's go to the podcast view down here.

If you just go to /podcasts, this is gonna give you a list of, I think the ones that are popular, and then /podcasts/followed, so this is Discover.

This is the ones that you follow.

And here's the host.

If you're on the Discover and you submit a new one, you can see it's coming in and it says, we're gonna try to find whatever URL you specified.

And we have this service that is quite intense that goes, discovers the HTML page, pulls out the RSS feed meta tag, then uses the meta tag to get the RSS feed XML itself, and then parses that with a whole bunch of variations 'cause there's standards that nobody can agree on, apparently.

So that's what we got going over there.

And then for example, here we have this HX.

I'm just using HX to tell me what it's about.

This is a HTMX request.

Remember when I click the little follow button, it turned into followed or following.

It ran this code behind the scenes kind of magically the way it did.

Okay, so we have these different ways of organizing our code and these, you know, like the home page, this is just slash index.

And then we're using a template over here in the templates folder.

And each categorization of the views, like home or podcast or whatever, gets their own section.

So over home, we have an index and podcast.

We have details, episodes, following, discover and so on, right?

Here's the HTML for that.

So our templates match very much close to our views.

And you also saw inside the views, like in the podcast one here, there's a complex data exchange that happens sometimes, especially when we're passing in data.

So instead of making this code super disgusting and just busy and full of details, I'm following what I'm calling a view model pattern.

And again, organized like the templates, view models, and then in the view models, we have podcasts.

So we go to the podcast and then the URL is followed.

So we have followed podcasts view model.

And in here, this thing is storing the data that's exchanged between the HTML and the Python.

Now, normally this would probably have all of it just happening in the constructor, but because Python cannot have asynchronous constructors in our data layer, as well as FastAPI forms that must be asynchronous, we had to break this into two sections, okay?

So it'll do things like go to the form that was posted and get the URL.

And if there's not a URL, it says, you can't follow a podcast, discover a new podcast that has no URL, stuff like that.

So view models organized like this, views, drive templates and drive view models, they're registered through routes in the main.

Then we have a couple other things, grab bag of stuff like cookie authentication and just convert to web URLs, store the secrets that we put in our settings file.

We have our data layer.

For example, here's what a podcast looks like in Beanie.

A Beanie document is just a pedantic document if you haven't seen that.

So it has an ID, a title, a created date and the last updated date that is defaults.

And then it has a bunch of details about it.

And then they also have episodes and an episode has things like an episode good, episode number, but they're not always there.

So it's optional and so on.

And then we just say, look, this is where it goes in the database.

This is our, it's indices and so on.

So really nice, clean way for us to work with that.

And then the last thing is the services.

And these are not services like APIs or HTTP services.

These are just parts of our code that provide services through the rest of it, I guess.

Like there's stuff grouped around AI and there's stuff grouped around search.

And users, so for example, the user service, it can create an account.

So instead of putting that code somewhere, we just say, what do you need?

You need a name, email, password, whether or not it's an admin.

And then, and it comes and it goes and creates one of these users that goes in our database.

And then it calls save, says it was created and then it gets back the value out of the database.

Technically, this should be good enough to just return, but there's like a really fresh new version of it exactly as the database sees it.

And it'll run queries like find me an account by email.

And here's what a database query looks like for MongoDB in Beanie.

User.find1, user.email equals email.

Of course, we normalize that to be lowercase and without spaces if they typed in extra spaces.

All right, so that's the services.

And we'll play with those later as well.

Well, that's it.

And I don't know how much more there really is to show you.

We'll get into some other pieces like how does a little HTMX work when we actually put it into place for probably transcripts is gonna be the first thing that we're gonna use HTMX for the further background work that we're gonna have to run.

But few things we didn't cover, but this is the code.

You can start it up and play with it or you'd rather just follow along.

We'll do that really soon.

We'll get on to adding our first feature.

|

|

|

|

1:12:54 |

|

|

transcript

|

2:09 |

It's time.

It's time to start building.

We're gonna work on our very first feature that we're gonna add to our application, transcripts.

That first feature is gonna be transcripts.

And you can see we have this create transcript button.

Now, when we do this at Assembly AI, it's not instantaneous.

For example, this podcast that we're showing here is 55 minutes long and we want high quality transcripts.

That's not click the button, boom, here are your transcripts.

That has to run for a while.

And it's amazingly fast.

I talked to the founder of Assembly AI and about how they're doing their infrastructure and all those things behind the scenes.

And they're using a crazy number of really high-end GPUs in Amazon, I believe AWS, and certainly in the cloud, to take this and process it for you.

For example, when we do this here, it probably takes 20, 30 seconds.

If I do that on my M2 Pro on my Mac, it takes like 15 minutes.

So it's still incredibly fast, but it's not instant.

So what we're gonna need to do is add a really cool background feature to say you've started your transcripts and we're watching.

We'll let you know as soon as your transcripts are ready, we'll pull that in for you.

So that's gonna be what happens when we click this button.

It's gonna kick off a job that's going to start the request to process the transcripts in the cloud at Assembly AI.

Then we're just gonna hang around until that's done.

And then we're going to, when they are done, we're gonna put that into the database so we never have to do it again, right?

Save it there.

After that, we're gonna show this really cool page.

And you can see here, it even has a little green highlight over the first section.

You can actually move around here, you're gonna be able to click on it, play exactly at that point by just looking at the text and clicking on it.

So that's what we're building in this chapter.

I think it's a cool feature, and it's really gonna get us using some of the neat aspects of this web app.

|

|

|

transcript

|

3:25 |

Now, before we get into the weeds and the details of writing all this code, I wanna give you just a quick HTMX primer.

Now, if you already know HTMX, feel free to just skip to the next video or this is short anyway, but I'm gonna just show you really quickly what this is and how it works.

It's an amazing technology.

It's an alternative to front-end frameworks like React and Vue.

And it says, instead of running everything in JavaScript on the front-end, what if we just made the front-end more dynamic by working with the server more closely?

So you write your interesting behaviors in Python and you just click that together on the front-end with HTMX.

Let me show you an example.

So down here we have, let's just do a click to edit, say.

So here is all of the client-side HTML that you need to know.

We've got a label, not a form.

Notice there's no form here.

There's just static data in a label with values joeblue@joeblue.com.

And then it has, instead of an href, it has an hxget.

And when you click this button, what it does is it uses the server at that URL to figure out what happens when I click that.

And what it does in this case is it's gonna say, return a form that we can edit.

Let's see this in action down here.

Here's our static stuff, right?

Try to double-click it, typing, no changes.

But if I hit edit, notice it's downloaded that form without a page refresh.

It just, in that little fragment, sets it.

I'll just put this as michaelk@mk@mk.com.

And if we hit submit, it's back.

Now it's back to the static version.

And you can actually see down below, we show this, it'll show you what's happened.

So first it shows this static form as we just saw.

And when I clicked edit, it took that data and on the server it said, you know what?

The edit version is gonna be a form that has a label, some text area, another label, another text area.

It has the values that were, there's the static data to start.

And it says, we're gonna do a put back to that URL.

And we're gonna have the target be this whole form and swap it if there's a change.

What do we do in the change?

You can't see it on the screen, but if you click that button, it'll do this thing.

It makes this change and it returns back the new updated static version.

So this is HTMX.

There's a ton of different examples down here.

Another one that's fun is active search.

We're gonna see that going in a little bit.

So down here, I can just type, we need Michael, the M.

I can see we've got Owen, so I could type O.

There's two Owens, keep typing.

Now it narrows down just to Owen.

We're gonna do that for our search as well using HTMX, super, super cool.

So there's really not much to learn at all about this.

We do have a course on it.

It is amazing if you take it to its extreme, but what we're gonna use in this course is a really, really small sliver of this to make our code super nice, allow us to add this dynamic behavior.

For example, when we kick off a transcript, we can start that, monitor the progress without just reloading the page all the time.

Really, really nice.

We won't need any JavaScript, which is also okay with me.

|

|

|

transcript

|

3:56 |

Now, we want to run our main file, of course.

This may just run in PyCharm.

Let's see if I hit run.

It turns out that I had to install a security update, so I have not yet restarted MongoDB.

You must have MongoDB running.

So let's do that again real quick.

Run this command.

It's up and going.

Perfect.

Looks like everything is back and running just fine.

One thing you may need to do is, it's going to help out a lot, is if you go over here and you right click and you say mark directory as, hiding down here, mark directory as, come on, sources root.

What that does is basically when you say something like from db import mongo setup, it's going to look here and say, oh, I see there's a folder called db and in there there's a mongo setup.

If you don't have that set, then it's going to be a problem.

Basically you want this to be the working directory, whatever editor you're using.

This is up and running.

That's great.

So it's up and running.

Go here, take this off.

We want to go just check out any old podcasts.

I'm logged in and I'm following Talk Python.

That one's going to be fine.

So let's go over to this parallel Python apps with sub-interpreters.

It says to do episode action buttons.

So what we want to do is we just want to put those buttons there.

They're not going to do anything at first.

We're going to add that functionality, but let's just add that little bit of UI here so that we can add those action buttons and then click it together with HTMX into the assembly AI API calls.

Okay.

So what we need to do is go to the details template in the podcast section.

Remember my organization over here.

So notice this is not colored.

You can sometimes do a little bit better if you mark this directory as a template folder.

And what we're using is the chameleon language.

I know a lot of people use Jinja.

We'll talk about chameleon and Jinja in a sec, but I'm using chameleon because I think it's much, much nicer.

All right.

And over here, we're going to go to episode because this is episode details, not podcast details.

And in here somewhere is a to-do and it says, all right, what we want is this episode actions.

We're going to put, replace that section with some plain old HTML.

Let's get some room here so we can see it.

So we're going to have a couple of things.

We're going to have a button, a rounded button.

It's going to be indigo.

And we have a hashtag transcribe that doesn't really apply or do anything, but having something in the URL means that you get a pointer instead of an arrow when you hover over it.

So it looks clickable.

It thinks it's clickable.

We'll wire that up to a JavaScript event in a second.

And in chameleon, the way you do if statements is through what's called this towel template attribute language where you have conditionals and loops and stuff.

So we're going to say, if there is no transcript that already exists, we want to show the button that says create transcript.

But if it's the case that there is a transcript, this will be hidden and we can just say view transcript.

So this is where the action is going to be.

And then later we're going to be able to interact with the transcript there.

And here's stuff about creating the summary and enabling AI chat or question and answer sort of thing.

We'll come back to that later.

So it's just these first two parts for now.

I just put it all together.

So we have the pieces there.

Let's see what we get if we refresh it.

Boom.

And sure enough, create transcript, create summary and create Q&A because the hashtags they have like little links looking things there.

Step one, done.

|

|

|

transcript

|

2:51 |

Now, we're going to need somewhere when we click this button for the API to call to or HTMX to call into to say they've clicked the button to create a transcript and moreover which episode.

So we'll use this episode variable and episode ID and those types of things to pass that over and say create a transcript for this episode.

Now we could stick it in one of these views but I like to have everything kind of broken up and you know isolated.

So I'm going to create a new file called AI views.

And in here, we're going to have two functions def start job.

Now you might say start transcript.

But in fact, there's different kinds of jobs there summarize, there's transcribe, there's chat, all these different things that we are going to be able to do.

And it turns out to be exactly the same.

The way it works is just what is the action we pass off to this background subsystem that is in here.

So we're going to just have a start job and def check job status.

Okay.

Now we want to make these something that we can call from FastAPI.

Now a lot of times you'll see at app dot.

How do you get app here?

Well, it's over here.

There it is.

But we're going to need to import this library.

We can't give it that.

So it's going to turn out to be a circular reference, which doesn't go well with Python.

So FastAPI has a cool way to do that.

PyCharm is suggesting we do something with flask.

Thanks.

But how about we do this router equals FastAPI, API router like that.

And then this can stand in for.

I do a get here and we'll put in slash URL.

And let's put a check status for this one.

Now this is not enough information, but let's just make sure that's working well.

We'll just return.

Hello world.

Just for a second.

And we want to make sure just that we're clicking everything together here in the app.

So what we're going to do is we're going to go down to this register or configure routes and we'll do another one of these.

And we'll say API or AI views.

Import that at the top dot router.

Now if we run it, we should be able to go over here and just say, what did I call it again?

Slash URL.

Hello world.

Okay.

So we got this new view plugged in and everything's ready to go.

these are not the URLs that we're going to need.

We'll put those in in a minute.

|

|

|

transcript

|

2:25 |

Well, /url looks fun, but kind of useless.

What are we gonna put?

Let me just paste this in 'cause there's kind of a lot going on here.

So we're gonna have /ai to represent our AI view here.

Start, start some action.

This is transcribe, summarize, whatever.

And so this is gonna be a string I'm gonna pass in here.

And we're gonna have which podcast ID is this?

Is this talk Python, Python Bytes, Fresh Air, whatever.

PyCharm is suggesting to auto-complete that perfectly, so I'll accept that.

And then episode number, and that's an int.

Now, one thing we can do is we can actually, if you look here, we can go to a symbol.

There's a job action.

There's the job action class over here.

Where is this living?

It lives in the database under jobs.

And this is an enumeration that we can use.

You can see it's a string enumeration.

Transcribe, summarize, and chat.

And that restricts what strings can be passed in here because FastAPI is awesome.

I can actually go over here and say this is a required job action and import that, not just a string.

Okay, so let's just print out the things that are coming in here now.

Here we go.

Try it again.

And I'm gonna need to copy this URL 'cause I won't remember it.

So we wanna go up here and say, well, what goes in here?

Let's put the action, we transcribe.

Podcast ID is gonna be Python-bytes episode ID.

Let's put 344.

Then transcribe Python bytes, 344, perfect.

And what if we put something else in here is like jump up, error.

Input for that section of the URL must be either transcribe, summarize, or chat.

You said jump up.

Jump up is not one of those.

See how cool it is we're using that string enumeration and how cool FastAPI is.

Okay, so yeah, that doesn't work, but this sort of thing right there, that's exactly what we're looking for.

So we've got this data being passed over.

And while we're at it, let's just do the other one as well.

On this check status, instead of that, we're just gonna say check status for job ID.

So what in practice this is gonna do is you're gonna say we started your job.

Here's the ID.

You can ask later whenever you want.

Just come back and ask using this endpoint to see if it's done.

And if it's done, we'll do one thing.

If it's not done, we'll do another.

|

|

|

transcript

|

5:43 |

Well, this little echo of this dictionary was just so that we could have something to verify that the data exchange is working and play with that for a bit.

Let's get more real about it.

So what we're gonna do is we're gonna bring in a view model.

Remember, I told you a lot of parts of this app are already built because I don't want you to have to juggle with like, how do we create a new asynchronous background task system?

So guess what?

That's done.

So I'm gonna create a view model and it's gonna be called a start job view model.

And we're gonna need to import that.

And let's just go look at it real quick.

So it takes, well, what you might expect, podcast ID, episode ID, and an action, which is one of these enumerations.

It also takes the FastAPI request, or more specifically the starlet request, because the stuff underneath, there's a view model base down here where it does things like hold the request for you and handle a couple other things.

Like for example, check if somebody's logged in, which requires to check the cookies of that new incoming request, that kind of stuff.

So don't worry too much about it, but we got to pass that along as well.

And then we're just gonna store different things about this.

All right, we're gonna say, look, if there's an, I'm gonna switch on the action, say for transcribing, and then we're gonna set some values.

Otherwise we'll set summarizing and so on.

And then finally, there's no asynchronous stuff we're doing here.

So this is just about the data exchange.

We know forms to parse asynchronously like you do in FastAPI.

So this will be a pretty simple one.

And what we need to do is just pass it the information that it needs.

And we're also gonna need to pass in the requests right here and let's import that.

As I said, from starlet.requests.request.

Okay, so then we'll pass requests.

So then I get that order, right?

Let's see requests.

No, it did not.

Good guess, PyCharm, guess better.

Okay, episode and action.

So this view model is gonna store that data.

You might wonder, well, we already have it kind of parsed here.

What are we doing?

There's HTML on the other side that expects all those different variables, those self variables from that class to work with.

And that's kind of the role of the view model.

So that's part of its job.

And the next thing we wanna do, speaking of jobs, is we wanna go to this thing called a background service over in services.

And say, create a background job.

We'll look at this in just a minute.

So what we're gonna do is pass in one of these job actions, which is the action, the podcast ID, and the episode number, which is episode ID.

I kind of would prefer to rename that thing to episode number and we're gonna have to sync that up there as well.

Excellent.

So then in order for this to actually run, we'd look at this, we'll say, yes, async def.

So in order for this to do anything interesting, we have to await it.

And that makes this an async function.

It requires it to be an async function.

And we can just print started job, job.ID.

And then we'll return nothing.

And let's go ahead and return the job ID as well, just so it's in this data.

(silence) Let's run it, see what happens.

All right, here we go.

It turns out for some weird reason, and for unable to get that to show up, I had to call str on it, because that's technically a object ID out of the database.

And its default string representation was weird, but there we go.

So we've started a new job, it's called this.

And if you look over here, you can see started job, such and such.

This theoretically is off to do something.

It's not quite yet.

That's going to be one of the core parts where we plug in our assembly AI magic, but we're off to a good start.

All these pieces and data are passing along.

One final thing here we could do is this is going to have a job ID.

And as you can imagine, this is going to be a, we'll just let it be a string over there.

And we may need a request as well.

So let's go ahead and take that.

And we can go to our background service and ask, is job finished?

Passing in this job ID, we'll say await.

Now, what does this want?

Does it want a object ID?

So we can just say bson object ID.

That'll parse this across like that.

And for us to call await, we have to say a single say print the job, whatever it is, is finished.

I have to need to start a new one here and then say check status.

And it printed out.

Is it finished?

No, it's still running.

We didn't return true or false, but it returned anything, so it just came up nothing.

But here we go.

The job is not yet finished.

And because it's not really running yet, that's not a surprise.

But there we go.

We've got our two important moving parts here.

We're going to have to plug in a little bit of more UI, but let's go ahead and work on making this, make the jobs actually run over in the system and do their thing there.

Then we'll put the UI in place, okay?

|

|

|

transcript

|

7:42 |

In this video, let's work on making this background job system work and I'll just introduce it real quick.

So what we're doing is we're creating one of these things I'm calling a background job and storing it in the database.

So it has a time it was created, started and finished.

You know, neither of these are set by default.

Definitely not the finished.

They start out awaiting to be processed and they are not finished, but they do require an action and episode number and a podcast ID.

Guess what, that's what we're passing along, right?

This is being used over by this background service.

You saw it's called this function.

And what it's going to do is just literally create one of these and then save it and then give it back to you.

This save generates the sets the database generated ID and that's the ID that we were just playing with.

And then somewhere down here, we can ask, is the job finished?

So it just goes and gets the job by ID, which is literally find one ID equals the ID you asked for.

And either it's false if it doesn't exist, or it checks to see if it's been set to be finished.

Now that all gets kind of put in the database and chills.

And then this function, the worker function is the thing that goes around forever and ever.

Now, first of all, notice there's something very uncommon here says the background services starting up and it's based on async and await and just the default, not the default, the FastAPI's core asyncio event loop.

And what it does is it says while true do a bunch of work, you'd think that would block up the processing.

But no, no, it's really cool.

So what happens is, when you go to await something that frees it up to just keep on doing, you go pro doing other processing.

So it'll say, get me one of these jobs, if there is nothing, just go to sleep for a second, and then go through the loop again, which means get asked good for a job again in one second.

But if there is one, get us the job and start processing it.

There's an error.

Well, that's too bad.

Get the next job.

Sorry, job failed.

And finally, it's going to come down here and says, all right, well, what was I supposed to do with this job here?

Was I supposed to summarize or transcribe?

And then it goes to the AI service and says, transcribe episode.

This is where we're going to write our assembly AI code using their API.

But this is how it happens kind of automatically.

And then once it gets through whichever step it takes there, it marks it as complete and successful in the database.

So that when we're over here, we say, give me this thing.

Is it finished?

When we did that successfully, it'll say, yeah, it's finished.

Here's your information.

You know, whatever has to happen when that transcript gets generated and so on.

So this is how it works.

Now, where does this run?

If you go look in the main, you're going to see nothing to do at all with background services.

We got secrets, routing, templating.

That's the chameleon connections there.

We'll get to that very, very soon.

And the caching, this is just if there's a change to a static file, we don't have to actually make any changes or hard reloads.

There's basically no cache, no stale stuff in our cache as we develop it or even as we run in production.

But there's nothing here.

So where is this happening?

Well, FastAPI has a really interesting restriction, but also way where requires anything that uses asyncio and async and await.

It needs to share that core asyncio thread, but FastAPI manages it or the server running FastAPI manages it.

And it doesn't exist until you get down to here or you pass it back or that something grabs it and runs it like UVA corn or granny and those kinds of things, hyper corn.

But it's not available to us yet.

And this is where it gets funny.

So over here in infrastructure, we have app setup.

So there's this thing called an app lifespan that we can plug into FastAPI and FastAPI will say, look, I know you got to do some async stuff, like for example, initialize MongoDB and it's async loop needs to run on this core FastAPI asyncio loop.

This is going to be called when everything starts up.

Right?

So FastAPI is going to call this app lifespan for us when everything starts up.

How does it know one thing back over here?

You go over here where we're creating the FastAPI stuff, I'll wrap around so you can read a little better is right here.

It says when we create the app, we say don't have any documentation in your website.

Are you in debug mode?

Right?

Or development mode?

Right now we say true.

We could switch that to false later.

And then here's the code that you run at startup.

So it runs all of this stuff.

And then it yields to run the entire application.

When the app shuts down, there's like a shutdown cleanup if you want.

There's nothing for us to do.

But the core thing is register the background jobs here.

So what we can do is we can say asyncio create a task.

And what we're going to do is we're gonna give it the background service, that worker function and call it that'll kick it off there.

Now there's a warning, it looks like I did something wrong.

Perhaps I have but this is saying look, this is not awaited.

I really, really think async and await in Python are awesome.

But there are some big time mistakes in the design here.

And one of the big times mistakes is there's no way to just say start this work.

I don't care about the answer.

I don't care about it.

That's why you can't just call this function directly.

And then it runs.

It's going to do this create task.

Then also it expects you to wait on it.

I don't want to wait, I just want to put a bunch of stuff out into the ether and let it run as long as the app is alive.

So I'm have to use this like this is not an error.

I mean to not wait on this, right?

We'll come back for search when we get there as well.

And search is going to be another thing that just runs in the background periodically, it'll wake up index some things and go back to sleep.

Here we go.

This will have the background job actually kicked off and running.

It's going to run this worker function.

So this is how we get this asyncio function running continuously in the background doing a while true on the right loop in FastAPI.

The next thing we got to do is to actually transcribe the episode at assembly AI.

Fun.

Right now if we run it, it's not going to take very long.

I guess we could go ahead and just complete this loop here.

Go over here and hit go.

And we get this new job and we can check if it's done.

I imagine it probably is done.

Yeah, look at that.

So we started a new job and it's finished because well, when it ran this, it didn't have to wait anything.

So it marked this finish in the database.

Excellent.

It looks like everything's running and maybe one more thing we can check up here is that right there.

The background asyncio service worker is up and running, just cruising along.

You see it's getting these new jobs and then the finished.

Yes, it is finished.

|

|

|

transcript

|

3:01 |

All right, now we're getting to the heart of the app.

We've got this cool web stuff, but the goal is to work with audio using AI and Assembly AI, right?

And here we are at their website.

I'm sure you've checked them out already, but assemblyai.com.

Turn your voice into chapters, insight, summaries, transcripts.

Here we go with their leading speech AI models, right?

They're focused and they have been for years just on doing cool stuff with audio and speech.

So what you're going to need to do is you're going to need to create an account over here to get started.

And then once you have your account, go over to your slash app slash account here, click on account to get there.

And you're gonna need this right here, your API key.

This is not actually my API key.

So don't feel like you've got a big secret, but notice if you hover over it, you can say click to copy and it'll give you your real API key.

When I take that API key, go over to our settings and put it in there.

Remember in the template, I'm not showing you my real settings, but it just goes right there, right?

One, two, three, four, five, whatever that was, it goes right into there, but not in the template, but in the real settings, right?

I'm just not showing you mine 'cause it has my API key, which I don't wanna share with the world 'cause I don't wanna pay for everyone's transcriptions.

So make sure you go over and put that in there.

And then once you do, you'll notice there's a right up at the top here, it says init secrets.

We go to it, it just goes to assembly AI settings, sets the API key.

Where does assembly AI come from?

Well, it is installed and it should already be installed.

And it's gonna be installed as one of the dependencies.

So this comes from PyPI.

And this is the official API for working with, official Python package for working with assembly AI's API.

It's their Python SDK, as they call it.

And you can see they got a bunch of cool examples, how you work with it, real time stuff.

This actually, this landing page here on PyPI is actually a really good resource for what they're doing.

And we're gonna go through and use it and I'll show you how to do a lot of different things with it already, but there's more things to do if you'd like to explore more.

So in summary, assemblyai.com, create an account, go to the account, get your API key, copy that over, put it into the, we shall never look at it settings.json in the API key section and the app will take care of setting everything up for us from there.

We've already pip installed assembly AI and wired that together as I showed you right here in main, like that's assemblyai.settings.api key.

Then for the rest of the app, it's good to go.

|

|

|

transcript

|

3:03 |

We've got all the supporting pieces in place.

It's time to start using Assembly AI and actually transcribe this episode here.

So we're gonna need to do a couple things real quick.

First, we're going to need to get a hold of the actual episode details because the way it works is we're gonna have an MP3 file equals something like that.

Then we're gonna pass that off to Assembly AI.

We're not gonna download the audio or provide the audio in any way directly to Assembly AI, although we could.

We're just gonna say, see that out there on the internet, go grab that and transcribe it.

It can be things like MP3 files or I think even YouTube videos.

There's all sorts of stuff that we can put out there and say, go find that on the internet, get it for yourself and transcribe it.

So in order to make that happen, the first thing we need to do is say the podcast is equal to, and we have this podcast service, podcast from ID and what are we passed in?

A podcast ID.

So perfect, we'll say if not podcasts or podcasts is none, depending on how you wanna look at it, we'll say, right, it's not that we could do anymore.

This is done, so we're out.

We also need the episode.

And we need the episode by number.

We'll have the podcast ID and the episode number that we're gonna get.

Now, these also are themselves asynchronous, so don't forget to await them because what you'll get back otherwise is a coroutine, which is never none, but also doesn't work.

It doesn't do anything.

So there we go.

So we have our episode.

I'll have basically the same setup here.

Something like that.

All right, so now we know we have our episode and the most important thing here is gonna be going to the episode and saying called the enclosure, enclosure URL.

And that's just RSS speak.

And let's just do a little printout.

Would transcribe.

There we go.

So let's just go and hit that and try to make one of these jobs again.

And we'll just say, let's consider doing episode 344 of Python bytes.

Gives us a job back.

And what do we see?

Would have transcribed.

Oh, look at that.

Python bytes, episode 344, AMA ask us anything from that URL.

Let's see if it works.

Sure enough, there it is.

Perfect.

Look at that.

So we're getting all the data that we need here.

We haven't yet sent this off to assembly AI, but we've got everything we need.

The next thing to do is gonna be to use assembly AI and import that and start calling functions like with the transcriber.

|

|

|

transcript

|

8:05 |

Transcribe in time, let's do it.

So, Assembly AI, we have to make sure that that's imported.

We wanna create a transcriber.

So we're gonna do things with this thing.

We'll say transcriber equals that.

And we're also gonna need to pass a configuration file.

We could just call transcribe, but you'll see there's an incredible number of options and features available.

So we'll have to come up with a config equals assemblyai.

transcriber config like this.

Now let's see what we can pass to it.

If we jump over here, yeah, there's a couple of things.

So we could pass in the language, whether or not to do punctuation.

And we're gonna wanna say true for that one.

So let's pass in some things that we'll want.

That one is true.

Format text, dual channel.

What else have we got here?

That's a lot of help text it was trying to show us.

We could do a subset.

If we knew here's a huge long thing, but I just want this little part of it.

Also, if you have certain words in, doesn't necessarily make sense in a completely general app like this, but let's suppose you're in like the medical field or in technology, programming, Python, you can say these are words that often get confused and put it for something else like PyPI might be spelled like the food instead of P-Y-P-I.

You don't want that.

So you can use word boost to say these words are really important in this particular area.

All right, and you can say filter out profanity, redact personally identifiable information, which is excellent.

You can put speaker labels on there.

None of these we're gonna put on ours yet.

Oh, I really need that to not be in the way.

Disfluencies, however, if you like, when you don't do that, you don't want those kinds of things in your transcripts.

So you wanna, if somebody says, I went to the store, it might be nice to just say, I went to the store, right?

You don't need all that babbling stuff in there.

So we'll say disfluencies, false.

We don't want them transcribed.

We want them omitted.

You can do sentiment analysis, auto chapters, entity detection, summarization.

We're gonna actually use lemur and the LLMs for this later.

So that's great.

That might be all we're gonna add.

Now that I think about it, let's actually put a format text is true and just so it doesn't do it, 'cause arbitrary set of speakers, I'm not sure how well that's gonna work for us in this situation.

So we'll say false.

So now come over here and we'll say transcript, almost future equals transcriber.

So we could say transcribe, and this is a blocking call, but we have such a nice setup here with async and await.