
Hello and welcome to Asynchronous Techniques and Examples in Python.

In this course, we're going to explore the entire spectrum of asynchronous programming in Python.

This means threads, this means subprocesses, the fancy new asyncio, and the async and await keywords.

All of that and much, much more.

I hope you're excited about digging into it because I'm super excited to share it with you.

Now before we get into the details of what we're going to cover, let's talk really briefly about what asynchronous programming is.

If we go and look at Wikipedia, it'll say asynchrony, in computer programming, refers to the occurrence of events independent of the main program flow and ways of dealing with such events.

These may be outside events such as the arrival of signals or actions started by the program without blocking or waiting for results.

Now what's really important about this statement is that it doesn't say asynchronous programming means you create threads and then join on them, or you create other processes and wait for them to finish or get back to you.

It just says stuff happening at the same time.

So we're going to look at many different ways. In fact, we're going to explore three very different approaches that Python can take to this: threads, processes, and asyncio.

We're going to explore more than that of course.

But there are actually a lot of ways in which asynchrony can be achieved in Python, and knowing when to choose one over the other is super important.

We're going to talk all about that.


Here's a graph we're going to come back to and analyze at great depth later in the course.

It came from a presentation by Jeffrey Funk.

You can see the SlideShare link there if you want to go check it out.

It's actually 172 slides.

I want to just call your attention to this graph around 2005.

If you look at, most importantly, the dark blue line, that is single-threaded performance over time.

Note, it's going up and up and up following Moore's law, that's the top line, and then it flattens out and actually trends downward.

What is going on here?

Well, look at the black line.

We have the number of cores going from one up to many right around 2005 and continuing on today.

To take full advantage of modern hardware you have to target more than one CPU core.

The only way to target more than one CPU core is to do stuff in parallel.

If we write a regular while loop or some sort of computational thing in regular Python, that is serial: it's only going to run on one core, and that means it's following that blue downward line.

But if we can follow the number of cores growing, well, we can multiply that performance massively, as we'll see.

One of the reasons you care about asynchronous programming is if you have anything computational to do that depends on getting done fast. Not like, I'm calling a database or I'm calling a web service and I'm waiting.

That's a different type of situation we'll address.

But no, I have this math problem or this data analysis problem and I want to do it as fast as possible on modern hardware.

You're going to see some of the techniques that we talk about in this course allow us to target the new modern hardware with as much concurrency as needed to take full advantage of all the cores of that hardware.

So like I said, we're going to dig way more into this later. I just want to set the stage: if you have anything computational and you want to take full advantage of modern hardware, you need asynchronous programming.


Let's talk about the actual topics that we're going to cover chapter by chapter and how it all fits together.

We're going to start by digging further into why we care about async, when we should use it, and what its benefits are.

So we're going to go way into it so you understand all the ways in which we might use asynchronous programming in Python, and when you might want to do that.

Then it's time to start writing some code and making things concrete.

We're going to focus first on the new keywords introduced in Python 3.5: async and await.

Now some courses leave this to the end as the great build-up, but I think you start here.

This is the new, powerful way to do concurrency for anything that is waiting.

Are you calling a database?

Are you talking to a web service?

Are you talking to the file system, things like that?

We do these kinds of things all the time in Python, and it's really not productive to just block our program while it's happening.

We could be doing many other things.

And the async and await keywords and the asyncio foundation make this super straightforward.

It's almost exactly the same programming model as the serial version but it's way more scalable and productive.
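To make the "almost the same programming model" point concrete, here is a minimal sketch. The function names and the short simulated delay are made up for illustration, and `asyncio.run` assumes Python 3.7 or later.

```python
import asyncio
import time


def fetch_report_sync(report_id):
    # Serial version: the whole program blocks while we "wait" on I/O.
    time.sleep(0.05)  # stand-in for a database or web service call
    return "report-{}".format(report_id)


async def fetch_report_async(report_id):
    # Async version: same shape, but await hands control back to the
    # event loop so other tasks can run while this one waits.
    await asyncio.sleep(0.05)  # stand-in for a non-blocking I/O call
    return "report-{}".format(report_id)


async def fetch_all(report_ids):
    # Start all the waits together; results come back in input order.
    return await asyncio.gather(*(fetch_report_async(r) for r in report_ids))


serial_results = [fetch_report_sync(r) for r in (1, 2, 3)]
async_results = asyncio.run(fetch_all((1, 2, 3)))
print(serial_results)
print(async_results)
```

The two functions differ only by `async def` and `await`, yet the async version lets the three waits overlap instead of running back to back.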

Next we're going to focus on threads, sticking to making a single process more concurrent, doing more at once.

We're going to talk about a more traditional way of writing asynchronous code in Python with threads.

We'll see sometimes this is super productive.

Other times it's not as productive as you might hope, especially when things like the GIL rear their head.

And we'll see when and how to deal with that.

Some things are well addressed with threads, others not so much.

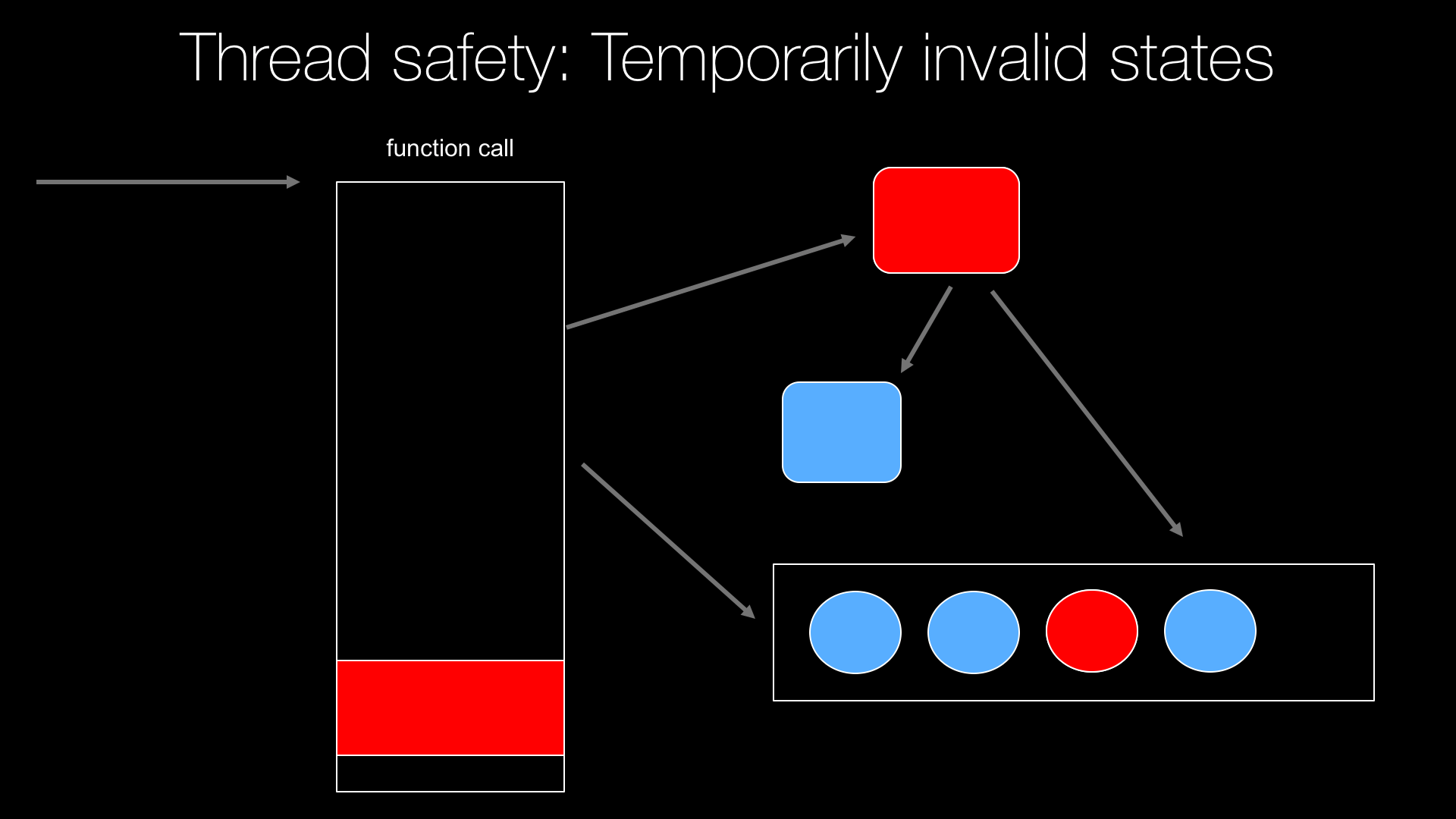

When we start writing multithreaded code, or asynchronous code in general, we have to think very carefully about the data structures that we use and make sure that we don't introduce race conditions or deadlocks.

So in this chapter we're going to talk about both of those.

How do we prevent race conditions that might allow code to see invalid data or corrupt our data structures?

And how do we prevent deadlocks from completely freezing up our program by the improper use of the tools trying to prevent the first?

So we're going to talk a lot about thread safety and make sure you get that just right.
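As a rough illustration of the race-condition side of this, here is a small sketch that guards a shared counter with a lock from the standard library's `threading` module. The `Counter` class and `hammer` function are hypothetical names invented for this example.

```python
import threading


class Counter:
    """A shared counter whose updates are protected by a lock."""

    def __init__(self):
        self.value = 0
        self._lock = threading.Lock()

    def increment(self):
        # Only one thread at a time may run the read-modify-write step.
        with self._lock:
            self.value += 1


def hammer(counter, times):
    for _ in range(times):
        counter.increment()


counter = Counter()
threads = [
    threading.Thread(target=hammer, args=(counter, 10000)) for _ in range(4)
]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(counter.value)
```

Without the lock, two threads could read the same old value and one update could be lost. The deadlock risk shows up once code holds more than one lock at a time, which is why taking locks in a consistent order matters.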

Now Python has two traditional types of parallelism: threaded parallelism and process-based parallelism.

And the primary reason we have this is because of the GIL.

We'll see that thread-based parallelism is great when you're waiting on things like databases or web calls, things like that.

But it's basically useless for computational work.

So if you want to do something computational, we're going to have to employ process-based parallelism.

We're going to talk about Python's native multiprocessing, process-based parallelism, with tools all around it meant to take a bunch of work and spread it across processes.

You'll see that the API for working with threads and the API for working with processes are not the same.

But execution pools are a way to unify these things so that our actual algorithms, our actual code, depend as little as possible on the APIs for either threads or processes, meaning we can switch between threads and processes depending on what we're doing.

So we want to talk about execution pools and how to unify those two APIs.
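One way to picture that unification is with the executor classes in the standard library's `concurrent.futures` module. This is a sketch under the assumption that these are representative of the pools discussed here; `square` and `run_all` are hypothetical helpers.

```python
from concurrent.futures import ProcessPoolExecutor, ThreadPoolExecutor


def square(n):
    # Stand-in for real work; defined at module level so that a
    # process pool can pickle it and send it to worker processes.
    return n * n


def run_all(executor_class, numbers, max_workers=2):
    # The algorithm only depends on the shared executor interface,
    # so the caller decides whether threads or processes do the work.
    with executor_class(max_workers=max_workers) as pool:
        return list(pool.map(square, numbers))


if __name__ == "__main__":
    print(run_all(ThreadPoolExecutor, [1, 2, 3]))
    print(run_all(ProcessPoolExecutor, [1, 2, 3]))
```

Switching from threads to processes is a one-word change at the call site, which is the kind of independence from either API described above.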

Then we're going to see two really interesting libraries that take async and await and asyncio and make it better, make it easier to fall into the pit of success.

You just do the right thing, and it just happens.

The way it guides you, things work better.

So things like cancellation, parent/child tasks, or any mixed mode of, say, some I/O-bound work and some CPU-bound work.

That can be really tricky, and we'll see some libraries that make it absolutely straightforward and obvious.

One of the great places we would like to apply asyncio is on the web.

That's a place where we're waiting on databases and other web services all the time.

We'll see that, as of this recording, the traditional, popular frameworks like Django, Flask, and Pyramid do not support any form of asynchrony on the web.

So we'll take something that is a Flask-like API and adapt it to use asyncio and it's going to be really, really great.

We'll see massive performance improvements around our web app there.

Finally, we'll see that we can integrate C with Python and, as you know, C can do just about anything.

Your operating system is written in C.

It can do whatever it wants.

So we'll see that C is actually a gateway to a different aspect, a different type of parallelism and performance in Python.

But we don't want to write C.

Maybe you do, but most people don't want to write C if they're already writing Python.

So we'll see that we can use something called Cython to bridge the gap between C and Python, and Cython has special keywords to unlock C's parallelism in the Python interpreter.

It's going to be great.

So this is what we're covering during this course and I think it covers the gamut of what Python has to offer for asynchronous programming.

There's so much here; I can't wait to get started sharing it with you.


Let's take a moment and just talk really quickly about the prerequisites for this course.

What do we assume you know?

Well, we basically assume that you know Python.

This is kind of an intermediate to advanced course, I would say; it's definitely not a beginner course.

We run through all sorts of Python constructs, classes, functions, keyword arguments, things like that, without really explaining them at all.

So if you don't know the Python language you're going to find this course a little bit tough.

I recommend you take my Python Jumpstart by Building 10 Apps, and then come back and pick this course up.

You don't have to be an absolute expert in Python, but like I said, if you're brand new to the language, take a foundational course first and then come back and dig into the asynchronous programming after that.


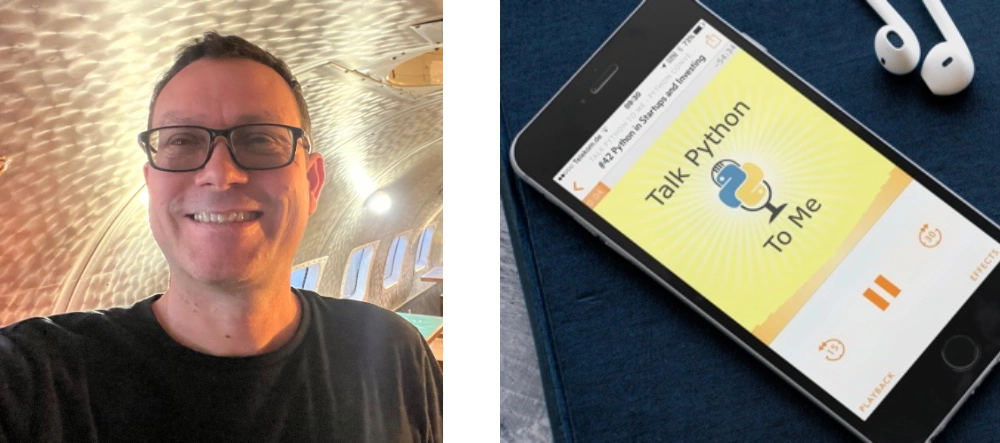

Finally in this chapter, I just want to say hello and thank you for taking my course.

My name is Michael Kennedy; find me on Twitter @mkennedy.

You may know me from the Talk Python to Me podcast or the Python Bytes podcast.

I am the host or co-host on both of those, and each of them covers different aspects of asynchronous programming. For example, on the Talk Python to Me podcast I've had Nathaniel on to talk about Trio, which is one of the libraries we're going to cover, and Philip Jones to talk about Quart, another thing we're going to use in this course.

You can listen to those interviews.

And on Python Bytes, we highlight all sorts of libraries that are really useful for asynchronous programming.

So make sure to check those out if you're interested. I'm also the founder of Talk Python Training.

Welcome to my course, it's great to meet you and I'm looking forward to helping you demystify asynchronous programming.


In this short chapter, we're going to talk about setting up your computer so that you can follow along.

Do you have the right version of Python?

What editors are you going to use?

Can you get the source code to get started on some of the examples?

Things like that.

Obviously this is a Python course so you're going to need Python.

But, in particular, you need Python 3.5 or higher.

Now I would recommend the latest, Python 3.7.

Maybe you're on a Linux machine, and it auto-updates as part of the OS, and that's probably 3.6.

But you absolutely must have Python 3.5, because that's when some of the primary async language features were introduced, namely the async and await keywords.

Do you have Python, and if you do, what version?

Well, the way you answer that question varies by OS.

If you are on Mac or you're on Linux, you just type 'python3 -V' and it will show you the Python version.

In this screenshot, I had Python 3.6.5, but in fact 3.7 is out now, so you might as well go ahead and use that one.

But if you type this and you get an answer like 3.5 or above, you're good.

If it errors out, you probably don't have Python.

I'll talk about how to get it in a sec.

On Windows, it's a little less straightforward.

You can type 'where python', and the reason you want to do that is there's not a way to target Python 3 in particular; it just happens to be the first python.exe that lands in your path.

So by typing 'where' you can see all the places, and down here you can see I typed 'python -V', again the same command but without the '3', and I got Python 3.6.5, the Anaconda version.

That would be fine for this course; Anaconda's distribution should be totally fine.

But, again, has to be 3.5 or above.
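If you would rather check from inside Python than from the shell, a small sketch along these lines works on any OS. The `is_supported` helper is a hypothetical name; the 3.5 minimum comes from the requirement stated above.

```python
import sys

MINIMUM = (3, 5)  # async and await arrived in Python 3.5


def is_supported(version_info=sys.version_info, minimum=MINIMUM):
    # Compare only (major, minor); the patch level does not matter here.
    return tuple(version_info[:2]) >= minimum


print("Python {}.{}.{}".format(*sys.version_info[:3]))
if is_supported():
    print("OK for this course")
else:
    print("Too old: you need Python 3.5 or above")
```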


If you don't have Python, or you have an older version of Python, or you would just like to get a newer version of Python, yes, you can go to python.org and download it, but I recommend that you check out Real Python's article on installing, managing, and upgrading Python.

So just visit realpython.com/installing-python and check it out.

The guys over there are putting lots of effort into keeping this up to date.

For example, one of the ways you might want to install it on macOS would be to use Homebrew, and that's different from what you get off of python.org.

It lets you upgrade it a little more easily.

That's the way I'm doing it these days and really liking it so far.

So this article will help you find the best way to install it on your OS.


In this course, like most of our courses, I'm going to be using PyCharm.

Now you don't have to use exactly the same editor.

You can use Visual Studio Code with a Python plug-in.

You can use Emacs.

You can use Vim.

Whatever makes you happy.

But if you want to follow along exactly, then I recommend you use PyCharm.

Now almost all of this course can be done in the Community Edition, the free edition of PyCharm.

There is one chapter on web development.

Maybe it's a little bit easier with PyCharm Pro, but because we're not really working with CSS, JavaScript, the templates, anything like that, I think you could actually use PyCharm Community Edition for the entire course.

So if you want to use that, just visit jetbrains.com/pycharm.

Get it installed and I'll show you how to use it along the way.

If you want to use something else, like I said, no problem, use what makes you happy.

Just be aware this is the one we're using, so you'll have to adapt your editor and way of running code to what we have over here.


You might consider what hardware you're taking this course on.

For most of the courses we have, it doesn't matter what you're running on.

You could probably take many of the courses we have on here on, say, a Raspberry Pi or something completely small and silly like that.

However, on this one you really need to have at least two cores.

If you don't have two cores, you're not going to be able to observe some of the performance benefits that we talk about.

So here's my machine. I have a MacBook Pro 15-inch, 2018 edition, with the Core i9, which you can see has 12 cores here.

Six real cores, each of them hyper-threaded.

So it appears as 12 to the operating system.

But this system will really let us see a difference between running in single-threaded mode and running in a parallel mode that actually takes advantage of all of the processors.

But you don't have to have 12 cores.

If you have two or four, that will already let you see a difference, but the more the better for this course. When we get to the performance section in particular, the CPU-bound type of stuff where we're trying to take advantage of the CPU, you'll just see a bigger benefit the more cores you have.

So more than dual core is not required, but to see some of the benefits you have to have more than one core.
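To see what your own machine reports, here is a minimal sketch using `os.cpu_count()` from the standard library. Note that it counts logical cores, so a six-core hyper-threaded CPU shows up as 12. The `describe_cores` helper and its messages are made up for this example.

```python
import os


def describe_cores(cores):
    # os.cpu_count() can return None when the count cannot be determined.
    if cores is None:
        return "Could not determine the core count on this system."
    if cores < 2:
        return "Only 1 core: the parallel speedups will not be visible."
    return "{} logical cores: good to go.".format(cores)


print(describe_cores(os.cpu_count()))
```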


Every single thing you see me type in this course will be available to you on GitHub.

Before you go farther, pause the video and go over to github.com/talkpython/async-techniques-python-course. You can also just click the link to this GitHub repo in your course details page.

You want to make sure you get access to this and go over there and star and consider forking it as well.

That way you have a copy of exactly what we're working with during this course.

I encourage you to play around and write some code and try out the ideas from each chapter as we get to them.

Most of the chapters have a starting and final version of code.

So if you wanted to take, say, a serial, single-threaded computational little app that we build and convert it to threads, and convert it to multiprocessing, and things like that, you'll have a copy over here on GitHub.

So just be sure to star this, and consider forking it.

Definitely check it out so you have access to it.

If you don't do Git, that's fine. Just click where it says clone or download, and download a zip, and then you'll have the source as well.


Let's begin our exploration of async by taking a really high-level view. We're going to look at the overall async landscape, some of the particulars about working with async and concurrent programming in Python, and the two main reasons that you care about asynchronous programming.

In this first video we're going to focus on async for speed, or performance. The other main reason you might care about asynchronous programming or concurrent code would be for scalability, doing more at once.

Right now we're going to focus on doing things faster for an individual series of computations.

Later we're going to talk about scalability, say, for web apps and things like that.

Let's look at some really interesting trends that have happened across CPUs in the last 10 or 15 years or so.

So here's a really great presentation by Jeffrey Funk over on SlideShare, and I put the URL at the bottom. You can look through the whole thing; you can see there are 172 slides. But here I am pulling up one graphic that he highlights, because it's really, really interesting.

See that very top line, that red line, that says transistors in the thousands, that is Moore's Law.

Moore's Law said the number of transistors in a CPU will double every 18 months (the figure is more commonly quoted as about every two years), and that is surprisingly still accurate. Look at that: from 1975 to 2015, extrapolating a little bit, it's still basically doubling just as they said.

However, people have often, at least in the early days, thought of Moore's Law more as a performance thing. As the transistors doubled, here you can see the green line, "clock speed," and the blue line, "single-threaded performance," very much follow along with Moore's Law.

So we've thought of Moore's Law as meaning computers get twice as fast every 18 months, and that was true, more or less, for a while. But notice that around 2005 it starts to slow, and around 2008 it flattens off and maybe even goes down for some of these CPUs. The reason is we're getting smaller and smaller circuits on chips, down to the point where you basically can't make them any smaller and you can't get them much closer, both for thermal reasons and for pure interference reasons.

You can notice around 2005 onward, CPUs are not getting faster, not really at all.

I mean, think back quite a bit: the CPU I have now is a really high-end one, and it's a little bit faster, but nothing like what Moore's Law would have predicted.

So what is the take away?

What is the important thing about this graphic?

Why is Moore's Law still effective?

Why are computers still getting faster when the clock speed, really the single-threaded performance, is not getting faster and, if anything, might be slowing down a little?

Well, that brings us to the interesting black line at the bottom. For so long this was one core, and then we started getting dual-core systems and more and more cores. So instead of making the individual CPU core faster and faster by adding more transistors, what we're doing is just adding more cores.

If we want to continue to follow Moore's Law, if we want to continue to take full advantage of the processors that are being created these days, we have to write multi-threaded code.

If we write single-threaded code, you can see it's either flat, stagnant, or maybe even going down over time.

So we don't want our code to get slower. We want our code to keep up and take full advantage of the CPU it's running on, and that requires us to write multi-threaded code.

Turns out Python has some extra challenges, but in this course we will learn how to absolutely take full advantage of the multi-core systems that you're probably running on right now.


Let's look at a real-world example of a Python program that is doing a very poor job of taking advantage of the CPU it's running on.

I'm running on my MacBook that I discussed in the opening, which has 12 cores.

This is the Intel i9, 12-core, maxed out, you know, all knobs to 11 type of CPU for my MacBook Pro here, so you can see it has a lot of processors.

It has a lot of cores.

In particular, it has 12 hyper-threaded cores, so six real cores, each one of which is hyper-threaded.

Here we have a program running in the background working as hard as it can.

How much CPU is the entire computer using?

Well, 9.5%.

If this were 10 years ago, on single-core systems, the CPU usage would be 100%, but you can see most of those cores are dark. They're doing nothing; they're just sitting there.

That's because our code is written in a single-threaded way.

It can't go any faster.

Let's just look at that in action real quick.

Now, this cool graph showing the 12 CPUs and stuff comes from a program called Process Explorer, which is a free program for Windows.

I'm on my Mac, so I'll show you something not nearly as good but I really wanted to show you this graph because I think it brings home just how underutilized this system is.

So let's go over here and we're going to run a performance or system monitoring tool actually built in Python which is pretty cool in and of itself, called Glances.

So we're sorting by CPU usage, we're showing what's going on.

We had a little Python running there for a second, probably in PyCharm, which is just hanging out, but you can see the system is not really doing a whole lot. It is recording, though.

Camtasia's doing a lot of work to record the screen so you have to sort of factor that out.

Now, let's go over here and create another screen. Notice right here we have 12 cores.

Okay, so we run ptpython, which is a nicer REPL but is just Python, and we write an incredibly simple program that's going to just hammer the CPU.

Say x = 1, and then while True: x += 1.

So we're just going to increment this, over and over and over.

I'm going to hit go, you should see Python jump up here and just consume 100% of one core.

This column here is actually measuring single-core consumption, whereas this is the overall 12-core bit.

Here it's working as hard as it can.

You'll see Python'll jump up there in a second.

Okay, so Python is working as hard as it can, 99.8%, running out of my brew-installed Python there.

But the overall CPU usage, including screen recording on this whole system?

11%. And of course, as we discussed, that's because the code we wrote in the REPL only uses one thread, only one thread of concurrent execution. That means the best we could ever possibly get is 1/12 of the machine.

8.3% is the best we could ever do in terms of taking advantage of this computer unless we leverage the async capabilities that we're going to discuss in this course.
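The 8.3% figure is just one core out of twelve. As a quick worked check, with `single_thread_ceiling` being a name invented for this sketch:

```python
def single_thread_ceiling(logical_cores):
    # A single-threaded program can keep at most one core busy, so its
    # share of the whole machine is capped at 100% / number of cores.
    return 100.0 / logical_cores


for cores in (1, 2, 4, 12):
    print(cores, "cores ->", round(single_thread_ceiling(cores), 1), "%")
```

On the 12-core machine described here that ceiling is about 8.3%, and on a dual-core machine it is 50%.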

So if you want to take advantage of modern hardware with multiple cores, and the more cores, the more pressing this is, you have to write concurrent, multi-threaded code.

Of course, we're going to see a variety of ways to do this, both for I/O-bound and, like this, CPU-bound work, and you handle those differently in Python, which is not always true for other languages but is true for Python.

And by the end of this course, you'll know several ways to take your Python program doing real computation, maybe not this simple, simple program, and push that up to nearly 100% so it's fully taking advantage of the CPU capabilities available to it.


Now you may be thinking, "Oh my gosh, Michael you have 12 CPUs, you can make your code go 12 times faster." Sorry to tell you that is almost never true.

Every now and then you'll run into algorithms that are sometimes referred to as embarrassingly parallelizable.

If you do, say, ray tracing, every single pixel is going to have its own track of computation.

Yes, we could probably make that go nearly 12 times faster.

But most algorithms, and most code, don't work that way.

So if we look at maybe the execution of a particular algorithm, we have these two sections here, these two green sections, that are potentially concurrent.

Right now they're not, but imagine we said, "Oh, that section and this other section, we could do that concurrently."

And let's say those represent 15% and 5% of the overall time.

Suppose we were able to take this code and rework the algorithm so that the green parts ran entirely in parallel.

Let's say the orange part cannot be broken apart.

We'll talk about why that is in just a second.

If we can break up this green part, let's imagine we had as many cores as we want, a distributed system on some cloud system.

We could add millions of cores if we want.

Then we could make those go to zero.

And if we could make the green parts go to zero, an extreme, non-realistic case, but think of it as an upper bound, then how much would be left?

80%. The most we could cut from the overall run time would be 20%, which works out to a speedup of at most 1.25 times.

So when you're thinking about concurrency you need to think about, well how much can be made concurrent and is that worth the added complexity?

And the added challenges, as we'll see.

Maybe it is.

It very well may be.

But it might not be.

In this case, maybe a 20% gain but really added complexity.

Maybe it's not worth it.

Remember, that 20% is the gain if we could add basically infinite parallelism to make the green parts go to zero, which won't really happen, right?

So you want to think about what the upper bound is, and why there might be orange sections.
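This upper bound is commonly known as Amdahl's law. Here is a small sketch of the arithmetic, using the 15% plus 5% parallel fraction from this example; the function names are made up for illustration.

```python
def remaining_time_fraction(parallel_fraction, workers):
    # The serial (orange) part is untouched; only the parallel (green)
    # part is divided among the workers.
    serial_fraction = 1.0 - parallel_fraction
    return serial_fraction + parallel_fraction / workers


def max_speedup(parallel_fraction, workers):
    return 1.0 / remaining_time_fraction(parallel_fraction, workers)


green = 0.15 + 0.05  # the two potentially concurrent sections

for workers in (2, 12, float("inf")):
    print(
        workers,
        "workers: {:.1%} of the time remains, {:.2f}x speedup".format(
            remaining_time_fraction(green, workers),
            max_speedup(green, workers),
        ),
    )
```

Even with unlimited workers, 80% of the run time remains, so the speedup never exceeds 1.25 times. With 12 cores it is a little lower still.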

Why might there be sections that we just can't execute in parallel?

Let's think about how you got in this course.

You probably went to the website, and you found the course, and you clicked a button and said, "I'd like to buy this course," put in your credit card, and the system went through a series of steps.

It said, "Well, OK, this person wants to buy the course.

Here's their credit card.

We're going to charge their card, then we're going to record an entry in the database that says they're in the course, and then we're going to send an email that says, hey, thanks for buying the course, here's your receipt, go check it out."

That can't really be parallelized.

Maybe the last two. Maybe if you're willing to accept that email going out even if the database write failed; it's unlikely but, you know, possible.

But you certainly cannot take charging the credit card and sending the welcome email and make those run concurrently.

There's a decent chance that, for some reason, a credit card got typed in wrong or it's flagged for fraud, possibly not appropriately, but, right, you've got to see what the credit card system and the company say.

There might not be funds for this for whatever reason.

So we just have to first charge the credit card and then send the email.

There's no way to do that in parallel.

Maybe we can do it in a way that's more scalable, that lets other operations unrelated to this run.

That's a different consideration, but in terms of making this one request, this one series of operations, faster, we can't make those parallel.

And that's the orange sections here.

A lot of code just has to happen in order, and that's how it is.

Every now and then, though, we have these green sections that we can parallelize, and we'll be focused on that in this course.

So keep in mind there's always an upper bound for improvement, even if you had infinite cores and infinite parallelism, you can't always just make it that many times faster, right?

There are these limitations, these orange sections that have to happen in serial.
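The purchase flow can be sketched in code to show where the ordering constraint sits. Everything here is hypothetical: the function names, the `card_ok` flag, and the short sleeps standing in for the payment provider, database, and email calls. `asyncio.run` assumes Python 3.7 or later.

```python
import asyncio


async def charge_card(card_ok, log):
    await asyncio.sleep(0.01)  # stand-in for the payment provider call
    if card_ok:
        log.append("charged")
    return card_ok


async def record_purchase(log):
    await asyncio.sleep(0.01)  # stand-in for the database write
    log.append("recorded")


async def send_receipt(log):
    await asyncio.sleep(0.01)  # stand-in for sending the email
    log.append("emailed")


async def purchase(card_ok):
    log = []
    # Orange section: nothing else may start until the charge succeeds.
    if not await charge_card(card_ok, log):
        return log
    # The last two steps could overlap, if we accept that the email
    # might go out even when the database write fails.
    await asyncio.gather(record_purchase(log), send_receipt(log))
    return log


good = asyncio.run(purchase(card_ok=True))
declined = asyncio.run(purchase(card_ok=False))
print(good)
print(declined)
```

When the card is declined, neither the database entry nor the email happens, which is exactly why the charge cannot run concurrently with them.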


Next up, let's focus on how we can use asynchronous or concurrent programming for scalability.

I want to take just a moment to address this word, scalability.

Often, people sort of think of scalability as performance and performance equaling speed and things being faster.

And that's not exactly what it means.

Let's think in terms of websites here.

That's not the only time we might think of this scalability concept.

But let's think in terms of websites.

If your website can process individual requests 10 times faster, it will be more scalable because it won't back up as much, right?

But scalability doesn't refer to how fast an individual request can be; it refers to how many requests your website, or your system, can handle until its performance degrades.

And that degradation might be just really long request times before you get back to someone or the browser.

It might mean it's so bad that requests start timing out.

It might mean that your system actually crashes.

There's some point where your system degrades in performance.

And when you talk about how scalable a system is, it's how much concurrent processing, how many requests at one time, it can handle until it starts to degrade.

As we add scalability to the system we might even make it a tiny bit slower for an individual request.

There's probably a little bit more work we're doing to add this capability to scale better, maybe.

It's not exactly talking about individual request speed because that actually might get worse.

However, it means maybe we could handle 10 times as many concurrent users or 100 times as many concurrent users on exactly the same hardware.

That's what we're focused on with scalability.

How do we get more out of the same hardware?

And we'll see that Python has a couple of really interesting ways to do that.


Let's visualize this concept of scalability around a synchronous series of executions.

So many web servers have built-in threaded modes for Python, and to some degree that helps, but it just doesn't help as much as it potentially could.

So we're going to employ other techniques to make it even better.

So let's see what happens if we have several requests coming into a system that executes them synchronously one after another.

So a request comes in, request 1, request 2 and request 3 pretty much right away.

The green boxes for requests 1, 2, and 3 tell you how long those requests take.

So request 1 and request 2, they look like maybe they're hitting the same page.

They're doing about the same thing.

3's actually really short.

Theoretically, if it were moved to the front, it could return much quicker.

So let's look at how this appears from the outside.

So a request 1 comes in and it takes about that long for the response.

But during that time request 2 came in, and before it could even get processed it had to wait for most of 1.

So that's actually worse because we're not scaling.

Okay, we can't do as much concurrently.

And finally if we look at 3, 3 is really bad.

So 3 came in at almost the same time as 1 started, but because the two longer requests were queued up in front of it, it took about four or five times as long as the request should have taken to respond, because this system was all clogged up.
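Here is a back-of-the-envelope sketch of that queueing effect. The arrival times and work times are invented numbers chosen to resemble the picture, not measurements from the course.

```python
def serial_response_times(requests):
    """requests: list of (arrival_time, work_time), in arrival order.

    Returns how long each caller waits for a response when the server
    handles one request at a time, first come, first served.
    """
    server_free_at = 0.0
    response_times = []
    for arrival, work in requests:
        start = max(arrival, server_free_at)  # wait for earlier requests
        server_free_at = start + work
        response_times.append(server_free_at - arrival)
    return response_times


# Requests 1 and 2 are long; request 3 is short but arrives last.
requests = [(0.0, 4.0), (0.5, 4.0), (1.0, 2.0)]
print(serial_response_times(requests))
```

With these numbers the third request needs 2 units of work but waits 9 units for its response, about four and a half times longer than it should.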

Now let's zoom into one of these requests into request 1 and if we look deep inside of it we can break it down into two different operations.

We have a little bit of framework code. I'm just going to call that framework because you can't control that, you can't do much with that, right?

This is the system actually receiving the socket data, creating the request and response objects, things like that.

And then right at the beginning maybe the first thing we want to do is say I would like to find out what user's logged in making this request.

So I go to the database: we'll maybe get a user ID or some kind of cookie from their request, and I'm going to use that cookie to retrieve the actual object that represents this user.

Then maybe that user wants to list, say their courses.

So then we're going to do a little bit of code to figure out what we're going to do there.

Okay, if the user's not None, we're going to make this other database call, and that database call might take even longer because it returns more data.

And then that response comes back.

We do a little bit of work to prepare it, we pass it off to the framework template, and then we just hand it off to the web framework.

So this request took a long time but if you look why it took a long time, it's mostly not because it's busy doing something.

It's because it's busy waiting.

And when you think about web systems, websites, web servers and so on, they're often waiting on something else.

Waiting on a web API they're calling, waiting on a database, waiting on the file system.

There's just a lot of waiting.

Dr. Seuss would be proud.

Because this code is synchronous, we can't do anything else.

I mean theoretically, if we had a way to say well we're just waiting on the database go do something else, that would be great.

But we don't.

We're writing synchronous code, so we call this function, call the database query function, and we just block 'til it responds.

Even in the threaded mode, that is a tied up thread over in the web worker process so this is not great.

This is why our scalability hurts.

If we could find a way to process request 2 and request 3 while we're waiting on the database in these red zones, we could really ramp up our scalability.
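
Here is a minimal sketch of that clogged-up, one-at-a-time behavior. The request names come from the diagram, but the wait times and the `handle` and `serve_synchronously` functions are made up for illustration, with `time.sleep` standing in for the database calls.

```python
import time

# Hypothetical wait times in seconds, standing in for the database calls.
REQUESTS = {"request 1": 0.05, "request 2": 0.05, "request 3": 0.01}


def handle(wait: float) -> None:
    """Pretend to serve a request; the sleep blocks everything else."""
    time.sleep(wait)


def serve_synchronously(requests: dict) -> dict:
    """Serve requests one after another and return each response time."""
    start = time.perf_counter()  # assume all requests arrive at this moment
    response_times = {}
    for name, wait in requests.items():
        handle(wait)
        # Time since arrival, so time spent queued behind others counts too.
        response_times[name] = time.perf_counter() - start
    return response_times


for name, seconds in serve_synchronously(REQUESTS).items():
    print(f"{name}: responded after {seconds:.3f}s")
```

Request 3 only needs a short wait of its own, yet its response time includes everything queued in front of it.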

|

|

|

show

|

2:14 |

Let's look at this same series of requests but with our web server having the ability to execute them concurrently at least while it's waiting.

So now we have request 1 come in, and we can't make that green bar get any shorter; that's how long it takes.

But, we saw that we were waiting before and if we could somehow run request 2 while we're waiting and then request 3 while both of those are waiting we could get much better response times.

So if we look at our response times again, request 1 takes exactly as long as it did before 'cause it was the first one; it was lucky.

But it takes as long as it takes.

However, request 2 is quite a bit shorter.

It didn't have to wait for that other processing; we were able to execute it concurrently.

Request 3 is the real winner here.

Actually, it returned much, much sooner.

It takes as long as it takes to process it, instead of four or five times that.

Now we can't possibly get perfect concurrency, right?

If we could do every single one of them concurrently then there'd be no need to have anything other than a five dollar server to run YouTube or something.

There is some overhead.

There are bits of our code and framework code that don't run concurrently.

But, because what our site was doing is mostly waiting we just found ways to be more productive about that wait time.

Zooming in again, now we have exactly the same operation.

Exactly the same thing happening but then we've changed this code.

We've changed ever so slightly how we're calling the database, and we've freed up our Python thread of execution, or just the execution of our code (let's not tie it to threads just yet), so that we can do other stuff.

So request 2 came in, right during that first database operation we're able to get right to it.

By the time we got to our code section, request 2 had begun waiting, so then we just ran our code. We went back to waiting, request 3 came in, and we just processed that because both of the others were waiting, and so on.

So we can leverage this wait time to really ramp up the scalability.

How do we do it?

Well, it's probably using something called AsyncIO which we're going to talk about right away.

It may even be using Python threads but it's probably AsyncIO.

If you don't know what either of those are that's fine, we're going to talk about them soon.
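
As a rough preview, here is a sketch of the same three requests served concurrently with asyncio. The wait times and the `handle` and `serve_concurrently` names are invented for illustration, and `asyncio.sleep` stands in for an awaitable database call.

```python
import asyncio
import time

# Hypothetical wait times in seconds, standing in for the database calls.
REQUESTS = {"request 1": 0.05, "request 2": 0.05, "request 3": 0.01}


async def handle(name: str, wait: float, start: float, response_times: dict) -> None:
    # While this request waits, the event loop is free to run the others.
    await asyncio.sleep(wait)
    response_times[name] = time.perf_counter() - start


async def serve_concurrently(requests: dict) -> dict:
    """Start every request right away and return each response time."""
    start = time.perf_counter()  # assume all requests arrive at this moment
    response_times = {}
    await asyncio.gather(
        *(handle(name, wait, start, response_times) for name, wait in requests.items())
    )
    return response_times


for name, seconds in asyncio.run(serve_concurrently(REQUESTS)).items():
    print(f"{name}: responded after {seconds:.3f}s")
```

Because the waits overlap, request 3 finishes first instead of last, and no request pays for the others' wait time.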

|

|

|

show

|

4:25 |

I want to draw a map or an illustration for you of Python's Async landscape.

So you'll see that there are many different techniques that we're going to employ throughout this course and different techniques apply in different situations or they will potentially give you different benefits.

So we're going to break the world into two halves here.

On one, we're going to say "Can we do more at once?" That's exactly the thing we just spoke about with say the web server.

While our database request is busy waiting let's go do something else.

The part we opened this chapter with, about leveraging the CPU cores and actually computing a single thing faster by taking advantage of multi-core systems, that's doing things faster.

Over in the do more at once, we have this thing called Asyncio.

Asyncio was introduced to Python around Python 3.4, but really came into its own when the language began to work with it, with the async and await keywords in Python 3.5.

So Asyncio is the easiest, clearest, simplest way to add scalability like we just saw in our concurrent request example.

You're waiting on something, go do something else.

We also have threads and threads have been around much longer.

They're harder to coordinate, they're harder to deal with error handling, but they're also a really great option to do more at once.

In some programming languages, threads would also be over in the do things faster.

We're going to get to why that is, but you may have heard a thing called the GIL and the GIL means computationally threads are effectively useless to us.

They let you do more things at once if there's a waiting period, but if it's purely computational in Python, threads are not going to help us.

What will help us?

Because the GIL is a process-level thing, Python has this capability to spawn sub-processes and a lot of infrastructure and APIs to manage that.

So instead of using threads, we might kick off five sub-processes that are each given a piece of the data and then compute the answer and return it to the main process.

So we'll see multi-processing is a tried and true way to work with adding computational performance improvements and taking advantage of multiple cores and this is usually done for computational reasons.

We want to leverage the cores, not talk to the database a bunch of times in parallel.

We would probably use threads for that.

We're also going to look at C and Cython.

C, C++, and Cython.

So C obviously can do multi-threaded stuff.

It could also do more at once but you know, we're going to leverage it more for the aspect of doing things faster in a couple ways.

However, writing C can be a little bit tricky.

It can be error prone and so on.

As a Python developer, it would be better if more of our code could actually be in Python or very very close to Python.

So we're going to talk about this thing called Cython not CPython but Cython.

Cython is a static compiler that compiles certain flavors of Python to C, then basically compiles that C and runs it under the restrictions that C has, which are very few, rather than the restrictions that Python has, which are fairly limiting.

So we'll be able to use Cython for very powerful multi-threading as well.

That's more using the threads in a computational way.

So this is the landscape.

On one hand we have do more at once.

Take advantage of wait periods.

On the other, we have do things faster: take advantage of more cores.

Now these are all fine, Asyncio is really nice, but there are also other libraries and other techniques out there that allow us to do these things more easily.

We're going to look at two libraries.

These are by no means a complete list of these types of libraries, but we're going to look at something called Trio and something called Unsync.

Async, Unsync: get the play on words, right?

So these are higher-level libraries that do things with the Asyncio capabilities, with threads, with multi-processing and so on, but put a new programming API on them, unify them, things like that.

This is your Python Async landscape.

Do more at once, do things faster, do either of those easier.

Basically, that's what this course is about.

By the end of this course, you'll have all of these in your tool box and you'll know when to use which tool.

|

|

|

show

|

2:53 |

I mentioned earlier that threads in Python don't really perform well.

They're fine if you're waiting but if you're doing computational stuff they really don't help.

Remember that example I showed you how we had our Python program doing a tight while loop that was just pounding the CPU and it's only getting 8.3% CPU usage.

Well if we had added 12 threads to do that how much of a benefit would it have gotten?

Zero, it would still only be using 8% of the CPU, even though I have 12 cores.

And the reason is this thing called the GIL or the Global Interpreter Lock.

Now the GIL is often referred to as one of the reasons that Python isn't super fast or scalable.

I think that's being a little bit harsh.

You'll see there's a lot of places where Python can run concurrently and can do interesting parallel work.

And it has a lot of things that have been added, like AsyncIO and the async and await keywords, which are super, super powerful.

The GIL is not the great terrible thing that people have made it out to be but it does mean that there's certain situations where Python cannot run concurrently.

And that's a bummer.

So this is the reason that the Python threads don't perform well.

The Global Interpreter Lock means only one thread or only one step of execution in Python can ever run at the same time regardless of whether that's in the same thread or multiple threads, right?

The Python interpreter only processes instructions one at a time, no matter where they come from.

You might think of this as a threading thing and in some sense it's a thread safety thing but the GIL is actually a memory management feature.

The reason it's there, is it allows the reference counting which is how Python primarily handles its memory management, to be simpler and faster.

With the Global Interpreter Lock that means we don't have to take many fine-grained locks around allocation and memory management.

And it means single-threaded Python is faster than it would otherwise be although it obviously hurts parallel computation.

But the thinking is most Python code is serial in its execution, so let's make that the best and the GIL is what it is.

It's going to make our parallelism less good.

As you'll see throughout this course, Python has a lot of options to get around the GIL to do true concurrent processing, and we're going to talk about those, but the GIL itself cannot be avoided and you should really understand that it exists, what it is, and primarily that it's a thread safety feature for memory management.

You can learn more about it if you want at realpython.com/python-gil, G-I-L. There's a great article there that goes into all the depth and the history and so on, so go check that out if you're interested in digging into it.

Keep in mind, this GIL is omnipresent and we always have to think about how it affects our parallelism.
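
If you'd like to see this for yourself, here is a small experiment sketch. The `count_down`, `run_serial` and `run_threaded` functions are invented for illustration. On a standard CPython build with the GIL you would expect the threaded run to take about as long as the serial one, but the exact timings depend on your machine and Python build, so none are shown here.

```python
import threading
import time


def count_down(n: int) -> int:
    """Pure-Python, CPU-bound loop: no waiting, so threads must take turns on the GIL."""
    steps = 0
    while n > 0:
        n -= 1
        steps += 1
    return steps


def run_serial(n: int, repeats: int) -> float:
    """Run the loop `repeats` times back to back; return elapsed seconds."""
    start = time.perf_counter()
    for _ in range(repeats):
        count_down(n)
    return time.perf_counter() - start


def run_threaded(n: int, repeats: int) -> float:
    """Run the same work in `repeats` threads; return elapsed seconds."""
    threads = [threading.Thread(target=count_down, args=(n,)) for _ in range(repeats)]
    start = time.perf_counter()
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return time.perf_counter() - start


n, repeats = 200_000, 4  # small numbers so the experiment finishes quickly
print(f"serial:   {run_serial(n, repeats):.3f}s")
print(f"threaded: {run_threaded(n, repeats):.3f}s")
```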

|

|

|

|

56:33 |

|

|

show

|

1:15 |

We're going to start our actual exploration and programming examples of concurrency in Python without threads and without sub-processes.

That's right, we're going to do concurrent programming no threads, no sub-processes.

It may sound impossible.

It certainly sounds kind of weird, right?

We think of parallel programming as involving multiple threads or maybe multiple processes in the case of a sub-process.

But we're going to see that there's a new, fancy way that is mostly focused on scalability that does not at all involve threads in Python.

It is my favorite type of concurrent programming in Python by far.

Of course I'm speaking about AsyncIO.

AsyncIO is what you might call cooperative concurrency or parallelism.

The programs themselves state explicitly, "Here's a part where I'm waiting; you can go do something else.

Here's another part where I'm waiting on a web request or a database; you can go do other work right then, but not other times." With threads we don't have this, and certainly with multiprocessing and Cython we don't have this.

So where are we on this landscape?

We're in this green AsyncIO area and, of course, Unsync is built on top of that, and Trio builds on the same async and await model.

So, I kind of highlighted that as well but we're not going to talk explicitly about those yet.

This is the foundation of those libraries.

|

|

|

show

|

3:51 |

If you haven't worked with AsyncIO before it's going to be a little bit of a mind shift.

But it turns out the programming model is actually the easiest of all the concurrent programming models that we're going to work with.

So don't worry, it's actually the easiest, newest, and one of the best ways to write concurrent programs in Python.

So let's step back a minute and think about how do we typically conceptualize or visualize concurrency?

Well, it usually looks something like this.

We have some kind of code running and then we want to do multiple things at a time so we might kick off some other threads or some other processes.

And then our main thread, and all the other threads are going to run up to some point, and we're going to just wait for that secondary extra work to be done.

And then we're going to continue executing along this way.

Like I said, this is typically done with threads or multiprocessing, okay?

In many languages it's only threads; in Python, because of the GIL, multiprocessing is often involved.

Now, this model of concurrent programming, one thread kicking off others and waiting for them to complete, this fork-join pattern, makes a lot of sense.

But in this AsyncIO world, this is typically not how it works.

Typically, something entirely different happens.

So in this world, we're depending upon the operating system to schedule the threads or schedule the processes and manage the concurrency.

It's called preemptive multithreading.

Your code doesn't get to decide when it runs relative to other parts of your code.

You just say I want to do all these things in parallel it's the operating system's job to make them happen concurrently.

Now, contrast that with I/O driven concurrency.

So in I/O driven concurrency we don't have multiple threads.

We just have one thread of execution running along.

This may be your main app, it actually could be a background thread as well but there's just one thread managing this parallelism.

Typically, when we have concurrency if we have multiple cores, we're actually doing more than one thing at a time assuming the GIL's not in play.

We're doing more than one thing at a time.

If we could take those multiple things we're trying to do and slice them into little tiny pieces that each take a fraction of a second or fractions of milliseconds and then we just interweave them, one after another switching between them, well it would feel just the same, especially if there's waiting periods, this I/O bit.

So what if we take our various tasks, green task, pink task, blue task, and so on, and we break them up into little tiny slices and we run them a little bit here, a little bit there.

So here's a task.

Here's another task.

And we find ways to break them up.

And these places where they break up are where we're often waiting on a database, calling a web service, talking to the file system, doing anything that's an external device or system, and we keep going like this.

This type of parallelism uses no threads, adds no overhead really, and it still gives a feeling of concurrency, especially if we break these things into little tiny pieces and we would've spent a lot of time waiting anyway.

This is the conceptual view you should have of AsyncIO.

How do we take what would be big, somewhat slow operations and break them into a bunch of little ones and denote these places where we're waiting on something else.

In fact, Python has a keyword to say we're waiting here, we're waiting there, we're waiting there, and the keyword is await.

So we're going to be programming with two new keywords, async and await, and they're going to be based on this I/O driven concurrency model, and this you might call cooperative multithreading.

It's up to our code to say, I'm waiting here so you can go do something else; when I get my callback from the web service, please pick up here and keep going.

It's really awesome how it works and it's actually the easiest style of parallelism that you're going to work with.
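
To make the slicing idea concrete, here is a tiny sketch with two tasks on a single thread. The `task` and `main` names and the slice labels are made up for illustration, and `await asyncio.sleep(0)` stands in for a real wait on a database or web service.

```python
import asyncio


async def task(name: str, slices: int, log: list) -> None:
    for i in range(slices):
        log.append(f"{name} slice {i}")
        # "I'm waiting here": hand control back so another task can run.
        await asyncio.sleep(0)


async def main() -> list:
    log = []
    # Both tasks share one thread; they interleave only at the await points.
    await asyncio.gather(task("green", 3, log), task("pink", 3, log))
    return log


for entry in asyncio.run(main()):
    print(entry)
```

The log alternates green, pink, green, pink: each task runs one slice, reaches its await, and lets the other go.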

|

|

|

show

|

9:05 |

It's high time to write some code, and much of what we are going to do going forward is to write demos and then think about how they worked, how we can change them, and what the key elements were.

Let's begin by actually building a little bit of foundation.

So, what we're going to do is we're going to talk about using asyncio.

Now, asyncio is quite magical in how it works but actually pretty easy in the programming model that you're going to use.

So, what I want to do is give you a conceptual foundation that's a little easier to understand that doesn't actually have to do with concurrency.

So, we're going to look at this idea of generator functions.

If you're familiar with the yield keyword you'll know what exactly I'm talking about.

So, let's go over to our github repository.

We have 04 section and we have generators and we're going to move on to this producer and consumer thing.

But, let's start with generators.

Now, the first thing I want to do is open this in PyCharm and configure this to have its own virtual environment.

The generator example won't have external packages but, the other stuff will.

So, let's get that all set up.

We'll come over here and we're going to create a virtual environment.

Now, I have a little command that will run python3 -m venv and then activate it and then upgrade pip and setuptools.

That all happened.

Okay, so, now we can pip list and see our Python has this virtual environment we just created here.

And in Windows, you would type where python.

Okay, so, we're going to open this in PyCharm.

So, here's our new virtual environment and we can see that it actually is active.

So, we have our Python that is active here.

So, this is great.

Now what we want to do is we want to write a very simple generator.

Why are we messing with generators?

Why do we care about this at all?

Like, this is not asynchronous stuff.

Generators don't have much to do with async programming or threading, or things like that.

Well, this idea of a coroutine or what you might think of as a restartable function is critical to how asyncio works.

And, I think it's a little easier to understand with generators.

So, what we want to do is write a quick function called fib.

And, at first we're going to pass in an integer and it's going to return a list of integers.

We can import that from typing.

So, we're going to take this and we're going to generate that many Fibonacci numbers.

Totally simple.

We're going to write it as a straightforward, non-generator function.

So, I'm going to return a list.

We have to have some numbers.

Put those here.

Those would be our Fibonacci numbers.

And, then we're going to have current and next.

These are going to be 0 and 1.

The algorithm is super simple.

While the length of numbers is less than n.

Say it like this: current, next = next, current + next, then numbers.append(current).

We'll return numbers.

Let's see if I wrote that right.

So, let's print fib of the first ten Fibonacci numbers.

Go over here, I'm going to run this.

Each one of these is going to be a separate code example, so I will mark this directory as a source root.

That way it doesn't look above this for other files, and we'll go and run this.

1, 1, 2, 3, 5, 8, 13, 21, 34, 55. Perfect, it looks like I managed to implement the Fibonacci numbers, once again.
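
Pieced together from the narration, the list-returning version looks roughly like this. It's a reconstruction, not necessarily the exact file from the course repository, and the second variable is named `nxt` here (an assumption on my part) so it doesn't shadow Python's built-in `next`.

```python
from typing import List


def fib(n: int) -> List[int]:
    """Return the first n Fibonacci numbers as a list."""
    numbers = []
    current, nxt = 0, 1
    while len(numbers) < n:
        # Advance one step in the sequence, then record the new value.
        current, nxt = nxt, current + nxt
        numbers.append(current)
    return numbers


print(fib(10))  # [1, 1, 2, 3, 5, 8, 13, 21, 34, 55]
```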

However, there's some problems here.

Not very realistic.

The Fibonacci sequence is infinite, right?

By passing in this number, we are working with a finite set.

What if we want to work through these numbers and go, I'm looking for the condition where the previous and current Fibonacci numbers, excluding 1 and 2, are actually both prime numbers, maybe greater than 1000.

Who knows.

Something like that.

If you're looking for that situation you don't know how many to ask for.

What if you ask for 10,000.

Well, that didn't work.

Ask for 100,000.

Did that work?

I don't know.

Ask for 1,000,000.

What would be better is if the consumer of this truly infinite sequence could actually decide when they've had enough.

And the way we can do that is with what's called a generator.

So, I'm going to comment that one out.

Actually, let's duplicate it first.

I'm going to change this.

First we're going to leave it like this here except for we're going to say, no longer returns that.

So, what we're going to do is actually go through the sequence instead of creating this list, right, and I can just make this while True.

Let's actually just make the whole sequence all at once.

It's a little easier.

So, what we're going to do, is this.

Now, we're never going to return numbers.

Instead, what we're going to do is generate a sequence.

And, in Python, there's this really cool concept with this yield keyword of these coroutines.

These generator methods.

The idea is, they are effectively restartable functions.

So, what yield does, it lets you say I want to execute up to some point in a series until I get to one and then I can give it back to whoever asked for it.

They can look at it, work with it and then decide whether this function should keep running.

It's a totally wild idea, but it's entirely easy to write.

So check this out.

We do this, we don't want to print all of them.

So, now, we want to be a little more careful 'cause that's an infinite sequence: for n in fib(), print(n), and this is going to be comma separated there.

Then we'll say, if n > 10,000, break.

Then break and done.

So, now, we have an infinite sequence that we're deciding when we want to stop pulling on it.

Well, let's just run it and see what happens.

Hmm.

If we want one more print in there.

Here we go.

1, 1, 2, 3, 5.

And, incredible, it just went until we decided to stop asking for it.
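
Here is a sketch of the generator version as described. As before, it's reconstructed from the narration, and `nxt` is used (my choice, not the course's) in place of `next` to avoid shadowing the built-in.

```python
from typing import Iterator


def fib() -> Iterator[int]:
    """Yield Fibonacci numbers forever; the caller decides when to stop."""
    current, nxt = 0, 1
    while True:
        current, nxt = nxt, current + nxt
        yield current  # hand one value back and pause right here


for n in fib():
    print(n, end=", ")
    if n > 10_000:
        break  # we've had enough, so we simply stop asking
print()
```

The loop stops after printing the first value above 10,000; the function itself never has to know how many numbers anyone wanted.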

How does that work?

So, understanding what is happening here is key to understanding how asyncio works.

So, let's set a breakpoint.

Actually, let's set it up here.

Set it right here.

I'm also going to set a breakpoint right there.

On a normal function, what would happen is we would hit this breakpoint.

This line would run and it would of course, go in here and execute all of this and then this code would run.

But, these are restartable functions, these generators.

Just like the async coroutines.

So, let's step through it and see what happens.

Here we are, we first ran to this and that makes perfect sense.

Nothing surprising there.

But, watch this breakpoint on line 14.

If we step over, it didn't run.

What we got back is not a sequence but is a generator that we can then iterate.

So, it's not until we start pulling on this generator that it even starts to run.

So, I'm going to step a little bit.

I'm going to be over here in current notice.

0 and 1.

This function is starting up.

It's totally normal.

Now, we're going to yield, which means go back here.

Oh, I need to use the step into.

We're going to step in down here now n is a 1, the second 1 actually.

We go down here and we print it out.

Now, we're going to go back into the function but look where we started.

We started on line 16, not line 13 and the values have kept what they were doing before.

So, I'm just going to run this a few times.

Now if we step in again, again it's back here and it's 3 and 5.

These generator functions are restartable.

They can run to a point and then stop and then resume.

There's no parallelism going on here, but you could build this cooperative parallelism or concurrency pretty straightforwardly with just generator functions, actually.

You just need to know how and when to say stop.

So, let's go through just a little bit more until we get to here.

So, now we have n at this point, and if we no longer loop over it, if we break out of the loop, we stop pulling on the generator and that basically abandons this continued execution of it.

So, if we step, we're done, that's it.

So, the last time we saw these run they were, you know whatever the last two Fibonacci numbers were.

And then, we just stopped pulling on it and it stopped doing its work.

We didn't restart it again and this example is over.
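
If you don't have a debugger handy, you can watch the same start, pause and resume behavior by recording events. This is only a sketch: the `events` list and its messages are added for illustration and aren't part of the course code.

```python
events = []


def fib():
    events.append("body started")
    current, nxt = 0, 1
    while True:
        current, nxt = nxt, current + nxt
        events.append(f"about to yield {current}")
        yield current
        events.append(f"resumed after yielding {current}")


gen = fib()
print(events)      # still empty: calling fib() did not run any of the body

first = next(gen)  # runs the body up to the first yield, then pauses
second = next(gen) # resumes right after the yield, with its variables intact
gen.close()        # stop pulling; the paused function is simply abandoned

for event in events:
    print(event)
```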

So, of course I'm going to put this in your code.

You can play with it.

There's nothing that hard about the actual written code; it's the order and flow of the execution that is really special, and that you kind of have to get a little bit used to.

So, I encourage you to take a moment and open this up in PyCharm, or something like that with a decent debugger, and just go through the steps a few times and see what's happening.

Once you get comfortable with that we're ready to talk about asyncio.

|

|

|

show

|

3:08 |

Let's begin our exploration of asyncio by looking at this common pattern called the producer-consumer pattern.

The idea is, there's some part of the system that typically runs independently, asynchronously, that generates items.

Maybe it receives a request to fulfill a job: charge this credit card, send these emails, import this data file, things like that.

There's another part of the system that is also running asynchronously that looks for work to be done, and as the consumer it's going to pick up these jobs that are created by the producer, and start working on them.

I have an extremely simple version of that here.

It doesn't really do anything useful.

So, we have generate data, and we have process data.

And we're just using this list to share, to exchange data between these two.

So, let's look at generate data.

It's going to go, and just create the square of whatever number we give it and it's going to store in our list, a tuple.

So that's one thing going in the list that is a tuple the item that was generated, and the time at which that generation happened.

And then it prints out in yellow.

We're using this cool library, called Colorama to do different colored output.

And color, in threading and concurrency, is super helpful for understanding what part is happening where and what order it's all happening in.

We're also flushing everything to make sure there's no delay in the buffer when we print stuff.

As soon as we say print, you see it.

So, to simulate it taking some time to generate some data or do the producer side, we call time.sleep() for between 0.5 and 1.5 seconds.

On the process data side, we're going to look for some data. If it's not there, this structure doesn't really have a concept of get it and wait; we'll talk more about that later.

But once an item comes in, we just grab it.

In cyan we say we found it, and we also determine and print out how long it took from when it was created till we were able to get to it and process it.

Because the lower that number, the better the concurrency we have.

As soon as something is deposited for us to use and pick up, we should immediately get to it.

But this code is synchronous.

We do all the generation, then all the processing.

So the first item is not going to be processed until all the generation is done, in this 20, and 20.

Let's just run it and see what this means.

The app is running, you can see we're generating in yellow.

It's kind of a subdued color in the PyCharm terminal, but it comes out yellowish, and cyan for the consumer.

We're waiting for all 20.

There we are.

Now we're starting to process them.

Notice, we were not able to get to the first one until 20 seconds after it was created.

Now, the consumption of these is faster than the production so we were able to catch up somewhat and we got it down to 10 seconds, 10.48 seconds for the best case scenario.

You can see these numbers that we picked up out of there were the ones generated previously.

And the overall time it took to run that was 30, 31-ish seconds.

None of this is great, and we can actually dramatically improve this with asyncio.

Not a little bit, a whole lot.
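
For reference, here is a rough sketch of what a synchronous producer and consumer like this might look like. It is not the course's actual file: the colored output is left out, the message text is invented, the half-second consumer sleep is an assumption, and the `delay` parameter is added here only so you can shrink the sleeps while experimenting.

```python
import datetime
import random
import time


def generate_data(num: int, data: list, delay: float = 1.0) -> None:
    """Producer: append (squared value, creation time) tuples to the list."""
    for idx in range(1, num + 1):
        item = idx * idx
        data.append((item, datetime.datetime.now()))
        print(f" -- generated item {idx}", flush=True)
        # Simulate the work of producing an item (0.5 to 1.5 s at delay=1.0).
        time.sleep(random.uniform(0.5, 1.5) * delay)


def process_data(num: int, data: list, delay: float = 1.0) -> list:
    """Consumer: take items off the list; return how long each one waited."""
    waits = []
    while len(waits) < num:
        if not data:
            # A plain list has no "get and wait", so poll again shortly.
            time.sleep(0.01 * delay)
            continue
        item, created = data.pop(0)
        waited = (datetime.datetime.now() - created).total_seconds()
        print(f" +++ processed value {item} after {waited:,.2f} sec", flush=True)
        waits.append(waited)
        time.sleep(0.5 * delay)  # assumed processing time per item
    return waits


def main(num: int = 20, delay: float = 1.0) -> list:
    data = []
    generate_data(num, data, delay)  # all of the generation happens first...
    return process_data(num, data, delay)  # ...and only then the processing


if __name__ == "__main__":
    main()
```

Calling `main(num=3, delay=0.01)` runs the same flow in a fraction of a second, which is handy for trying things out.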

|

|

|

show

|

5:36 |

You've seen our producer consumer running in synchronous mode.

We generate all the data and then we process all the data.

And of course the first thing happens and then the second because we're not using any concurrent algorithms or techniques here.

Now also notice I have a produce-sync and a produce-async.

Currently these are exactly the same, so I'm going to leave you this sync program in source control so you can start from scratch, basically from where we're starting right now, if you'd like.

But we're going to convert this what currently is a synchronous program to the async one.

So in the end, the async program will be, well, the async version.

So there's a couple of things we have to do to make our program async.

Syntactically they're simple.

Conceptually there's a lot going on here and we've already talked a lot about that with the event loop and slicing our functions up into little pieces.

We've talked about the generator function and these restartable, resumable coroutines.

All of that stuff is going to come in play here.

But the API, pretty straightforward.

So what we need to do is two parts.

Let's start with the execution of these things.

So in order to run asynchronous coroutines we can't just call them like regular functions like right here.

We have to run them in that asyncio loop and that asyncio loop is going to execute on whatever thread or environment that we start it on.

And it's our job to create and run that loop.

So let's go over here and create the loop.

And it's really easy.

All we do is say asyncio and we have to import that at the top.

We say, get_event_loop().

And later we're going to say, loop.run_until_complete().

And we have to pass something in here.

So we're going to wait just a moment to see what we're going to pass there.

So we're going to create the loop we're going to give it these items to execute and then we're going to have it run until they are completed.

The other thing that we need to do is we need a different data structure.

So right now we're passing this list, just this standard Python list, and we're asking, hey, is there something in there?

And if there is, let me get it otherwise let me sleep.

And we technically could do that again just with the async version.

But it turns out that asyncio has a better way to work with this.

So we can get what's called an asyncio.Queue.

It's like a standard queue.

You put stuff in and it sort of piles up.

You ask for it out it comes out in the order it went in.

First-in, first-out style okay?

The benefit is this one allows us to wait and tell the asyncio loop: you can continue doing other work until something comes into this queue, and then wake up, resume my coroutine, and get it running.

So you'll see that it's going to be really helpful.

Now it looks like something's wrong.

It sort of is.

Right here you see this little highlight around data and that's because in the type annotations we've said that this is a list but we just need to type that as an asyncio.Queue and then just adapt the methods here.

Okay so we don't have an append, we have a put.

We don't have a pop, we have a get.
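
As a small illustration of put and get, here is a sketch where the consumer starts waiting before anything has been produced. The `producer`, `consumer` and `main` names and the sample items are made up for this example.

```python
import asyncio


async def consumer(queue: asyncio.Queue, received: list) -> None:
    for _ in range(3):
        # If the queue is empty, this suspends the coroutine (without
        # blocking the loop) until something is put.
        item = await queue.get()
        received.append(item)


async def producer(queue: asyncio.Queue) -> None:
    for item in ("a", "b", "c"):
        await asyncio.sleep(0.01)  # pretend it takes a while to produce
        await queue.put(item)


async def main() -> list:
    queue = asyncio.Queue()
    received = []
    # The consumer is listed first, so it is already waiting on get()
    # when the first item arrives.
    await asyncio.gather(consumer(queue, received), producer(queue))
    return received


print(asyncio.run(main()))  # ['a', 'b', 'c']
```

Items come out in the order they went in, and the consumer never has to poll or sleep on its own.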

Now we're going to see some interesting things happening here that we're still going to have to deal with but we're going to use this asyncio queue.

So the way it's going to work is we're going to track the execution of these separately.

We're going to kick them off, we're going to run all of those, and then we want to go down here and say: start them and wait until they finish.

So we're going to use another concept to get them started here.

We're going to go to our loop and say create_task, and we're just going to pass the coroutine in here.

And we actually call the function, or rather the coroutine, with its parameters.

But like a generator function, it doesn't actually run until you start to pull on it and make it go, and that's what create_task is going to do.

So we'll say task1 equals this and we'll do exactly the same for the other except we'll call it task2.
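
A small sketch can make the ordering visible. The coroutine body and the `log` list here are hypothetical stand-ins, and `new_event_loop()` is used in place of `get_event_loop()` because recent Python versions deprecate calling the latter when no loop is running.

```python
import asyncio

log = []


async def generate_data(label):
    # Hypothetical stand-in for the lecture's coroutine.
    log.append(f"running {label}")


loop = asyncio.new_event_loop()

coro = generate_data("first")     # calling it only builds a coroutine object
log.append("coroutine created")

task1 = loop.create_task(coro)    # scheduled on the loop, but not running yet
log.append("task created")

loop.run_until_complete(task1)    # the body finally executes here
loop.close()

print(log)
# ['coroutine created', 'task created', 'running first']
```

Nothing inside `generate_data` runs until the loop itself is running, which is why the "running" entry is last.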

And then we want to come down here and run our loop, but wait until complete.

Now if you look at what this takes it takes a single future or task.

A single thing that is going to run and then we can wait on it.

Not two, one.

And that's super annoying.

Why isn't that a star args, so that it does what we're about to do automatically for us?

It's okay, so we'll say final_task, and we're going to go to asyncio and say gather, and what you do is you pass it the tasks as separate arguments.

task1, task2, et cetera.

And then we run the final_task to completion.
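
Put together, the execution side might look like the sketch below. The `work` coroutine and its delays are assumptions standing in for generate_data and process_data, and `new_event_loop()` replaces `get_event_loop()` because newer Python versions deprecate the latter outside a running loop.

```python
import asyncio


async def work(name, delay):
    # Hypothetical coroutine standing in for generate_data / process_data.
    await asyncio.sleep(delay)
    return name


loop = asyncio.new_event_loop()

task1 = loop.create_task(work("producer", 0.02))
task2 = loop.create_task(work("consumer", 0.01))

# run_until_complete() accepts one awaitable, so gather() wraps both tasks.
# The tasks are passed as separate arguments, not as a list.
final_task = asyncio.gather(task1, task2)

results = loop.run_until_complete(final_task)
loop.close()

print(results)  # ['producer', 'consumer']
```

`gather` returns results in the order the tasks were passed in, regardless of which one finished first.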

Alright so this is the execution side of things.

We create the loop up here on line 10.

We use a better data structure, we kick off a few coroutines, and we convert them into a single task that we can then run until complete.

What's interesting is nothing is going to happen until line 21.

If we printed out, hey, starting this loop, starting this coroutine, starting this method, generating data, none of that is going to actually do anything until we start it here. We're going to block, and then we'll be done and we'll figure out how long it took.

So this is how we run coroutines but we don't yet have coroutines down here.

So our next task is going to be to convert those into actual asynchronous coroutines rather than standard Python methods.


We've got the execution of our async loop working: we've created our tasks, we've gathered them up, and we're waiting to run until complete.

But obviously these are standard old Python methods, so what we need to do is adapt these two methods, generate_data and process_data, so they work.

If we try to run it now, I'm not really sure what's going to happen. It's got to be some kind of error, so let's find out.

RuntimeWarning: stuff is not awaited.

Well, they did start, but when we tried to generate the data, and when we tried to gather it up and process it, it didn't work out so well, did it?

No, it did not.

Yeah, right here it said, you know, that didn't work: we called it, it returned None, and this thing said I expect a coroutine, not this thing that ran and returned some value.
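
That failure can be reproduced in isolation. This is only a sketch: the function body is a hypothetical placeholder, and the exact wording of the error message may vary between Python versions, so it is printed rather than assumed.

```python
import asyncio


def generate_data():
    # A regular function: it runs immediately and returns None.
    return None


loop = asyncio.new_event_loop()
try:
    # The call happens first, so create_task() receives None, not a coroutine.
    loop.create_task(generate_data())
    error = None
except TypeError as exc:
    error = str(exc)
finally:
    loop.close()

print(error)
```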

Let's go and convert this. Now there's two things that we have to do to make our methods asynchronous coroutines, and there's two Python keywords to make this happen.

So what we have to do is we have to mark the method as an async method.

So we say async like this. This is a new keyword as of Python 3.5.

So now we have two async methods, and if we run them they do sort of run, but notice there's no asynchronous behavior here. They're not actually asynchronous, so that's not great.

Just because we say they're asynchronous, you know, doesn't mean Python decides how to slice them up.

Our job is to take this code here and say, I would like to break it into little pieces that can run, and the dividing points are where we're waiting.

Well, what are we waiting on here?

So PyCharm helps a little bit and this data structure helps a lot.

So there's two places we're waiting in generate_data. We're waiting, clearly, on line 33, where we're saying time.sleep.

Now you don't want to use time.sleep; this puts the whole thread and the entire asyncio loop to sleep.

There's a better way to indicate I'm done for a while, I'm just going to sleep, you can keep working: we can use asyncio's sleep, okay?

Same as time.sleep, but now there's two warnings, and what are those warnings?

It is PyCharm saying you have an asynchronous method with two places you could wait, where you could tell the system to keep running and go do other stuff, but you're not.

So Python has a cool keyword called await, and you just put that in front. These are the markers, on lines 30 and 33, that tell Python to break up the code into little slices.

Come in here, run until you get to this point, wait and let other stuff happen here. When you're done with that and you have some time, come back and do this, and then wait until you're done sleeping, which is, you know, .5 to 1.5 seconds, and then carry on again, okay?
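
To see why the kind of sleep matters, here is a rough comparison. The worker names and the 0.1 second delay are assumptions chosen to keep the run short, and `asyncio.run` is used for brevity rather than the explicit loop.

```python
import asyncio
import time


async def blocking_worker():
    time.sleep(0.1)            # blocks the thread and the whole event loop


async def cooperative_worker():
    await asyncio.sleep(0.1)   # yields to the loop while waiting


async def run_pair(worker):
    # Run two copies of the worker together and time the pair.
    start = time.perf_counter()
    await asyncio.gather(worker(), worker())
    return time.perf_counter() - start


blocking_time = asyncio.run(run_pair(blocking_worker))
cooperative_time = asyncio.run(run_pair(cooperative_worker))

print(f"time.sleep:    {blocking_time:.2f}s")     # the two waits run back to back
print(f"asyncio.sleep: {cooperative_time:.2f}s")  # the two waits overlap
```

Both workers are marked async, but only the one that awaits gives the loop a chance to interleave them.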

So we've converted this to an async method; now we've got to do the same thing down here.

So this is async, and for this one, for some reason PyCharm doesn't know about the get, but if you go here you can see it's an async method.

So that means that we have to await it. When you await the item, the coroutine is going to pause until the return value comes out, and the return value is going to be this tuple.

Notice before the await, we had errors here; it was saying coroutine does not have __getitem__.

So when you call a coroutine, you have to await it to actually get the value back from it, okay?
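
The difference between calling and awaiting can be checked directly. In this sketch the tuple contents are made up for illustration, and `asyncio.run` stands in for the explicit loop.

```python
import asyncio


async def demo():
    queue = asyncio.Queue()
    await queue.put((1, "payload"))

    pending = queue.get()                    # no await: just a coroutine object
    is_coro = asyncio.iscoroutine(pending)

    item = await pending                     # awaiting it yields the real tuple
    return is_coro, item


is_coro, item = asyncio.run(demo())
print(is_coro, item)  # True (1, 'payload')
```

Indexing into `pending` would fail, because it is a coroutine and not the tuple; only the awaited result can be unpacked.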

Again, we were sleeping down here, so this is going to be an asyncio.sleep, and now that it's that style we have to await it as well.

So pretty simple, two steps: mark the methods as async, which is required, and then once you do that, you can use the await keyword anywhere you would have waited, anywhere you're calling a function that is async.

In this case it's kind of silly sleep stuff, but we're going to do a much better, more realistic example when we start talking to web servers and stuff, real soon.

So this is sort of a toy example; we're going to get some real work done with async and await in just a moment.

I think we're ready: our times are awaited and they're async, we're awaiting the various places where we get and put data, and we have the async methods here. Let's run this and see what we get.

Notice we don't need time anymore.

Notice a very different behavior. Look at this: we're generating the item and then we're going to wait on something, waiting for more data to be generated or delivered, so then we can immediately go and process it.

Look at that latency: 0. As soon as an item is delivered, or almost as soon, we can go and pick it up.

It took 20 seconds instead of 30, that's pretty awesome.
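
A scaled-down sketch of the converted producer and consumer is below. It is not the lecture's exact code: the sleep values are shortened, the item shape (a value plus a creation timestamp) is an assumption, and `asyncio.run` with a `main` coroutine replaces the explicit loop.

```python
import asyncio
import time


async def generate_data(num, data):
    for idx in range(1, num + 1):
        # Store the item together with the time it was produced.
        await data.put((idx * idx, time.perf_counter()))
        await asyncio.sleep(0.01)    # stand-in for the 0.5 to 1.5 second pause


async def process_data(num, data, latencies):
    for _ in range(num):
        value, created = await data.get()   # suspends until an item arrives
        latencies.append(time.perf_counter() - created)
        await asyncio.sleep(0.005)   # assumed processing time


async def main():
    data = asyncio.Queue()
    latencies = []
    # Producer and consumer run interleaved on the same loop.
    await asyncio.gather(
        generate_data(10, data),
        process_data(10, data, latencies),
    )
    return latencies


latencies = asyncio.run(main())
print(f"processed {len(latencies)} items, max latency {max(latencies):.4f}s")
```

Because the consumer is faster than the producer here, each item is picked up almost as soon as it is put on the queue, which is the near-zero latency seen in the run.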

Now we're seeing 0, 0, 0, 0 here because the producer is not producing as fast as the consumer can consume.

So let's take this and make one minor change here: let's generate one more of those. I'll call that one task2 and that one task3, and this one we need to tell that it's expecting more data, since we're not dealing with cancellation or other types of signals like that, not yet. Okay.

So now we should be producing more data than we can consume and that should be even a little more interesting.

Notice the beginning is kind of similar, but now sometimes we're processing two, sometimes we're processing one.

The latencies are still pretty good, but like I said, we can't quite keep up, so that's okay. Let's see what happens at the end.

Yeah, pretty good. So in the beginning we generated a couple and had to kind of do a couple of double steps to catch up, but in the end we were more or less able to keep up after we generated all the data, especially when one of them finished and the other was still working.

Really, really cool: even generating twice as much data, it took only 22 seconds.

I believe that would have been 50 seconds if we had done that synchronously.

How cool is this?

So all we had to do is break up these functions into parts where we're waiting on something else. We're waiting on putting items into a queue, we're waiting on, well, time, but like I said, that's a stand-in for database access, file access, cache, network, etc.

Okay, so we just break them up using this await keyword. We didn't have to change our programming model at all; that's the most important takeaway here.

We didn't have to all of a sudden start off a thread, or use callbacks and other weird completion mechanisms.

It's exactly the same code that you would normally write, except that you have async and you have these await sections.

So the programming model is the standard synchronous model that you know and that's really, really nice and important.

All right, that's a first introduction to asyncio.

We create the loop, we create some tasks based on some coroutines that we're going to call and then we run until they're complete.


Now that you've seen our asyncio example in action let's look at the anatomy of an async method.

Remember there's two core things we have to do.

We begin by making the method async.

So we have a regular method: def, the method name, and so on.

To make it async you just put async in front.

Remember, this requires Python 3.5 or higher.

And then you're going to await all of the other async calls that you make, so anytime within this method you're going to call another async method, you have to await it.

And that tells Python: here is a part, a slice of this job, and you can interleave it with slices of other jobs in the asyncio event loop.

So we know that get is an async method.

PyCharm didn't help us very much on this one, but if you go to the definition you'll see. Also, we didn't actually get a tuple back, we got a coroutine, so that's a dead giveaway there.

And that's pretty much it.
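
Side by side, the two steps might look like this. All three function names are hypothetical, and `fetch` is only a placeholder for whatever async call the method depends on.

```python
import asyncio


def load_sync(key):
    # Regular method: runs start to finish when called.
    return key.upper()


async def fetch(key):
    # Hypothetical async call that the method below depends on.
    await asyncio.sleep(0)
    return key.upper()


async def load_async(key):
    # Step 1: "async" in front of def makes this a coroutine.
    # Step 2: every async call inside is awaited, marking a point where
    #         the event loop may switch to other work.
    value = await fetch(key)
    return value


sync_result = load_sync("abc")
async_result = asyncio.run(load_async("abc"))
print(sync_result, async_result)  # ABC ABC
```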

You don't really have to do much differently at all.

The most important thing is that, anytime you can, you use some sort of method that actually supports asynchronous calls.

So if you're talking to a database, try to find the driver that supports asynchronous operations, and so on.

We're going to talk more about that later as we get farther in the class, but the more places you can await stuff, and the more fine-grained the work is, probably the better.


Let's take a moment and just appreciate the performance improvements that we got.

And there's two metrics on which I want to measure this.

One is the overall time taken for some amount of work to be done, and the other is: what is the average latency?

How long does it take for the consumer to get the data that the producer produced?

So, here's the synchronous version, or a subset of it: we generate all the data and then we process all the data.

And you can see the overall time it took was about 30 seconds and the average latency is about 15.

The best we ever got was 10 seconds; that was for the very last one that we processed.

And if we did the example where we had two of them, it would be even worse.

So, this is okay, but it's not great.
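
Those numbers can be roughly reconstructed with some arithmetic. This sketch assumes 20 items, about 1.0 second to generate each one (the middle of the 0.5 to 1.5 second range), and 0.5 seconds to process each one; the item count and the processing time are illustrative assumptions, not figures taken from the lecture's code.

```python
# Assumed, illustrative timings for the synchronous version.
NUM_ITEMS = 20
GENERATE_SECONDS = 1.0
PROCESS_SECONDS = 0.5

# Every item is generated before any item is processed.
created_at = [GENERATE_SECONDS * (i + 1) for i in range(NUM_ITEMS)]
generation_done = created_at[-1]

# Items are then picked up in order, one every PROCESS_SECONDS.
picked_up_at = [generation_done + PROCESS_SECONDS * i for i in range(NUM_ITEMS)]

# Latency is the gap between creating an item and picking it up.
latencies = [picked - created for picked, created in zip(picked_up_at, created_at)]
total_time = generation_done + PROCESS_SECONDS * NUM_ITEMS
average_latency = sum(latencies) / NUM_ITEMS

print(total_time)        # 30.0
print(average_latency)   # 14.25
print(min(latencies))    # 9.5
```

Under these assumptions the total comes out at 30 seconds, the average latency lands near 15, and the best case is the last item at just under 10 seconds, which lines up with the figures quoted.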

And we saw that with asyncio we can dramatically improve things.