|

|

|

26:58 |

|

|

show

|

2:38 |

Hello and welcome to 100 Days of Web in Python, written by Bob Belderbos, Julian Sequeira and myself, Michael Kennedy.

Maybe you're wondering what this #100DaysofCode you've seen all over social media is all about.

It's really really taken off, and people are finding this concept of 100 Days of Code really powerful for getting them over the hump, and to become capable developers, or maybe learn a new language like, say, Python.

Here's an example of what you might see on Twitter.

Horne Sanchez says, "Day 11, #100DaysOfCode progress." "Today I worked more on byte 18." "Find the most common word from codechalleng.es/bytes." This code challenge platform they're referring to is actually from your coauthors, Bob and Julian.

We'll talk more about that later.

Here's another tweet.

"Day five of 100." "Did some short exercises about modules." "Imported modules, did a couple of my own." "Tomorrow, I/O." "#100DaysOfCode #Python." Way to go, Briggs.

Here we have "Round one, day 101." "Had to do an extra day due to one day off sick" "earlier in the 100 Days of Code." "Today's more of Python debugging." "Tomorrow starts round two." And Jeff says, "Round one, day 19 #100DaysOfCode." "Did three exercises in the book, basically my average." "Been taking it a bit slow these last few days." "#CodeNewbie #Python #IndieDev indie game dev." And finally, let's look at one more.

Amit Kumar says, "#Day32, another autowebcompat PR" "that is, pull request, just got merged." "Way to go." "Python tkinter #100DaysOfCode." So he added some new feature or bug fix to autowebcompat.

Very very cool.

So you've seen this stuff probably all over social media, Facebook, Twitter, and so on.

What's it all about?

Well, this is actually a very structured project put together by this guy, Alexander Calloway.

So Alexander, he was studying in business school he also wanted to learn programming.

He was having a hard time making progress so he came up with this idea of 100 Days of Code.

Here's a quote from him.

"The idea of 100 Days of Code originally came from" "my personal frustration with my inability to" "consistently learn to code after work." "I'd find other, less involved activities" "to spend my time on, like binge watching a TV series." "One of those days, I was just sitting in a restaurant" "with my wife, sharing my frustrations with her." "I suggested, maybe I should make some kind" "of public commitment to learn for at least" "an hour every day." "I thought it would go for maybe three months" "but it turned out 100 days was just the right length." How about that?

Well, thank you for creating this project, Alexander.

This is really really great for many many people getting started.

That's what this course is all about we're going to give you lessons and exercises for every one of these 100 days.

|

|

|

show

|

1:01 |

There are a lot of things that people do to support each other and encourage themselves to stay focused and keep going on 100 Days of Code.

But, there's really just two main rules and they're really, really simple.

The first rule is to code a minimum of an hour a day every day for the next 100 days.

And I would say taking a coding course like learning the lessons in this course and then coding a little bit counts, right?

You're immersing yourself in code for at least an hour a day every single day for 100 days.

And if you get sick, like you saw the person before had gotten sick and had to take a day off that's okay, just add a couple days on the end to make them up.

The second rule is a public commitment to making progress and keeping with it.

And the way that works is to tweet or put something on Facebook like #100DaysOfCode with an update every day.

The PyBites platform is actually going to help you a lot with this, but however you want to do it it's code an hour every day and share your progress every day.

Super simple rules and we hope this course really makes 100 Days of Code project work for you.

|

|

|

show

|

3:48 |

We are going to cover so much material in this course that we simply can't go through it all in any reasonable way.

But, I do want to give you a sampling a couple of the highlights that stand out to me.

We're going to cover the three major frameworks as we see them, Django, Flask, and Pyramid and we're going to do a lot with each one of these frameworks tons of different things we're going to add and use cases and scenarios we're going to put them through.

We're also going to cover some of the foundations of the web like HTML 5, CSS, and JavaScript.

So, maybe you know HTML, maybe you're not super good at it maybe you have always kind of avoided CSS.

We're going to spend a certain amount of time really digging into that so you know it really, really, well as we go through this course.

We'll do a quick primer on JavaScript if you haven't used that language for awhile or it's totally new to you.

No worries, we got you covered there.

We're also going to cover some of the single page application frameworks for JavaScript like React and Vue.js.

We're going to talk about how to write data and query data from SQLite.

This is an embedded database that comes with Python and it's super simple to use but it's a good stand-in for your favorite database be that Postgres, MySQL, Microsoft SQL Server, whatever.

When we talk to databases we can do direct queries against it but, most of the time, we're going to use some kind of ORM.

We're going to use SQLAlchemy a lot.

We're also going to use the Django ORM during the Django sections.

We're going to see how to create REST APIs in a whole bunch of different frameworks.

So, you're going to do all sorts of cool stuff with things like API Star, Responder using Pyramid and Flask and just tons and tons of different APIs we're going to build.

Then, we're going to call those services sometimes testing it with Postman sometimes calling it from JavaScript or even directly from within our Python code with something like Requests.

We're going to write async code.

The web server and web applications are ideal candidates for using Python's async and await keywords.

The reason is, most of the time web apps are waiting on something else on the network, on external APIs, on databases, and so on.

So, they could go do productive work while they're waiting on these other systems.

And, we're going to talk about a couple of frameworks and async and await itself to make this super easy and super powerful in Python.

We're going to talk about web scraping.

Sometimes, it's great to call an API but if the API doesn't exist, and the data's on the web guess what, you can use Python and get at that data anyway.

So, we're going to talk about web scraping and how to get data off of websites that don't have an API or, maybe, we don't know about their API.

Of course, once we write all our web apps we don't want to ship a busted version to production and take the website offline.

We want to make sure it works and it continues to work automatically so we're going to write unit tests against our web application mocking out the database and API calls and all sorts of cool stuff like that.

We're going to take our application and deploy them to Linux using uWSGI and Nginx and we're also going to deploy them to Heroku so you'll see a couple of deployment scenarios which are pretty awesome, and you'll learn the basics of operating Python web applications in production on Linux.

We're also going to talk about Docker.

Containers are super important these days.

There's a lot of use cases for them and we're going to follow on our Linux deployment stuff with Docker because Docker is really a special way to create very small, lightweight customized Linux deployment, right?

So, everything you learn about deploying to Linux is going to be super useful for when we get to Docker.

You'll just have to use the Docker commands rather than, say, the Shell commands directly.

We'll see how to do containers and even orchestrate multi-container systems with Docker Compose.

This is just a tiny sampling of what we're going to cover.

We're going to go through so many amazing topics and little libraries, and different things that you can do on the web with Python.

You're going to have a hard time choosing what to use at the end of this course, I'm sure.

|

|

|

show

|

2:02 |

So you've chosen Python for your 100 Days of Code project in this 100 Days of Web.

Well I'm here to tell you that you've chosen wisely.

Look at this picture.

One of these languages is unlike the other.

Can you pick it out?

Of course, Python is growing super super fast and this graph is not just some random thing I found on the internet.

This is a report done by Stack Overflow which has some of the better insights into the developer space.

Here you can see the red graph is the actual usage up to 2018 this report was done a little while ago but I think it's still totally relevant.

And the gray part is the projecting out.

So if you look at Python it is just set to dominate the industry in terms of programming languages.

JavaScript has been really really popular as well but guess what?

It's topping off, you know it's not quite going down but it's not really growing that much.

You look at Java, Flat, the rest of 'em are going down.

So if you had to pick somewhere to build your skills and to jump into this technology space would you rather be in a growing space or shrinking space?

You know, my rule of thumb is I like to bet my career on things that have positive derivatives are going up, they're not going down.

Okay so, this is cool it's really popular amongst the old school traditional languages if you will the ones that have been around 10, 20 or more years.

You might be thinking well Rust and Go, I heard they're hot.

And Swift is pretty cool and all those things so how does Python compare to those?

I bet they're even more awesome and they're probably going up even faster.

Well, I have a graph for that too.

Here's how it compares to things like Swift and TypeScript and Go and so on.

Yes all of these languages are generally going up but even so they're nothing like Python.

The usage is not like Python nor is the rate of increase like Python.

So Python's pretty special and I think this is a really great framework for building web applications and other types of apps and I think it's a super super good skill to have in your skillset.

|

|

|

show

|

2:20 |

Let's talk a little bit about the course organization and how you're going to go through it during your 100 Days of Code.

We don't have 100 separate little projects nor do we have one huge project you work on for 100 days.

Instead, we've broken the course into four day segments where you work on one project, one idea you learn one thing and you work on it during those four days.

Typically, the way it goes is on the first day you're going to watch the lessons.

They're usually 45 minutes to an hour or maybe you do a little bit of coding we'll tell you what to do and help you along the way there but mostly the first day of any major topic Django, Pyramid, Async, whatever is going to be watching these videos.

Then then next day we're going to have some project for you.

And on GitHub, each section has its own read me that says on day one or day N you do this on day two you do that, on day three, day four and it just walks you through.

It's pretty open-ended and you get to explore and play with the ideas but it is guided so you're not just left all alone.

Next day, probably a little bit more code and then you might finalize what you're working on and finish up that project as you go.

And that's typically a given topic in this course.

So you can think of this course as 25 four day segments.

Except, we've actually left one open where we have four little independent ideas that weren't big enough to fit into this format.

Also, some of the topics are longer the way I've laid it out here.

For example, Docker is an hour and a half.

So on the first day, you watch maybe 50 minutes of Docker, something like that.

Day two, you work on applying what you learned there.

Day three, you come back and watch some more videos and build on that with, say, Docker Compose in this example.

And then finally, on day N plus three or day four you're going to finish up working with those new ideas.

So the basic workflow is these four day segments on any given topic.

If you want to skip around, that's fine that's totally okay they don't have huge dependencies between them but we do assume that you know what came before.

So, for example, if you jumped to the end into some Flask section, we've assumed that you have the knowledge that we covered in the first part where we just introduced Flask and things like that.

But as long as you're willing to fill in those gaps or you feel like you have the prerequisite knowledge you can jump around and that's okay long as you think of this format as being kind of the atomic unit there.

|

|

|

show

|

1:30 |

Hello and welcome to the course.

My name is Bob Belderbos and I'm a software developer at Oracle and co-founder of PyBites, a blog, community and platform that teaches Python via practical real world code challenges.

I'm honored and excited to teach you Python and web development in this course.

My programming journey started 12 years ago learning HTML, CSS, JavaScript and PHP to build websites.

I've been hooked ever since.

Programming for the web is awesome because the browser gives you instant feedback as you can easily get users to run your code.

My more recent work includes building our PyBites platform using Django, AWS Lambda and Heroku all technologies you will learn in this course.

Good day everyone this is Julian Sequeira.

As with Bob I am the co-founder of PyBites and Code Challenges.

And currently I'm a new Python developer at Amazon Web Services, very exciting.

And last but not least I am a trainer here on Talk Python Training.

I'm absolutely stoked to be sharing your 100 days journey with you.

Please don't get sick of me but I'm here to help.

So if you need anything you always feel free to reach out.

Hey welcome to the course.

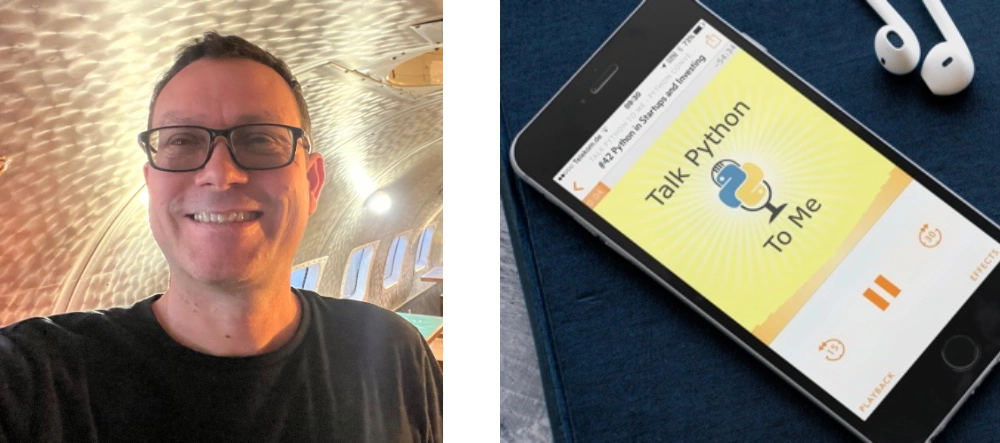

I'm Michael Kennedy, nice to meet you.

A little background on me, I'm primarily a web developer as well as the founder of the Talk Python To Me podcast and Talk Python Training.

I've been a professional developer for 20 years and I'm really excited to share that experience that I've gained with you throughout this course.

Welcome to the 100 Days of Web in Python.

|

|

|

show

|

1:10 |

You inevitably will learn a ton of Python during this course.

But, it is not a beginner course.

This course is supposed to teach you web development in Python assuming that you basically know Python.

Now, you're going to be able to follow along if you don't know absolutely everything like, if you couldn't bust out Metaclasses just off the top of your head no problem, don't worry about it.

We'll talk a little bit about the features as we use them and of course repetition you'll learn a bunch from that as well.

But, when you think about the Python language when you look at it if it's a little unclear it's a little hazy, maybe you don't remember all the details of exactly how it goes maybe you don't know much about it you just played with it a bit don't worry, we've got something to clear things up for you.

At the end of the course we've added a Python primer.

So here's 46 minutes of little concepts that show you how each of these core ideas in Python works.

Two minutes on classes Two and a half minutes on slicing one and a half minute on if else statements and things like that.

So if you're a little rusty or a little blurry on the Python language you know, be sure to jump to this appendix at the end get a little refresher and then jump into the main course content.

|

|

|

show

|

2:13 |

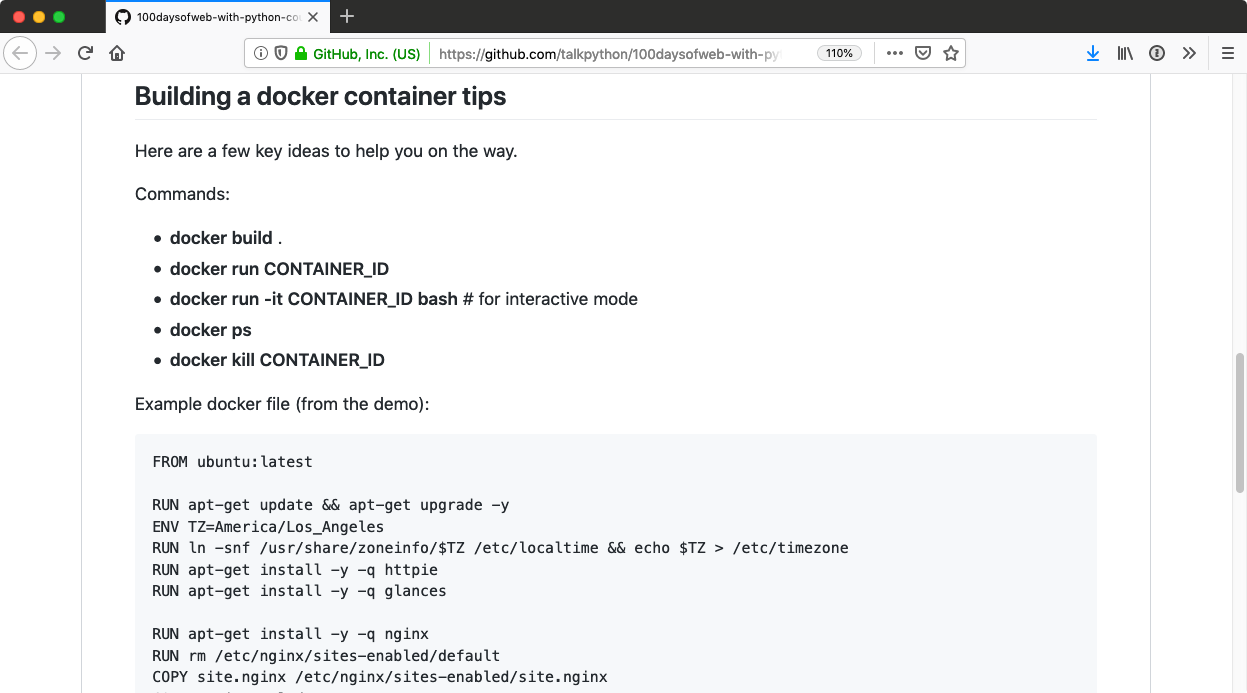

It's really important that you get the source code for this course.

A lot of the projects start with existing code or existing data and you're going to need to build on top of it.

And of course, where do we put the code?

We put it on GitHub.

You can find it over at github.com/talkPython/100daysofweb-with-Python-course.

So let's go over there and see what you'll find when you get there.

Here's the GitHub repo for our project.

First thing you need to do is go and star this and fork it.

So you're going to want to fork this repository so that you have it with you and you can make changes to yours and also star it just so you have a quick reference.

Then you're going to want to download it somehow.

Either click here and copy the clone URL and just clone it with Git, if you're familiar with that.

If for some reason you don't want to mess with Git that's fine, just grab that gear and just download the ZIP file.

Git's a little better 'cause you can do a Git pull and then update it if we make any changes.

Of course, a ZIP file's probably just fine.

You can always update that as well.

Now the most important part is the days.

So here you can see these are the four day projects that we've talked about.

So here we're going to talk about Flask and then HTML5, and then CSS basics and things like that.

Let's jump into the CSS one to see what's there.

Told you it's important to start with this because there's existing code to work with and one of the things you do is work with this thing called Selectorville to play with CSS selectors.

It's like a little app that lets you type CSS selectors into it and it'll generate changes in the page, basically.

Then, the most important part is your turn.

As you know, you're going to be writing some code in this course.

Here's where you go.

So you come in here and it just talks about the various stuff that you've done kind of like the flow we discussed in the beginning.

Day one you just watch the videos day two you play with Selectorville that's the thing you just saw next to this folder.

So you're going to go play with that.

It talks a little bit how to do that.

On day three, one of the things we did in these videos is build out a custom navigation.

So it says, "Hey, why don't you try to recreate "the talkpython.fm, the podcast navigation bar up here?" Like this, just this top black box.

So it talks about here's how you do it and here's some of the tools for it and then finally, the fourth day for this one is to play with Bootstrap Themes and finally share what you've learned.

So make sure you get the code because each four day project will be something like this.

|

|

|

show

|

1:29 |

When you dedicate yourself to taking a course and carefully working the way that the instructor the author is working, you are effectively gaining much of the experience that that particular author that developer, has gained throughout their career.

This course is special because it's taught by three people.

That means you get three experiences in one and this is super valuable.

Imagine that you have a job in this place.

You get to work with cool VR gear, on hardware and IoT things, you'll gain one set of experiences but if you took a different job say you're starting a fashion startup with your friend, this woman from college and you're working out of this coffee shop being scrappy, working and trying to get venture capital and launch your application, you'll have a totally different experience than that dude in a VR headset.

Or maybe you go the corporate route work at Microsoft like this guy here.

He's working on some new programming language tooling around Python.

These are all super different experiences and these experiences are all really positive.

They'll give you different perspectives on programming, and that's awesome.

How is that relevant to this course?

Well, with three instructors, we each have a slightly different set of tools and a slightly different way of working.

We're going to show you next how each of us gets set up what you need to follow along with each of us Julie and Bob and myself, during our particular segments.

You don't have to work like us but if you want to follow along and do exactly what we're doing we'll show you how to set up the tools to do that.

You'll have not just one experience but three experiences bundled up into one.

So on the other side of this course, you're going to have a broader perspective on Python development and that's pretty awesome.

|

|

|

show

|

0:34 |

G'day guys, this is Julian Sequeira.

Just running you through my setup for the next 100 days.

I use Windows 10.

Don't judge me, but I do, and I absolutely love it.

So you'll see in all of my videos I'll be running Commander as the terminal equivalent on Windows, and that's simply installed.

You can follow the videos to get that done.

For anything web, I use Chrome.

That's pretty much all you need from me to follow along with my videos.

There will be some other services that we'll set up but those will come on the specific days.

So let's get cracking.

|

|

|

show

|

0:35 |

In this course, I use Python 3.7 on a Mac which you can get from Python.org and I use Vim as my default editor.

For external Python dependencies I create virtual environments using the built in Vim module then pip installing the requirements or external dependencies into them to not clutter up the global Python name space.

For my JavaScript and React lessons I use Node, NPM, the JavaScript Package Manager and the Chrome DevTools.

Lastly, I will introduce you to our platform where we developed 100 days progress tracker you can use to share your progress and stay on course.

|

|

|

show

|

2:45 |

Let's talk just a moment about my setup so you can most easily follow along.

If you want to work the way I'm working when you're following my examples of course you're welcome to work the way Julian is the way Bob is, but if you're going to follow along like me, here's what I'm doing.

So I'm running macOS Mojave.

I'm using PyCharm Professional for my editor and I'm using Python 3.7 installed from Homebrew.

So if you're on your Mac you probably should know about Homebrew.

Homebrew's pretty awesome.

It's easy to install, you run that little command right there, then you can type things like brew install Python.

So you're going to definitely want to check and see if you have Python because if you just type Python on your Mac, mmm.

Not good 2.7, look and see Python and we don't want that.

Type Python3 to make sure that you have a modern version.

I think some of the projects are going to require Python 3.6 or higher, so aim for 3.7 or higher than that.

If a new one comes out since we've recorded this or at least Python 3.6.

You don't want an old one, okay?

Do some of the async stuff and f-Strings and things like that and you're going to need at least 3.6 for that to even work.

What you get this is brew install Python.

Python 3 is just straight Python.

That's the way they treat it.

Modern Python is Python in Homebrew which is really really great.

I've already done that so I don't need to but that's how I got Python on here.

If you're on Windows instead, I recommend you check out this relatively new thing Python 3.7 in the Windows store.

So this will put Windows in your path it'll give you the Python3 command.

All the important stuff you want on Windows and it'll even auto-update itself.

Totally free, you can check that out on Windows 10.

If you're on a Linux, it probably already comes with Python 3.

Use your package manager to install that and update it and just do that check Python3 -V to see what you get.

Finally, PyCharm.

I'm going to be using PyCharm as my IDE.

And now there's the full fledged Professional Edition and the Community Edition.

If you click on features, it shows you what features are in the Pro Edition and what are also available in the Community.

If you're doing standard Python stuff you could use the Community Edition, it's fine.

But, you know what this course is about?

Web development, and Python Web Frameworks, and Databases.

All three of those things are only in PyCharm Pro.

So either also get PyCharm Pro.

It has a free trial, it's like eight dollars a month if you want to pay for it or if you're not going to use PyCharm my second best recommendation would be Visual Studio Code with a Python plug-in.

So those are probably the two closest things of course, PyCharm is what I'm going to be using.

There's a little bit where I use WebStorm but that's kind of the same product, more or less.

You can use PyCharm Pro in that situation.

We talk about it then anyway.

All right, here's my set up and what I'm working with.

You don't have to match this exactly but here's what I'm doing in case you want to try to get as close as you can.

|

|

|

show

|

2:48 |

In this video I want to introduce you to the PyBites Code Challenges Platform.

If you head over to codechalleng.es you're presented with this home page.

We designed our Code Challenges platform because we are strong believers in learning by doing and the best way to learn Python is to write a lot of code.

First, let's log in with GitHub.

When you log in you're redirected to the dashboard.

You have a leaderboard the bites you have earned but what's relevant for this course is that we have 100 Days Of Python section.

If you go there, there are a couple of grids and to follow along with this course we made a talkPython_web template.

And here you see all the course materials spread out over a 100 days.

You can start the 100 days by clicking start button.

This will create a project or as we like to call it, grid and every day you can Tweet out your progress and mark items complete.

The Tweet is already formatted to show the work you are going to do today #Python, the day number and copy in @pybites and @talkPython so that we are notified on Twitter.

Then you can mark the items complete.

This way you're working towards 100% completion of the course.

This is totally optional but we noticed that having a tracker can be very efficient to persist and the Tweeting part is a nice way to share your work and to work with accountability partners.

A great way to share your progress is to join our Slack community.

There are some great Pythonistas there sharing the work and you can always ask questions if you get stuck with the course.

To join our community, just head over to settings, confirm your email and use the join PyBites Slack button.

By default you will join the code challeng.es and the general channel but we also have 100 Days of Code channel which is a dedicated channel for people taking a challenge.

Well, that's that.

We hope you enjoy the course and use our platform to stay on track.

Have fun and learn a lot of Python and web.

Keep calm and code in Python.

|

|

|

show

|

2:05 |

Welcome to your course i want to take just a quick moment to take you on a tour, the video player in all of its features so that you get the most out of this entire course and all the courses you take with us so you'll start your course page of course, and you can see that it graze out and collapses the work they've already done so let's, go to the next video here opens up this separate player and you could see it a standard video player stuff you can pause for play you can actually skip back a few seconds or skip forward a few more you can jump to the next or previous lecture things like that shows you which chapter in which lecture topic you're learning right now and as other cool stuff like take me to the course page, show me the full transcript dialogue for this lecture take me to get home repo where the source code for this course lives and even do full text search and when we have transcripts that's searching every spoken word in the entire video not just titles and description that things like that also some social media stuff up there as well.

For those of you who have a hard time hearing or don't speak english is your first language we have subtitles from the transcripts, so if you turn on subtitles right here, you'll be able to follow along as this words are spoken on the screen.

I know that could be a big help to some of you just cause this is a web app doesn't mean you can't use your keyboard.

You want a pause and play?

Use your space bar to top of that, you want to skip ahead or backwards left arrow, right?

Our next lecture shift left shift, right went to toggle subtitles just hit s and if you wonder what all the hockey star and click this little thing right here, it'll bring up a dialogue with all the hockey options.

Finally, you may be watching this on a tablet or even a phone, hopefully a big phone, but you might be watching this in some sort of touch screen device.

If that's true, you're probably holding with your thumb, so you click right here.

Seek back ten seconds right there to seek ahead thirty and, of course, click in the middle to toggle play or pause now on ios because the way i was works, they don't let you auto start playing videos, so you may have to click right in the middle here.

Start each lecture on iowa's that's a player now go enjoy that core.

|

|

|

|

47:20 |

|

|

show

|

0:59 |

G'day everyone, and welcome to the 100 Days of Web in Python course.

Thank you for giving it a crack.

I'm Julian Sequeira.

I'm going to be taking you through the very first section of four days, and we're going to be looking at the Flask web framework.

It's going to be an introduction to Flask specifically around setting up a Flask package of your own that you can run and then we're going to look at templates and not just templates as a page but as a recurring theme throughout your website or your web tool, okay?

Very simple, but very, very powerful stuff and very useful, okay?

And that's what we're all about here with this Hundred Days of Web and Hundred Days of Code thing so I hope you have a lot of fun.

Stick with it.

Get through the full 100 days, and you will be so impressed with how far you come.

But enough talking.

Let's get cracking on Flask.

|

|

|

show

|

2:15 |

Alrighty, before we get started let's have a quick look at what we're going to be doing over the next four days.

The first day is going to be watching videos.

How lucky are you guys?

This is all about setting up your first Flask app.

In the package format go through the videos starting at the initial setup video and work your way through to creating your app and then configuring your Flask end file.

That's where I'd like you to draw the line.

Try not to do too much.

The reason I say this is this is the 100 days of code challenge.

You do not want to get burnt out by doing four or five hours of straight code in your first day.

The idea behind the challenge, again, 30 to 60 minutes.

Limit yourself, maintain that motivation and focus.

So watch the videos have a little bit of a play but don't do too much.

You don't want to get burnt out.

Day two, watch the second half of the videos.

Now these videos from day two will actually continue what you did in day one and this time we'll extend on the application and then create a standard template that will flow across all the pages on your website or in your Flask app.

That's actually a really important concept of Flask.

Very useful, so make sure you master that one and then when you are done, again try not to do too much because you've got days three and four to play.

That's going to be a lot of fun for you.

It's free form.

I don't want to make it too difficult.

I don't want you to burn yourself out again.

Just have a play.

This time for three and four I would like you to actually create your own app.

So start from scratch.

Get rid of everything that we've done just push it to the side.

Try not to look at it.

See if you can create your app.

Obviously, reference it where you have to but create everything yourself.

Create your own base HTML file get some basic CSS in there make it pretty, and just have some fun with it.

And that's it!

So, move onto the next video.

Get cracking on day one and work your way through these days referencing this Read Me file if you need to.

|

|

|

show

|

3:39 |

Righty-o, quick video here.

Just on the setup for this application.

We have here Commander, I'm on Windows.

It's going to look similar regardless of whatever you're environment is that you're using or your OS.

I've created a directory called 1-flask just 'cause we're on Chapter One and we're building a Flask app, go figure!

What I would like you to do is create a directory something like that, whatever you want to call it.

This is going to be your parent directory.

We're going to build our very first Flask application within this directory.

The first thing we have to do after doing that is arguably the most important thing you have to do and that is create your virtual environment.

That will make sure everything we do here all the packages we install, it's all self contained in this awesome little virtual environment.

So because we're using Python 3 I would not be using Python 2.7 or whatever for this course.

We're doing it all in Python 3.

You can check your version with python --version.

I'm using 3.6.

Haven't installed 3.7 yet.

Doesn't really matter for this.

Just make sure you're using Python 3.

Run the command, Python -m venv venv and what this will do is create it will create a virtual environment called venv, okay?

Do an LS and you can see I've got this venv folder there.

Now, if you are using Windows, I hope you're using Commander or some sort of Linux shell 'cause it is a bit of a pain to do this on the default command line.

But that's completely up to you.

So what we're going to do is we're going to go in to the venv.

You'll see there is an include, lib and scripts directory.

This is different on macOS or Linux.

In those ones, you'll probably see a bin folder, okay?

A binary folder.

This one, because this is Windows we're going to go into scripts and you'll see there is an activate batch file, okay?

All we have to do is activate it.

And there you can see we have this virtual environment little bracket thing in front of our command line.

That means that we have now activated the virtual environment and everything we do is going to be self contained in there.

If you are using Mac or Linux from your parent directory here, where you see the venv directory you're going to do source venv/bin/activate.

Running that command will initiate your virtual environment.

And that's it.

Next, and next most important you are going to install Flask.

All we really need to know for this specific chapter is that we're installing Flask with pip.

So pip install flask.

And we'll let that install that we'll install Flask and all of its dependencies.

You can see them flying up the screen there.

We don't need to install anything else.

Just ignore this if you see that.

I mean, you can always upgrade pip yourself, that's fine.

For me, I'm okay.

And now, we can do a pip list and this will show you all of your installed packages.

And that's it!

So, we have our environment installed.

We have Flask installed and we're now ready to start creating the directory hierarchy here for Flask and get cracking on our first app.

|

|

|

show

|

2:57 |

Now that we have our virtual environment created right there we need to actually create our application directory hierarchy setup, okay?

What we're going to do, which is the more Pythonic way of doing this, I suppose, is actually package our Flask application.

This package is going to host our application.

The reason we're doing this is just to allow the app to be imported at a later date.

It's more about scalability and growth and everything like that.

You can actually just create a Flask app as we did in one our previous courses just with a single file but this is the better way of doing it and, as I said, it's more scalable.

So, with that said take a look at this.

This is what our hierarchy, or what our folder directory structure is going to look like.

We have here our parent directory.

That's the Flask folder.

And we have our virtual environment here.

What we are going to create is our Python file that defines our Flask application.

It's nothing crazy.

It's just one line of code, so we'll see that going forward.

I just wanted to point that out.

The meat of our application is going to live within our folder here, which we're creating our program in so, I've decided to name it "program".

We have our parent directory.

We have the program directory which is going to house the meat of our application.

This is the package.

Inside that, we have the dunder named file so it's a __init__.py file.

That is what denotes that this is a package that this is a Python package, that can be imported.

It has the rules in there, in that __init__.py file.

And the bulk of our application is then run out of the routes.py file, as you can see down there.

The routes file is going to create all the routes all the webpages, all the links for our application.

It's going to have all the Python code in it.

There will be other folders going forward but this is what we're creating now.

So, go ahead, create a folder called "program".

That is going to be your application directory.

Create a demo.py file.

It's just going to be blank.

Create, within your program directory, an init file __init__.py, spelled exactly like that and a routes.py file.

So, give that a go, and when you're done it will look something like this.

Okay, so we have our demo.py.

We have the program directory.

We have have __init__.py and we have routes.py.

Leave it there.

Let's move on to the next video.

|

|

|

show

|

2:33 |

Okay, we're going to set up each of these files, one at a time.

Let's start with the __init__.py file cause that's arguably the most important file here.

To begin, let's start our virtual environment.

If you don't already have it, activate it.

Okay, in the folder we have demo.py program and virtual environment.

Let's head into our program directory and there is the __init__.py file we created.

So use whatever editor you want to just pop into that and what we need to do is we need to first import flask.

So if you've ever done a single file flask app before you keep the actual importing of flask and the configuration of your app object, your Flask object.

That all happens in the one file where your routes is stored and everything.

Not so here.

Your __init__.py file is going to actually configure flask for you and tell the package what's what.

Alright.

What we want to do is insert.

So, I forgot for second there I was using Vim.

So we're going to do from flask.

So from flask import Flask.

And then we're going to create.

This is where we create our application our flask application instance.

Now this is going to create the flask instance using the name of __name__.

Now, here's where the magic is.

We're going to do another import here from our program directory that we're already in.

Alright, we're already in it.

We're going to import the routes file.

Now this has to happen below where we create the app instance because we need that app instance to be created first before we can import any of this.

So, the order of our file is to import flask it's to create our Flask app instance then once it's created, import the routes file from the program directory that we're in.

Alright, that's it.

Lets write that.

Lets cut it.

And there it is.

All right, next video, next file.

|

|

|

show

|

3:10 |

Alright, welcome back.

In our last video we looked at the __init__.py file and we now move on to the routes.py file.

You can see the relationship here.

At the end of __init__.py where we're setting up our Flask app instance, the last thing we do is we then say, from our program package, import routes which is going to be our routes.py file.

Let's edit that file now so we actually have something to import.

Something to work with.

Now, traditionally, if this was a single file Flask app we would import Flask here but we've already done that in the __init__.py file so we can pretty much get straight to the code.

The only thing that we need to import here is the Flask app.

Okay, that app object that we created in our __init__.py file.

To do that, we just simply go, from program from program import app.

And the whole __init__.py thing handles that, alright?

That's all we need for the absolute basic Flask app here using our packaged app scenario.

Alright, so pretty much we can get straight into the Flask code.

What we're going to do here is we're going to create an app route.

Now, this is going to be our URL.

So the first URL we want to create is the root or the index path.

In this instance, we can actually specify both 'cause they are practically the same thing, okay?

So, we have this decorator here and it is creating our URL.

What is it creating that against?

What is the code that is going to run against this URL?

That's what the decorator works against.

In this case, we're going to run it against this function and we're going to call the function index().

General rule of thumb, I wouldn't say all the time but one of the good practices with Flask is to try and name your functions similar to your actual URL.

So, this is the index() function for the index or root path to our website.

Alright.

For the simplest of simple all we're going to do is return something which is just plain text.

We're just going to return plain text.

We go "Hello Pythonistas" and that's it.

That's all we're returning.

And would you believe it, that is our app.

That's all we need to do, to make this very simple initial Flash app work.

So we can save that and we can pretty much run our app now.

We just have to do one last thing so move on to the next video we're going to configure that demo.py file and you'll see why I've left that 'till the end in the next video.

|

|

|

show

|

3:41 |

Okay, time to work on that demo.py file so just head up one directory back to our one Python Flask directory and there's your demo.py file that you created.

Let's just Vim into that and all we really have to do in this file, is we're telling Flask what to do so when you run the Flask command to launch your app which I'll show you straight after editing this this is the file you point at because this is what kicks it all off.

This is what tells it what package to use and so on.

So, all we have to do is go, whoops from program, import app.

Now the reason this sounds confusing is because we're importing app and we've referenced that before in other places so let me just walk you through it really quickly.

Save that.

Let's just verify it.

Okay, so from program import app.

Now, this is talking to the __init__.py file.

So we have app in here.

What we're saying is, from our program package import the app instance, or the app object of Flask and then, from there, it's going to do this one from program import routes and reference the routes.py file.

Alright?

It just sort of goes through that tiered approach.

Now, we head back up.

One last thing we need to do before we actually run this program is tell Flask, the Flask command, to use demo.py and we do that through an environment variable.

Now, this environment variable is set differently in Windows from macOS or Linux.

If you're using any sort of Unix environment you'd be used to using this method.

So, export Flask underscore app and then we want to talk to the demo.py file and that works.

Now, in Windows, we can't do that because you can't set environment variables using export.

We have you use the actual set command.

So, set FLASK_APP=demo.py and then we go.

So, if you're using Mac, use export or if you're using macOS or Unix, whatever and if you're using Windows, use the set command.

Now, all we have to do, is flask run.

Okay, and you can see we are running our website on 127.0.0.1.

That's localhost, port 5000.

So, let's bring up a web browser and check that out.

So, I've just opened up a web browser here.

This is Google Chrome in incognito mode and I've just punched in the URL or the IP address 127.0.0.1:5000, and that's it.

We see the returned string that we had in our routes.py file, okay?

In the background, we see the actual timestamp.

We see the address that we're talking to.

We see the URLs that were requested.

Just ignore this one.

This is a remnant from a bookmark I had in there and that's it.

There's our very first Flask app.

What I'd like you to do now is just have a play around with the routes.py file.

Add in some other return values.

Do whatever you want to play with but this should be long enough to get you through today and possibly a second day depending on how much time this took you.

Just have a play, have some fun and let's move on to the next video.

|

|

|

show

|

3:03 |

So there's one little gutcher that I wanted to discuss here.

And that was that environment variable that we created, that flask_app.

To view it, we can just quickly some up windows we'll do it this way.

We can see it their, flask app equals our demo.pie file.

Now unfortunately, what happens is when I close this terminal window when I can cancel this session or exit out we lose that data.

So let me show you that, one second.

Let's deactivate and let's exit out.

And now with the new terminal launched, let's just get into our folder 1-flask.

All right so there we're back in here.

Now, let's activate our virtual environment.

Right, and now if we run that same command...

Flask app its gone.

Okay, so if we were to run flask run, we're going to have issues.

It doesn't know what it's doing.

It doesn't know what file to talk to.

It's just running nothing, all right.

So it's even say's it here "you did not provide the flask app environment variable".

So, the problem there is, every time you close your environment for the day if you happen to shut off your computer and you come back, you're not going to be able to do this.

You're going to have to reset that variable.

And it's really frustrating.

So, one work around for this, is to install a new package into our virtual environment here.

Called Python-dotenv, it's this one here let's pick install it now.

If I can type pip install Python-dotenv Now, what this module allows to do, it will allow us to specify that environment variable in a environment file, in this parent directory here.

And what we do is, we create that file that's actually going to be called .flaskenv So let's go Vim okay because we're in an environment file.

We'll be titled in Linux and everything is classified as a dot file, so Vim .flaskenv and we throw that line in there.

FLASK_APP=demo.py Save that, oops.

All right, and now flask, run, there we have it.

So, what this does is it now refers to that .flaskenv file.

So if we close out terminal session and we cancel it out and we come back into another week, we don't have to remember signing that environment variable again.

It's just taken care of by the .flaskenv file and the dotenv package.

So, install that, get that cracking and save yourself a bit of heartache.

Just remember to put it in all of your projects from here on in.

|

|

|

show

|

4:52 |

You're probably wondering when we're getting to the more interesting stuff because at the moment, your webpage looks like it was made by a 15 year old in 1996, right.

It's nothing flash.

To get that, let's create what's called a template.

A template in Flask is pretty much the file, the HTML file that you will write, that links to each one of those URLs.

So at the moment in your code, your URL for index simply returns plain text, whereas the HTML.

Well, rather than code HTML into that routes.py file we're actually going to create an index.html file as you would with a traditional website.

And then you can throw all the flashy HTML and CSS you want in there but for now, let's just go ahead and set everything up.

Within our program directory, we're going to create a directly called templates, pretty obvious.

And in our templates folder we have nothing in there obviously let's create an index.html file.

And, in this file let's just give it something basic let's say.

So we have our head and then we'll have our body, okay.

Right, and in that body this would be where we usually punch in all of our information all of our HTML code, all of the text that goes on your page, all of those things right.

Let's actually just put in some test information here.

Alright, one thing at a time, right so let's make this say something like this is text, we've included in our HTML file.

That way we don't want to make it the same as the other one because then we won't know, right.

So, let's go body and let's close this off, come on.

Alright, so there is our file.

It's nothing special.

Let's just write that, okay and we'll head back to our routes.py file to relate the two.

So, right there we have our return value of "Hello Pythonistas." Now, what we want to do is we want to return that HTML file instead.

And we do that using another feature of Flask.

So, that means we're going to have to actually import something here.

So let's, delete that, let's head up to the top.

And, let's throw in, actually we'll put it down here from flask import render_template.

So, what this going to do, this is allowing you to render a template, render an HTML template file.

Now for the traditional term template you would assume it's a template that is carried across all of your other files.

We'll get to that in a minute.

Let's just work through this one at a time, okay.

All we're doing now is we're returning that template so let's go return render template.

And in here, we specify the name of that template file that we're using.

In our case, we called it index.html and that's it.

So, now what we've done is we've replaced that text return of "Hello Pythonistas." We've replaced that with the index.html file.

So, let's run the app and see if that works.

We've got our environment variable set.

We do flask run.

Alright, so there's the actual URL we're going off with the IP.

Let's bring the browser across.

There's our site from before.

We refresh it now and we get the title, this is our site.

And, there is the paragraph tag that we've put in our HTML code that says this is text we've included in our HTML file.

That's how we create our webpages using an actual template and we don't have to include all of that HTML code in that simple routes.py file.

So, now that you know where you can put your HTML in you can imagine, this is where you set up your CSS and your divs and all those sorts of things which we're not going to go to in too much detail in this course but now you know, so that's a template.

That's a very basic template.

Let's move on.

|

|

|

show

|

7:19 |

Our site is pretty plain.

There's nothing special about it.

It's just simple text and there's nothing really much else to it.

What we need to do is create some sort of template around it, some sort of common theme that flows across the entire site no matter what page you go to.

So looking at PyBites here, the common element that flows between every page regardless of which page you're on is this left-hand bar, okay?

It's almost like your nav bar.

It's almost your menu bar along the top of the page.

Whatever you're used to seeing on your favorite websites.

To do that we actually need to create a base template file, something that our webpage can call on.

So as an example here with PyBites this stuff on the left here stays the same.

All that changes is the content on the right.

Imagine if you will two files, a file called base that contains all the code for this and then the unique file to the page in which case this is an about.html page.

That is what we're going to create with Flask and that's what's awesome about Flask is that you can create a template.

You can create that left-hand column.

So let's head over to here.

You've got your templates folder.

Let's create a base.html file.

Now, in this file we're going to keep it really simple.

What on our page stays the same?

We have a title, we have a header we have the HTML code, and we have the body, right?

The only thing that really differs is potentially the title in the title bar along the top but that doesn't have to change and the thing that definitely changes is what's in the body.

It's that unique text to that page.

Now, we're just going to keep it really simple.

Let's put our HTML header, let's throw in the head tag, and let's throw in a title.

Now, this title will stay static across all of our pages so this is our site.

Whoops.

Getting ahead of myself there.

And we close off the head.

And what else did I say?

We said body, so the body tag is now static across every page but nothing within the body tag is static so let's leave that blank just for a second.

And we close off our HTML.

So this is our template.

This template is going to be static across every webpage on our site.

But what differs?

The stuff in between.

So without explanation let's just throw in some Jinja template code, block content.

I'll explain in just a minute.

And we'll close that with end block.

Now, what this means is in this template we have HTML, we have head, we have title, we have body.

That is static across every page.

What differs is everything within the body tag and Jinja calls on that using block content the block content code.

This is something we will refer to we will link to in our other files.

Everything that calls on this base HTML template is going to have this block content tag.

And you end it with endblock.

So you will see in just a minute.

Let's leave this up here.

Let's head on in another window here to our templates.

And we'll open up index.html.

Now I have prepared this already so just roll with it.

In here see the block content?

Block content here will be inserted into here on the template.

This is where it's going to fall.

So anything that we put within the block content and end block Jinja tags is going to appear here.

Now, I know that's a lengthy explanation but I need to drill it in.

This is how it works.

In this block content we now have our H one text saying, "Hello," and we have a paragraph tag that says, "This is text we've included in our HTML file." The one thing I haven't explained here is extends base HTML.

This is pretty much our import.

This is pretty much the Jinja code that says to this when you render this index file it says Hey, go and grab my base.html from here.

Go and grab my core template, my core formatting from this file." We are extending the base HTML file into our index.html file.

Then we're going to put everything that is within the block content Jinja tags into our base HTML file here and this is what we're throwing in there.

We're throwing in a H one header, we're throwing in a paragraph tag, and that's that.

You can see the relationship between the files.

Here's the core file, here is what extends it here is what is unique about every page.

And we're just going to work on an index.html file for just a second.

So let's write that.

Let's write that.

Just go back and look at routes.py.

You can see we don't need to reference base.html.

We just need to try and render index.html.

That's it because by rendering index.html that template we have that Jinja code in the top that will then pull in the data from the base HTML file.

So let's launch that.

Here we have our browser.

Let's launch the file here.

And you can see there's, "Hello" from our actual index.html file.

This is the text we've included in our HTML file.

That came from index.html.

What didn't come from index.html is this is our site.

That title was not in that code.

It was not in that file.

If we open up base.html this is where the title came from.

There's the proof if I may that this base HTML file is being used by this index.html.

Same thing, we call the index route and we get the same thing there.

This is our site.

So on the next video we're going to create another file called 100Days.html and that's also going to pull from the same base HTML file but it's going to change a few things and you can see how the same template is being pulled over every time.

|

|

|

show

|

4:30 |

Let's create another page.

As I said, it's going to be called 100Days.html.

To do that let's create it here, 100Days.html.

We're going to speed this up a little bit.

Now that I've explained it there's no need for us to type it all out one by one.

There is our Jinja code to create this template.

Again, we are extending base.html.

We're going to take that code, that template that format from the base.html file and we're going to import it.

We're going to extend it into this 100Days.html.

Essentially at the moment all we're taking in is that unique title code.

That's the only real valuable stuff that we're taking in.

Let's throw in another H1 tag here.

And let's throw in some unique text here.

This is text from 100Days.html, not base.

Again, a little bit more proof to show that we are taking the code from this file not from the base file.

And the one thing we didn't do previously was edit the routes.py file because we already had an index.html route created.

So let's edit this now.

We need to create a route for our new page.

We need to tell our Flask app where it needs to go if someone says, "I want to load 100Days.html." So let's do that here, app.route.

And the URL end point that we're going to be using is 100Days.html.

That's the file.

I will show you something here.

This won't work.

We can't actually use a number to start our function name.

We have to use a letter.

That's just a rule in Python.

Notice the color change difference.

It knows, it knows too much.

So let's put an arbitrary p there and continue.

And all we need to do here is return, just like the other one, return the render_template that we're going to be using for this.

So in this case it is return render_template 100Days.html.

That's all.

Just to recap, if you browse to 100Days.html that's the route that the app hits.

We then load this HTML file.

That's what this function does.

It returns that.

That's all we need to do.

Let's head over to our app and run it.

Open up the browser again.

Okay, we're in our browser.

Hit enter.

You can see that it ran and we see our same site.

Let's go to index, same thing.

And now let's change that to 100 days.

And that hasn't worked obviously.

Look at that and let's leave this area in because this is a really good point.

What I've done wrong here is in the routes.py I've actually left in the route here .html.

That's not going to work.

Let's get rid of that.

Let's restart the Flask app.

Bring up the browser again.

Okay, we have the browser up.

Let's reload the page and there we go.

So you can see there's just a little bit of an error there.

Congrats on starting your 100 Days of Code challenge.

That is text that came from the actual 100Days.html.

Again, this is text from 100Days.html, not base.

But this is our site.

This came from our base.html template.

Now you can see how you can create as many web pages as you want within your templates folder for whatever your website might have and they can all call on that one base.html file for the overall architecture of your page.

|

|

|

show

|

3:25 |

As a bonus, I thought I'd throw in a bit of CSS some cascading style sheets to make the site look a little bit nicer, specifically with a menu bar.

I'm going to be using something called MUI CSS it's just some pre-established CSS I can link to it saves me writing all the code manually.

Now we are going to head over to our base.html file.

We're going to throw in quite a bit of code here so I'm going to paste it in and I will explain it line by line, just a minute and here we have all of the extra code I've just thrown in these are the meta tags up here that are required to load MUI CSS, you can pretty much just ignore all of those for now, this is all static stuff that they will show on their web page to actually get MUI CSS imported into your script, so all of those need to be thrown between the head tags and the key stuff here I want you to look at is within this body tag we're creating a div all right, in HTML, just a little section there called menu bar, this is using their MUI panel CSS class, just ignore that for now most importantly within our menu bar div that we're creating, we're creating a button with these characteristics, again ignore that this button that links to our index.html file our index route and says home.

So we're creating a home button that takes us back to the home page, the index page, there is a second button here which has the same characteristics but this time it's called a 100 Days button and it links to our 100Days route, all right.

So there's not much to it there, it's pretty simple and that is within our base.html file, so that menu that we are creating is going to be across every page and be extended and all we're going to have unique is our block content, all right, so we'll save that file.

Let's move this to the right, and all we have to do here we don't actually have to do anything else to our routes.py file 'cause that all stays the same we just run the app, let's bring up our browser all right here is our browser, let's get rid of 100 days hit enter, and it again, it's not styled perfectly just roll with it, this is pretty simple stuff but there is our menu bar and we have two buttons, home and 100 days.

If we hit 100 Days that takes us to 100Days page.

Remember the div that we created for their menu bar is static, so that will stay on every page, it's in our base.html file, that will never change, just the content in the body tag will.

Click on home again, this is just that custom content in the body tag that's changing, menu bar staying the same.

Nice and simple, you can see this is the framework of your web page, your web page is starting to come together, so this is really exciting, you've learned quite a few concepts here, move on to the next video for a little bit of a wrap up.

|

|

|

show

|

4:57 |

Congratulations you made it to the end of your first two days of the course.

This was the introduction to the Flask Web Framework.

I hope you enjoyed it, I hope you got a lot out of it.

Let's do a quick recap of everything that we learned.

What we're looking at here is the Flask package hierarchy this is pretty much how our entire application was laid out.

If you remember we started out with our project folder we created the virtual environment, we have our demo.py file which tells the flask app what to run and we also have the flask n file there which was the actual environment variable for the flask app.

We then had the program folder, that's where our actual application lived, it was packaged within that and that's denoted by the __init__.py file that tells the package what to run, what to import and so on and it imports the routes.py file which contains all of the information for routing out applications so all of your routes, the app.route decorator and what each one of those web pages actually does.

We then have the templates folder which contains all of those HTML templates including the base template which was the one that carried across all of our pages and then we have our two unique pages, index.html and 100Days.html.

Just looking at our __init__.py file quickly, we begin by importing flask, that's the very first thing you have to do for this file, you have to tell it to import the flask module from the flask package, and then we create our very own flask app object.

So we get this app object and that's what's used throughout all of our application.

We then from our program folder we import the routes.py file, so thinking back to that hierarchy, from the program folder we're importing routes.py.

Looking into the routes.py file, we import app so again from that program folder we know because of the __init__.py file that we can import our flask object called app, from flask itself we then import render_template and looking down a little bit further, we then have our decorators, these are the decorators that sit on top of our function, and these tell our function what path is going to work with that function and at the end of the function we then return the actual HTML file or the template that is going to be used when that page is called.

Looking to the base.html file now, this one's quite simple nothing much to it, it was just some base HTML code with some Jinja code injected right into the middle of the body tag, this again was the place holder of all of the content that we're going to be injecting into this base HTML file, and that code that we're injecting in is our unique HTML files and looking at those unique HTML files, those other pages we then have the extends Jinja code and that tells us what file we're extending from, in our instance we're extending from base.html, taking that base HTML code that template, and using it for this other page.

The block content as we saw in the base.html file this is the code that's going to be injected, this is the code that fits within that block content on the template and last but not least we then played with a little bit of MUI CSS, this is just a screenshot of the website there's the URL down on the bottom, if you want to have a closer look, have a bit of a play.

So now it is your turn, have a quick look at the video again on the read me, or go and read the read me yourself on the actual GitHub repo but now it's up to you.

In your last two days you are going to make your own web application, the idea is to be completely free form there is no rule around this, all I'd like you to do is take everything you've learned and make your own website, make your own page, it doesn't have to be functional just as long as you are using a base HTML type file to have a template that flows across all of your pages, do whatever you want come up with anything, make it as simple as possible it doesn't matter, just remember it's all about the consistent learning, so day three and day four dedicate at least 30 to 60 minutes to just playing around with your own website and remember keep calm and code in Python.

|

|

|

|

37:49 |

|

|

show

|

1:05 |

It's time to lay the groundwork for so much of this course.

When we talk about Django when we talk about Pyramid when we talk about Flask and all these other frameworks we're going to be using HTML through and through.

Sometimes JSON, but a lot of the time we're going to be using HTML templates and we're going to talk about that foundation right now.

Now, you may be thinking, oh, what is there to HTML?

I know this.

We've got paragraphs, we got BRs for line breaks header, body, done.

Well, even if you know HTML pretty well I encourage you to watch this chapter.

It's going to be pretty short.

We're going to build two interesting applications and we're going to show you a couple of cool editor tricks and other techniques to be really effective with HTML.

We're also going to talk about HTML forums some of the client side validation and good stuff like that.

So if you're really familiar with HTML go ahead and just watch the video and maybe skip the hands-on section but if you're not, if you're a little unsure your HTML skills are not quite up to par well, we're here to strengthen them up and get you ready for the rest of the course.

Now let's get to it.

|

|

|

show

|

1:42 |

You may be thinking, what is there to HTML?

It's pretty easy, right?

I mean, here's Yahoo.

We could write that up in some really simple HTML.

In fact, here it is in 1996.

If you go to the Wayback Machine this is the front page of Yahoo and basically it's like a Yellow Pages of websites.

It wasn't really a search engine.

It was like a bunch of people just going around the internet going "That's interesting.

"We'll add it to Yahoo." Totally crazy.

One of the demos we're going to do during this chapter is we're going to rebuild this site almost exactly like this with no CSS.

Very, very little styles, just pure HTML.

So yeah, pretty simple.

Kind of blows my mind that's a $100 billion company at their peak, but there it is.

That's Yahoo.

So, the web is simple, right?

Well, it was, it was simple.

It's getting a lot more interesting, a lot more complex.

As people go from building documents on the web that are linked to building applications that are on the web that are responsive that are progressive, that do interactive things like this Turo site here.

So, Turo is a place, kind of like Airbnb for cars.

And these are private car listings that you can rent.

Get a Ferrari, a 2007 Ferrari, for just $695 a day.

Pretty awesome.

So this is a really cool site, very nicely done.

It's beautiful, but it's also highly interactive with the map, with the progressive infinite scroll stuff going on and whatnot.

So I would bet that this HTML is not quite that simple.

We're going to focus on building a good foundation talking about some of the modern features like HTML5 and so on.

So that you can build apps more like this, less like that.

|

|

|

show

|

3:41 |

Before we start writing some HTML let's just talk briefly about its timeline when it was introduced, and what features were added when.

HTML starts way back in 1990, 91, with Tim Berners-Lee where he invented HTML back at CERN.

And it was really meant to just be linking documents.

All right, so that's kind of how it started.

Hyperlinks, basic non interactive documents.

In 1995 to five years later HTML 2 was released and that added form elements such as text boxes and option buttons.

Ability to change the background and better tables and things like that.

We quickly get to HTML 3.2.

There was, you know HTML is very standards driven and they tried to do some stuff for HTML 3 and apparently that failed or didn't go anywhere.

So they rebooted that effort as HTML 3.2.

And here we have better supports for tables still.

Applets, text floating around images, things like that.

Superscripts, subscripts.

So still working on this document in this non interactive like document world.

Moving ahead quickly now.

In 1999, remember this is the Dot Com boom.

Pets.com and all that craziness.

We get HTML4 and finally we get style sheets.

So until then, you wanted to style something you would have to put the styles on the elements.

Think how tedious that must have been, right?

That was horrible if you wanted to have a common design across your whole site.

So here we have style sheets that allows us to style all of our site not just embedded in each individual page and scripting ability for multimedia elements.

Sort of a competitor to HTML4 is XHTML.

So HTML4 is a little bit more loose.

It doesn't have to be an exact super set of XML.

And remember around the 2000's this is like the height of XML on a text base.

We have XML SOAP web services, all sorts of stuff like that.

We have style sheets, XSSLT, transforms all those kinds of things for XML.

So there was a push to take HTML and make it proper parsable XML, all right?

Everything is properly closed.

You know that got some traction but really modern day HTML is not nearly that strict and it's probably a good thing.

However, we've been moving pretty quick up til here.

In 1999, 2000, we get these two competing standards and then there's a long waiting period.

As time goes on and on all the way out to 2014.

In 2014, HTML5 comes along.

That was a long period there of not too many things being released.

This release of HTML is meant to replace things like Flash.

Remember Flash, you had to have Flash to watch this video.

Well replace those types of things with built-in video elements and audio elements and so on.

Also allowed for web applications to be much more like well, applications and not documents, right?

They can access local storage, the little databases they can run in offline mode, all those kinds of things.

They have location based services, mapping and whatnot.

And also changing the syntax a little bit to separate content from presentation.

When you look at some of the features especially around validation and stuff of HTML5 you will see it's decidedly not an XML document, all right.

You might have attributes that don't have values and things like that.

So this is more of a carrying on of HTML4 heritage than the XHTML.

So basically 15 years between major new versions here.

But now we have HTML5 we're kind of moving along to building real applications on the web properly.

|

|

|

show

|

0:50 |

A quick comment about editors before we start writing some code.

In this chapter, you're going to see me using an editor WebStorm.

This is from JetBrains.

Now, I really like the JetBrains set of IDEs.

You'll see they do great work around working on HTML and CSS and JavaScript and all the stuff have to do with the web.

Noe, if you don't want to use WebStorm, that's totally fine.

Here I'm going to use WebStorm.

I'm also later when we get to the Python framework use PyCharm, which basically has most of WebStorm in it plus also, it has Python capabilities.

If you don't want to use WebStorm but you want to have some of the cool editor tricks that we're going to do, probably your best bet is Visual Studio Code.

So you can try that, as well but I'm a big fan of Web Storm and PyCharm.

So I'm going to be using those.

And just want to give you a heads up of what's sort of compatible out there that's also free.

|

|

|

show

|

1:15 |

You want to see what Yahoo was like back in 1996?

Here we are in the Wayback Machine.

You can see all the different versions of Yahoo that have been saved and we come down here about a week after the launch and let's just click on that and see what we get.

Well, there we have it.

The little image at the top didn't come through.

That's okay, we're not going to model that.

Anyway, so here's our Yahoo we have a new, and a cool, and the brand at the top and the news, and more Yahoo and then we just have a listing like, here's all the arts and humanities and here's education, or if you want K12, you go in here.