|

|

|

6:52 |

|

|

transcript

|

1:04 |

Welcome, welcome, to Python Memory Management and Tips.

This is a pretty unique course.

We're going to dive deep into the internals of Python to understand how it works to make your code more efficient and make it faster.

I feel like Python memory management is a bit of a black art.

We use Python all the time, but most people don't really understand how Python memory works.

How are Python objects actually structured in memory at runtime?

What does the reference counting part do?

What does the garbage collection part do?

We've heard that maybe you can even avoid using the garbage collector altogether.

It's a bit of a black art.

Well, maybe not this kind of black art.

Maybe this kind of wizard.

Maybe this is the kind of black art, the programmer kind.

But if you really want to know how Python memory works, this is the course for you.

We're gonna have a balance of high level conversations about algorithms, we're gonna dig into the CPython source code and we're going to write a ton of code to explore these ideas and see how they work in practice.

|

|

|

transcript

|

0:57 |

So why should you care about memory management in Python, anyway?

Isn't it just gonna work the way it's gonna work, and we just have to live with it?

Well, you'll see there's actually a ton that we can do to work with it or even change how it works.

Here's a simple little program.

It's Python running one of our applications, and it was fine, but then a little bit later, it wasn't fine.

Now it's using 719 megabytes.

That seems bad.

Maybe we should do something different.

We're gonna learn a whole bunch of amazing techniques that will allow us to keep this memory usage much lower, and we're going to dig in so you understand exactly the trade-offs you might be making and where these costs come from, how Python is doing its best to get the most out of the memory that we're using and so on.

Not only will you come away with an understanding, but we're going to dig in and get some design patterns and some tools and techniques that you can proactively use to make your program look more like the one on the left, and less like the one on the right.

|

|

|

transcript

|

0:46 |

Now, when you think about memory management and making our code more memory efficient or understanding how the garbage collector and reference counting works, you might think this is to make our code use less memory.

To make the memory required smaller.

But it also will have a side effect.

It will make our code faster.

Python will have to run fewer garbage collection events, our code is potentially using less memory and so less swap space, maybe with better cache behavior. But also, some of the design patterns and some of the less frequently used aspects of Python that we're gonna bring in will actually make our code quite a bit faster for some really interesting use cases.

So this is sort of a general performance thing with a concentrated focus on memory.

|

|

|

transcript

|

2:57 |

Let's talk about what we're gonna cover in this course.

We're certainly going to talk about Python memory management.

But there's actually three distinct and useful things we're gonna cover here.

So, three separate chapters.

We're going to talk about how Python works with variables and look behind the scenes in the CPython source code to see what's really going on when we work with things like strings or dictionaries or whatever.

We'll get a much better understanding of the structures that Python itself has to work with which will be important for the rest of the discussion around memory management.

We're also going to talk about allocation.

People often think memory management means clean up.

You know, garbage collection, reference counting and so on.

And yes, we'll talk about those things, but Python actually does really interesting things around allocation and a bunch of cool design patterns and data structures and techniques to make allocating memory much more efficient, which then lead into making reference counting more efficient as well as garbage collection.

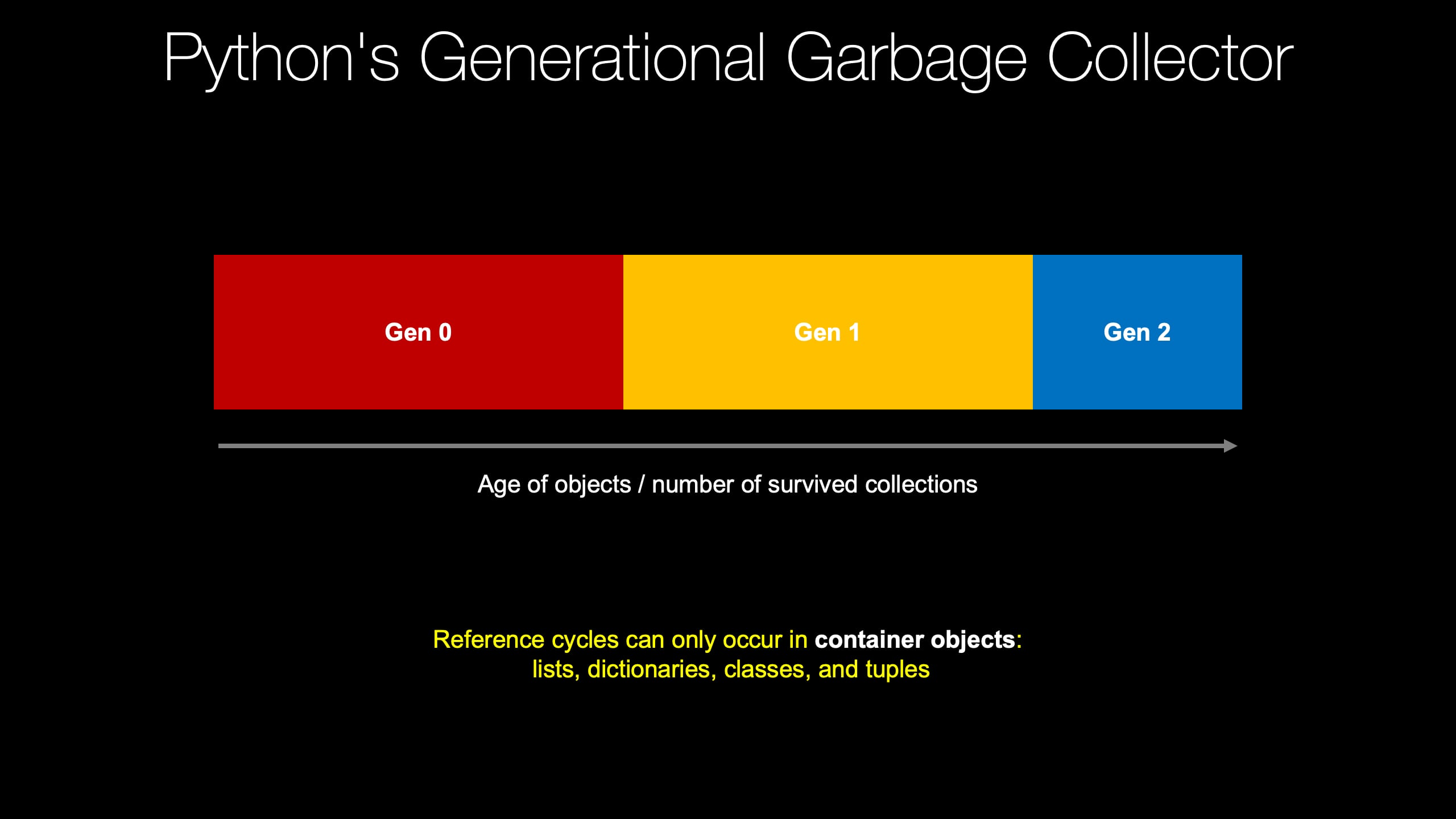

So Python has two ways to clean up memory.

We're gonna talk about them both, and when each of them comes into action.

We'll also write a lot of code to explore that.

The data structures that you choose to represent the data in your application can vary dramatically in how much memory they use.

We're gonna take the same data and look at it through the lens of storing it in a bunch of different types of data structures: arrays, lists, dictionaries, classes, even pandas data frames and NumPy arrays and see what the various trade-offs are around these different data structures.

Once we get to talking about functions, you'll see that there are some really powerful and simple design patterns that can dramatically make our code faster and more memory efficient.

So we're gonna look at some really cool ways to make our functions use a little less or a lot less of the memory that we're using.

We're also gonna look at classes because storing data in classes is super important in Python, and you're maybe gonna create a list of objects, a whole bunch of them and so on, so you can have a lot of data there.

So we're going to talk about different techniques we can use when we're designing classes in Python to make them much more efficient in memory and, as a nice little consequence, in speed as well.

Finally, we're going to do some detective work.

Once we understand all of these things we're going to take a script and we're just going to run it through some cool tools.

Try to understand from the outside what exactly is happening in our application.

How Python is using memory.

We're gonna create some interactive web views of this data.

We're actually gonna create some graphs, all kinds of stuff.

So we're gonna use some really neat tools to do the detective work, to understand how our program is working and where we should apply some of these techniques that we've learned previously to make it even better and faster.

That's what we're gonna cover.

It's gonna be a lot of fun.

It's gonna be hands on, but also some high level conversations.

I think you'll get a lot out of it, and I'm looking forward to sharing it with you.

|

|

|

transcript

|

0:42 |

What are the prerequisites to take this course? As a student, what do you need to know?

Honestly, the requirements are really, really simple.

You just need to be familiar with the Python programming language.

We obviously don't start from scratch.

We're digging into the internals of CPython.

We're talking about the trade-offs of different data structures that you should already know from Python.

So, you should be familiar with dictionaries, lists, the ability to create classes, functions, and then the core stuff like list comprehension and loops and whatnot.

If those things are all in place for you, then you're absolutely ready to take this course.

If not, check out our Python for the absolute beginner course as a great foundation, and then you'll be ready to jump in here.

|

|

|

transcript

|

0:26 |

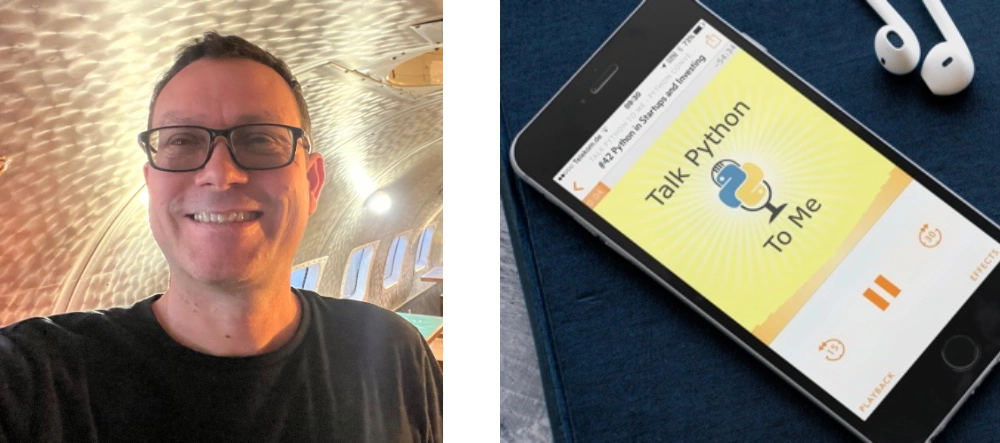

Finally, let me introduce myself.

Hey there, I'm Michael.

You can find me over on Twitter, where I'm @mkennedy.

You may know me from the podcasts "Talk Python To Me" or "Python Bytes".

I have done both of those.

And I'm also the founder and one of the principal authors here at Talk Python Training, and I'm super excited that you're in my class.

Thank you so much for taking it and I hope you really get a lot out of it, so, let's get started right now.

|

|

|

|

5:55 |

|

|

transcript

|

2:51 |

In this short chapter, we're going to talk about what you need to follow along with the course and to write code and play with the same tools that we're going to use.

Now, right off the bat, would it surprise you to know that you're gonna need Python for a Python memory management course?

Of course you will.

But I do want to point out that you're gonna need at least Python 3.6 if you want to run the code that we're using and if you want exactly what we're using in this course, we're using 3.8, specifically, 3.8.5.

These memory behaviors can change slightly from version to version, but they should be pretty stable in Python 3 at this point.

But the syntax, things like f-strings and whatnot, we are using those, and those require Python 3.6 and beyond.

So the code will not run without 3.6, so make sure you have the right version.

Now, how do you know if you have the right version?

Well, you go to the command prompt or your terminal and type python3 -V on macOS and on Linux, and hopefully you get something like 3.8.5 or higher.

The screenshot when I took it was a little bit older, but you know, you get the idea.

On Windows, you probably should type python -V, that's python, space, dash, capital V.

Now, one of various things can happen here, especially on Windows.

On Windows, it might say Python 3, or it might say Python 2.

You might also have Python 3 but your path might not have it first.

If nothing at all comes out or the Windows Store opens, the newer versions of Windows 10 will try to go to the Windows Store and install Python for you if you type it.

And that will happen usually if you just type Python or Python 3 by itself.

But with this -V, if it says nothing, that means you don't really have Python installed.

It's just this store shim that really should say "you don't have Python installed", even with an argument.

Nonetheless, that's what happens.

So make sure that you get Python 3.6 or above, ideally 3.8 or above, on your Windows machine or on Linux or macOS.
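If you'd rather ask Python itself than read the terminal output, here is a minimal sketch of the same check. The function name describe_python and the REQUIRED constant are made up for this illustration; it only relies on the standard sys.version_info tuple, and it deliberately avoids f-strings so it could still run on an older interpreter.

```python
import sys

# Hypothetical constant for this sketch: the minimum version the course code
# needs, since f-strings arrived in Python 3.6.
REQUIRED = (3, 6)


def describe_python(required=REQUIRED):
    """Return a short message saying whether this Python is new enough."""
    current = sys.version_info[:3]
    version_text = ".".join(str(part) for part in current)
    # Tuples compare element by element, so (3, 8, 5) >= (3, 6) is True.
    if current >= required:
        return "Python {} is new enough for the course code.".format(version_text)
    return "Python {} is too old; install {}.{} or later.".format(
        version_text, required[0], required[1]
    )


print(describe_python())
```

Running it with the interpreter you plan to use for the course tells you right away whether that interpreter meets the 3.6 requirement.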

Finally, if you don't have Python installed, there are all these varying recommendations for how you install it, how that changes over time, and what the trade-offs are.

Personally, I installed Python 3 on my Mac using Homebrew and I installed Python 3 on Windows using Chocolatey.

So, those are both package management systems for the operating system that let you automatically upgrade to the next version just by saying brew upgrade or choco upgrade python, things like that, which is really, really cool.

But there's a bunch of different ways and trade-offs, so I recommend you check out this Real Python article: realpython.com/installing-python/ and see what they say now. They say they're going to keep this up to date to keep up with the times and with what the options are for installing Python.

So if you need to, have a look here.

|

|

|

transcript

|

2:05 |

If you want to follow along exactly with what I'm doing, I'm going to be using PyCharm, so you should use PyCharm too.

It's a fantastic tool for writing Python code and exploring it.

However, if you don't want to, you can use other editors as well.

That's fine.

So, if you're going to use PyCharm, I recommend you just get the latest version and use that.

They offer two versions.

They offer the PyCharm Community Edition and The PyCharm Pro Edition.

For what we're doing in this course, the Community Edition, the free open source edition, is totally fine.

There's one step where I say "let's see the CPU profile graph in PyCharm" and we spend about one minute on that.

That requires the Pro Edition, but you can just look at the picture, you don't have to be able to run it yourself.

Other than that, you're gonna be able to do everything with the free, open source PyCharm Community Edition.

So, there's no real reason to not use it other than you just want to use another editor.

I think this is a great one and you'll see me using it throughout the course.

The best way to get PyCharm is to go to the JetBrains toolbox and get that installed and then use that to install either PyCharm Pro or PyCharm Community or both, and this will keep it up to date and let you know when there's new versions and let you roll back to different versions.

It's a much nicer way to work with PyCharm and the JetBrains applications rather than just installing it directly.

If you don't want to use PyCharm, which is fine, I recommend that you use Visual Studio Code.

That's the other really good editor these days.

Those two have, like, 85% or 90% of the market share at this point, from what I can tell from the recent surveys and so on.

This is a great one.

Just be sure that you install the Python extension or it's not going to do much for you.

And you get that by clicking on this little button here and then the Python extension is the most popular one.

Looks like it's been downloaded 55 million times when I took this screenshot, and I know it's much higher than that right now.

So, two editor options, PyCharm, Visual Studio Code, PyCharm community free version will totally work for this course.

|

|

|

transcript

|

0:59 |

You'll want to get the materials, the source code, data files and whatnot, for this course.

You could pretty much type them in as you go, but if you want to play with it and tweak it, there's a lot of little ideas where you just make a small change and you see what happens in terms of the memory usage or the performance or whatever, so I definitely recommend that you get the source material, so go over here to github.com/talkPython/Python-memory-management-course.

If you're a friend of Git and you like to work with it, just go clone this repo.

If you don't really know about Git and you're uncomfortable doing it, you don't have to clone it officially.

You can just click the green button where it says "Code" and download it as a zip file and roll with that, right?

There's no major changes or whatever we expect other than bug fixes along the way.

Make sure you get the source code and take it with you.

In fact, I recommend you fork and star it, just so you have it for sure.

Alright, that's it.

Go through these steps.

You should be all set up and ready to take this course.

I'm excited to dive into Python memory management with you.

|

|

|

|

40:00 |

|

|

transcript

|

1:40 |

It's time to get into Python memory management properly and start writing some code and looking at how things work behind the scenes.

We're going to start by focusing on variables.

Now, you might think the place to start would be garbage collection or creating new memory or whatever, but to understand how all those things work, we're gonna start by talking about variables and how Python manages them, passes them around and so on.

We're gonna start in a place that might surprise you for a Python course.

We're gonna talk about C.

Now, just to be clear, it's only a little bit of C.

If you don't know the C programming language, don't worry, it's a few lines of code, I'll talk you through it.

The reason we're gonna talk about C is when you talk about Python, people say "Oh, you just type Python and you run that".

What they almost always mean is CPython, right?

CPython is actually the Python programming language implemented in C.

And we'll talk about some places where you can go and see the source code, how things work in the actual implementation of the Python runtime.

Some people call the thing that executes Python, a "Python interpreter".

That's true for CPython.

There are many other things that run Python and do so in a way that is not interpreted.

We've got PyPy, which is a JIT compiler, not an interpreter, you've got Cython and so on, so I prefer runtime.

But if you want to say interpreter, same basic thing here.

So what we're gonna do is we're going to talk a little bit about C and how specifically pointers and things like that in C surface when we actually get to the Python language, because even though there's other ways to run Python, the vast majority of people do so on CPython.

|

|

|

transcript

|

2:57 |

Now let's look at an example of some very, very simple code in C or C++. With C++ it's a little bit complicated, because even simple code can get pretty complicated.

But here it is.

We're gonna look at this function. So this is not object-oriented programming like C++, it's more on the C side, and what we're gonna do is have two person structures, or they could be classes, whatever.

But we're gonna have two pointers. Notice the person star: not person, but person star.

That means we're passing the address of where that thing is in memory rather than making a copy of its data and passing it along.

We're gonna take these two person objects that we're referring to by address and then we're gonna ask the question "are they the same?" The way we do this in C is you go to the pointer, which is some place in memory.

In this particular example, it was something like this, like 0x7ff whatever it doesn't matter.

The value of this thing is just a place out in memory.

And here in that memory location on what's called the heap, we've got two pieces of information, right?

Two of these pointers point to those places out in memory.

That's why we're gonna use this arrow operator: p1, then the dash-greater-than arrow, then id.

So follow the pointer out, then find at that memory location what the id is, and see if they're the same.

So this is how it works in C, and it raises a lot of questions that are maybe not even knowable here.

For example, who owns these objects?

Who is responsible for creating them and ensuring that they get cleaned up correctly?

That answer could vary.

They could be allocated on the stack of the thing that called this function.

It could be that some other part of the program created them, and when it shuts down or that part cleans itself up, it's supposed to delete them from memory.

How long will they stick around?

I don't know.

Until the other part, whoever owns it, decides that they're going to go away.

What happens if we forget?

What happens if somehow the programmer lost track of that piece of memory?

Well, that's a memory leak, and it's just going to stay out there forever.

There's nothing that's gonna fix it.

That's bad.

But what's usually worse is if it actually gets cleaned up too soon.

And the reason is you'll still have that p1 pointing to that memory address, but what is living out there is either gone or it's some other piece, or some partial piece, of some other data, and that could cause all sorts of problems.

So what I want you to take away from this is just there's a lot of actual bookkeeping or accounting around things that were created, how long they live, whose job it is to clean them up, when they can do it, and they've got to make sure that they don't forget to do it, but not too soon.

Okay?

So all of these things are really tricky around C and pointers, and we'll see in Python that generally that's not what we have to do.

A lot of things are done for us, but we want to understand what is done for us.

How is it done for us, and so on.

|

|

|

transcript

|

1:33 |

Let's look at one more example before we move on to talk about how Python does the equivalent thing.

So let's look at a slight variation.

We've got the same SamePerson function.

It's going to take two people and determine if they're the same.

However, this time, instead of passing pointers, which are basically numbers holding the memory addresses where the real data lives, we're just gonna pass the ids.

Remember, before we were saying p1 arrow id.

Might as well just pass the id; that makes it a little easier to do.

So we have our two pieces of data which are integers p1_id and p2_id.

They don't point anywhere.

They literally just have the value, right?

This is the same thing that was the id before.

So in C, we can pass the value of a thing or we can pass a pointer like we saw before and there's good reason for both.

If you have a large data structure and you want to move it around without making copies because that would be slow, you would pass by reference or pass a pointer.

Even more importantly, if you want to make changes to it and have those changes reflected in other parts of the program, you need to pass the pointer, change the shared location, and then everyone will see those changes.

As opposed to here: if we change the id, only this function would see it.

But C and many other languages, C# for example, have this distinction between sometimes passing just the value, like the integers, and sometimes, like previously, passing the address of the thing, which you've gotta follow as a pointer out there.

So, I want to put these two up and contrast them for you, and then we're gonna dive into Python.

|

|

|

transcript

|

3:33 |

Alright.

Time for some Python code.

And a big question: does Python have pointers?

Well, let's look at a function.

The same SamePerson function, but written in Python.

So here we have a function: def same_person, and we're passing a p1 and a p2, and we're using type annotations to indicate this is a person class.

So, p1 is a person, p2 is a person indicating also the function returns a bool, true or false, on whether they're actually the same person.

But notice p1.id == p2.id. That's not that arrow thing, right?

We don't have to treat it differently.

And if you think about Python, you've probably never seen the star in the context of meaning this is a reference or a pointer to a thing. You've never allocated memory, and you've probably never cleaned it up.

There's a del keyword, but that means something totally different and doesn't really directly affect memory.

Are there pointers here?

I don't see any pointers.

Here's the thing.

Let's try to print out p1 and p2 and see what we get.

Well, the interpreter would just say that in "__main__" we have a Person class object.

So Person is the class and we've created one of them.

So, object at this memory location.

Hmm, "at this memory location" sounds a little bit like what we had with the pointers, doesn't it?

You could also use this cool built-in function in Python called "id" and ask, basically, where does this thing live?

And if those numbers are the same, they're sharing the location.

If they're not, it's not a shared thing.

And we can talk about that.

We will talk about that as we go.

But if you go out here and actually look in memory, we're gonna have two things out on the heap, dynamically allocated, and these are going to be pointing to them.

Well, p1 and p2 are pointing to them.

Their ids actually correspond to the addresses.

This is the same situation as we had in C++ pointers.
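To make that concrete, here is a minimal sketch of the Python version. The Person class, its fields, and the name "Sarah" are assumptions made up for this illustration; only same_person, p1, p2, and the id built-in come from the discussion above.

```python
class Person:
    """Hypothetical stand-in for the Person class on the slide."""

    def __init__(self, id: int, name: str):
        self.id = id
        self.name = name


def same_person(p1: Person, p2: Person) -> bool:
    # No arrow operator: attribute access looks the same for every object.
    return p1.id == p2.id


p1 = Person(1, "Sarah")
p2 = Person(1, "Sarah")
alias = p1  # a second name for the object p1 refers to, not a copy

print(p1)                   # default repr mentions "object at" plus an address
print(id(p1), id(p2))       # two separate objects, so two different numbers
print(id(alias) == id(p1))  # same object, same id
print(same_person(p1, p2))  # equal ids stored inside, even though the objects differ
```

The two Person objects carry the same id value inside them, so same_person says yes, while the built-in id shows they live in different places.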

The language is hiding it from us.

We don't have to worry about it, right?

That's cool.

But as you think about, you know, what is the lifetime of p1 and p2?

Who was in charge of it?

All those same questions I asked come up.

Who owns these objects?

Well, in Python, the answer is better because you don't have to worry about it.

Like I said, you probably have never really thought about cleaning up memory by, like, going free or delete or whatever on some thing you've created.

Because you can't really do that.

But somebody has to, right?

If these get created.

So the question of who owns it is kind of interesting. It's kind of the community of all the things in the program, all the things that share that piece of data.

Once they all stop paying attention to it, it goes away.

It goes away for one reason or another.

There's a couple ways in which it could go away and we'll talk about it.

But the runtime itself kind of owns these objects.

You don't have to worry about that.

How long will they stick around?

Until everyone is done with them, maybe a little bit longer, depending on how they're linked together.

But generally speaking, just until everyone is done with it and the runtime also knows who's paying attention to it, so you don't have to worry about the time.

It is really nice.

So in a sense we have pointers in Python, yes, but we don't have the syntax of pointers, lovely.

Nor do we have all the stuff around memory management and the accounting of who owns what, and when it should be cleaned up.

All those things are gone, which is beautiful, but we also need to understand how and when Python does those things on our behalf, right?

Does Python have pointers?

I'm going to say yes, Python has pointers, but you don't syntactically have to worry about it.
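Here is a small sketch of the "until everyone is done with it" idea. It uses the standard weakref module to watch an object without keeping it alive; the Person class and variable names are made up for this illustration, and the immediate cleanup shown is CPython behavior, not something every Python runtime promises.

```python
import weakref


class Person:
    """Hypothetical class, just something we can create and watch."""

    def __init__(self, id):
        self.id = id


p1 = Person(1)
p2 = p1                     # two names now share one object
watcher = weakref.ref(p1)   # observes the object without owning it

del p1                      # removes one name; it does not free the object
still_alive = watcher() is not None
print(still_alive)          # p2 is still paying attention to it

del p2                      # the last reference is gone
gone = watcher() is None
print(gone)                 # on CPython the object is reclaimed right away
```

Notice that del only removes a name. The object itself goes away when nobody refers to it anymore, which is the runtime doing the bookkeeping for us.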

|

|

|

transcript

|

1:19 |

Let's look at that alternative example that we saw in C++, but now in Python.

Remember, what we did is we passed over the id. We said these are integers and we're just gonna compare them directly.

And here's the same code in Python. Really, the only difference is where the return type goes and whether it's int p1_id or p1_id: int.

Right, this is the same basic code.

And if you print out p1_id or p2_id, it's gonna look just like the same thing that we had in C++, but it's not the same thing.

In Python, these are different than what we had in C++.

In C++ they're just allocated on the stack as the program runs, technically.

They're passed by value, all sorts of stuff like that.

So we're going to see that, actually, these also live out in memory, they're dynamically allocated, they have to be cleaned up just like our person object was.

So these are pointing out here in just the same way.

In some sense, does Python have pointers?

Python has more pointers than almost every other language, right?

Even things like numbers and integers or functions themselves or modules and source code all of those things are objects and pointers.

Even when they operate as basic numbers, like we have here these are basic numbers that are allocated dynamically out in memory.
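A quick sketch to poke at that claim: numbers, functions, modules, and classes all answer to the same built-ins. The value 1042 and the list named things are arbitrary choices for this illustration; sys.getsizeof reports the size of the object in bytes, and the exact number depends on the runtime and platform.

```python
import math
import sys


def same_person(p1_id: int, p2_id: int) -> bool:
    # Plain integer comparison, same shape as the version on the slide.
    return p1_id == p2_id


p1_id = 1042

# An int, a function, a module, and a class: every one is an object with an id.
things = [p1_id, same_person, math, int]
for thing in things:
    print(type(thing).__name__, isinstance(thing, object), id(thing))

# The int is a full object out in memory, so it has an object size.
print(sys.getsizeof(p1_id))
```

Every row prints True for the isinstance check, which is the point: even the plain number is an object that lives out in memory and has an identity.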

|

|

|

transcript

|

4:05 |

I'm going to reveal the hidden truth about Python variables to you now.

So, if you've seen the movie The Matrix, one of the best science fiction movies of all time, Morpheus spoke to Neo when he was just first realizing, or was being told, that he was living in a simulation and Morpheus told him a little bit about that and said, "look, you have two choices here, a choice between two things.

One.

You can take the blue pill.

Forget this happened and just go back to being blissfully unaware that you're actually in this weird, dystopian world.

Or you can take the red pill and see the truth.

You can see inside the simulation what's actually happening".

And I feel a lot of this course is a little bit like that.

When we work with Python, it generally just works, everything's smooth.

Sometimes it uses more memory than we want.

Well, that's the way it is.

There's not a whole lot we can do about it, right?

What I'm gonna show you in this course, is there are a lot of little techniques that combine together that will either help you build better data structures, better algorithms and how you use those data structures, or at least just understand why your program is using a lot of memory or it's a little bit slow or whatever.

And in order to do that, we have to look beyond the Python syntax down into the CPython runtime.

So in that regard, this course is much like taking the red pill.

By the way, if you wanna watch this little segment, the link at the bottom is like a five minute video.

It's great.

So let's take the red pill.

Here's some Python code.

Age equals 42, so age is an integer.

Yes, its value is 42.

You can add it.

You can divide it.

You can treat it like, you know, numbers in a programming language.

It couldn't be simpler, right?

Well, not exactly.

So if we look at what is actually happening inside of the CPython runtime when we work with numbers like these, they're called integer types or int in Python, but they're actually PyLong objects at the C level.

You'll see there's a whole lot of stuff going on here.

Now, this is just a very small part of a single function in a very large piece of code that you can actually click "Go here" and go to bit.ly/cPythonlong.

This will take you to this line in the CPython source code, which I don't remember exactly how long it is, but it's hundreds of lines long.

So the ability to create one of these things is not super simple.

Now, the number 42 gets treated specially.

Small numbers get treated specially, as we'll see later.

If this was 1042 it would be closer to what's actually happening.
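As a small preview of that special treatment, here is a sketch that compares identities. It assumes CPython, which keeps one shared object for each small integer (the range -5 through 256 in current releases); the helper make_int is made up for this illustration and builds the numbers from strings at run time so the compiler cannot quietly share a literal.

```python
def make_int(text: str) -> int:
    """Hypothetical helper: build an int at run time from its text form."""
    return int(text)


small_a = make_int("42")
small_b = make_int("42")
big_a = make_int("1042")
big_b = make_int("1042")

print(small_a is small_b)  # True on CPython: both names share the cached 42
print(big_a is big_b)      # False on CPython: two separately allocated objects
print(big_a == big_b)      # True: the values are equal either way
```

So 42 skips the fresh allocation, while 1042 goes through the full create-a-new-object path each time, which is why 1042 is the better mental model for what the C code is doing.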

The important thing is not which one of these functions around Python integers runs; just take this one as an example.

So, PyLong_FromLong, which takes a C or C++ long and converts it to this PyLong, what does it return?

It returns a PyObject pointer.

Everything in Python is a PyObject pointer.

Strings, numbers, functions, source code, classes you create, everything.

This is a common base class for everything in Python, okay?

But specifically what it's creating is a PyLong object that's then sort of cast down to this more basic type.

So you can look through.

There's a bunch of stuff even within this function I removed just so it would fit on the screen.

But it's allocating here.

See this line that says _PyLong_New(1).

And then it does a bunch of work on this pointer that's allocated.

That's the dynamic memory allocation out on the heap.

It does a bunch of stuff to set its value, and then it returns it as a pointer, which the Python runtime just converts that to something that feels like a nice little clean integer like that one line we have above.

But there's actually a ton going on.

So this is the red pill world that we're going to explore throughout this course: what's happening behind the scenes, the algorithms that are running, and the reasons they're happening.

|

|

|

transcript

|

1:57 |

Alright, we've taken the red pill, let's go explore.

Over here, I'd like to enter that link bit.ly/cPythonlong and it'll redirect us over to this horrible url, which you can see now why I was using Bitly to shorten it.

This is in the Python organization, the CPython project, in the longobject.c file, and this is the PyLong_FromLong function that returns a PyObject pointer, and you can just go through and see all the stuff that's going on here.

So there's all the stuff I deleted. We're still in the same function, and still in the same function.

When are we done?

It goes on for a ways.

There's quite a bit going on here.

Let's go to the top.

You can see that this is the long, arbitrary precision integer object implementation.

And in this objects section, you'll see all different sorts of things, like Booleans, and then you've got dictionaries, here's part of the dictionary implementation.

There's the allocation stuff.

obmalloc.

Here's the base PyObject.

Yup, PyObject pointers all over the place, right?

This thing.

So this is the implementation for that and one thing that's interesting is, you would think just the base class would be pretty simple, but it's 2000 lines long, like the header file.

The header file, if you go up, is already, I think, like 600 lines long.

So there's a lot of stuff going on with every one of these objects, and throughout various places in the course, I'll be giving you the short URLs, which will take you to different locations in the source code.

You don't have to go look at it, especially if all the code here makes your eyes water.

That's fine.

You don't have to look at it.

But this is where you can go and see exactly what is happening and when or what kind of data is being stored and what structure and how the runtime is using that to manage the memory or allocate things and so on.

|

|

|

transcript

|

1:29 |

Let's real quickly look at the documentation from Python's docs around this id built-in function, and it says "id is a function that takes a single object".

And if you look at the description, it says "it will return the identity of the object.

This is gonna be a number which is guaranteed to be unique and constant during the object's lifetime. However, two objects with non-overlapping lifetimes may have the same id value".

That's more likely than just random places in memory.

As you'll see, there's patterns that have a tendency to reuse memory rather than allocate new memory.

Nonetheless, while the objects are around, the number that comes back is the unique identity of the object.

And if you get two objects and you ask for their ids and they're the same, they're literally the same object in the runtime.

If those numbers are different, even if the objects would test as equal, like two strings that are == to each other but potentially not at the same location in memory, then the ids would come back different.

And, little special detail here at the end, if you happen to be in CPython, the CPython implementation detail is that this is the memory address, the address of the object in memory.

So this literally is the base 10 version of "Where's This Thing in Memory?" Id is simple, but we're gonna be using it a couple of times throughout this course to ask questions like "are these actually the same thing?" or "where do they live in memory?" and stuff like that.

So, here's the deal.
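
To make the difference between equality and identity concrete, here is a small sketch. The variable names are made up for illustration, and the "id is the memory address" part is a CPython implementation detail, not a language guarantee.

```python
# Two lists with equal contents are still two separate objects.
first = [1, 2, 3]
second = [1, 2, 3]
alias = first  # a second name for the very same object

print(first == second)          # do the values compare equal?
print(id(first) == id(second))  # are they the same object?
print(id(alias) == id(first))   # alias and first share one identity
print(alias is first)           # `is` asks the same identity question

# In CPython, id() happens to be the memory address; hex makes that easier to read.
print(hex(id(first)))
```

Lists are used here on purpose: small integers and some strings can be shared by the runtime, which would muddy the picture.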

|

|

|

transcript

|

3:15 |

Now finally, let's write some code, as we're going to do for much of the rest of this course and explore these ideas.

But before we actually start writing, I want to just show you how you can work with the code from GitHub and just get it started if that's somewhat new to you.

So over here, we've got our code structure.

Now, right now, these are all empty.

I just have little placeholders, so Git will create the folders.

Git only tracks files, not folders.

So this is the trick to make it create them for me.

What I want to do is just open up this entire project here in PyCharm.

Now, the first thing I want to do in order to do that is create a virtual environment.

We're not actually going to need the virtual environment for quite a while, but let's go ahead and just start out that way.

So I'm gonna open a terminal here in this folder.

I checked it out as mem-course, not its full name, but I'm gonna create a virtual environment.

So, python3 -m venv venv, and we want to activate it.

On Windows, this would be the same.

Sometimes you drop the three.

This would be scripts, and you wouldn't do the dot.

It always turns out that whenever you're creating a virtual environment almost always pip is out of date.

That's super annoying, so we just want to make sure that we upgrade pip.

Alright, now we're ready.

I'm gonna take this directory mem-course and drop it into PyCharm.

And because of the way I put it over here, it's kind of annoying.

You can't get back to it super easily.

So if you right click up there, you can get a hold of the course.

I'm gonna drop this on PyCharm.

Now, on Windows and Linux, you can't do that trick.

Just go file, open directory, and select it.

Now, for some reason, it found the wrong virtual environment, so I click here, add interpreter, pick an existing one, and go to my project directory.

Sometimes it gets it right.

Sometimes it doesn't get it right.

Perfect.

So you can see it's running down here, and it looks like it's probably the right thing.

So let's go down here and we're gonna just add a quick little file that we can run just to make sure everything is working.

I'm going to call all the things that are here that are meant to be executed directly "app_something" and then there's gonna be a bunch of libraries that they use but maybe don't get executed directly, and those will just be whatever their names are.

So that's the convention.

I'm gonna try to make it clear what you can run in here and what you can't.

So I'm gonna call this "app_size".

And then as a good standard practice, we want to create a main method and use the "if __name__ == '__main__'" convention.

I've added an extra personalized little live template to PyCharm so I don't have to type it.

So you'll see me type "fmain", and if I hit Tab, it writes this for us.

You could write that yourself, but it's super annoying to write it all the time. So I'm gonna write this and we'll just print something out.
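
For reference, the main-function convention described here looks roughly like this. It is a sketch of what a file such as app_size might start out as; the body of main is a placeholder, not the course's actual code.

```python
import sys


def main():
    # Placeholder body: report which interpreter is running this file,
    # which is a quick way to confirm the virtual environment is in use.
    interpreter = sys.executable
    print(f"Running with: {interpreter}")
    return interpreter


# Only call main() when the file is executed directly, not when it is imported.
if __name__ == '__main__':
    main()
```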

Right click, Run.

Looks like we're using the right Python to run our little project.

Cool.

So now we've got our projects open.

Once we get stuff in here, I'm gonna be deleting these, but that's how you open the code.

Of course, you'll have the code already here, but then you could just go right click and run the various things that you want to explore.

|

|

|

transcript

|

7:47 |

Alright, let's answer some questions, and you can see I've already thrown the questions in up here just so you don't have to watch me type things like print.

How big is this?

How big is that?

And the thing I want to explore is how much memory is used by certain things.

So we get a sense for a number versus a string that has one character, or a string with one character versus one with 20.

Like how much is it?

The character space versus all the underlying runtime infrastructure.

That's gonna contribute to the memory use.

So we're in luck.

Python has a good way to answer this.

We're gonna import sys.

And over here we can print out things like sys.getsizeof a thing like the number four.

So let's do that real quick and then run.

How big is the number four?

Well, in a language like C++ or C# or something like that where these are just allocated locally, you always have to talk about the size and the number is like a short or a long or something like that.

But typically this would be 2, 4, or 8 bytes long.

In Python, a number, a small number, is 28.

If we had a little bit bigger number, it's still 28.

Let me make it a little bigger so it stays on the screen. But if it's a lot bigger, we use a tiny bit more memory.

So the size matters, but not so much.

But there is some overhead.

Remember, this is the PyObject pointer and all the things to know how many people are keeping track of it, where it was allocated, what type it is.

All of these things are happening behind the scenes, and we just see the simple number 4, but Python is doing a bunch of work through that infrastructure that we talked about.

Remember the red pill stuff?

That's what's happening, that's why this is a little bit bigger.

Alright, what about this one?

Let's print sys.getsizeof the letter "a".

Well, you'd feel like these might be similar, right?

I mean, in most programming languages, a character is 1, 2, or 4 bytes and a number is 2, 4, or 8. So maybe it's even smaller.

Let's see.

Nope.

50.

It's bigger.

So it turns out, strings have a lot going on, so there's a little bit going on there.

And let's see if we have a big string like this.

How much larger is it?

25.

Well, 25 larger, right?

75 now, and that's because we have 26 of these rather than 1, and each extra character here costs one more byte.

So you just multiply that by 26.

We get our 26 with multiplied by 100.

Look, it's about 100 bigger.

Okay, that's what's going on here, right?

So basically, there's this infrastructure to keep track of the string and do all the string things and then the extra data.

And if we had Unicode characters, they might take up more than one byte per character.
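
Here is a small sketch of the measurements described above. The exact byte counts depend on the Python version and platform (28, 50, and 75 are what a typical 64-bit CPython reports), so the code prints them rather than assuming them.

```python
import sys

small_int = sys.getsizeof(4)
huge_int = sys.getsizeof(2 ** 100)          # needs more digits internally
one_char = sys.getsizeof("a")
many_chars = sys.getsizeof("a" * 26)
wide_chars = sys.getsizeof("\u20ac" * 26)   # 26 euro signs, not plain ASCII

print(f"int 4:         {small_int}")
print(f"int 2**100:    {huge_int}")
print(f"'a':           {one_char}")
print(f"'a' * 26:      {many_chars}")
print(f"euro * 26:     {wide_chars}")

# The fixed overhead is the same for both ASCII strings;
# only the character data grows.
print(f"extra bytes for 25 more ASCII characters: {many_chars - one_char}")
```

The last line shows the split between fixed per-object overhead and the character data itself.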

How about a list?

Simple little empty list.

How big is that?

56.

Okay, that's not too super large.

Now let's do something with like, 10 items in it.

There we go.

I put 10 in there.

Let's see how much bigger it is.

136.

Well, that's quite a bit bigger.

Let's think about that for a second.

Well, what does the list actually contain?

The list doesn't contain the values. The list contains basically what every variable in Python is: a pointer to a number out in memory.

So there's somewhere out in memory a 1.

And here in the list, there's a pointer that says "go find the 1, the number over there", and then here's another pointer out to the 2, wherever it is in memory.

Where these smaller numbers actually live is interesting, as we'll see.

But we've got a list, and it's got 10 of those in there.

Lists generally don't allocate one slot at a time.

They kind of grow in a doubling type of way, like, you've got 10, and then you add a few more, so the list allocates extra room beyond what it needs right now, and so on (CPython's real growth steps are smaller than true doubling, but the idea is the same), so that you're not constantly allocating and copying every time you add something, because that's super slow.
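
One way to watch this over-allocation happen is to append items one at a time and record the list's own size after each append. This is only a sketch; the specific sizes and growth steps are CPython implementation details and vary by version.

```python
import sys

items = []
sizes = []
for n in range(100):
    items.append(n)
    sizes.append(sys.getsizeof(items))

# If the list grew one slot per append, there would be 100 distinct sizes.
distinct_sizes = sorted(set(sizes))
print(f"appends: {len(sizes)}, distinct sizes seen: {len(distinct_sizes)}")
print(distinct_sizes)
```

The size stays flat for several appends and then jumps, which is the spare capacity being used up and then replenished.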

All right, well, that's how big that is.

Sort of.

We're going to see that this is going to get interesting, and let's actually do something else.

So how much memory did this take? Like, how much does that line contribute?

Well, we saw that each number is 28, and then it's gonna allocate whatever the list needs to be.

The list by itself is 56.

So we'd have 280 bytes, probably, for all of those numbers, those 10 numbers, because they're 28 each, and then we're gonna have the 56 for the list.

That's like 336.

And then there's also the pointers that are gonna be in the list as well that have to point out there, maybe the over allocation.

So something's going on like this is not big enough, right?

Just in the numbers alone, they should take 280 bytes.

We're going to see what's going on in a minute but this is how much room this thing is taking.

But let's try to force the issue by saying "What if there's a large piece of data right there and a large piece of data right here?" So I'm gonna do a little bit of work here.

I'm going to come up with some data that we're gonna ask about, and I'm going to go from 1 to 11.

I'm going to add in some stuff 10 times.

The number of elements in the list should be the same.

I'm going to come up with an item and the item is going to be a list that starts out with the number of whatever it is the loop.

So, first time through this will be 1 second time It'll be 2, and so on, and Python has this funky little trick that we can do here.

So if n is 7 and we come over here and make a list, let's put, like, 3 in there, and we multiply it by n, what we get is a list with that value copied that many times.

So here we get a single list with seven 3's instead of just one 3.

We're going to do that here.

i times i, so first it will be 1, then 4, and so on, times 10.

And by the time i gets to be 10, that's gonna be 1000.

So it'll be a list with 10 in it 1000 times.

The last one that's out here is gonna be bigger than just, you know, the number 10.

Absolutely.

You're gonna put that item in there and let's just really quickly print out data just so you see what we got here.

Notice there's a whole bunch of tens and a bunch of nines fewer and so on, right?

It kind of grows geometrically.

All right, so that looks like that did kind of like what I said it did.

And let's just print sys.getsizeof of data.

184.

What do you think?

I'm gonna think no.

No, that's not right.

But, this does give us a sense of what the base size is.

So what are we answering or what information are we getting when we say getsizeof?

What we're getting is it goes through and it says, "I'm gonna look at the actual data structure, the list".

So, this one right here and let's see how much it's internally allocated, what are its fields?

And if it's got a big buffer to store items it puts in it.

How long is that buffer?

But what it doesn't do is it doesn't traverse the object graph.

It doesn't go "Okay, well, there's 10 things in here.

Let me follow the reference from each one of those 10.

See how big it is.

And if it has references, follow their references" it doesn't do this traversal which is actually what you need to know about how much memory is used.

But this getsizeof, it's a start. You'll see that there's a better way to actually answer this question more accurately.
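
A sketch of the experiment described in this section, reconstructed from the narration rather than copied from the course files. It builds the list of growing lists and compares what sys.getsizeof reports for the outer list against the sizes of the inner lists it points to.

```python
import sys

data = []
for i in range(1, 11):
    # A list containing the value i, repeated i * i * 10 times.
    item = [i] * (i * i * 10)
    data.append(item)

shallow = sys.getsizeof(data)
# Still not a full traversal: this only adds the inner lists themselves.
inner_lists = sum(sys.getsizeof(item) for item in data)

print(f"outer list only: {shallow:,} bytes")
print(f"inner lists:     {inner_lists:,} bytes")
```

The outer number only covers the list's own header and its 10 (plus spare) pointer slots, which is why it stays tiny no matter how large the inner lists get.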

|

|

|

transcript

|

3:46 |

To answer the question of how much memory these things actually use, in the way that I described, traversing the object graph, we're going to use a cool foundational library, "psutil". And I said we didn't need the virtual environment right away.

Well, I forgot about this one, so we need it right away.

We're going to pip install that one.

You can see in PyCharm, if I just click this, it will do that for me.

I added this requirements file, now I put it in there.

Alright, that's all happy, but what we're gonna do is we're going to write, or take this code over here that basically will do that traversal, so give me the size, and it'll just recursively go looking and say, "is that a dictionary?

Does it have a __dict__?

As in it's a class.

Is it a thing that can be iterated"?

and so on.

And it's just going to recursively dive into those things.

Okay?
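
Here is a minimal sketch of that kind of recursive traversal. It is not the course's actual size_util code; the function name get_full_size and the exact rules (which containers to walk, how to avoid double counting) are assumptions made for illustration.

```python
import sys
from collections.abc import Mapping


def get_full_size(obj, seen=None):
    """Approximate size of obj plus everything it refers to (illustrative only)."""
    if seen is None:
        seen = set()
    if id(obj) in seen:
        return 0  # already counted; shared objects are only counted once
    seen.add(id(obj))

    size = sys.getsizeof(obj)

    if isinstance(obj, Mapping):
        # Dictionaries: count both keys and values.
        for key, value in obj.items():
            size += get_full_size(key, seen) + get_full_size(value, seen)
    elif isinstance(obj, (list, tuple, set, frozenset)):
        # Common containers: count every element.
        for element in obj:
            size += get_full_size(element, seen)

    if hasattr(obj, "__dict__"):
        # Instances of regular classes keep their fields in __dict__.
        size += get_full_size(vars(obj), seen)

    return size


data = [[i] * (i * i * 10) for i in range(1, 11)]
print(f"shallow: {sys.getsizeof(data):,}  full: {get_full_size(data):,}")
```

The `seen` set matters: without it, an object referenced from several places would be counted several times, and reference cycles would recurse forever.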

So we can use this to traverse that object graph over here, if we just import it.

Looks like it will import right?

But no, it doesn't.

Even though you can be assured that is what I called it "size_util".

And the reason is PyCharm is looking for something up in this folder called "size_util".

So what I am going to do is go over here and tell PyCharm "also look in this directory as a top level directory for imports", and the way you do that is you say "Mark Directory as Sources Root", and we're gonna need that for the others, potentially, as well.

Here we go.

Oh look, now it works.

So, what we can do is this: say "get full size of that", and let's do it for the "a" and let's do it for "26 a's".

Now, those things don't have stuff they contain, so these numbers are likely to be the same.

And they are "28 28 50 50 75 75", but where it gets interesting is where you have container objects.

So if we just put the list in because the List is empty it's probably the same size.

How big is the list?

It's still 56.

Alright, so those are the same size, but here's where it's going to get interesting, where we look at the size of something that points to or contains other things that are not empty, basically.

Look at this one: the list of 10 items, instead of being 136, once you take into account all the stuff out there, so the other 280 bytes that you get from the numbers, comes out bigger. That's how big it really is, okay?

And then here's the one that was really out of whack.

It was really crazy: we know we allocated tons of memory, and yet it said 184.

Not likely.

So let's go get the full size of this data list that we've created.

There we go.

It would probably be even better if we show it this way, with a little comma digit grouping. There: 31, basically 32 kilobytes for this beast that I created here, this geometrically growing list of lists.

So if we're going to understand the amount of memory these objects take, we gotta look at all the container objects, the things that they contain, and recursively do that, right?

If there's container objects that are contained, look at their things that are contained and so on.

And that's what this little utility does, and we're gonna be using this throughout much of the course.

So don't just use sys.getsizeof, which is great for the relative size of the core essence of the thing, but not for the object graph that it relates to. That's what this size utility is all about.

|

|

|

transcript

|

1:07 |

Let's talk about a cool little optimization that Python uses for some of the objects that are common and reused.

Now you've heard me talk about the numbers, and seeing small numbers behave differently than others.

And that's because Python applies what's called the flyweight design pattern to them.

So let me just read what Wikipedia had to say about it: "in computer programming, flyweight is a software design pattern.

A flyweight is an object that minimizes memory usage by sharing as much data as possible with other similar objects".

So, for example, numbers that have the same value could literally be the same place in memory.

Those are immutable.

It is a way to use objects in large numbers when a simple, repeated representation would use an unacceptable amount of memory.

So, like, if you have the number 3 appear 1000 times, that would be, say, 28k, when really it could just be 28.

So often some parts of the object state can be shared.

It's a common practice to hold them in external data structures and pass them to objects temporarily when they're used.

Okay, so that's the flyweight design pattern, and you'll see that Python uses that some of the time, especially around numbers.

|

|

|

transcript

|

4:47 |

Alright, let's do one more demo.

Let's explore this idea of flyweight numbers.

So, I'm gonna create a new little thing we can run here, app, remember that means you should run it directly, all right.

And we'll do our fmain to just boom pop us into the right pattern.

And I copied some text here that's just gonna help us a little bit.

So this is gonna be an example showing which numbers are precomputed and reused.

That is the flyweight pattern.

And what I want to do is come up with a range of numbers from -1000 to 1000, and then we're just going to ask: this 872 and that 872, are they the same?

So a really simple way to do that is to have just two lists, each with the numbers from -1000 to 1000 in them, like -1000, -999, et cetera.

We'll say this is gonna be a list of range from -1000 to 1001.

Annoyingly, range doesn't include the end value, and we'll do that for list 2 as well.

We're going to do it twice.

What we want to see is by doing it twice, even though the exact same values will be in there, will they be the same memory address?

Will they literally be the same PyObject pointer thing in memory?

Or will they just be the equivalent values?

All right, and as a way to do that, we'll just keep track of the reused ones; make a little set or something like that.

Actually, let's make it a list so we can sort it.

For number 1 and number 2, we're gonna use this cool thing called "zip". If you give it two lists, it will take an item from one and an item from the other and put them together as a tuple.

So if I say list 1, list 2, I'll get -1000, -1000, then -999, -999, one pair each time through.

But they're going to be the values out of the two lists, and we're gonna store them into n1 and n2.

And just so you know what we're doing here we'll print n1 and n2 and that's not what I want to run, let's run it here.

Here you go.

You can see, It's just like lining them up side by side, but this one comes from list 1, and this one comes from list 2.

So what we want to do is we want to ask, Are they equal?

Of course they're gonna be equal.

More importantly, are they the same place in memory?

So we'll say if the id of n1 is equal to the id of n2, then we go to our reused list and append, doesn't matter which one, just one of them, to say "this number is reused".

Then we're gonna print out "reused", something like this: "Found reused", n1.

All right, let's go and just print that and run that real quick and see what happens.

Ok, this is pretty interesting.

Look, it goes up to 256 and it starts at -5.

It's weird, right?

So the numbers -1000 up to -6, Those are not reused.

Those were different allocations treated as totally different, unrelated things that just happen to have the same value.

But from -5 (oops, scrolled the wrong thing) to 256, these are literally the same thing in memory.

So there will only be one 244 PyLong object pointer in the runtime because it's always using it over and over this flyweight pattern.

So we can also just be a little more clear to make sure we know what's happening.

So we have a lowest equals the min of reused, and the highest is the max, and we just print out flyweight pattern from, put a little "f" in the front.

There we go, lowest to highest.

Alright, one more time.

Just like we saw, the flyweight pattern is from the numbers -5 up to 256.
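
Put together, the demo described above looks roughly like this. It is reconstructed from the narration, so the variable names are approximate, and the range of shared integers is a CPython implementation detail that other interpreters or future versions may not share.

```python
# Two independent lists holding the same values.
list1 = list(range(-1000, 1001))
list2 = list(range(-1000, 1001))

reused = []
for n1, n2 in zip(list1, list2):
    # Equal values are a given; the question is whether they are the same object.
    if id(n1) == id(n2):
        reused.append(n1)

lowest = min(reused)
highest = max(reused)
print(f"Flyweight pattern from {lowest} to {highest}")
```

Both lists stay alive for the whole loop, which matters: comparing ids is only meaningful while both objects exist.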

This is actually a super cool pattern that you can use in your own code.

You saw, like, Python objects and data structures are fairly expensive, memory wise.

So if you have the idea of like we've got immutable data that's reused in lots of places, you could come up with your own concept of a flyweight pattern here and optimize that.

But, you know, that's not really the point here.

The point is more to talk about the internals of what Python is doing around the numbers to prevent them from going totally crazy in terms of how much memory they consume because -5 to 256 those numbers appear all the time.
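
For the idea of building your own flyweight around immutable, frequently repeated data, one possible sketch is a class that hands back a cached instance for each distinct value. The class name Color and its single field are hypothetical, chosen only for illustration.

```python
class Color:
    """Hypothetical immutable value object shared via a per-class cache."""

    __slots__ = ("name",)
    _cache = {}  # maps name -> the one shared instance for that name

    def __new__(cls, name):
        instance = cls._cache.get(name)
        if instance is None:
            # First request for this value: create it once and remember it.
            instance = super().__new__(cls)
            instance.name = name
            cls._cache[name] = instance
        return instance


a = Color("red")
b = Color("red")
c = Color("blue")

print(a is b)  # both names refer to the single shared "red" object
print(a is c)
```

This only makes sense when the objects are treated as read-only; if one holder mutated a shared instance, every other holder would see the change.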

|

|

|

transcript

|

0:45 |

If you're a fan of this red pill type of thinking, you should definitely check out Anthony Shaw's CPython internals.

I've had him on the podcast a couple times to talk about it, and he's finally written it up as a book.

So this is, like, 350 pages talking about all the CPython source code: how you can navigate the whole structure, where different things are, like where the object headers are versus the objects themselves, and digging into a whole bunch of interesting things, the parsers and whatnot.

So if you want to dive into not just the memory side of CPython, which is what we're gonna still spend a lot of time on, if you want to look at the whole thing, the parsers, the execution and everything, you know, check out Anthony's book.

It's definitely good, recommend it.

|

|

|

|

19:53 |

|

|

transcript

|

1:02 |

When you think about Python memory management, you're very likely thinking about reference counting, garbage collection, how things get cleaned up.

But memory doesn't start there.

It starts by getting allocated, and it turns out that Python has a whole bunch of techniques and patterns and ideas around making allocation fast, efficient, doing things so there's not memory fragmentation, all of those kinds of things.

Allocation in Python is actually way more interesting than you would think.

So we're going to spend some time talking about the core algorithm, using pools and blocks and arenas, which maybe you've never heard of, but all of these things are really important in working with objects to prevent memory fragmentation and prevent them from blowing up in terms of taking too much memory and so on.

So this will be really a fun and important prerequisite before we get to what most people think when they think of Python memory, and that's the cleaning up side of all that.

|

|

|

transcript

|

4:00 |

Let's do a little thought experiment.

Imagine we have this one line of Python code, which we know whole tons of stuff is happening down in the runtime.

But on the Python side, it's simple.

We have a person object.

We want to create them, pass some initial data over to them, their name is Michael, and so on.

Now let's imagine that to accomplish this operation we need 78 bytes of RAM from the operating system.

What happens?

How does that get into Python?

Like, what part of memory do we get?

How is it organized and so on?

So a very simplistic and naive way to think about this would be all right, what we're gonna do is going to go down to the C-layer, and C-Layer is going to use the underlying operating system mechanism for getting itself some memory.

It'll malloc it, right?

So malloc is the C allocation side and then free, we would call free on the pointer to make that memory go away, Okay?

So you might just think that the C runtime just goes to the operating system and says "give me 78 bytes", and the operating system says "super, we're gonna provide 78 bytes of virtual memory that we've allocated to that process", which then, boom into that we put the thing that we need, some object that has an id and the id wasn't explicitly set.

But, let's say it's generated.

The id is that, and the name is Michael.

Well, that seems straightforward enough.

I mean, you have these layers, right?

Python is running, and Python is actually implemented in C, and C is running on top of the operating system, and the operating system is running on real RAM on top of hardware.

So this seems like a reasonable thought process.

But no, no, no.

This is not what happens.

There's a whole lot more going on.

In fact, that's what this whole chapter is about, is talking about what happens along these steps.

And it's not what we've described here.

Let's try again.

So at the base we, of course, still have RAM.

We have hardware.

That's where memory lives.

We still have an operating system.

Operating systems provide virtual memory to the various processes that are running so that one process can't reach in and grab the memory of another, for example.

We saw that there's ways in the operating system to allocate stuff.

So in C, there's an API called malloc that's gonna talk to the underlying operating system.

This is what we had sort of envisioned the world to be before.

But there's additional layers of really advanced stuff happening on top here.

Above this, we have what's called Python's allocator, or the PyMem API and PyMalloc.

So the C runtime doesn't just call malloc, it calls PyMalloc, which runs through a whole bunch of different strategies to do things more efficiently.

We saw that in Python, and CPython in particular, every tiny little piece of data that you work with, everything, numbers, characters, strings, all the way up to lists and dictionaries and whatnot, these are all objects, and every one of them requires a special separate allocation, often of very small bits of data, and that's why Python has this PyMalloc.

But wait, there's more.

If you're allocating something small, and by small, I mean sys.getsizeof, not my fancy traversal thing.

So if you're allocating something that is in its own essence small, then Python is going to use something called the "Small Object Allocator", which goes through a whole bunch of patterns and techniques to optimize this further, and we're going to dig into that a bunch.

So if you want to see all this happening, you can go to "bit.ly/pyobjectallocators", the link at the bottom, and actually the source code is ridiculously well documented.

There's like paragraphs of stuff talking about all these things in here, but there's actually, in there, There's a picture, ASCII art-like picture that looks very much like this diagram that I drew for you with some details that I left out, but they're in the source code.

|

|

|

transcript

|

1:52 |

As you saw, in just the last video, Python has this Small Object allocator.

Well, if small objects are treated differently than other objects, you want to know what objects are small and more of them are small than you would think, because most big objects are actually just lots of small ones.

So let's take a look at one example.

There's many we could talk about.

So here we've got data, a list that contains 50 integers, 1 to 50, and in a lot of languages, the way this would work is you would have just one giant block of memory that would be, say, 8 times 50 bytes long, one 8-byte number per slot, or 4 times 50, so 200 bytes, if they're regular integers, and so on.

And it's just one big thing.

But that's not how it works in Python.

When you put stuff together like this, you're gonna end up with the list that has, you know, a bunch of pointers as many pointers as there are in the actual list.

Plus probably a few extra for that buffering so you don't reallocate like I talked about, but what you're actually gonna do is have a bunch of little objects that are being pointed to by the parts of the list.

And if you have a class, you're gonna have fields in the class.

The things that are in there, like strings and numbers and maybe other lists, are not part of that object in terms of how big it is. Those live outside of it, pointed to by the variables within that data structure.

So these objects that feel like they're big are often many small ones, and if that's the case, all of these little things get handled by the Small Object Allocator, not treated as one big object.

Even though taken as a whole, they might use tons of memory.

Most of the parts will probably still be, as far as Python's concerned, small objects.
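
To see the "big things are mostly lots of small things" point in numbers, here is a sketch that compares a container's own size with the sizes of its parts. The 512-byte cutoff for "small" is treated as an assumption in this example, and the names are made up.

```python
import sys

SMALL_LIMIT = 512  # assumed cutoff, in bytes, for what counts as a small object

data = list(range(1, 51))
words = [f"word-{n}" for n in range(100_000)]

list_size = sys.getsizeof(words)
largest_element = max(sys.getsizeof(word) for word in words)
largest_number = max(sys.getsizeof(number) for number in data)

print(f"the words list itself: {list_size:,} bytes")
print(f"largest single word:   {largest_element} bytes")
print(f"largest single number: {largest_number} bytes")
```

The list of pointers is large, but every object it points to is individually tiny, so the bulk of the allocations are small ones.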

|

|

|

transcript

|

2:13 |

Let's look at one of the red pills in CPython around object allocation.

So this is "obmalloc.c", and if you look here, you can see here's the ASCII art part that I was telling you about before.

This is the second take that we did on what allocation looks like.

We have physical RAM, we have virtual memory, then we have malloc, then we have the PyMem API, the allocator, but there's something really interesting at the bottom that we didn't talk about then, so check that out.

It says "a fast special purpose memory allocator for small blocks to be used on top of a general purpose malloc heavily based on prior art".

And if you want to go check this bit of the source code, this is literally straight out of GitHub, just go to bit.ly/pyobjectallocators, and it will take you right to this line, and you can look through it.

So what's the deal?

To reduce the overhead for small objects, that is, objects that are less than 512 bytes as measured by sys.getsizeof, not the whole traversal, but the small bit, Python sub-allocates larger blocks of memory and then, as you need it, hands out pieces of that memory to different objects; those pieces can potentially be reused once an object is cleaned up.

Larger objects are just routed to the standard malloc.

But for these smaller ones, which are most of them, the Small Object Allocator uses these three levels of abstraction.

We've got arenas and pools and blocks.

At the lowest level we've got this thing called a block, and then pools manage blocks and arenas manage pools.

So we're gonna go through all of those.

But there's this trifecta of ideas or algorithms that we're going to use to manage, remember, the small objects.

And this little quote right here comes from an article about Python memory management by Artem Golubin, and he's done some fantastic research and writing around it.

So I recommend that you check out his blog.

There'll be a couple of articles that I think I refer to.

I've definitely read them while researching all the stuff for this course, so check out his article here.

It has a lot of interesting analysis on what's happening and why it's being done.

|

|

|

transcript

|

2:17 |

Let's start at the lowest level where the actual objects are stored in memory.

Instead of allocating as we saw at the very beginning of this chapter 17 bytes or 20 bytes or whatever you need exactly for a thing just randomly where you've got a gap in your memory, Python uses these things called blocks.

Blocks are chunks of memory of a certain size, and each block is designated to hold objects of a certain size.

So, for example, we might define a block that holds objects of 24 bytes, or around 24 bytes, let's say.

The places where the objects go are 24 bytes and anything that's between 17 to 24 bytes is allocated into those 24 byte spaces.

And sure, if you've only got 20 bytes you need and you stick it into a 24 byte spot, you're wasting, quote "wasting" 4 bytes, right?

You could have packed it a little bit tighter.

But this algorithm allows Python to create these sets of memory that are really easy to allocate stuff into. Once a spot is freed up, Python just un-assigns it, but doesn't necessarily give it back to the operating system.

Then when you want to allocate something new, maybe next time it's 22 bytes, you can use that same little spot and reuse it.

Okay, so that's the idea of these blocks.

And there's some rules.

One of the rules is it only holds, each block only holds things that fit into its block.

So if you've got one, that's the size for a 24 byte element, things of 17 to 24 bites go in there, but they kind of waste the space if they don't totally fit and you can see they're broken into these different categories.

So once of block is allocated, it's always dedicated to its size.

Its either a bunch of stuff that fits into 24 byte pieces or 16 byte pieces and so on.

So you can think of Python allocating these blocks for each of the different sizes of allocation it's going to do, and then being able to just reuse that memory.

It doesn't have to go back to the operating system, free up memory, ask for more memory, get that fragmented in RAM and things like that.

It can get a whole bunch of space for those pieces of those small objects that it needs and just works with it internally, and it's more efficient that way.
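
The rounding rule described here can be sketched in a few lines of Python. This is only an illustration, not CPython's actual code: the name `block_size_for` is made up, and the 8 byte step and 512 byte cutoff are assumptions based on numbers mentioned elsewhere in this chapter.

```python
ALIGNMENT = 8            # assumed step between block sizes
SMALL_THRESHOLD = 512    # assumed upper limit for "small" objects


def block_size_for(request: int) -> int:
    """Round a requested size up to the block size that would hold it."""
    if not 0 < request <= SMALL_THRESHOLD:
        raise ValueError("not a small-object request")
    return (request + ALIGNMENT - 1) // ALIGNMENT * ALIGNMENT


for size in (17, 20, 22, 24):
    block = block_size_for(size)
    print(f"{size} bytes -> {block} byte block, {block - size} unused")
```

Every request from 17 to 24 bytes lands in the same 24 byte slot, which is why a freed 20 byte spot can later be reused for a 22 byte object.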

|

|

|

transcript

|

1:05 |

The next level up in this algorithm are the pools.

Now pools, their job is to manage a bunch of blocks and a given pool always contains blocks of the same size.

So if Python needs to allocate something that fits into a 16 byte block, it can just go to the pool that contains those and ask for it to allocate it, ask for one that has some free space and so on.

The pool size is typically set to match the memory page so it maps well to RAM, it doesn't get fragmented, and Python's ability to reuse this fixed, contiguous set of memory helps reduce fragmentation that would otherwise happen if we just went to the underlying C-layer and just said "give me the next free bit of memory that you have of this size".

You can look at this a little bit; here is the source code around the pools.

It has the next free block.

Right there is a pointer that you can always get, and it also has the next pool and the previous pool.

So it's kind of a doubly-linked list of pools that within there contain a bunch of these blocks.
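
To make the shape of a pool a bit more concrete, here is a toy model in Python. It is a sketch only: `ToyPool` and its attribute names are hypothetical, the 4 KiB pool size is an assumption, and the real structure is a C struct that also reserves some space for its own bookkeeping.

```python
from typing import Optional

POOL_SIZE = 4096  # assumed: one 4 KiB memory page


class ToyPool:
    """A toy pool: same-sized blocks plus links to neighboring pools."""

    def __init__(self, block_size: int) -> None:
        self.block_size = block_size
        # Offsets of blocks that are free to hand out (header space ignored).
        self.free_blocks = list(range(0, POOL_SIZE, block_size))
        self.next_pool: Optional["ToyPool"] = None
        self.prev_pool: Optional["ToyPool"] = None

    def allocate(self) -> Optional[int]:
        """Hand out a free block offset, or None when the pool is full."""
        return self.free_blocks.pop() if self.free_blocks else None

    def free(self, offset: int) -> None:
        """Return a block to the pool so it can be reused."""
        self.free_blocks.append(offset)


pool = ToyPool(block_size=16)
first = pool.allocate()
pool.free(first)
again = pool.allocate()
print(first == again)  # the freed block is handed out again
```

The point of the toy is the reuse: freeing a block just puts it back on the pool's free list, and the next allocation of that size picks it up without going back to the operating system.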

|

|

|

transcript

|

1:19 |

Let's go back to the CPython source code and look at this idea of blocks, pools, and arenas.

We haven't talked about arenas yet, but let's just look over there real quick.

So I've come up with another Bitly URL for you, cause like, all of these are super long.

So "bit.ly/cPythonpools", if you want to go there, and you might be surprised to see what you get when you come here.

You don't get source code immediately.

There is source code if you scroll enough, like here, there's some source code, but check this out.

There's like a full on essay about what is happening around one of these pools.

Okay, so if we go down a little bit further, it talks about how blocks are managed within the pools.

So blocks in the pools are getting carved out as needed.

The free block points to the start of a linked list of the free blocks, so you can always just go there and start allocating into that one.

So pretty awesome, right?

There's a bunch of stuff about things that they're doing that might be a little bit obscure or unclear, and why it's happening that way, and so on.

So when I was studying this I was really surprised at the level of detail describing how pools and blocks interact together.

And if you want to see what the core developers, the people who worked on this and implement it and maintain it, have to say, well, here it is, right in the source code on GitHub.

|

|

|

transcript

|

0:54 |

The last data structure or idea that we gotta cover when we think about how Python is working with small objects are arenas.

And arenas are 256 kilobytes of memory, they're allocated on the heap and they manage 64 pools.

You can see down here the data structure that defines them.

It's quite similar to the pools.

You've got a doubly linked list.

You've got the next free one.

Things like that.

These arenas, this is the top level thing.

Arenas contain a bunch of pools.

Arenas are always the same size.

The pools are often the same size, the blocks that they contain, they might be different scale, they might be 8 byte objects, they might be 16 byte objects, though, you know, the second would only hold half as many as before, but that's the idea.

We've got arenas that control the pools, the pools hold the blocks, and the blocks are where the objects actually go with 8 byte alignment.
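
The numbers above fit together with a little arithmetic. This is just a back-of-the-envelope sketch; the block counts are upper bounds, since real pools give up a few bytes to their own bookkeeping.

```python
ARENA_SIZE = 256 * 1024   # 256 KB, as stated above
POOLS_PER_ARENA = 64      # as stated above

pool_size = ARENA_SIZE // POOLS_PER_ARENA
print(f"pool size: {pool_size} bytes")

# Upper bound on blocks per pool; real pools lose a little to bookkeeping.
for block_size in (8, 16):
    print(f"{block_size} byte blocks: at most {pool_size // block_size} per pool")
```

So each pool works out to 4 KB, and a pool of 16 byte blocks holds half as many blocks as a pool of 8 byte blocks.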

|

|

|

transcript

|

5:11 |

Well, all this abstract talk about blocks and pools and arenas can be a little bit hard to understand.

So, let's just actually go and see what Python will tell us about all of this stuff and the Small Object Allocator.

So, go here and create an "app_something", remember?

That means you can run it, and I'll just call it "stats".

Real simple, we'll just do our fmain magic once again, we're gonna need to use sys, so there's our sys, perfect.

And let's put in a little message to see what this is about.

Great, so over here, we can just run "sys.", and it's not great, it's kind of semi-hidden, but you can still get it, "_debugmallocstats()", like so.

Let's go ahead and run this, see what we get here.
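
A minimal version of that little app might look like the following. The function names and the message text are placeholders rather than the exact code from the video; `sys._debugmallocstats()` (note the leading underscore) is the CPython-specific call being demonstrated, and it writes its report to stderr.

```python
import sys


def show_stats() -> bool:
    """Print allocator statistics if this interpreter provides them."""
    printer = getattr(sys, "_debugmallocstats", None)
    if printer is None:
        return False
    printer()  # CPython writes the report to stderr
    return True


def main() -> None:
    print("Small object allocator stats", flush=True)
    show_stats()


if __name__ == "__main__":
    main()
```

The `getattr` check is there only because the function is an implementation detail of CPython and may be missing on other interpreters.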

Notice it didn't come up in our auto-complete, right?

"_debug", no, not there.

But we tell PyCharm not to obscure it and not to tell us it's not there.

Hey, we can see that it looks like it's okay.

Alright, so let's look and see what we got out of this.

Check this out.

Run it once from scratch.

If we get to the top, what do we got?

We have this "small block threshold".

Remember, things that are managed by the Small Object Allocator, they are how big?

512 bytes or less.

And there are 32 different size classes.

So, 16, 32, 48, 64, 80 and so on, is apparently what we got right now.

There's how many pools of each size of those are allocated and how many blocks are in use within those pools?

So, like, 5 pools: we've got 558 blocks used within those 5 pools, and we don't need to go allocate more until we've used up 72 more of them.

And of course, all of that is only for 32 byte elements.
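
The figures in this output can be cross-checked with a short calculation. Treat it as a sketch: the 4 KiB pool size and the 48 bytes of per-pool bookkeeping are assumptions about a 64 bit CPython build of this era and do not appear in the output itself.

```python
ALIGNMENT = 16      # step between size classes in this output
THRESHOLD = 512     # small block threshold
POOL_SIZE = 4096    # assumed 4 KiB pool
POOL_HEADER = 48    # assumed bookkeeping bytes per pool (64 bit build)

size_classes = list(range(ALIGNMENT, THRESHOLD + 1, ALIGNMENT))
print(len(size_classes), size_classes[:5])

# The 32 byte row from this run: 5 pools with 558 blocks in use.
blocks_per_pool = (POOL_SIZE - POOL_HEADER) // 32
total_blocks = 5 * blocks_per_pool
print(blocks_per_pool, total_blocks, total_blocks - 558)
```

Under those assumptions the numbers line up: 32 size classes, 126 blocks per pool, 630 blocks across 5 pools, and 72 of them still free.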

All right, so let's scroll through this.

This just gives you all the information about the blocks, their sizes, how many pools go with them and whatnot.

And then here's our arenas.

How many arenas have we allocated?

12.

So that would be 12*256 KB, which should be 3MB.

Arenas reclaimed: 3. The max we've had is 9.

Currently, we have 9.

And then it tells you that this much memory is being used right now.
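
The arena numbers from this run are easy to verify by hand, or with a couple of lines of Python. The variable names here are just for illustration.

```python
ARENA_KB = 256  # each arena is 256 KB

allocated, reclaimed = 12, 3
current = allocated - reclaimed

print(f"ever allocated: {allocated * ARENA_KB / 1024} MB")
print(f"currently held: {current} arenas, {current * ARENA_KB / 1024} MB")
```

Twelve arenas allocated minus three reclaimed leaves the nine that are currently in use.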

You can go through and you can see a lot about it here, right?

Then we come down here and it actually tells you what is allocated.

So we have "PyCFunctionObjects", we have 56 bytes each.

We have 5 of those, so that's cool and so on.

Right.

So you can see all the different objects: tuples, stack frames, floats, dictionaries and whatnot being used here.

So you get a lot of information.

Let's go and make it do something.

So I'll just come down here and say "make it do memory things".

So I'm just gonna copy some code.

What we're gonna do is we're gonna create a bunch of larger numbers, so that's going to make sure they get outside the flyweight pattern and they're gonna actually get allocated every time.

And then we're gonna create a list, and into it we're gonna put a list which has the string of whatever this large number is, repeated 100 times.

So we're creating a bunch of objects.

Basically, we're doing a bunch of things here.
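
The exact code that gets pasted in isn't reproduced in this transcript, so the following is only a guess at its general shape. The function names, the starting number, and the count are all made up, and "the string repeated 100 times" is read here as `str(number) * 100`.

```python
import sys


def make_memory_things(count: int = 10_000) -> list:
    """Build many strings and lists from numbers too big for the small int cache."""
    data = []
    for number in range(1_000_000, 1_000_000 + count):
        # Assumed reading of "the string repeated 100 times".
        data.append([str(number) * 100])
    return data


def report(label: str) -> None:
    # Write the separator to stderr, where CPython also writes the stats,
    # and flush it so the two stay in order.
    print(f"---------- {label} ----------", file=sys.stderr, flush=True)
    if hasattr(sys, "_debugmallocstats"):
        sys._debugmallocstats()


if __name__ == "__main__":
    report("before")
    data = make_memory_things()
    report("after")
```

Keeping the result in `data` matters: if nothing holds on to the lists, they are freed right away and the second report looks much like the first.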

Alright, let's try this one more time and see if it did anything different this time.

Scroll, scroll, scroll, scroll, where is my separator?

It didn't flush quick enough, did it?

Alright, so we gotta go through the first one, right?

The first time we see 512, there we go.

That's the second one here.

So you would see that there's potentially more of these in use.

Not a whole lot more.

You can see, I think more frame objects were in use here and so on.

Let's look one more time.

Yeah, the data is still there.

Make it a lot bigger.

Here we go, it's slow.

It's doing stuff.

Okay, great.

Now you can see more arenas were allocated.

We had 62, the high-water mark was 34 and we're still there, we're using 8.9MB of RAM and so on.

Then we can see our list object and all these different things.

Okay, we have more of these blocks in use than we had before.

So the 64 ones: 1,700, and before we had only 138.

So you can see as we do more work, it's consuming more memory, it's getting allocated into the different spaces, and so on.

Not sure how much meaningful, actionable information you're going to get from this.

But I definitely think it helps understand some of these basic ideas that we've been talking about and gives you a look at the current state of the system, and that's pretty cool.

|

|

|

|

42:27 |

|

|

transcript

|

3:00 |

Well, we've come to the end of the line.

Not for our course, there's a lot left there.

But for the life-cycle of Python objects.

We're going to talk about cleaning up memory and destroying Python objects that we've been working with, but we don't need anymore.

This is really what most people think about when they think about Python Memory Management.

I think the stuff about allocation is not even on people's radar for the most part, but this is.

This is when we're working with objects and we're working with data, how does Python make it go away without us having to do what C has to do?

Where you have accounting and some part of the program is responsible for tracking when a thing can go away and it's got to make sure it doesn't do it too early, but not too late.

So we're gonna talk about two different techniques in Python that handle this for us. We don't have to worry about that accounting ourselves, but understanding it is still super important.

So if we grab our red pill again and jump over into the CPython source code, you can just go to "bit.ly/cPythonobject" to go find this bit here.

Remember, that we saw object.c already.

This is object.h, the header file that defines what is one of these objects.

There's 667 lines just in the header.

This is a crazy thing.

So I've only grabbed a very small bit of the definition of PyObject.

So we're defining a new type, you can see at the bottom it's called "PyObject", we're gonna have a whole bunch of pointers to it, but the most interesting thing I want you to take away in this part is to do with this "ob_refcnt".

So how many references, how many variables, pointers, remember, Python doesn't have pointers, but it kind of does, so references or pointers, point back to this particular object?

And it's a "Py_ssize_t", which is a weird type. Basically, it's a way to get a signed number, an integer or a long or something like that, that matches the type of architecture you have.

So on a 64 bit processor, or really a 64 bit version of Python, it's probably a 64 bit number or a long, things like that.
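
You can watch that reference count change from Python itself. This is a small sketch using `sys.getrefcount()`, which reports a count that includes one temporary reference for the call, plus `ctypes.c_ssize_t`, the ctypes counterpart of `Py_ssize_t`, to show that the type is signed and as wide as a pointer. The variable names are arbitrary.

```python
import ctypes
import sys

items = [1, 2, 3]
before = sys.getrefcount(items)   # includes a temporary reference for the call
alias = items                     # a second variable referring to the same list
after = sys.getrefcount(items)
print(after - before)

# ctypes.c_ssize_t mirrors the C type: signed, and pointer-sized.
print(ctypes.c_ssize_t(-1).value)
print(ctypes.sizeof(ctypes.c_ssize_t) * 8, "bits")
```

Binding a second name to the list bumps the count by exactly one, which is the accounting that "ob_refcnt" does for every object.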