|

|

|

10:10 |

|

|

transcript

|

3:36 |

Welcome to Python 3, An Illustrated Tour.

I'm Matt Harrison and I'll be your host through this nice trip on the new features of Python 3.

Let me just give you an introduction about what we're going to cover in this course.

We're going to talk about the changes and new features in Python 3.

So if you've been using Python 2 for a while and transitioned to 3 or you've started using 3, but aren't aware of all the new features, this course is for you.

Let me tell you a little bit about myself.

I am the author of various Python books.

I wrote the best-selling Illustrated Guide to Python 3.

I have books on Pandas as well as the Tiny Python Notebook.

I run a company called MetaSnake and I do Consulting and training for them.

So I've trained companies big and small and I'm really used to seeing how people cope with Python and how people cope with the new features.

I ran the Utah Python user group for five years.

I've been using Python since 2000 and I tweet about Python with my username @_ _mharrison_ _.

Who is this course for?

Well, it's for old Python programmers or those who have been using Python 2 and maybe have transitioned a Python 3 but are still programming it as if it were Python 2.

It's also for new Python programmers, those who are just getting started and want to sort of understand better the features and cool syntax and benefits that Python 3 provides.

So in general, this course is aimed for all those who want to leverage the latest features of Python.

Let's talk about what we're going to cover in this course.

We're going to talk virtual environments how you can make use of Pipenv in a Windows or Unix environment.

We'll also be talking about Fstrings and how you can take advantage of those, great new feature in Python 3.

We'll also talk about what has changed in numbers since Python 2 some of the things that are happening under the covers as well as some other features that you can take advantage of.

We'll talk about classes, we'll dive into Super and learn how that works.

We'll also talk about some of the new dunder methods in classes.

We'll talk about functions, we'll talk about keyword only arguments and we'll get into some of the fun stuff there.

We'll look into the standard libraries some of the new libraries in the standard library, we'll look at the statistics module, we'll look at the enom module.

We'll look at the pathlib module and show some examples from those.

We'll also talk about some of the new syntax in Python 3 that isn't available and why you might want to use that.

We'll look at the new async library, we'll talk about how that functions under the covers, the mechanisms that that's using and we'll look at cool things like asynchronous context managers, asynchronous iterators and asynchronous generators.

We'll also dive into annotations and see how you can take advantage of this cool new feature to make your code more robust.

In addition, we'll look at unicode, this is native in Python 3 and we'll see some of the features that Python 3 supports and allows you to do with the new Unicode functionality.

We'll also look at some of the new annotation tools that allow you to take advantage of the annotation syntax.

And we'll also talk about the new features like generalized unpacking and show some examples of that.

So in addition to content, which I think is pretty good, there's also a lot of labs for all these concepts and you get to try your hand at these Labs there are some cool self-correcting assignments that you'll get to do.

And in addition, there are content walks through all the solutions to those as well as the walks through common errors that people have when they come across these things.

It's a great way to try your hand out and learn these new things and take advantage of them.

I hope you enjoy this class and I hope that it makes your Python code much cleaner, easier to read and more robust.

Enjoy.

|

|

|

transcript

|

0:36 |

I just want to share with you some motivation and some resources.

I've taught a bunch of people Python over the years and I've found that most people learn best when they can in addition to just listening or watching, they can try something out.

And so included with the course are a bunch of labs or assignments for you to try out what we've talked about.

I would advise you not to look at the solution videos which are included in the course until you've tried out the labs, that's going to help you get the most out of the course.

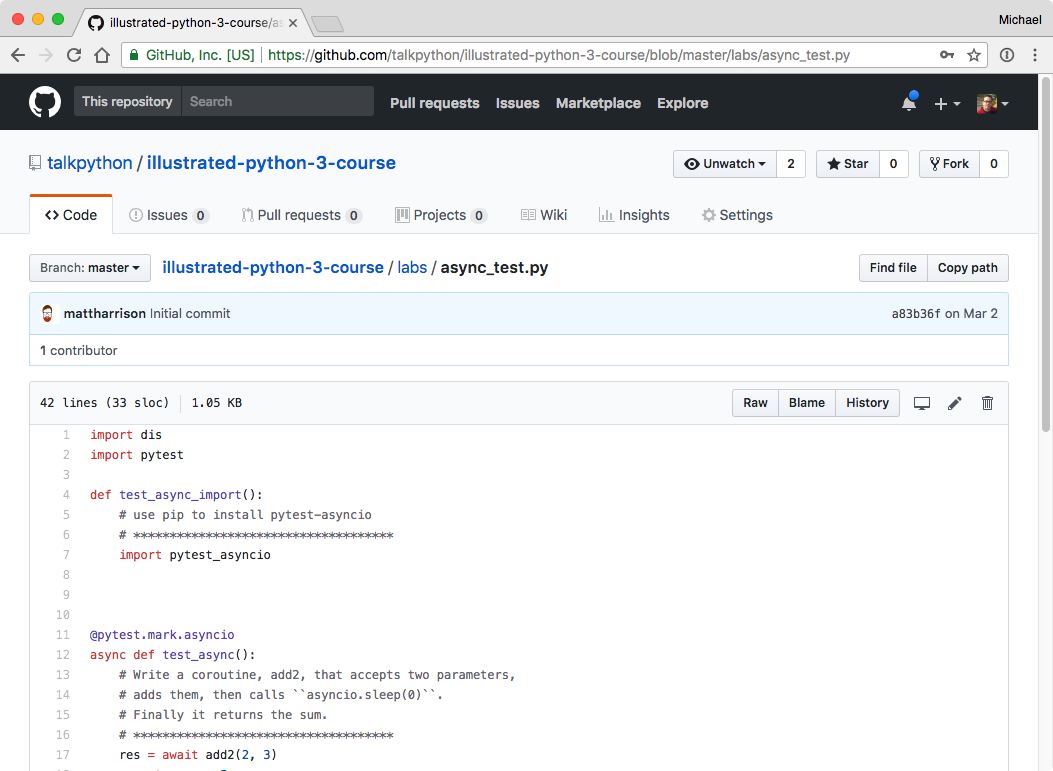

Also, I want to give you this GitHub link here.

This will give you all the resources for the course.

So keep this handy while you're going through the course.

|

|

|

transcript

|

3:53 |

In this screencast, we're going to look at how to install Python 3 on Windows 10.

Typically, when I use Python in Windows, I like to make sure that I understand how to use the prompt.

So in order to launch the prompt you're going to go down to the bottom of your taskbar and where it says type here to search you can just type CMD and then we'll pull up command prompt and you can run that.

Now, you'll note here that when I type Python it currently says the Python is not installed, so we want to remedy that.

Let's go to the Python website and download Python 3.

We're at the Python website and you can see if you scroll down a little bit that there is a download link.

We're going to click Python 3.6.4 which is the current version at the time of this recording, you'll want to use whatever Python 3 version is listed there below.

Inside of here, you'll see a bunch of things listing peps and whatnot and you can scroll down and we'll get the files here at the bottom.

And typically, you'll probably want to either install a Windows 64-bit or Windows 32-bit, I am going to do a window 64-bit.

That should work on most modern machines.

There's a couple of different versions here.

There's what they call an embeddable zip file.

There's an executable installer and there's a web-based installer.

I'm going to download the executable installer.

So just click on that and download it.

This will download for a minute.

After that's downloaded, you'll see that there's a file here called Python 3.6.4 AMD we'll just double click on that and that should install Python for us.

Now, this is where you want to be careful.

You'll see that there are various ways to install it and one thing that you need to make sure is that you click this add Python 3.6 to path.

If you don't click that, when I type Python at the command prompt, it won't find it.

So I'm going to click that.

I'm also going to choose customized installation note that the normal installation is going to put it in this C:\Users\matt\AppData\Local\Pograms\Python\Python36 directory and I'm just going to say customize and I'm going to install for all users.

So it will put it in the C:\Program Files\Python36 it's going to ask if I want to install it, I'll say yes, it will think for a little bit and install Python.

Okay, it looks like it's installed.

I'm just going to close this.

Let's go back to our prompt here.

Remember type CMD down there and let's type Python.

You can see that after I type Python it's now put me in the Python interpreter where I can execute Python code, to get out of this, I type quit and that will put me back into the prompt.

02:39 Note that I can also do things such as type Python -m idlelib.idle and it will launch idle, this is a Python editor that comes with Python.

Note that a couple other things that it will do is that if you scroll down here you'll see that there is a Python directory here and you can click on this idle here to launch idle, which will launch idle that way, alternatively you can launch Python by clicking this as well.

This will open a command prompt with Python, but I really prefer just to go down here and type CMD to launch Python here and note that we'll talk about virtual environments.

When I launch Idle from the launcher here, we're using the system idle and when we start using virtual environments we'll probably want to launch idle or other programs from our virtual environment.

So rather than launch it from there, I prefer using this Python -m idlelib.idle to launch idle.

Great, hopefully after you've done this you've been able to get Python on your Windows 10 machine working.

|

|

|

transcript

|

2:05 |

Welcome to your course i want to take just a quick moment to take you on a tour, the video player in all of its features so that you get the most out of this entire course and all the courses you take with us so you'll start your course page of course, and you can see that it graze out and collapses the work they've already done so let's, go to the next video here opens up this separate player and you could see it a standard video player stuff you can pause for play you can actually skip back a few seconds or skip forward a few more you can jump to the next or previous lecture things like that shows you which chapter in which lecture topic you're learning right now and as other cool stuff like take me to the course page, show me the full transcript dialogue for this lecture take me to get home repo where the source code for this course lives and even do full text search and when we have transcripts that's searching every spoken word in the entire video not just titles and description that things like that also some social media stuff up there as well.

For those of you who have a hard time hearing or don't speak english is your first language we have subtitles from the transcripts, so if you turn on subtitles right here, you'll be able to follow along as this words are spoken on the screen.

I know that could be a big help to some of you just cause this is a web app doesn't mean you can't use your keyboard.

You want a pause and play?

Use your space bar to top of that, you want to skip ahead or backwards left arrow, right?

Our next lecture shift left shift, right went to toggle subtitles just hit s and if you wonder what all the hockey star and click this little thing right here, it'll bring up a dialogue with all the hockey options.

Finally, you may be watching this on a tablet or even a phone, hopefully a big phone, but you might be watching this in some sort of touch screen device.

If that's true, you're probably holding with your thumb, so you click right here.

Seek back ten seconds right there to seek ahead thirty and, of course, click in the middle to toggle play or pause now on ios because the way i was works, they don't let you auto start playing videos, so you may have to click right in the middle here.

Start each lecture on iowa's that's a player now go enjoy that core.

|

|

|

|

25:14 |

|

|

transcript

|

3:08 |

In this video, we're going to look at how to install the tool pipenv, which is the recommended way to install Python packages and create virtual environments in Python 3.

This is kind of tricky to install on windows, so I'll show you how we do it.

First let's launch a command prompt by typing cmd into the search box here and we'll see that we don't have access to pipenv right now, but we do have access to Python.

Okay, so we have Python installed, but we don't have pipenv installed.

So let's install pipenv.

Pipenv wraps virtual environment creation and pip utility into one nice little command line tool and in order to use it, we have to install it so we can install it using Python and saying -m I want to run the module pip install pipenv and we'll try and install it here.

When I'm running this command, it's installing it into the system Python.

It's going to think for a while but it's going to give me an error because it couldn't install it into a certain directory because it doesn't have access to do that, and that might seem annoying or problematic but it can be okay as well because what it allows us to do is sandbox our system install and not have any user trampling on top of it.

I want to install it, instead it's doing what's called a user install.

So I'll show you how to do that.

Rather than saying python -m pip install pipenv, I'm going to add a --user option here and this is going to try and install it again.

And it looks like it worked this time.

Let's try and run pipenv and we can see that pipenv did not run.

Why didn't it run?

It's because our path can't find it, because we did a user install the directory where a user install binary is installed is not in our path.

We can look at our path by saying echo %path% and we can see also where the path that the user install goes in by saying Python -m site --user-base.

So inside of this app data roaming Python is a directory that has our new pipenv guy, let's look at that.

It's going to say dir app data roaming Python and inside of Python there's a Python 3.6 directory and inside that, there's a scripts directory and that's where our pipenv executable is.

So what I want to do is copy this directory right here I'll just highlight it and hit enter and if I go down here into my search box and type env, that will allow me to update my environment variable.

I'll just click at the bottom there, environment variable click on path and say edit and I'm going to say new I want to add a new environment variable path and just paste in what I copied there.

I can say edit.

Okay.

Okay.

Okay, now note that pipenv will not work in this command prompt.

So I'm going to close it, but I'll launch another one here by typing cmd into the search box.

I should have access to pipenv now.

So this video showed you how to install pip in a local install and how to update your path so that you have access to it from the command prompt.

|

|

|

transcript

|

10:50 |

In this video we're going to talk about virtual environments and pip.

A virtual environment is a mechanism that Python provides to allow per project dependency.

When we install Python, we get what we call the system Python installed or if you have a machine that comes shipped with Python there is a version of Python installed and you can install packages into that, but you probably don't want to and we'll explain why.

Virtual environment allows us to isolate our dependencies based on a project, and it allows us to easily install and upgrade those dependencies.

One example of why we might not want to use our system Python is illustrated here assume that I'm working on a project that requires Django 2.0 and later a year or so after that, my boss comes in and tells me he wants me to work on a new project and I want to use Django 3.4 the current version of Django at the time.

If I've installed Django 2 on my system environment.

I now need to install Django 3.4 on top of that and that can bring in the whole bunch of dependencies and whatnot essentially making it so my old project won't work anymore, which could be fine, if I'm working on a new project.

But what happens when my boss says, oh, I need you to fix that bug in the old project.

Well, then you've got to go in and uninstall Django 3 and all the dependencies and install Django 2, it turns into somewhat of a headache.

So solution to that is to use these virtual environments and that allows us to on a per project basis create an environment for Python and so we can have one for our old Django 2.0 project and have another one for our new one that our boss tells us to create.

Using these virtual environments we can easily switch between these two different versions and use the appropriate one very easily.

Here's how we create a virtual environment.

I'm going to run Python with -m switch, the -m swicth allows me to run a module that's included in the standard library in the Python path, in this case it's going to run the venv module.

And we're going to pass in a path where we want the virtual environment to live.

This can be any path and I like to put it in a directory called .venv in the same directory as my project, I'll tell you why in a minute.

After we've created this virtual environment, it might take a while.

What Python is going to do is it's going to make a directory structure in there that has a binary directory where there's a Python and a pip in there and it's going to make a library directory and it's going to also give us a tool that allows us to activate our virtual environment and when we activate it, what it's going to do, it's going to shim in our path where our new binaries are found into our path variable.

So when you run Python, we're no longer running the system Python, but we're running the Python in a virtual environment.

And you can see on bash systems when we run this command source with the path to this new environment and then there's an activate script in there when we run that, we can see that our shell will tell us the name of the virtual environment we are in.

In this case, we can see that the env matches the env that we passed in to the command line.

On Windows, we do a similar thing, we can pass in the full path.

If you have multiple versions of Python installed you can use the full path to Python.

And again, we're going to do the -m with the venv module, and we give it the path to where we want to create our virtual environment.

Once we've created that virtual environment in Windows, because we don't have source we run this bat file here, which is in a scripts directory.

And if you run that, you'll see that that updates your prompt as well.

Just to clarify the -m swich, this will execute a module that's found in your Python path, why we use this instead of using a pip executable or virtual env executable is it allows us to make sure that we know which version of Python we're using to create our virtual environment on systems where you might have multiple versions installed.

this can be pretty handy.

Once we have a virtual environment what we do inside of it?

Typically we install things, so there's a couple commands we can say pip install foo to install package foo, we can create a requirements file if we want to we can say install -e and that will install our package in edit mode, it allows us to have our package in the Python path, but also update it and when we update it will get live code essentially in our Python path.

We can also say pip freeze and that will list all of the packages that are installed in a virtual environment and we can also uninstall libraries, which can be nice.

One thing to be aware of is if you've created a virtual environment just running pip doesn't ensure that you're going to install something into that virtual environment, you either need to be using the pip that's located in the virtual environment or have your virtual environment activated so that the pip for that virtual environment is shimmed in the path and that's what's getting called when you call pip, so just make sure that you know which pip you're running.

Let's talk about a tool called pipenv, as of post Python 3.6 pipenv is now according to the Python.org documentation the recommended tool for managing dependencies.

What is pipenv?

Pipenv is a tool that basically allows you to do virtual env and pip in one command line, we'll see some examples of that.

Now this is a third party tool, so it's not included in Python 3.6.

So you need to install it, again, we're going to use this -m module we're going to say Python 3 -m pip install and we're going to also use a --user option on the command line here what the -- user option says, is it says I want you to install this package, but I don't want you to install it into the system Python I want you to install it into a user directory that your user has access to but other users might not have access to.

It allows you to get around having to be root to install packages.

Now that might be problematic, because probably wherever Python is going to install this pipenv user package is not going to be in your path and you want the pipenv tool to be in your path.

So you're going to have to do some things after that.

Yeah, this is a little bit more convoluted and not quite as easy as it could be but bear with me and you'll only have to do this once.

You want to know where your user base is or where this user path is you can run this command here Python 3 -m site -- user-base and that will tell you the directory of where your user base is and on Unix systems, if you add bin to that or on Windows systems, if you add Python 3.6 scripts to that that will be the path where pipenv will be located.

So you'll need to do something like this on a Unix system in your .bash profile file, you'll need to update that and add into the path this new directory here and then you can source that and you'll have access to your user pipenv.

On Windows systems, it's a similar process though typically not done through editing files.

You need to go down to your search box and type env in there in the path environment variable, you want to update that and add the user base with the addition of Python 3.6 and scripts on the end of that.

That point, if you relaunch the command prompt by typing cmd in the search box, you should be able to type pipenv and have access to it.

Here's an example of using pipenv.

I've created a directory called blockchain, this is on Unix, but you can do similar things on Windows.

I'm going to change into that directory, and now, inside of that directory, I'm going to say pipenv install py.test what that does, is it goes out and because I called pipenv it's going to create a virtual environment for me and it's going to install py.test into that virtual environment.

If I look in the current directory after I run this, this directory was brand-new, I just created it, I'll see two files, I'll see a file called pipfile and the file called pipfile.

lock.

That's what pipenv has created for me.

It's also done some other things behind the scenes.

It's created a virtual environment for me.

Let's talk about pipfile.

Pipfile is a new way of specifying dependencies.

It supersedes the old requirements.txt file.

One of the nice things about pipfile is that it allows you to support a dev environment and a default or production environment in a single pipfile and this basically says if you're developing this library, then you'll want to install the dev dependencies.

If you're deploying it for production or whatnot, you'll just want the default dependencies.

There's the other file there, the pipfile.lock and that stores the installation details about what libraries you have and it has hashes and versions in there, so you can make sure that when you recreate a virtual environment using this file that you have the exact same libraries installed.

When you run this pipenv tool, it's also going to create a virtual environment and on my system, it threw it into this .local\ share\ virtualenvs directory and inside of there, it created a directory called blockchain with a unique identifier after it.

If you want to have pip recognize another directory, it will recognize a .venv directory that is in your current project, if you have this environment variable pipenv_venv in project set so you can set that using the set command in Windows or you can just say pipenv_venv_in_projet=1 right before your pipenv command and it will recognize .venv virtual environment if you've got one.

A couple commands for using pipenv you can say pipenv run Python and note that my environment here is not activated, my virtual environment, but because I'm using pipenv, pipenv has some logic to know that I am in a directory that has a virtual environment associated with it and so will launch the right Python there.

If I want to activate my virtual environment, I can say pipenv shell and that will activate it, note that this command will work in Unix and Windows.

A couple of other commands that we can do with pipenv, we can say pipenv --venv and that will give us the location of where our virtual environment is.

We can say --py and that tells us which Python it's going to run we can install a package and we can install it with a dev dependency by putting --dev after it.

Cool thing that we can do with pipenv is we can say graph and that will create a dependency graph to tell us what our dependencies depend on, we can lock our dependencies by calling pipenv lock.

And we can uninstall package as well.

I hope that you've learned a little bit more about virtual environments and pip and pipenv.

If you haven't tried using these tools, please try them.

Again, as I said, pipenv is now the recommended tool to use moving forward so you should get used to using that tool.

|

|

|

transcript

|

2:46 |

In this video, we're going to look at the venv_test assignment.

I'm on Windows, so I'm going to start a command prompt and I'm going to change into the directory where I have my Python files installed here.

I've got a labs directory here.

We're going to do venv_tests so let's just look and see what that says.

It says use pipenv to create a virtual environment and install pytest, run pytest on this file.

Right now I can't even run pytest because it's not installed and I want to create a virtual environment to do that.

One of the things that I like to do is to put the virtual environment in the same directory, and if you want to do that, you have to name your virtual environment .venv I can use either virtual env or I can use pipenv to do this.

I'll show you how to do it with pipenv.

So in order to do it with pipenv, we need to create an environment variable and the environment variable is pipenv_venv_in_project.

So we're just going to say that set that equal to 1, if we want to inspect that we can say echo %pipenv_venv_in_project and we can see that that is now set.

And since I have pipenv installed, I can say pipenv install py.test and it will think for a minute and it will create a virtual environment and then install py.test.

Okay, so it looks like it worked.

If you recall one of the things we can do is we can take pipenv --venv and it will tell us where our environment is and it says it's in this current directory.

Awesome.

If we want to activate it we can say pipenv shell and now we are activated, we're in our environment here, let's just type pytest venv_test.py and we can see that it now succeeds, it ran.

I will also show you that I should be able to import pytest and it seems to work here if I exit out of this guy and I say Python and I say import pytest I get no module named pytest, this is because I'm now using the system Python, I'm not using the virtual Python.

And we can see that we have created in our directory, I need to type dir, we have created in here a virtual environment in our directory called .venv simply by setting that environment variable and that's all there is to do for this assignment.

You just need to make sure that it runs, it doesn't give you any errors and we have done that so we're good to go.

|

|

|

transcript

|

5:31 |

In this video, we're going to look at the venv test assignment and we're going to do on Unix system so that'll work on Linux or Mac systems.

I've got the files downloaded from the labs here.

Let's look at the venv test lab and you can see there's the assignment in that comment under the test venv function that says use pipenv to create a virtual environment and install pytest activate your virtual environment and run pytest on this file by invoking Python venv test.py You'll note that this will run pytest because at the bottom there we've got the check for if the __name__ attribute is the string __main__ import pytest and invoke pytest.main on the file.

So let's do that, I'm going to create what's called a user install.

If you don't have access right to your system or install into your system Python, you might need to do a user install, it has a few more steps, so this is how we do it.

We say Python 3 -m pip install --user and we're going to install pipenv.

Okay, it looks like that installed.

Now what I need to do is be able to access the pipenv command-line utility and because I installed that in a user install, Python installed in a certain place, I'll show you where it came.

Python -m I'm going to invoke the site module and say user-base, that says that it put it in my home directory in library Python 3.

So in there, I should see a bin pipenv executable.

Let's see if that exists in the bin directory and there is a pipenv.

So now what I want to do is I want to make sure that this bin directory is in my path.

So I'm going to update my .bash profile.

I'll just show you how I update it.

It looks like that, and once you've updated that you can source that file and you should be able to say which pipenv and it should tell you that it's using the user-installed pipenv.

Great, at this point, I'm ready to use pipenv and note that I'm in my directory, where I want to create the virtual environment, I want to do it in the lab directory.

If you don't create it from inside the directory you want to you might have problems activating it automatically using the pipenv tool.

So make sure you're in the right directory here.

All you have to say is pipenv install pytest.

So it says it's creating a virtual environment for the project and it's installing pytest.

You can see up at the very top it says where the directory is in the virtual environment, it's in my home directory in the .local folder.

So if I just list the contents of the home directory, I'm not going to see that because it starts with a period so I have to do an ls-al to actually get the local directory there.

And you can see up at the top here, there's a .local directory there.

Okay, so inside of that local directory, there's a shared directory and then there's virtual environments inside of there and it created this guy right here, talkpy3 -labs and if you look, there's a bin directory and there's an activate guy in there.

We're not going to run this the traditional way, we're going to use the pipenv tooling to activate this.

So how we activate with pipenv is we say pipenv shell.

And you'll see that it says it's spawning an environment and it says use exit to leave.

So in this case, we don't use deactivate which is the traditional virtual environment command to leave the shell we're going to use exit and also note that it updated our prompt here and it said that we're using this environment here.

You'll also note that when we ran pipenv, it created these two files here pipfile and pipfile.lock Let's just look at pipfile and you can see that it says that it has installed pytest, we can look at the lock file.

The lock file has hashes for all the different libraries that we've installed.

So if we want to get specific libraries reinstalled, it's going to check those hashes to make sure that they're the same versions, nice little feature here.

Okay at this point, I should have pytest, let's see if pytest is found and it's found in my virtual environment, cool.

Let's say which Python to make sure that I'm using the Python from my virtual environment, which Python, and in this case, I don't need to say Python 3 anymore because when we created the virtual environment it made an executable called Python that is Python 3 there.

I can also say Python 3, but those should be the same, just to show you if I type Python now it says Python 3.6.

Okay, and finally, let's run venv test and it says it ran one passed in 0.1 seconds.

So that's how you create a virtual environment and install pytest using pipenv.

I'm also going to exit from this.

I'm going to say exit and you'll see that now I am out of my virtual environment.

So this video showed you how to use pipenv to create a virtual environment and to install files, how to activate the virtual environment and how to exit from it.

|

|

|

transcript

|

1:17 |

In this video I'm going to show how to run the venv test file using idle in case you don't have PyCharm and you want to use another editor, this is how you would do it from another editor.

So the first thing I want to do is I want to activate my environment.

So I say pipenv shell and that will activate my environment now, I want to launch idle here, so I'm going to say Python -m idlelib.idle.

If I don't activate my virtual environment first and I launch idle I'll get the system idle that might not have pytest installed.

So I want to make sure that I have pytest installed.

Okay, at this point, idle has launched.

Let's open our venv test file, there it is and let's just come up here to run and say run module and note that over here in the repl, we got some output and it says it past one in one second.

So because we have launched this from a virtual environment that has access to pytest, we're able to run this from pytest.

All we have to say is run module.

|

|

|

transcript

|

1:42 |

In this video I'm going to show how to use PyCharm to run the venv test file.

I'm going to use the environment that we created using pipenv.

So I'm going to say create new project, it's going to be a pure Python project and it's going to point to the labs folder where I have the labs and note I'm going to say use an existing interpreter.

PyCharm will happily create a new virtual environment for us if we want to, but we're going to use an existing one, so we have to click the little gear here and say add local and let's make sure that we use the right one.

So I'm going to go into my home directory and I'm going to go to my .local and in my shared directory there is labs and note that it's looking for the Python executable there so I need to come down here and click on Python right here.

Okay, at this point, I'll hit create and it will say the directory labs is not empty, would you like to create a project from existing sources?

Yes, let's do that.

Great.

Here's the file right here.

Let's run this and see if it runs.

So to run it, it's going to index, we'll let it index for a while.

Okay, now let's run this here.

So I'm going to say run venv test, and it looks like it ran, note that it said it used the virtual environment Python to run it and it ran it and the test imports pytest so it looks like pytest is working.

This video showed how to use PyCharm to create a new project using an existing pipenv environment and to run a test, note that I can also right click here and say run test again and it will run it again.

|

|

|

|

43:50 |

|

|

transcript

|

15:28 |

In this video we're going to talk about unicode.

There are a few terms that we need to know before we can understand unicode and how Python handles it.

So let's talk about these terms.

The first term is a character and a character is a single letter something that you would type and it would print on the screen.

There's a little bit of a vagary between character and glyph glyph is the visual representation of said character.

So if we think of the character A in the English alphabet A is a single letter and there's a visual representation of A actually uppercase or lowercase.

So the glyph would be the representation of it, a is the actual character.

There's also what's called a code point and a code point is a numeric description of a character.

And each character or glyph has a unique numeric description.

Typically this is represented as a hex number and this is also where unicode comes from.

This is a universal code that represents this character or glyph.

Another term that we need to know is an encoding encoding is a mapping, a byte stream to a code point and so we'll understand this little bit more, but basically, you can think of a code point as a universal way of understanding something and when we want to tell someone else about it or tell a computer or send it over the network, we encode that character into some encoding, so typical encodings will include ASCII or utf-8 there are other encodings as well, we'll look at a few of them.

Here's an example.

So there's a character called Omega and it has a glyph and it looks sort of like a horseshoe Ω you might be familiar with it if you've done some physics, it has a code point, so the code point, we put a capital U in front of it it just stands for unicode, and the code point is 2126 note that that is a hex number.

There are also a couple encoding represented here, so one encoding is the byte string consisting of e2 84 and a6, this is the utf-8 encoding for the Omega character or glyph or the 2126 unicode code point.

There's also a utf-16 code point, ff, fe&!

at the end.

Note that these are two different encodings and their byte streams look different.

Here's how we do it in Python.

One thing to be aware of in Python 3 is that all strings in Python are unicode strings we'll talk a little bit how they're represented internally but if I have the glyph, I have a way to type it I can just type it into a string.

I can also copy and paste it from a webpage or whatnot.

If I don't have the glyph or I don't want to type it but I do have the code point I can insert that by putting a \_u or _U depending on how long the hex string is if the hex string is 4 characters, then I use an _u or a lowercase u if the hex string is longer than 4 characters then I'm going to put an upper case U and I'm going to left pad it with zeros untill I get to 8 characters.

I can also use the unicode name and in this case the name is Ω sign and I put a \N and then I put the name in curly braces.

A fourth way to get this unicode string is by passing in this number here and 8486 is the decimal version of 2126.

So if I pass that into the chr function that will give me a character from an ordinal number and that's the unicode ordinal.

Note that I can print this out to the screen and it will print out the Omega character and I can test if all these characters are indeed equal or equivalent to one another and they are.

Another thing that you might want to be aware of in Python is a module included in Python called unicode data.

And if you have unicode data, you can pass in a single string character into it and it will tell you what the name is.

So in this case, we have the Ω character in there and unicode data.name says that unicode name of this is Ω sign.

Let's look at another example really quickly.

There's a character called superscript two and that's if you're familiar with math, like you said x2 the squared would be the glyph the number 2 raised up slightly higher is the superscript two it has a unicode code point, in this case, it's the hex characters 178 and we can see two encodings here, here's a utf-8 encoding and we can also see a Windows 1252 encoding.

Now, where'd you get these code points?

Where do you understand what the master data is?

If you want to find them out, you can go to a website called unicode.org.

There's a consortium there that occasionally releases new mappings, but they have charts that you can download that map letters to unicode character codes or code points.

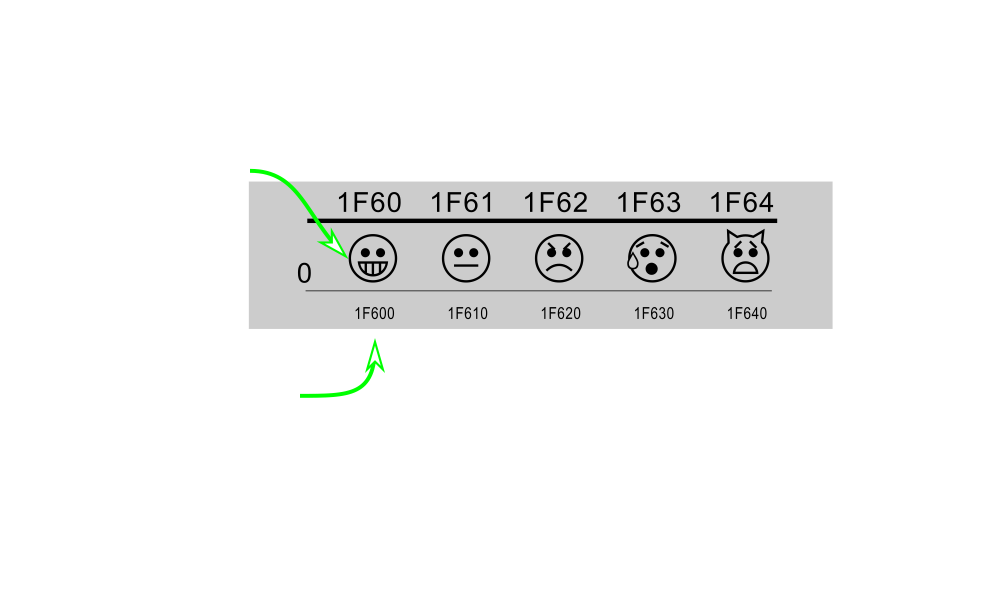

Here's an example of one of the charts.

You'll see something like this.

This is for the Emoji chart and you can see that there is along the top, we've got a hex number here and then we've got another hex number here on the left-hand side.

And when you concatenate those two you get this hex number at the bottom here, and that is the code point for the smiley face here.

And then the next one is the sort of normal face, and then there's a frowny face and a crying face and a surprised face.

The chart also contains a table that looks like this that has the code point name and glyph all in one place here.

Right here we have the code point 1F600, we have the glyph which is the smiley face and we have the actual name, which isn't smiley, but grinning face, note that it's capitalized and there is a space between it.

One thing to note is that the code point for this 1F600 is longer than four characters.

So in order to represent that using the code point we need to put a capital U and then we need to pad that with three zeros to get 8 characters in that case.

We can also use the name with a \m If we have access to the glyph or keyboard that types Emoji we can put that directly into a string.

Note that here I've also got the utf-8 version of the encoding of grinning face.

If I have that byte stream encoded as utf-8 bytes, I can decode it back to unicode using the decode method and the appropriate encoding that it was encoded as and I say decode to utf-8, I will get back the utf-8 string for that.

Let's talk about how things are stored in Python.

Everything internally is stored as two or four bytes and there's internal encodings, these are called UCS2 and UCS4, depending on how your Python was compiled will determine how your unicode strings are stored.

So one thing to be aware of because all strings in Python 3 are unicode strings, and these are stored as UCS2 or UCS4 byte strings internally, there's typically a 2X to 4X increase in the size of memory needed to store strings in Python 3 versus Python 2.

In practice, that doesn't really make so much of a difference on modern machines unless you're dealing with huge files, but just something to be aware of.

Also note that bytes in Python 3 are not the same as Python 2 strings.

So bytes in Python 3 are simply arrays of integers.

Let's talk about encodings a little bit more, encodings map bytes to code points.

A common misconception is that an encoding is a unicode number.

So utf-8 is an encoding.

This is not code point.

This is an encoding of a code point, just to be pedantic about that, utf-8 is an encoding of characters, it is not unicode per se.

Unicode is always encoded to bytes and the reverse is always true bytes are decoded into unicode.

Note that you can't take unicode and decode it, you encode it.

Also, the same with bytes— you can't take bytes and encode them, they are already encoded, you can only decode them to unicode.

Here's an example here.

We have the string with Omega in it.

And I created it with the code point and then if I wanted to encode that as utf-16, I say encode, I call the encode method on that and I pass in the encoding utf-16 and it returns back a byte string, again, note that c is a unicode string and the result of that is a byte string coming out of that.

If I want to encode c as utf-8, I simply call the encode method and pass in utf-8.

Note that these encodings are different, utf-16 and utf-8 have different encodings.

Now, once I have these bytes, I can go back and get the original string from it.

So I don't encode bytes, I always decode bytes and here I'm taking the utf-8 bytes and decoding them calling the decode method on them to get back a unicode string.

Here's a chart that just shows what we do if we have a unicode string, we always encode it to a byte string, likewise if we have a byte string, we always decode it.

We can't do the opposite, the byte string doesn't even have an encode method, likewise, the unicode string doesn't have a decode method.

There are some errors you can get when you're dealing with unicode, here's a pretty common one here.

I've got the Omega sign here in a variable called c.

And if I try to encode that as ASCII, I'm going to get a unicode encode error.

And the problem is that the ASCII character set doesn't have an encoding for this character.

And so that's what this error means, charmap, codec cannot encode character unicode 2126 in position 0.

This is a pretty common error when you start dealing with unicode.

So again, what this error means is that you have a string and you're trying to encode it to a byte encoding that doesn't have a representation for that.

There are some encodings that have representations for all of unicode, so utf-8 is a good choice, but ASCII does not, it only has a limited number of characters that it can encode.

Here, we'll trying to encode this Omega character again we'll call encode with the windows 1252 a common encoding that was found in Windows during the last century and we'll get the same error here.

Well, similar error, we are getting unicode encode error and that it can't be encoded into Windows 1252.

On the other hand, if we try and encode it into cp949, this is a Korean encoding, we get a byte string.

So this Korean encoding has the ability to support the Omega character.

Now be careful, once you have bytes encoded, you need to decode them typically.

Typically, you only encode them to send them over the wire or to save them as a file or send them over the network, that sort of thing.

But when you're dealing with them, you want them in utf-8.

So a lot of times, you'll get data and you'll need to decode it to be able to deal with it.

Here we have the variable core which has the bytes for the Omega sign encoded in Korean.

Now if we have those bytes and we call decode and we say I want to decode these bytes assuming that they were in utf-8 I'm going to get an error here, that's a unicode decode error.

And this says I got bytes and I'm trying to decode them as utf-8, but there aren't utf-8 bytes that make sense here.

So this is a unicode decode error, typically what this means is you have bytes and you are decoding them from the wrong encoding.

Note that we encoded as Korean, we need to decode from Korean as well.

Now even more nefarious is this example down here.

We have the Korean bytes, and we're decoding them but we're decoding them as Turkish.

And apparently the combination of Korean bytes is valid Turkish bytes, but it's not the Omega sign, it's a different sign.

This is known as mojibake, that's a Japanese term that means messed up characters, I believe.

And so this is a little bit more problematic, you've decoded your characters, but you have the wrong characters, because you decoded them in the wrong encoding, so be careful about your encoding, you want to be explicit here and you want to make sure that your encoding and decoding match up with the same encoding.

Here's a chart that represents the various things you can do with characters and the conversions that you can do on the single character.

Note that if we have a string here, this box right here is various ways to represent ASCII character T.

We can convert that to an integer by calling ord on it and we can go back by calling chr.

We can also get bytes by calling bytes with the encoding that we want and we can put our bytes into a file if we open the file in the right binary mode, if we have string and we want to write to a file we need to just call it with the w mode.

There are a couple errors that you might see.

You might try and open a file for writing with bytes and you'll get an error, that's the type error, you have to use a string and not bytes if you're opening to write it in text mode.

Similarly, if I have a string and I open it in binary mode I'm getting an error that says string does not support the buffer interface.

So these are errors that you might see with an ASCII character.

This chart shows some of the errors that you might see with unicode characters.

Here we've got the string here which has Ω and we can see that we can encode it as an integer.

We can also encode it as bytes, in this case we're encoding it as utf-8 bytes.

Now note that if I try and decode this sequence as Windows 1252 that will pass, but I'll get a messed up mojibake.

So again, we need to make sure that this decoding has the same encoding as the encoding call, which was utf-8.

We also see some of the other errors that we have if we try and encode with a different encoding that's not supported, we might get a unicode encode error.

So Windows 1252 or ASCII, those both give us errors and know that we can't call decode on a string, we can only encode a string.

So those are some of the things that you need to be aware of.

Typically, if you get these unicode encode errors, that means that you're trying to call encode and you're using the wrong encoding there.

So try and figure out what your encoding is.

Common coding these days is utf-8.

Okay, we've been through a lot in this video.

Hopefully, you have some understanding of how Python handles unicode and how we can change these unicode strings into byte strings to serialize or send over the wire.

Hopefully, you also understand some of the errors you might run into and how to deal with those errors.

If you're sure what your encoding is, that can eliminate a lot of the issues that you might run into.

|

|

|

transcript

|

3:53 |

In this video, we're going to look at unicode test, let's open that up in the editor that you want.

I'm going to run it.

You can run it from your command line by just invoking Python on the file, or you can in PyCharm right click and say run you should get three errors here.

Let's go to the first error.

On line 10 we get a name error, so here's line 10.

And in this function, it's called test 1.

It says the following line is from Yu Lou Chun by Dao Ren Bai Yun.

There's a link to Project Gutenberg there, it says convert the line to utf-8 bytes stored in the variable utf-8_txt.

So up here we have a unicode string and we're going to convert that to bytes.

Let's see how we do that.

We're going to make a variable called utf-8_text is equal to and on text, we need to call the encode method.

So we're going to encode the string and we're going to encode it as utf-8 bytes so we can say utf-8, and that should give us a new variable that actually is bytes.

Let's run this and see if it works.

Note that our test here is just asserting that the last five characters are these bytes.

It's also asserting the length of the bytes.

Okay, so we have one that's passed now.

So the thing to remember is that if you have a string, a unicode string if you want to change it into bytes that process is called encoding, you don't decode a string, you decode bytes back into a string.

Okay, here's another line or probably the same line convert the line to big5, another Chinese encoding and store it in big5_txt.

So big5_txt = txt so we have bytes here and we want to encode those bytes as big5.

Let's run that and see if that works.

Okay, it looks like it worked, we have 2 passed, one thing to note is the length of the big5 encoding is 74 bites on that same string versus above, when it's utf-8 encoded it's 111 bytes.

So there are some compromises that utf-8 makes but in general, utf-8 is one of the most widely used encodings on the internet.

So it's a pretty good encoding to use even though it might be a little bigger than other encodings.

Okay, test three, the following is utf-8 bytes decode it into a variable result.

So we have some bytes here and we're going to make a variable called result and we're going to take our unknown bytes and we're going to decode it.

Again, we don't encode bytes, bytes are already encoded for us.

Okay, let's run this and make sure it works It looks like we're good to go.

So let's just for fun put a little break point here and see if we can see what unknown is.

I'll move the break point down one level here Okay, here's result.

And if you look at result, it says that snake makes your head and then it says spin upside down.

Okay, cool.

Thanks for watching this video.

Hopefully, you have a better understanding of unicode and bytes and the conversion between those two.

Again, if you have a unicode string, you encode those as bytes, and if you have bytes, you decode those to a unicode string.

|

|

|

transcript

|

3:15 |

In this video we'll discuss unicode in files in Python 3.

We talked about unicode in Python 3 and that Python 3 handles unicode natively and the strings are natively unicode.

One thing to be aware of is that when Python reads in a text file, it's going to use the system encoding to determine what the encoding is on that file.

So you can run this command right here locale.getprefferedencoding with faults passed in and it will tell you what the encoding is on your system.

Typically, on most systems that's utf-8, if that's not the case, you should be aware of that.

And in any case, you should be explicit about what your files are encoded in.

Here's an example of being explicit with writing output.

I have a unicode string that has the ohm (Ω) character in it.

Again, ASCII can't handle this, but the cpe949 encoding can, that's a Korean encoding.

And so I'm going to make a file called ohm.core and I'm going to write to it, note that I'm calling the W mode, I'm not saying binary because I'm writing out a string to it.

So if you're writing out text, you only open a file in read or write mode not in binary mode.

And then I specify the encoding being explicit here and I'm saying that I'm going to encode this string as the Korean cp949 encoding and then with my file, I can call write and write my data out.

Now, this is a case where if I tried to read the file without specifying the encoding the encoding on my system again is utf-8 and if I'd simply try and open the file for reading and read from it, I'll get a unicode decode error that the utf-8 codec can't support that byte sequence.

That's because there is some combination of characters in the Korean byte sequence that utf-8 doesn't know how to decode but if I specify my encoding here and I'm explicit then I can read that data back and get back my original string.

Now, this used Korean, typically, most files you're going to see these days are utf-8.

So this just shows us an example of being explicit by being explicit, we can get around these encoding issues.

If we happen to have binary data, note that binary data is what we send over the wire or what we write to files.

If we have binary data, we don't specify the encoding here.

So here I'm saying I'm going to write a binary file and I'm specifying the encoding and Python throws an error and it says the binary mode doesn't take an encoding argument.

Again, we want to be explicit here and remember that binary is what we send over the wire over the network on a file and that is already encoded so you don't need to specify an encoding, it's a sequence of bytes.

If you open something for binary it's just going to lay down that sequence of bytes.

So I hope you understand a little bit more about reading and writing files that have alternate encodings in Python.

One of the best practices of Python is being explicit.

So when you're writing a text file you want to be explicit about what encoding you're using, especially if you're using characters that aren't ASCII or commonly used characters.

|

|

|

transcript

|

1:22 |

This video will talk about Unicode identifiers.

These came out in Python 3, and PEP 3131 introduced them.

The PEP states: by using identifiers in their native language code clarity and maintainability of the code among speakers of that language improves.

What does this mean?

It means that I can use a unicode character such as Ω I can say the Ω_val=10 if I'm talking about resistance or whatnot and then I can ask for that variable and Python 3 will give that to me.

Note that Python 2 does not like this and this won't work in Python 2.

Still have some issues, you can't start a variable with a number so I can't say to 2Ω_val, I'll get a syntax error there.

That's basically all I have to say about unicode variables.

I personally haven't seen them that often.

I'm in the United States and I mostly deal in an ASCII centric world.

One other thing that the PEP notes is that the Python language isn't going to use unicode variables either.

So even though the language supports it and an effort to make things simpler and easier for everyone to type and understand it's just going to stick with ASCII variables.

So I personally haven't seen anyone using this feature, though it's out there.

So if you've got a cool example, I'd love to see it.

Hit me up on Twitter or whatnot and let me know of a useful example where this is being used.

|

|

|

transcript

|

7:47 |

This video will discuss string formatting and PEP 3101.

Pep 3101 introduced the format method on a string, note that this exists in later versions of Python 2.

Prior to that, there was a c-like way of formatting strings so we would use percent and then some character after that typically s means I'm going to represent this as a string.

So here I have two variables and then we use the mod operator and pass in a tuple of things that we want to stick into these placeholders here.

So %s %s will take hello and stick it into the first place holder and in the second place holder world will pop in.

Pep 3101 we make this a method on a string format is a method on a string, and we're using curly braces to specify our placeholders here, in this case the 0 and 1, 0 refers to who's in the zero position and the 1 here refers to who's in the second position.

Again, Python is a zero based language, so 0, 1.

One of the nice things about the 0 and 1 which are completely optional is if I want to say Hello, Hello, Hello, I could say {0} {0} {0} I wouldn't have to put in Hello three times in as arguments to format.

Using the format method, there's a mini language and it allows us to do some things, we can insert some Python expressions.

So in this example, I'm passing in a dictionary into the format, and note that I'm doing an index operation here with square brackets here, so I'm saying on what's ever passed in I want you to do an index operation and pull age off and we get 50 there.

We can do a similar thing with attribute access, Here I say .age give me the age attribute of my p class and I'm passing in p there and he prints out 50.

So we can do some simple expressions there index and attribute access.

You try and call a function or do something like that and it's going to bark at you and say that you can't do that.

Here's an example of trying to call upper.

So we pass in a string and we want to say hey give me the upper attribute, but also invoke it with parentheses here and it gives me there's no attribute upper with parentheses following it.

So it's trying to do some things to not allow you to invoke or call arguments there.

We talked about position and here's just an example of using position if I want to say na na na na hey Jude, I can repeat na 3 times just by putting the position in there, note that I don't have to repeat na multiple times.

Now, there's a whole little language for string formatting here.

This is basically what can go where, this next slide here is the crib sheet that tells you what can actually go in the where so I'll go over these briefly, don't try and memorize all this you can refer back to this if you need to but a lot of the times you don't need all these different formatting options.

So a fill character, you can specify a fill character the default character here is a space and you don't need to put anything in there.

There's an alignment that allows you to center right or pad align things by using one of these four characters here, less than, greater than, equal or the caret.

There's a sign, we can stick in a sign here so we can put a plus a minus or a space if we have a plus in there, then we're going to put a sign in front of all numbers.

If we have a minus in front of there, then we're going to put a sign in front of negative numbers and with a space we will put a space in front of positive numbers and the sign in front of negative numbers.

We can put this hash in there and that just says if I've got a number that's a binary, octal or hex, I want you to stick 0b, 0o or 0x in front of those respectively.

There's an option here to do zero padding so we can stick in a zero there and if we have numbers we'll get padding after that, the default there is space so it doesn't stick in padding, but if you want to have zero padding on the left you can do that.

We can specify the minimum width if we want something to take at least 3 spaces, we can say 3 in there.

We can also specify a thousands separator, there is no thousands separator by default, but if we want to have a comma as a thousands separator we can put that in there.

Also, we can put a precision following a period, this is for floating-point numbers.

If you want to have five digits of precision, you can put .5 and that will give you the precision.

If you have a string that's going in, then this will give you the max length of the string.

So if I want to take up to 5 characters of that you can put 5 in there.

And finally, at the end here, we have a type.

There are various types that we can specify, these are all on the bottom here.

The default is s which means just give me the string representation of that.

We can also provide r to give us the repr.

There are various options that we can use for numbers that are integer numbers and here are some floating-point options we can use as well.

So e for lowercase exponent, E for uppercase exponent f for fixed point, g general, it changes between fixed point and exponent to try and be nice to you.

And n is a locale specific general version if you're in a different locale and a % sign will convert a floating point number to a percent.

So if you have .5 it will convert that to 50, so lots of options and things that you can do in there.

Don't memorize this, but you can come back and refer to this if you need to.

Here are some examples of formatting a string.

Here I say that I want to format Ringo in 12 characters and surrounded by asterisks here.

So we put a colon here, anything following the colon is the formatting options.

You can see that we have an asterisk, that's the fill character and then we have a caret and then we have 12 so we're going to take 12 characters and center that, caret means center.

Here's one here, formatted percentage using a width of 10 with 1 decimal place and a sign before the width padding.

And so we see there's a colon, after the colon is going to come our formatting options we're going to use an equal that says put the space after our sign there and we're going to use 10 characters and one character of decimal precision.

And then, since it's a percent, we're going to multiply it by 100 to convert it to a percent.

And so we see 44 divided by 100 would be .44 but this is going to multiply that by 100.

Here's a simple binary and hex conversion.

We just put :B and 12 as binary is 1100 12 as hex is c.

There's a little link at the bottom here pyformat.info, it's a nice website, you should go visit that and it has a bunch of examples of doing string formatting in what it calls the old school way of doing it, which is using the mod operator and the c-style placeholders and some examples of doing that with the newer format method, great examples in there, nice little resource to know about when you forget the formatting options and want to see some examples.

|

|

|

transcript

|

4:30 |

In this video, we're going to look at format test, open that up in your editor.

Let's look at the first problem.

It says create a string variable f1 to show a stock price.

It should have three placeholders, using the format method to print given data like a name that has a string, price that has a float and change that as a float.

It should be able to print a string that has name with the name inserted a couple spaces, then the price with a $ sign and the price formatted as a floating point, with two decimal places and the change format is %.

Let's see if we can do this here, f1 is equal to name: we need a placeholder for that, string name, so we'll just put a placeholder there, it looks like we've got some spaces here.

So we'll just copy those amount of spaces and stick them down here below.

And then, we'll say price and I need to put a $ sign here.

And this is going to be formatted as a float.

So I'm going to put: with two decimal places and f and put change and we're going to put our change in here.

It's going to have two decimal places and it's going to be formatted as a percentage.

Let's try this to see if it works, so just hit run here.

Okay, and so we've got f2 does not work but this part worked.

So we're down to the second part, create f2, it should look like f1 but have 10 spaces for each left-aligned placeholder.

So each one of these curly braces is a placeholder just copy this and plop it down here.

Let's change this to F2 and if we want 10 spaces in here, so in order to use 10 spaces, we can put a colon here and say left-aligned 10.

We just put a less than 10 at the front and that should give us 10 spaces here.

Let's give it a try and see if it works.

So let's run this again.

And it appeared to work.

So simply by putting that less than 10 at the front that says I want to make this APPL here take up 10 spaces and whatever's in there I want to be left aligned.

Okay, great, f3, it should have 3 placeholders and should be able to create the f1 or f2 strings the placeholders except the formatting string.

So this is sort of meta here.

So this is saying I want you to create something that could create this or could create this and the end user is going to pass in the contents of this.

So, let's see if we can do that here, f3 is equal to a string and we're going to say name colon and then we're going to have a placeholder here.

But we want to be able to have curly braces in there because we want the output of the format to have curly braces.

So we've got to put two curly braces, if we put two curly braces, then that's considered an escape.

So this would show our curly braces.

Then we need to put another curly brace pair inside for where we need to put our formatting.

And we'll do our spaces here and price and we're going to have to again do our curly braces and change 1, 2, 3, 1, 2, 3.

So this is how you include curly braces, you simply use two of them and then Python is not going to use those as placeholders, but rather it's going to just put a curly brace there.

Let's try it and see if it works.

Okay, it looks like it worked and you can see in the test here it's saying I want you to format on f3 with empty, with nothing, with a float, with two places and a percent with two places.

And then with that, I want to call format again, so that should have the placeholders now with the formatting strings in there.

And if you do that, we should get back to the original thing that we had back in f1 up above.

Hopefully, you feel like you understand formatting a little bit more.

There's a lot more to it.

Again, you can refer to the slide deck or the website that was referred to in the slide deck for more examples of how to do formatting.

|

|

|

transcript

|

3:44 |

In this video we're going to talk about literal string interpolation PEP 498 this came out in Python 3.6 and this is probably one of the most exciting features of Python 3, or one of the features that people really enjoy using and feel like maybe it should have been introduced quite a while ago.

The existing ways of formatting strings are either error-prone and flexible or cumbersome.

So here's the progression of the old-school style, which is the c style of formatting with the placeholders as percent as or whatnot the PEP 3101 with curly braces, and then this newer style which is called literal string interpolation.

You can see that there's a f in front of the string literal and then inside of these placeholders, we are passing in Python Expressions here.

Note that there is no format at the end here.

So it's just looking into my name space and seeing that there is a variable called coin and a variable called price and it's sticking those into those placeholders and we get this nice syntax for sticking in variables and having interpolation occur inside of that string.

So basically to get this functionality in Python 3.6 you stick an f in front of your string literal and then you can put an expression inside of your curly braces.

Here's an example, it just doesn't have to be a variable.

Here we are defining a function called to Spanish and inside of our string literal we are calling the to Spanish function here and we are passing in val here in the first placeholder and we're passing in val in the to Spanish call and we're getting a result there.

The Python 3 101 format specifier doesn't allow anything other than index and attribute access, but this allows you to put arbitrary expressions in there.

So there's a lot of power in there, you can go crazy if you want to but it also allows you to be a little bit more succinct with your strings and string creation.

This syntax also supports the PEP 3101 string formatting.

So if you put in a colon in there following the colon you can put a format specifier and that will indicate how you want to format whatever was passed in into the placeholder there.

So this says val is 12 and we're going to format that with the b or as a binary likewise this one down here, format to this hex.

The PEP specifies that you can use these f strings with normal Python strings.

You can also use them with raw strings, but you cannot use them with byte strings or unicode literals.

Those are the literals that have the u in front of them.

Another thing to be wary of is including a backslash in the literal string Python complains about that.

So if you want to get a backslash in there make a variable that has that backslash and make a reference to that variable, kind of an uncommon thing there but something that might get you.

Another nicety of this is that this is also faster.

So I've got some timings here on my machine, but you see that with the old c style you had pretty decent speed this slowed down when we called the format method and then when we put this as a literal string interpolation when we introduced the app, we get some speed up and we're actually faster than the old method.

So this isn't going to be a change that if you use this you're going to have to use half as many web servers or whatnot but it is nice that this feature is faster.

So I hope you've learned a little bit about f strings in here.

Once you start using these, you'll wonder why this wasn't introduced earlier.

It's a really nice syntax that allows us to be brief, but also be explicit.

|

|

|

transcript

|

2:31 |

We're going to look at fstring test, open that up.

The first part says assuming the variable stock and price exist make a variable res that has Stock and then whatever the stock value is then price and whatever the price value is.

You should use an fstring to do this.

So long behold here is stock and price.

So let's make a variable called res, that's an fstring.

So to make an fstring we put an f in front of our string and I'm going to say Stock here and then I want the value of stock, in this case I'll just put it in curly braces here and then price here with the value of price.

We could do some formatting here.

In this case, it didn't particularly define that we include any specific formatting.

Let's just run this and see if it works.

And it looks like it worked here.

So this will give us a string that looks like that, pretty cool.

The next part says assume the variable x exists.

And x is defined down here, create a variable answer that has the sin of x using math.sin to 2 decimal places.

And so it wants us to say x with the value of x then sin of x with the sin of that, use an f string to do that.

So the first thing we need to do is import the math libraries, we're going to say import math here.

Let's come down here and we'll make this variable called answer.

It's going to be an fstring, so we'll put that in there.

We need x and we'll put the value of x there and we want sin of x with math.sin of x and we need to format it.

So in order to provide formatting, we put a colon at the end here and we're going to format this as a float.

So, I believe we need to say .2f to get 2 decimal places.

Let's try that and see if it works.

Oh, I've got a typo here, I need to close off my embedding of my expression there.

Let's try again.

Okay, it looks like that worked.

So this shows you that not only can you put expressions in here, but you can put formatting with them as well using the formatting syntax.

|

|

|

transcript

|

1:20 |

This video will discuss explicit unicode literals.

These were created in Python 3.3 by PEP for 414 and if you're using Python 2 and porting it to Python 3, these can be pretty important, just in review, all strings are unicode in Python 3 by default that wasn't the case in Python 2, in Python 2 to make a unicode string or a unicode literal, you would put a u in front of your string.

So here's a little bit from the pep, the requirement to change the spelling of every unicode literal in an application regardless of how it is accomplished is a key stumbling block for porting Python 2 to 3 efforts.

So this is simply an inclusion in Python 3 to ease the porting effort from Python 2 to 3.

If you're only writing Python 3 code you can disregard this, but if you're using Python 2 and want to write code that works in both or migrate, this can be something that can be useful.

Here's just some brief code.

It shows that I can create a unicode string or a Python string by putting u in front of it and I can do the same thing without a u in front of it.

and those are equivalent.

Python 3 basically ignores the u and allows you to have it there for porting purposes.

So this is a brief section but useful for those who are migrating from Python 2 to 3.

|

|

|

|

17:58 |

|

|

transcript

|

1:38 |

In this video we're going to talk about integer division.

This came out in Python 3 PEP 238 introduced it.

In Python 2 when you divided a number by another number if you divided floats, you'd get back a float as a result, but for integers, you would get back an integer as a result.

And this is called floor division.

Guido writes in his Python history blog about how this was a mistake and Python 3 attempted to rectify this by changing the behavior of the division operator.

So in Python 3 slash does what's called true division there's a __truediv__ and double slash does floor division __floordiv__ So if I divide 2 by 3 in Python 3, I get point .6666, and if I say 2//3 then I get that floor division and I get back 0 as a result.

Note that floor division also works on floats if I say 2.0 divided by 3.1, I get .66, but if I do floor division on those numbers, I get 0.0 and because everything is an object in Python, if we want to we can go through the steps to show that you can invoke __truediv__ on an integer and __floordiv__ as well.

Now again, you typically don't do this, we typically don't call the dunder methods but they're there because in Python everything is an object and you can call the object methods if you want to.

Quick summary, in Python 3 when you divide two numbers, you will get a float as a result.

If you don't want a float then use a double divide to do what's called floor division.

|

|

|

transcript

|

1:53 |

In this video we're going to talk about PEP 237, came out in Python 3 and this is the unification of long and integer types.

From the PEP it says there is also the greater desire to hide unnecessary details from the Python user when they're irrelevant for most applications.

It makes sense to extend this convenience to numbers.

If you're familiar, in Python 2 there was a long integer type and a normal integer type.

And you could tell one from the other because when you print it out a long type there was an L after it.

Now Python supports arbitrary precision in integers it's only limited by the amount of memory you have so you can make pretty big integers if you want to.

Here's an example of creating big integers and we're calling the sys.getsizeof on our integers and just seeing that as we create bigger and bigger integers, that the number of bytes required to store those integers gets bigger.

And so what's happening here is that Python's doing an automatic transition from the native integers to these longer integers, but it's hiding that from you, you don't notice it.