20:35

transcript (1:37)

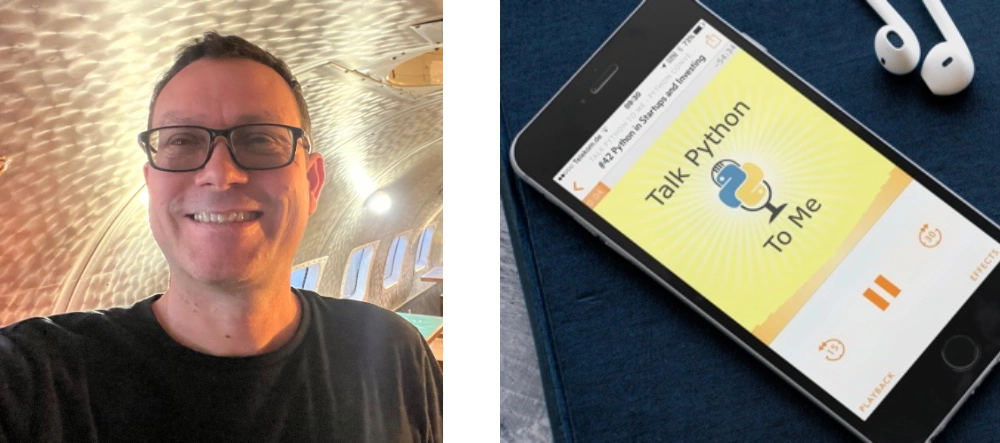

Welcome to Agentic AI for Python.

I'm Michael Kennedy.

I'm going to be your instructor for this course and your guide along the way.

I am incredibly excited to share Agentic programming with you in Python.

Look, I get it.

There is a ton of hype.

A lot of people are tired of the AI thing.

It's in every email client.

Who knows?

You probably have AI in your refrigerator these days, and who knows what value it adds?

Probably not much.

But this agentic programming, these agentic tool-using programming AIs are unbelievable.

I have had success that was unimaginable just five years ago.

It's truly unimaginable that there are tools that are this good that make you this productive.

And what we're going to focus on in this course is how you, as an experienced developer, can take the most advantage of this.

Not vibe coding or any of that buzzwordy stuff.

No, applying software engineering, using these AI tools as helpers along the way to build incredible solutions and applications and features.

I hope you're excited.

I'm going to try to show you, try to convince you how awesome these are by showing you many different concrete examples.

And we're going to build out a couple of applications throughout this course.

I think if you've not seen this before, I'm sure your mind will be blown at the end.

You're in for an amazing ride.

We're going to have a great time.

I'm looking forward to sharing it with you.

transcript (4:36)

Let's jump right in.

What is agentic AI in regards to programming tools?

Well, agentic AI, and this is mixed in with tool-using AI, is an entirely different category from what you might have experienced before.

Definitely, if you've used things like chat or GitHub Copilot, the supercharged autocomplete type of thing, that is one thing.

What we're going to see in this course is a wholly different thing, an entirely different level.

You've heard that AIs hallucinate.

You've heard that they can't write good code or they always go off the rails.

I think you'll be surprised how on target they will be and how effective they will be, because we're using this agentic, tool-using AI rather than just an LLM chat or a really powerful autocomplete.

Let me show you.

What we're talking about is absolutely not chat.

When ChatGPT came out, it was truly groundbreaking, and it still completely impresses me every day.

However, it is not what we should be using for programming.

Let me give you an example.

Here I just went to the Talk Python website and selected a chunk of the table that lists all the episodes.

And I said, hey, give me a regular expression that will parse out all of this information out of the table, give me the number, just the numerical integer value, and the title.

And he said, no problem, Michael.

I'm on it.

37 seconds later, he says, here are a couple of really excellent regular expressions you can use.

So I said, all right, well, let me try that.

And I fired up Python, and I pasted in the text and the regular expression, and then I ran it, and look at that.

Incredibly, incredibly, it is correct.

This is mind-blowing.

It's not a, hey, I found a regular expression and it might work.

No, it read the data, understood it, and gave us an exact regular expression.
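To make the regular expression request concrete, here is a minimal sketch of that kind of pattern. The sample rows and the pattern itself are assumptions for illustration; the transcript does not show the actual table text or the expression that chat produced.

```python
import re

# Hypothetical sample rows: the real table text copied from the site is not shown.
SAMPLE = """\
#3 Pyramid Web Framework
#2 Python and MongoDB
#1 Introducing the Show
"""

# One row per line: '#', the episode number, spaces or tabs, then the title.
ROW = re.compile(r"^#(?P<number>\d+)[ \t]+(?P<title>.+?)[ \t]*$", re.MULTILINE)


def parse_episodes(text: str) -> list[tuple[int, str]]:
    """Return (episode_number, title) pairs, with the number as an int."""
    return [(int(m["number"]), m["title"]) for m in ROW.finditer(text)]


episodes = parse_episodes(SAMPLE)
```

A pattern like this only works for the exact layout it was written against, which is part of the point being made: the fragment knows nothing about the rest of the program.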

This is so impressive, but this is not what you should be doing.

What is wrong with this?

Well, look, I took some unrelated code and data and I said, I need this thing.

Then I got a little fragment back and then I went and moved it over to my app or my Python REPL.

And I said, well, let's work with it now.

It doesn't understand everything that my program is doing.

It doesn't know how to talk to my database and see if the thing it actually built is consistent with the models that are stored in the schema of the database and all of these different things.

It just, it only knows a little bit.

It's super impressive what it can do with that little bit, but it doesn't have enough context and understanding and even the ability to verify itself.

It just gives you the answer.

It says, I looked at it.

Here's your answer.

Good luck.

Did it try it?

Who knows?

Probably not.

And if chat's not the answer, I guarantee you this crazy overdone autocomplete stuff that we get from so many of the tools these days, that's not it.

Look at this.

Our cursor is right here.

And we haven't even written a word.

Oh, you know what we're going to write?

Return tbody.

We're going to loop over the users and map them to the rows, and then we're going to loop over the columns and map them to the tds.

And that's impressive, until it's not.

I don't know how you feel about this, but I hate this stuff in my code, in my editor.

It drives me crazy.

Why?

Because it's 95% right or 90% right, which is amazing, but what do I do here?

Let's suppose that the class name is wrong.

And that the thing I'm mapping into, user bracket column, I want slightly different; maybe it's user dot attributes of column.

So I could either accept this and then go find the few places where it's wrong and adjust it, or I could just type it out.

But this auto-complete's constantly bouncing around in my way trying to do more, but you can see that what it's doing is wrong, but it's mostly right.

And it just takes away from the art of coding. It's in the way, right?

You've got to like kind of power through it and ignore it if it's wrong.

You want to accept, I don't know.

It's just, it's not good.

And this is not what I'm promoting.

In fact, most of the time I turn these kinds of features off even when my editors have them.

I want basic auto complete.

I hit dot.

What are the attributes or functions I can call there?

That's all I need.

transcript (8:43)

Now I can talk all day, but in order for you to appreciate this, you really need to see it in action.

And what we're going to see is a couple of animated screenshots of this AI working in action.

And then we're going to go build it out and go round after round of actually doing it and guiding it and tweaking it and really leveraging this.

But I want to give you a quick glimpse before we jump in.

Okay, so let's look at an example.

Let me give you a little background on the feature I'm trying to build with this example.

Over at Talk Python, we've got a bunch of structured Flask routes that generate different things.

So for example, when you go to an episode, there's a specific route in Flask that handles that, and it's data-driven in all of these things.

There's one for the RSS feed.

There's one for the list of episodes.

There's many, many pages.

It's a very much larger project than you would think from the outside.

But on top of that, there's a fallback CMS.

So if you request a page and it's not one of the particularly hard-coded ones from Flask, then it falls back to this database-backed CMS that we have.

And in there, it says, okay, this URL, do we have, is it supposed to be a redirect over to a resource or an advertiser's page?

Or is it supposed to show some particular content that's just coming out of the database?

Those kinds of things.

If all of that fails, if it's not a data-driven programming request, if it's not a CMS request, then it's something that's missing.

And we record that in our database to say, we want to know what pages people and sites and Google and so on think exist.

And maybe if it's really, really popular, we should just create it.

If everybody keeps requesting /rss when it's actually /episodes/rss, well, maybe I should just add /rss as a thing.
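The fallback chain just described (hard-coded routes, then the CMS, then recording a miss) can be sketched roughly as below. This is a framework-free illustration, not the site's code: the dictionaries, the `resolve` function, and the in-memory counter are all hypothetical stand-ins for the Flask routes and the database-backed CMS.

```python
from collections import Counter

# Hypothetical stand-ins for the real Flask routes and database-backed CMS.
HARD_CODED_ROUTES = {"/episodes/rss": "rss feed", "/episodes/all": "episode list"}
CMS_PAGES = {
    "/sponsor-offer": ("redirect", "https://example.com/offer"),
    "/about-the-show": ("content", "<h1>About</h1>"),
}
missed_requests = Counter()  # the real site records misses in its database


def resolve(url: str) -> tuple[int, str]:
    """Try hard-coded routes, then the CMS, then record a miss and return 404."""
    if url in HARD_CODED_ROUTES:
        return 200, HARD_CODED_ROUTES[url]
    if url in CMS_PAGES:
        kind, payload = CMS_PAGES[url]
        return (302, payload) if kind == "redirect" else (200, payload)
    missed_requests[url] += 1  # remember what people think exists
    return 404, "not found"
```

Counting misses per URL is what makes it possible to notice later that a path like /rss is requested often enough to deserve a real page.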

So the feature that we were going for here is to create a visualization, an admin front end to allow us to do a bunch of different things with those missed CMS requests.

All right, with that stage set, here's what I wrote, and let's watch it go.

Think about how different what you're about to see is from "I pasted some data in chat and said, hey, chat, do this."

So here's what I said.

We have our CMS service recording when a URL is not registered and we have to return a 404.

This appears in the log files as a CMS service colon, whatever, right?

Recording missed CMS page at some location.

I want to add a new section to the admin area that will pull all the CMS misses that are not skipped or suppressed from the database.

We can say, yeah, I know about this one, stop bothering me with it, just ignore it.

I want to display this in a table with searching and ordering.

I meant sorting, but whatever, right?

So this is what I said, and you can see it has that little one there: it actually is looking at the editor, but it's also running in the context of the application, so it knows our entire code base and all of our tooling and our virtual environment and so on.

What happens when I hit go?

It starts out and says, great, I'll help you add a new section to the admin area to display the CMS misses.

Let's start by exploring the codebase to understand the current implementation.

Looked at the CMS service to see what it's doing when it has a miss.

It looked at the admin area to see how those pages are structured.

Great.

I'm going to need to add a link from the admin landing section to this area.

So let me look there.

All right, great.

I got that.

Now let me look for a more sophisticated example of a table that you might be using.

Oh, we have this blob management, like an S3 sort of front end type of thing in the website.

That has a really interesting table.

That's a good match for what it's trying to build here.

So it's like, all right, that's great.

We'll maybe use that as a template.

Now we want the filtering, sorting, other types of interactions.

So let me look at the JavaScript file to see how the other table was doing that.

Now it's got all the information.

Instead of just jumping into it, it says, I actually broke this problem down into a bunch of small pieces.

What would you, as a developer, do?

You would break the problem down into small pieces.

I've got to create the page.

I've got to create the table.

I've got to create the JavaScript.

And then we're going to tie the JavaScript together, et cetera, et cetera.

That's just what it's doing.

You can look through all these different to-dos that it's done.

It said, all right, let's jam on it.

And off it goes.

Maybe 10 minutes later or so, I'm not sure.

It had pulled off all of these.

And I don't want to read the whole thing.

We're going to do a whole bunch of examples of this live.

But it did all of its steps one after another, verifying along the way.

And then it said, oh, look, I'm done with the code.

And so I'm going to run the Ruff formatter against your code.

Because I know you have a ruff.toml file, which specifies a configuration for how you like your code.

So maybe I wrote it one way, you like it another.

We're going to make sure whenever I write code, says the AI, that you're going to get it the way you like by me formatting it with your config.

And sure enough, at the bottom down here, you can see two files reformatted.

So it did convert it over to the way we like, which is amazing.

But it's not done.

It's not like here is your answer.

It said, let me just make sure everything's hanging together.

And it looked, it said actually Pyrefly, the type checker you're using, seems to be surfacing a problem with this update query bit when we're trying to interact with the database.

I think this is for when you say, here's a miss, but I want to suppress it or hide it.

Basically, this has got to update the database.

And it noticed that when it wrote that code, there's some kind of issue.

We're using the Beanie ODM talking to MongoDB.

And look at this.

It said, Hey, I went and I looked at the Beanie documentation for how this is supposed to work.

How does it know about the documentation?

We'll see later.

And it goes and it finds the documentation and says, oh, I see this returns such a thing.

Let me see how you're using it throughout the app.

It says, oh, it looks like actually there's a bunch of places where you're already calling update and that's working correctly.

It turns out the problem is with the type stubs for the ODM and not your code.

So let me just put a flag to ignore that for now.

What I did later is I went back and I actually used the AI to create a better version of the type stubs based on how you actually use them.

So those type errors went away globally and we save that in a place that all the editors can find, which is great.

But for now, I just said, it's not really a problem.

It's like a deficit of the ODM.

How long was that?

And if I think back, it's probably an hour end to end, playing with it, tweaking.

I pulled up the UI that it gave me and I said, oh, I don't really like how this is laid out.

There's a little too much padding here, not enough there.

I don't like that color.

And in the end, we have a super cool admin section here.

Look at this.

Showing 1,000, you know, it's paging, showing 1,000 of the first 180,000 CMS misses.

Ooh, you can mark them as malicious.

You can mark them as suppressed.

It filters them out.

You have a search, which is doing dynamic filtering against it.

You've got all this reporting here.

How cool is this?
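The behavior of that admin table (hide suppressed and malicious entries, filter by search text, sort, and show one page) can be sketched in a few lines. This is only an illustration under assumptions: the `CmsMiss` shape, the field names, and the `visible_misses` function are hypothetical, and the real feature runs against the database rather than an in-memory list.

```python
from dataclasses import dataclass


@dataclass
class CmsMiss:  # hypothetical shape; the real model is not shown
    url: str
    count: int
    suppressed: bool = False
    malicious: bool = False


def visible_misses(misses, search="", sort_by="count", descending=True, page_size=1000):
    """Return (one page of rows, total matching rows) for the admin table."""
    # Entries marked as suppressed or malicious are filtered out.
    rows = [m for m in misses if not m.suppressed and not m.malicious]
    if search:
        needle = search.lower()
        rows = [m for m in rows if needle in m.url.lower()]
    rows.sort(key=lambda m: getattr(m, sort_by), reverse=descending)
    return rows[:page_size], len(rows)
```

Returning the total alongside the page is what allows a summary line such as "showing 1,000 of 180,000".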

This whole feature was done almost entirely by just working with the AI.

I didn't have to write much code.

I gave it a little nudge here, a tiny correction there.

But this came from that agentic AI.

And I didn't give it that much more information than I did my regular expression.

The key difference, the big difference is it runs knowing all of your code, all of your tools.

It can go to your shell and run ruff.

It can talk to your database.

It can do whatever it needs to do as if it was a junior developer, a theme you're going to hear a lot.
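The "go to your shell and run a tool" part is the piece that lets an agent check its own work. A minimal sketch of that idea follows; `ToolResult` and `run_tool` are hypothetical names, and this is not how Cursor or any particular agent is implemented. The Python interpreter stands in for the formatter here so the example runs anywhere.

```python
import subprocess
import sys
from dataclasses import dataclass


@dataclass
class ToolResult:
    command: list[str]
    exit_code: int
    output: str


def run_tool(command: list[str], timeout: float = 60.0) -> ToolResult:
    """Run a command and capture what it printed so the caller can inspect it."""
    completed = subprocess.run(command, capture_output=True, text=True, timeout=timeout)
    return ToolResult(command, completed.returncode, completed.stdout + completed.stderr)


# The interpreter stands in for a formatter or type checker in this sketch.
result = run_tool([sys.executable, "-c", "print('2 files reformatted')"])
```

With Ruff installed, the same helper could be called as `run_tool(["ruff", "format", "."])`, and the exit code and output would tell the caller whether anything needs another look.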

So this is the kind of thing that we're going to work through in different incarnations of this course.

We're going to have maybe some examples where we start completely from scratch and some other examples where we start with existing code.

But the results we're going to get are going to be amazing.

I hope you're excited.

I think this is just such an amazing technology.

It really supercharges developers if they take the time to guide it, set it up the right way, and use it as a partner rather than just some thing you throw a bunch of commands or prompts at and just walk away.

transcript (5:10)

I don't know if you've noticed, there are a lot of AI tools out there.

There's a lot of hype out there.

There are a lot of editors.

Everyone and their brother is trying to add AI to their tools or create an AI tool so that you can use theirs and not some others.

So it's not a given which tool we're going to be using.

You may have recognized that little chat window we just did, but even that, it could be a handful, four or five different tools.

So what we're gonna do is we're gonna use Cursor.

Cursor is a fork of VS Code.

And to me, if you're a person who likes an IDE type of editor experience, this is hands down the very best thing.

So Cursor: nice editor, really deep integration in how the UI interacts with what Cursor does, how it controls the AI to find all the right elements of the project, and so on.

This is what we're going to be using here. The editor itself is free, but to use the AI, it's got a trial period and a free tier and those kinds of things.

Notable mention, the other one that I would strongly recommend if you are not someone who wants to use an editor like VS Code or PyCharm would be to use Claude Code.

So, a bit of a spoiler: we're actually going to be using Claude as the model from Cursor to do most of our work, right?

We use Claude Sonnet 4.5 at the time.

But Claude Code is more of a terminal-based experience.

There are plugins for editors, but it's primarily you go into the terminal and you get this chat experience for your code in a very similar way I already explained.

But all of that is happening in just a terminal flow experience, not within an editor.

So if you're not a VS Code PyCharm type person, then you can use this.

Almost everything that we cover in this course applies equally to Claude Code or to Cursor.

Because like I said, underneath, we're using the same model.

Notable mentions.

What else might you choose if you don't choose those?

There are some really good ones out there, and you probably haven't heard of all of them.

I'll bet most people taking this course have not heard of all of these.

So one that's kind of new and really cool is called Cline, C-L-I-N-E.

I'm not sure exactly.

It stands for something like that.

The idea is this is an open source plugin that goes into VS Code, PyCharm, and Cursor actually, though it doesn't use the Cursor subscription.

It's like alongside that.

It just happens to be in the same editor.

And the idea here is this is an open source system and they do not charge or even really control how you're paying for your AI usage.

You put your API key for like Anthropic or OpenAI or something in there and it just passes that through and it's just a tool that brings these things together.

How do they make money?

Because there is a pricing up there.

They charge for team tools basically.

But it's an open source, pay-for-whatever-AI-you-use sort of thing.

So this is actually really interesting.

It goes into a bunch of editors.

Very nice.

There's one of the OGs, which is GitHub Copilot.

And you can see they just added agentic mode.

Why?

Because it's so much better, right?

You can see right above the AI that builds with you, it says now supercharged with agent mode.

Okay.

We can also do Kilo Code, which is another open source editor, I believe.

I believe it's VS Code-like.

Also, you can see that it plugs into PyCharm and Cursor as well, and there's "Install Kilo Code" with three icons there.

And there's also Junie from JetBrains.

So if you're a JetBrains Pro subscriber and you have the AI package, you can use Junie in PyCharm, which is JetBrains' own agentic coding agent.

Now, be careful here.

JetBrains has two unrelated, disconnected AI things at the time of recording.

I doubt this is going to last, but there's JetBrains AI, which is like ChatGPT and autocomplete, and there's Junie, which is this agentic thing, and they don't go together.

So, interesting.

You can also take all of the three things, yeah, all the three things we just talked about and install them into JetBrains.

And JetBrains also now has a collaboration with Claude.

So there's a bunch of mixing of these things, but you'll see there's actually a lot of similarities and commonalities amongst them.

So a lot of choices out there.

You choose what makes you happy.

But for this course, we're doing Cursor backed by Claude AI models, Claude Sonnet 4.5 and others.

transcript (0:29)

Super quick, before we jump into the next section, I just wanted to tell you that the source code for everything that you see me type on the screen is available.

I strongly encourage you to go to the GitHub repository right there at that URL.

It also is in your player and on the course page and so on.

And star it and consider maybe even forking it just so you have a persistent copy for yourself.

So all the code that we create and all the little extras, you'll find them here.

Don't worry about writing them down.

12:48

transcript (5:38)

In this short chapter, I want to give you some concrete examples of things I've built with Cursor and Claude Sonnet.

And all of these are in production, or on PyPI, or wherever they are.

And they're well-structured, they're nice code, they're not just a bunch of slop.

No, they're really, really good.

And you'll notice a theme.

Many of these are things like, wouldn't it be nice if I could, but it's way too much work?

Or wouldn't it be nice to enhance this thing, but I've got more important things to do.

So instead of having a multi-file drag and drop interface, we're just going to have a button that you browse to pick the file, you know, that kind of stuff.

And we'll say, well, that's how it could be.

But with a little work from Cursor, it could be really, really nice.

Or it's a feature that I'm like, I just can't justify the time because it's going to take so much time versus what you get out of it.

So I want you to come into it with that mindset.

Obviously, we haven't met necessarily, but I'm sure in your life as a software developer, there are things like, I would love a utility that did this, or I would love a library for that, or something over here I could enhance, but it's been there for two years, and I just never got around to it.

You'll see with these tools, those become not things that are like a drag on you.

They become stuff that are just fun opportunities.

Like, oh, I got like two hours to work on something.

Let's try to fix that thing that's been a hassle for two years.

You probably can, let's see.

All right, here is a tremendously big challenge that I had.

So when I built Talk Python way back when in 2015, Bootstrap was all the rage.

And boy, boy, Bootstrap wasn't brand new.

No, it was Bootstrap 3.

Bootstrap 3 is no longer supported.

And now I have this problem.

I have a ton of code written in Bootstrap 3.

You know, the HTML is structured like, if you want a grid layout, it's got to go container and then row and then column, or whatever the grid settings are that I'm forgetting for Bootstrap 3.

And it's pervasive and deep within the HTML structure, the way Bootstrap works.

So whenever I want to add a new feature to this website now, I have to go and write old Bootstrap.

This is a hassle for me because I know it pretty well at this point, but also it's just not following the modern themes.

And every time I add a new feature with this super old framework, I feel like, well, I'm kind of adding more technical debt.

I've created yet another thing that is out of date on this old framework, okay?

I want to move to Bulma, which is kind of like Tailwind, but it doesn't have all the build steps.

It's like Tailwind, but super simple.

It's a utility classes CSS framework.
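To give a feel for why this kind of migration touches so much markup, here is a small sketch that rewrites a few Bootstrap 3 grid classes into rough Bulma equivalents. The mapping (`row` to `columns`, `col-md-6` to `column is-6`) is an assumption chosen for illustration and is far from complete; it is not the process used for the actual migration, which was done interactively with Cursor.

```python
import re

# Bootstrap 3 grid classes such as col-md-6 or col-sm-12.
COL = re.compile(r"^col-(?:xs|sm|md|lg)-(\d{1,2})$")


def convert_class(name: str) -> str:
    """Map one Bootstrap 3 class name to an assumed Bulma equivalent."""
    if name == "row":
        return "columns"
    match = COL.match(name)
    if match:
        return f"column is-{match.group(1)}"
    return name  # anything unrecognized is left untouched


def convert_class_attributes(html: str) -> str:
    """Rewrite every double-quoted class attribute in an HTML fragment."""

    def swap(m: re.Match) -> str:
        converted = " ".join(convert_class(c) for c in m.group(1).split())
        return f'class="{converted}"'

    return re.sub(r'class="([^"]*)"', swap, html)
```

A mechanical rewrite like this breaks down quickly (for example, an element carrying several breakpoint classes), which is why the structural and visual judgment calls are the real work.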

But I've got to rewrite a ton of stuff.

How much?

Well, let's see here.

Code stats for talkpython.fm.

Not the courses, just the podcasts.

For the HTML templates, we have HTML files, but mostly PT files, which are chameleon.

And we have 8,564 lines of that structured, super framework focused HTML.

We also have 8,000 lines of CSS.

Oh my goodness.

And we have 40,000 lines of Python code.

That's a lot of stuff.

Now the Python code doesn't have to change at all, but those 16,000 CSS and HTML lines do.
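Numbers like these are easy to gather for your own project. The sketch below counts lines per file extension under a directory; the function name and the default extension list are assumptions, and it is not the tool that produced the stats quoted above.

```python
from collections import Counter
from pathlib import Path


def count_lines(root, extensions=(".html", ".pt", ".css", ".py")) -> Counter:
    """Count lines per file extension for files under root."""
    totals = Counter()
    for path in Path(root).rglob("*"):
        if path.is_file() and path.suffix in extensions:
            # errors="replace" keeps the count going past any oddly encoded file.
            with path.open(encoding="utf-8", errors="replace") as handle:
                totals[path.suffix] += sum(1 for _ in handle)
    return totals
```

Calling `count_lines(".")` from a project root returns a `Counter` keyed by extension, which is enough to estimate how much markup a framework change would touch.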

And this is why for years, I wanted to move off of Bootstrap 3 to something more modern and nice and haven't.

You go over to Bootstrap, getbootstrap.com, and you look at it, it says, Bootstrap 3 has reached end of life.

Go, don't mess with it.

You need to get away from this.

And I can't even say, oh, I'll just rewrite it in Bootstrap 5, because they've changed their structure as well.

It's like kind of the same problem, just sticking with Bootstrap.

So there's not a great answer over there.

So I want to move to this thing called Bulma.

Amazing, right?

So I was sitting around some summer night, sitting on my back porch, messing with my computer thinking, huh, I bet Cursor could handle this.

So sat down, started working through this, and by middle of the next day, the whole project was done.

And this would take a week, weeks to get right.

Along the way, not only did I just make sure it transformed over correctly, I actually saw some parts.

This is kind of clunky.

I know that's the way it was, but let's make that a little bit better.

So I completely converted this over probably in clock time.

It probably took six hours, four hours.

I don't know, not that long.

And it's visual.

It's complicated.

It's incredible that this worked.

So this is one of the things that's been bothering me for years.

And I could never justify taking weeks of work to just rewrite the website to look the same.

Could I take four hours?

Yes, not only could I do it in four hours, it was fun to do it.

So this is example one, just migrating from one CSS framework to another.

It sounds small, but now when I make changes to the site, I'm building on modern stuff, not adding more tech debt.

transcript (5:02)

The second one that I want to talk about is enhancing the search functionality over at the Python Bytes podcast that I run with Brian Okken.

So over there, and it's like a news show, there's a bunch of headlines.

And it's really common that people come and say, hey, have they talked about this? They either go look at what we said about it, or suggest, hey, would you guys be willing to cover this topic so that we learn more about it, or whatever.

It's really cool.

And a little bit of inside baseball: I use it all the time when I'm preparing for the show, because I'm like, dude, we've done this for 10 years.

Like, have we covered this thing before, maybe five years ago?

Or is it actually worth covering some aspect from scratch?

Or is it an update to something we talked about?

So finding this, finding things through search is really, really important.

This one is better seen live.

So let's jump over there.

So I can come over here and I can hit search.

It just says, what do you want to search for?

Let's imagine I want to search for something like MongoDB.

There's 103 episode results.

Now, before I addressed this problem with Cursor, what would happen is you would see this, number 447, going down a rat hole, and then this, none of these things down here, and then this.

It was just a flat list of episodes which had that search result in it.

It's better than nothing, but it's not that great.

So what did I do?

Here's a couple of things that I said would be nice, right?

I would like to elevate the episodes where MongoDB is actually mentioned as a direct topic.

It's either in the title or it's one of those, right?

So there's a bunch of, you can see like this one right here.

Now we have this little highlight.

It says, this one, you actually talked about the Django backend for MongoDB, rather than it's just some other random match.

If you just saw the title, nothing about this tells you that it stands out from the others, right?

So, with Cursor, I added this UI element here and this functionality that says only show episodes with direct topic matches.

Look at this.

Now, instead of, what was it?

103 results, it's 11.

And you can see, look at those.

How awesome is that?
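The idea of elevating direct topic matches, and optionally showing only those, can be sketched as follows. The `Episode` shape, the field names, and the ranking rule are assumptions made for this example; the production search on the site is not shown in the transcript.

```python
from dataclasses import dataclass, field


@dataclass
class Episode:  # hypothetical shape; the real data model is not shown
    number: int
    title: str
    topics: list[str] = field(default_factory=list)
    show_notes: str = ""


def search_episodes(episodes, term, direct_only=False):
    """Return (episode, is_direct) pairs, direct topic matches first."""
    needle = term.lower()
    results = []
    for episode in episodes:
        # "Direct" means the term is in the title or in one of the topic headlines.
        is_direct = needle in episode.title.lower() or any(
            needle in topic.lower() for topic in episode.topics
        )
        mentioned = is_direct or needle in episode.show_notes.lower()
        if mentioned and (is_direct or not direct_only):
            results.append((episode, is_direct))
    # Direct matches sort first, then newest episode first within each group.
    results.sort(key=lambda pair: (not pair[1], -pair[0].number))
    return results
```

Keeping the `is_direct` flag in the result is what lets the UI draw the highlight and offer the "direct topic matches only" toggle without running a second query.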

And even better, watch this as part of what Cursor helped me do.

Official, so if I click on this, it doesn't take me to the resource, it takes me to the conversation on episode 90% done 50% of the time.

And it actually takes you right to that portion of the podcast, right?

This is exactly why this here, here's all the stuff we said, all the extra resources, not a ton of links, but migrations and models and so on.

Okay, this is a massive improvement, a massive improvement.

How long did this take?

From, hey, wouldn't it be better if we could show more stuff to changing the search results, to showing these things, to there's a whole performance story about this, right?

Look, you saw 103 results came back here.

Let's search for something else.

Turn on the dev tools.

Let's search for something like Django.

Popular. Oh, there we go, there's a little filter.

So if we look at this example here, how long did it take?

750 milliseconds.

This MongoDB one was even quicker; it took 84 milliseconds.

And let me run the Django one again: 400.

So you can see the second request for Django took about half as long, and going forward that result is going to take only half as long, because not only did we add this UI feature, but there's a ton of really interesting performance enhancements and caching using something called DiskCache, which is really incredible.

That covers things like the parsing and rendering of all this information, like what the topics look like: they start as Markdown from the database and end up looking like this.

So, a ton of features.

How long did this take me, from idea to finish, with the UI and all that?

45 minutes, maybe an hour with a little messing around and getting a coffee or something.
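The speedup on a repeated search comes from not redoing the expensive rendering work. As a rough sketch of that idea, the example below memoizes a toy Markdown-to-HTML step with the standard library's `functools.lru_cache`. This is a swapped-in technique for illustration: the site uses a disk-backed cache, which survives restarts, while `lru_cache` lives only in memory. The `render_topics` function is hypothetical.

```python
import functools


@functools.lru_cache(maxsize=1024)
def render_topics(markdown_text: str) -> str:
    """Turn a Markdown bullet list into an HTML list (toy stand-in for real rendering)."""
    items = [
        line[2:].strip()
        for line in markdown_text.splitlines()
        if line.startswith("- ")
    ]
    return "<ul>" + "".join(f"<li>{item}</li>" for item in items) + "</ul>"
```

The first call with a given input does the work; later calls with the same input are served from the cache, which `render_topics.cache_info()` reports as hits.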

That is crazy to look at that and go, wouldn't it be nice if our search were better and think, yeah, but it's not that important.

And it would take a couple of days.

If it took 45 minutes, you know, the calculation starts to change.

You're like, you know what, 45 minutes to have this over what we had, 100% I'd do that all day.

It was fun because it was cool to see it all just coming together so quickly and to like iterate and try all these things and learn about some of the caching that I pulled off.

So really, really interesting ideas here.

And just another example, like this is in production.

You can go play with it.

transcript (2:08)

Last but not least is a Discord community.

Well, with Discord, you want to have a pretty deep integration with things like who is a member, who is not, what's happening in the podcast.

So, for example, these days when I'm about to start a podcast recording, which we're live streaming on YouTube, it's basically a single CLI command that will then notify all the different locations, including the Discord community, like, hey, in 20 minutes, we're starting a YouTube stream with this guest, with these topics, you should come and join, right?

Making the membership and the community a little bit more tightly together, a little more cohesive.

So I got to write an entire Discord bot to make that happen.

I've never created a Discord bot, but guess what?

It's pretty much Flask and Python, and there's some nice libraries.

And Cursor and I, we got it completely working.

You can see right here, you've got the bots.

I can just go and ask for the bot status.

It's online and ready.

It's got a 16 millisecond latency, which is pretty good.

This one's been running for 23 minutes.

It cycles every few hours just to keep everything fresh.

All this information, all this Discord bot stuff, completely built hand-in-hand, me and Cursor and the documentation.

So that's it: amazing, concrete things built significantly with the tools and techniques that you're going to see throughout this course.

And a lot of my working with this has evolved and iterated and improved.

Like that used to work, but I can do so much better.

So I hope this chapter has inspired you to look at like, okay, I see how that can, that can make sense in my life, right?

That, that looks a little better than I expected of just going into chat and saying, hey, chat, give me a regex.

Or going and just auto-completing your way through with overwhelming and not always right auto-complete.

These are all meaningful features that took lots and lots of work and saved way more developer time than we spent on them to create them.

1:03:34

transcript (1:43)

It's time for us to dive in and start programming with Cursor and agentic AI and Claude Sonnet and all the things.

We're going to put much of what you've seen into action.

You've seen some really cool examples of things that I built that are in production or being used widely that are working great.

It's time to see how to go from zero to finish with that.

Really quickly before we get to it, though, I want to just set some expectations.

For some reason, a lot of people seem to get frustrated with AI if it makes a mistake.

I want you to think about this little AI programming helper of ours as maybe a junior employee.

Better than an intern, not quite a senior software engineer, but whatever they are, they're incredibly fast at what they're going to be able to do.

So you would never hire somebody and expect absolute perfection from them in their creative endeavors.

So I think we need to have the same perspective on AI.

Don't get upset if it gets a little thing here or there, not quite right.

If it gets 95% of what it's doing correct and you've got to work a little bit with it to get that last bit done, amazing.

That's as good as a junior developer who you've given vague specifications to and you go back like, pretty good, but this is not actually what we're looking for in this part right here.

So just set your expectation.

If we get it almost all perfect, that's actually incredible.

That's all we'd ask from an employee or a teammate or something like that.

So just keep that in mind.

|

|

|

transcript

|

1:01 |

Now, in this chapter, when we're building, we're just going to start building and we're going to start seeing what we can do with our agentic AI.

Some of it is going to seem like wizardry.

Like, why did it do that?

Like, actually, that is the right thing.

Why was it right?

It could have done anything, but it did what we wanted in a lot of different ways.

So I want to just build something with you.

Get hands-on.

We've been talking for way too long already.

It's time to write some code and build something amazing.

And then after we've had this experience, I'm going to go back and show you the five or six or 10 things that are happening behind the scenes that actually keep it way more on track and get it way more accurately using the tools that we would want it to use from the beginning along the way.

So just sort of do a suspension of disbelief science fiction style for a little bit while we go through this.

And then I'll bring the engineering side into the course and show you all the knobs that we have to turn to accomplish what we're accomplishing.

|

|

|

transcript

|

7:28 |

All right, here we are.

It's time for our first big project with Cursor and Claude and agentic AI.

So I'm right here in the GitHub repository code that you're going to be able to get to.

This is the one for the course, the one that's listed in the website and so on.

Now, of course, it's empty now, but by the time I'm done and published the course, it'll have everything that you see me do, right?

So let's make a directory for code and we'll go into the code.

And our first project is going to be, well, that Git refresh sort of app.

So I'm gonna call this something a little more playful and a little more fun.

Instead of just Git refresh, it's gonna be GittyUp as in update your Git.

So I'm gonna do that. I wanna go into GittyUp, and I wanna just have Cursor open right here, but I also wanna have a virtual environment; that's just the way we do things these days.

I have a nice little alias called venv, and you're welcome to borrow it.

And what it does is it just says: uv, please create a virtual environment with Python, using the one that you manage, in the folder named venv, and then turn it on, activate it.

Also, this will, even if you don't have Python installed, this will download it.

You might, if you've installed say 3.13 or 3.12, but not 3.14, you might need to put a version 3.14 in there, but I've already got that installed.

So we'll just make our virtual environment here.

Oh, whoops, I do have to actually set a value.

So I'll just put 3.14 there.

So venv, off it goes. We've got a new virtual environment, and you can see that it is active, the GittyUp 3.14.0 right here.

So now I wanna open this in cursor.

How do I do it?

Well, we have Cursor right here, and I could go pick this, and I could say open project, browse to it.

That's all well and good.

Or you could just say cursor dot, and it'll open up there as well.

Very nice.

All right, here we are.

Now, in a normal program, normal programming story, you would come over here, and I would make main.py, and we would start coding.

But that is not how we're going to start this time.

In fact, we're going to do something different.

We're going to start with AI, let it scaffold things up, and then we'll start adding our pieces as well.

So instead of adding that, I'm going to just add a README file.

And I've copied some text because you don't want to watch me write it.

So let me paste that here, and we'll see.

I'll put this onto the view.

If you hold down Alt or Option, you can click this little preview and get a nicer look of any README file here.

Always nice.

So we're going to program, and we're going to build GittyUp, updating your Git.

And so this is the only input that we're going to give to our AI plus a sentence or two to just tell it, hey, use that file, okay?

So let me just give you the quick overview.

This is an application which will work as a CLI tool for developers.

The problem, when working on multiple computers or with many team members and across many projects, it's easy to start working on an existing one and forget to do a git pull.

Then when it's time to commit the changes, you realize you have merge conflicts and more.

This is not fun.

The solution, this application will be run in a folder at the root of source control, source code.

It will traverse the current directory and all subdirectories.

When it discovers a Git repo, it will run the shell command git pull --all.

This will ensure that every project in the directory tree is up to date and ready to roll.

We want to make sure that we employ color to communicate to our users. So, hint: use the Colorama library for this.
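To make the README's description concrete, here is a minimal Python sketch of the core loop it describes: walk the tree, treat any folder containing a .git entry as a repository, and run git pull --all there. The function names (find_git_repos, pull_all, main) are illustrative assumptions, not the code the AI generates later in the course, and color output is left out so the sketch stays standard-library only.

```python
import os
import subprocess
from pathlib import Path


def find_git_repos(root: Path) -> list[Path]:
    """Return every directory under root that contains a .git entry."""
    repos = []
    for current, dirnames, filenames in os.walk(root):
        # .git is normally a folder, but it can be a file (worktrees, submodules).
        if ".git" in dirnames or ".git" in filenames:
            repos.append(Path(current))
            # A repository was found: do not descend into it any further.
            dirnames.clear()
    return sorted(repos)


def pull_all(repo: Path) -> bool:
    """Run `git pull --all` inside repo and report whether it succeeded."""
    result = subprocess.run(
        ["git", "pull", "--all"],
        cwd=repo,
        capture_output=True,
        text=True,
    )
    return result.returncode == 0


def main(root: Path) -> None:
    """Update every repository under root, continuing past failures."""
    for repo in find_git_repos(root):
        status = "updated" if pull_all(repo) else "FAILED"
        print(f"{status}: {repo}")
```

Calling main(Path.cwd()) from the root of a source folder would print one line per repository. Stopping the descent at each repository is a design choice of this sketch; it means repositories nested inside other repositories are not visited.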

All right, so we're going to use that definition right here.

This is the primary input to cursor, to Claude.

So that brings us over here to the right where we see new chat.

Now notice there's a couple of things here.

We have this tab open and it says one tab is active.

So, and the active tab is readme.

If for some reason we were somewhere else, we could just drop this and refer to it.

This tells cursor, we're talking about this readme specifically.

This thing is so simple.

It doesn't really need that.

But in a large project, this is a common thing you do.

So I'm going to give a little description here.

Also, incredibly important.

Notice that we're in agent mode.

Agent mode.

Not plan, not running in the background, not ask questions.

Ask is like ChatGPT style.

No, run in agent mode where you take actions, use tools, and go.

The other one is this probably looked like this if you just installed it.

Auto.

This is not your friend.

It's cheaper, but it's not your friend.

So the idea is that Cursor can choose the right, whatever model they think will handle this best.

And their incentives are to use the cheapest but sufficient model.

I don't recommend that.

I recommend that you go through and you pick out your model specifically.

So I like Claude Sonnet thinking, whatever the highest version of that is at the moment, but this space is changing fast.

Like this Claude Sonnet 4.5 came out last week.

Yesterday, Claude Haiku 4.5 came out, which is really fast, but not quite as good.

So one of the things you might do is get it started with the smart model and then turn that down.

But I'm going to just turn it on here and say like this.

So let's come up with a prompt that we can give to cursor to start with this readme and build out the application.

Okay, here's what I'm coming up with.

Let's see where this takes us.

We're ready to start a big project.

We're building a new application described in @readme.

And because it's this @ thing, it's actually referring to that particular file, not just something called readme.

I need your help to build this as a professional-grade app that we can share with the world.

This is important.

It'll put more error handling, maybe more CLI interactions and so on.

This is a big job.

I don't want to jump right in.

Your first task is to design and create a detailed plan, and then we'll begin coding.

Remember, plan only, no code.

This is really important.

It's going to put it probably just in the root because where else would it put it?

I'm going to make a folder called plans because, as your app goes through cycles of features and fixes and so on, you might want to keep these and refer back to them.

I'll say, save the plan and we'll give it this empty directory.

All right.

There it is.

The stage is set.

We've written our application description down in the readme here.

And now we've primed it with the prompt and the context that we need to go.

We've selected agent mode.

We have a thinking high-end model.

I recommend when you're planning, you always run a high-end model.

And if you need to turn it down for pricing or speed or other things, then turn it down, but get the best plan you can.

You'll see there's a lot that goes into it.

|

|

|

transcript

|

8:04 |

This is very exciting.

I am going to zoom in a little bit so you can see the words go by better here on the right because this text can get small when it starts running.

But it's our time to run this.

Notice over here, there is no plan.

If I expand this, the readme is just the readme that I pasted.

So let's run it and see what it comes up with.

You can see it's thinking through the steps.

It's pulling out the specifications, reading the requirements.

Okay, I'm gonna create a comprehensive plan for GittyUp.

Let me first read the readme, great.

Now I'll design the application.

And at this point, it's just writing the plan and I don't think we're gonna get a lot of feedback.

So it's gonna run for a second.

But while it is, it gives me a chance to talk about a couple of things here in the UI.

Notice first this 8.9%.

This number is how much of the context, the thinking space of the AI model, we are using versus its max.

You want to keep this smaller rather than larger.

The more it gets, the more confused it can get, the less it's able to pull in different information.

If it gets too big, it could forget the beginning.

There's a lot of stuff here going about this.

So this number is really, really important, but you can see that it's got plenty of space.

I find that less than 50% is definitely where most of my stuff lives. Even on large projects, a more common number might be 20 to 25%.

So we'll see things we can do to manage this, but you want to keep your eye on that number, as it makes a big difference in how the AI works.

And the larger that number, the more every request will cost you.

Well, there we have it: a 659-line plan was created.

And we can come up here and see what it said.

It gives us a little overview of what it's been up to.

All right, plan overview: the design plan covers all aspects of building this professional-grade CLI tool: requirements, technical architecture.

I'm going to show you the details in a second.

Quality standards like test coverage, different strategies, security considerations, like that one phrase made a difference, right?

It's a four stage process.

First, we're going to build an MVP, then enhance it with config settings and so on: strategies, stashing, like trying to pull when there are going to be conflicts.

Then polish, so we can distribute it on PyPI, documentation, so much.

It says six to 10 days.

If you were to hand this to a real human, that might actually be right, but we're not going to hand it to a human.

We're handing it over to Claude Sonnet.

Okay.

So that's great.

Notice also that it has keep all and it tells you what files are changed.

So if I were to open this, it actually shows you all the changes, but because it created the whole file, it's everything.

So it's not super interesting.

All right, let's look at this real quick.

Was the readme updated?

Nope, not yet.

All right, so let's look at the preview view of this.

All right, I'm not going to read you the whole thing, but I want to just go through and see what it's made and look at some highlights.

So it gives us the nice summary of what is it that we're going to build.

The core requirements are directory traversal to find the Git repositories.

It got this right.

The thing that we need it to do is look for the hidden .git folder.

That's the folder that holds all the Git stuff at the root of any Git repository.

That's the best indicator that the thing is part of a Git repository.

Pull execution is doing this on each discovered one.

Colored output, use colorama as I suggested, have different categories of colors.

Show the user which repos are being processed as they go.

Continue if one of them crashes, this is really nice, and display a final result.

Okay, and then we want to make it professional grade, so we have a bunch of CLI options, like dry run to see what would happen.

Don't go looking inside of things that are guaranteed to just have weirdness, that have a lot of files associated with software development, like node_modules, venv, and so on.

The ability to not go super recursive, quiet or verbose, all these things, right?
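As a rough illustration of the kind of command-line surface the plan describes (dry run, skipping noisy folders, limiting recursion, verbosity), here is a small argparse sketch. Every flag name and the default exclude list below are assumptions made for the example; the generated plan may spell them differently.

```python
import argparse

# Hypothetical defaults; the real plan may use a different list.
DEFAULT_EXCLUDES = ["node_modules", "venv", ".venv", "__pycache__"]


def build_parser() -> argparse.ArgumentParser:
    """Build a parser with illustrative (assumed) option names."""
    parser = argparse.ArgumentParser(
        prog="gittyup",
        description="Update every Git repo under a folder.",
    )
    parser.add_argument("path", nargs="?", default=".", help="root folder to scan")
    parser.add_argument("--dry-run", action="store_true",
                        help="show what would be pulled without pulling")
    parser.add_argument("--max-depth", type=int, default=None,
                        help="limit how deep the scan recurses")
    parser.add_argument("--exclude", action="append", default=None,
                        help="extra folder name to skip (repeatable)")
    # Verbose and quiet contradict each other, so only one may be given.
    volume = parser.add_mutually_exclusive_group()
    volume.add_argument("-v", "--verbose", action="store_true")
    volume.add_argument("-q", "--quiet", action="store_true")
    return parser


def excluded_names(args: argparse.Namespace) -> set[str]:
    """Combine the built-in skip list with any names the user added."""
    return set(DEFAULT_EXCLUDES) | set(args.exclude or [])
```

With this sketch, something like build_parser().parse_args(["--dry-run", "--max-depth", "2"]) gives an object whose dry_run and max_depth attributes the scanning code could consult before touching any repository.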

We're going to see great.

Clear visual hierarchy, non-intrusive, fast, safe.

This is really nice.

This probably kind of came up from the professional grade side.

Like, oh, professionals don't like to lose data.

Here's our technical architecture that it came up with.

So it's already said, we might want this to go to PyPI.

So instead of just putting it into a main file, we're going to put it into a structure such that it's a Python package.

This all looks really good to me.

I really want to highlight these little amazing pieces that come up during this course.

One of the big knocks on AI coding is that they write crap code.

They just jam a bunch of stuff in there.

They don't structure things well.

You just end up with a big ball of slop.

AI slop is what you're going to get.

I'd like to point out here:

Look at this structure.

Look at how nice it's going.

We're going to use a whole sub module for discovery, the Git operations, reporting, the models, and so on.

It's going to use data classes to pull that off.

It's going to break it apart like this, right?

We have a separate thing for coloring for the output kind of stuff, separate one for the CLI definition, a whole set of tests, tests focus on their areas.

Why is it so good?

Why did it do this so well?

That's part of the wizardry that will become engineering later.

This is not an accident.

Okay.

This, you can control and influence.

And this is really, really good.

I'm super happy with this.

If an employee, or if I, came up with this, I'd be like, yeah, that looks really good.

Nice job.

Okay.

And it talks about what each of the core modules in that graph does.

We don't really need to dive into that too much.

Talks about the CLI.

And what's really great about this plan, not only does it let me see what it's thinking, it puts it down so the AI knows step by step, it can refer back to the plan and I can change it.

So if I wanted to change verbose somewhere, I could change this to like wordy and put a W and maybe a couple of W's and three W's, I can say, look, we don't want to have a verbose idea.

We want to call it wordy.

Give it a little hint, though.

That means verbosity.

So you make the plan.

You review it.

You give it hints.

So this is not just fire and forget.

You get tons of input, review, and so on.

Okay.

There's our wordiness right there.

Let's keep going.

What else is in here?

Examples output.

So run this somewhere.

That's what it's going to look like.

Yeah, this looks fantastic.

verbose mode, what it's saying, error handling, how it's going to handle that.

Here's more of that professional grade, multiple exit codes, unit tests.

Let's see what else.

Configuration settings.

Amazing.

All right.

What else?

It just keeps going.

Here are the different implementation phases.

So see how it says phase one, such and such.

We're going to make really good use of this and the next steps.

All right.

So risk assessment.

This is fantastic.

It's historical documentation.

It's guidance and progress for the AI as it works.

It's our chance to review, have input and so on.

This is way better than I would have ever expected from an employee.

Give a junior engineer the job to go, hey, plan this out.

Would you have got, would you get this much?

660 lines, 659 lines of detailed, thoughtful analysis.

Maybe, maybe.

But certainly, this does not look bad to me.

This looks really, really good.

|

|

|

transcript

|

4:55 |

All right, we're ready to actually start writing code.

Remember that we just told our AI to plan out the project, but not to actually write it.

We said no code, just plan.

Remember that?

I do want to make a quick follow-up on that that I don't think I mentioned before.

I showed you, and you saw right here, there is a plan mode.

So we could go into this plan mode here and say, just describe it and not discuss having this plan concept.

There's some drawbacks to that.

If all your goal is like, I just want to knock out a thing and I want the AI to think before it acts so it breaks it into pieces, great, use this little plan mode.

So what it does is it builds, it does the plan just like this.

Then it creates a temporary view of it.

And then you say, okay, go ahead and build that.

But what you do not get is you don't get something you can put into source control.

You don't get something you can have across different machines.

It will be saved in this chat and you can refer back to it, but it won't be synced across different computers.

It won't have a permanent view.

It's very hard to come back to it three weeks later, right?

There's just a lot of benefits to just going, make a plan in a file that we can version.

Okay, so the plan mode is neat.

It's not as powerful as actually saving this stuff to a file today.

So that's great.

We're going to use that.

Now, the next thing is a couple of things here.

One, notice how if I go over to source control, it shows, take off, eh?

It shows that this readme is added and this is added.

When you're doing AI, when you're doing agentic coding, your source control game has to be spot on.

It has to be top shelf source control.

Now, this is not that hard.

It just requires a lot of focus.

Why?

Because even though this thing is really amazing, it can screw up stuff, it can make mistakes.

So we can use source control as a way to roll back, right?

You can be pretty fearless if you commit often.

You can just say, you know what?

I ask it to do something.

I don't like it.

I'm going to go just roll those changes back, or I'll go back to Git commits.

That will be fine.

So there's actually four different levels of commits that you can use as like save points to go through.

So here's the first one, I guess, which is unsaved.

This is a bunch of files sitting on a hard drive, not even committed to Git.

Normally, you might think this commit button is what you want next.

There's an intermediate stage.

So for example, this one, if we look at the Git diff, close these things.

We look at the Git diff, it's just brand new.

So I guess that's not going to be super interesting.

If there were changes, it will show you the changes that are here.

We can do what's called staging.

I can hit this to say stage all changes or just do it for one or the other.

And now if I make more changes, you can see the only one line is changed here.

And if I look at this one, it says that line changed.

What did it change from?

Not what's in the Git repository, just what's staged.

So there's full changes, staged changes, committed locally, and then pushed.

Those are the four levels.
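The difference between "changed" and "staged" can be checked from a script as well as from the source control panel. The sketch below is one way to do it under the assumption that the git executable is on the PATH; the helper names are made up for this example. It relies on two standard commands: git diff compares the working files to what is staged, and git diff --staged compares what is staged to the last commit.

```python
import subprocess
from pathlib import Path


def git(repo: Path, *args: str) -> str:
    """Run a git command inside repo and return its standard output."""
    result = subprocess.run(
        ["git", *args], cwd=repo, capture_output=True, text=True, check=True
    )
    return result.stdout


def unstaged_files(repo: Path) -> list[str]:
    """Tracked files whose working copy differs from what is staged."""
    return git(repo, "diff", "--name-only").splitlines()


def staged_files(repo: Path) -> list[str]:
    """Files whose staged version differs from the last commit."""
    return git(repo, "diff", "--staged", "--name-only").splitlines()


def discard_unstaged(repo: Path, filename: str) -> None:
    """Roll a file back to its staged version; the staged save point is kept."""
    git(repo, "checkout", "--", filename)
```

Note that brand-new files that were never staged are untracked, so they do not show up in unstaged_files; staging them once is what turns them into a save point you can roll back to.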

And we can employ those all along the way as we're building out with this AI, and it gives you just an insane amount of visibility into what the AI is up to and the ability to go, no, we're rolling that back.

We're rolling it back to what I had staged versus what was changed or versus what I committed versus what I didn't.

You really want to have, basically when I'm working with the AI, this is my view.

Source control changes as they unfold on the left.

The Claude Code, the agent, Cursor agent on the right.

And then I'm bumping around the code in the middle.

So you want to get familiar with this view.

Like, for example, I might not want this "more" anymore. I don't, but every other word there I want to keep.

What do I do?

Well, I can just hit this roll back.

You sure you want to discard?

You didn't lose everything. You just lost those changes, and neither were actually committed.

There's like these different layers.

Okay, so your source control and Git game have to be top shelf.

All right.

You got to really be on this.

So let's just say initial plan for GittyUp.

We don't have to sync them now.

That's something Git can take care of for us later.

Okay.

But these, these are now saved.

So anything else that we ask the AI to do will show up here, and we can accept it, temporarily save it by staging, save it more by committing it, and so on. Right, so this source control game: super important.

|

|

|

transcript

|

3:31 |

The next thing we want to do is just implement phase one.

But I want to talk about one more thing before I press go on the next step here.

So we want to know how much, what model should we pick here?

If we have lots of AI credits, choose the highest model that is reasonable.

Something like a thinking model and Claude Sonnet.

But if you're almost out of credits, but you still got to get work done, maybe you choose the Haiku one or even flip it back to auto.

Okay, how do you know?

So let me show you two things really quick.

So we go over here and say cursor settings and we search for the setting usage summary.

This is off by default or it's hidden by default.

Where are you?

It's hiding down here.

It's on auto, which only shows up when you're about out of credits, which is too late.

So say always.

And notice down here it says, you've used 50% of your credits.

And you can click on it.

It says you used $199 out of $400 of credit.

It's a lot of credit, so it's nice to have that show there.

The other thing is: is that a lot or not?

Well, what's your renewal date?

How many days until it renews?

Predicting that can be real tricky.

So I've created this library, this CLI thing called aiusage. It's in my GitHub here, but you don't even need to go to the GitHub.

What it can do is you take this percent, this 35 or whatever percent, and you feed it to it, and it'll run some linear predictions to say, if you keep going at this rate, you're going to have credits left over or you're going to be out of credits.
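The idea of a linear prediction can be sketched in a few lines of Python. This is not the tool's actual implementation, just a guess at the arithmetic under stated assumptions: the billing cycle runs from one renewal day to the same day next month, the renewal day is between 1 and 28, and spending continues at the average rate seen so far. The function names are hypothetical.

```python
from datetime import date, timedelta


def cycle_bounds(today: date, renewal_day: int) -> tuple[date, date]:
    """Return (start, end) of the billing cycle that contains today.

    Assumes renewal_day is 1-28 so that it exists in every month.
    """
    if today.day >= renewal_day:
        start = today.replace(day=renewal_day)
    else:
        last_of_previous_month = today.replace(day=1) - timedelta(days=1)
        start = last_of_previous_month.replace(day=renewal_day)
    # Jump safely into the following month, then pick the renewal day there.
    first_of_next_month = (start.replace(day=28) + timedelta(days=4)).replace(day=1)
    end = first_of_next_month.replace(day=renewal_day)
    return start, end


def projected_usage(used_percent: float, today: date, renewal_day: int) -> float:
    """Extrapolate usage linearly to the end of the current cycle."""
    start, end = cycle_bounds(today, renewal_day)
    elapsed_days = max((today - start).days, 1)  # avoid dividing by zero on day one
    total_days = (end - start).days
    return used_percent * total_days / elapsed_days
```

A projection under 100 suggests credits will be left over at renewal; a projection over 100 suggests running out early, which is the signal for switching to a cheaper model.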

So let's do this.

Let's go uv tool install aiusage.

So now that's globally available.

We'll have aiusage.

And at first it's going to ask you a question.

It says, what day is your renewal day?

I know that the 24th of the month is when my Cursor credits renew.

Okay, so it'll remember this after the first time you enter it.

Do you want to save it?

Well, I guess if you say no, it won't remember it.

But yes, saved.

And it says, well, what is your current usage?

Remember right here, it said 50%.

So 50%.

Awesome, you're conserving well.

I've got way extra.

Even though I've done a ton of cursor this month, it says you're going to have 35% surplus of credits.

So you know what we're doing?

We're going on full fat, baby.

We're going to go with this model here.

We're going to choose a more expensive model.

If that said you're running out of credits, I might choose this one or the Haiku one or something like that.

Okay, so that's how you know what model you should pick.

Obviously, if money is no concern, pick Claude Sonnet or one of these really high, good models.

I don't really think that the argument that, well, it's faster, so I should choose the faster model is really that relevant.

It's already so much faster than a human.

And your goal is to get good code out of it, right?

So I think give it its best shot at writing really, really good detailed code the way you like.

So I don't know.

I don't typically choose by speed.

But if it's going to get real expensive and you're not willing to pay for it, you just want to be aware. Choose your model based on that.

But for this course, we're going to be using Claude Sonnet 4.5 Thinking across the board pretty much.

You might toggle that based on your credits and usage and so on.

|

|

|

transcript

|

10:35 |

We're ready to implement this plan.

So I'm going to go up here and just say phase one.

Now, we can keep talking over here in this and just go, okay, phase one is ready.

But what I like to do, to keep this context window as open as possible, is to create a new one.

So you could hit plus here, or you just hit command N.

And you can see the old one went right down here where my mouse is.

And now we have a new one, completely from scratch.

So I'll say something like, we're ready for phase one of, and just drag this.

I can't do the source window for some reason.

You're going to do it.

If it's open like this, you can drag it.

Whatever.

So we're ready for phase one.

Let's see what it does.

Great.

I'll help you with phase one of GittyUp.

Let's go.

You can see it's creating little to-dos for itself based on what is in the plan that we already put together.

I'm going to go back to source control.

So as it makes changes, I can see what's going on.

So remember this file structure that we had way back up here, somewhere in the little ASCII art thing there was all of this, it's sketching out that skeleton.

Look, you can see all these files start dropping in and I'll go over to the file view and we'll see things coming along here.

Look at this.

It's implementing my Michael style of doing pip-compile management with uv.

Weird.

Why is it doing that?

Added pytest.

Why not unittest?

ruff.toml.

Okay, looking good there.

And notice, oh, I haven't expanded it.

There's this GittyUp section, like here's doing Git operations.

Look at that.

It's already putting some stuff in there.

The constants have things.

Let's see.

Models.

And as it's working, this may take some time.

I don't know how long it's going to take, three, four minutes.

You can tell it like, hey, I want to keep this file.

And you can start looking through and reviewing what it's up to.

But let's just look at the models it created.

Update strategy.

These are enumerations.

Close, close, but it could do better.

We'll see in a minute.

These are nice, the repo status.

Look at this.

It's got the different statuses.

The scan config.

Look, it's using the enumeration.

Like, this is good-looking code, you guys.

This is not sloppy. It's not junky.

Really pretty well factored, pretty well thought out.

So I'm actually really excited.

The only thing I think would be nicer is if these were string enums, because these are string values, not numerical values.

I might have it fix that first in a minute here.

But it says, look, we're missing an import.
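For readers who have not seen the string-enum idea mentioned here, this is a minimal sketch of what it could look like alongside dataclass models. The member names and fields are illustrative assumptions, not the generated code. Mixing in str makes every member behave like its string value; on Python 3.11 and later, enum.StrEnum offers the same idea as a dedicated base class.

```python
from dataclasses import dataclass, field
from enum import Enum
from pathlib import Path


class RepoStatus(str, Enum):
    """Outcome of updating one repository (member names are illustrative)."""

    UP_TO_DATE = "up_to_date"
    UPDATED = "updated"
    FAILED = "failed"


@dataclass
class RepoResult:
    """What happened to a single repository (assumed fields)."""

    path: Path
    status: RepoStatus
    message: str = ""


@dataclass
class ScanConfig:
    """Settings for one scan (assumed fields)."""

    root: Path
    dry_run: bool = False
    # default_factory gives every config its own set instead of a shared one.
    exclude: set[str] = field(default_factory=lambda: {"node_modules", "venv"})
```

Because the members are real strings, a check such as result.status == "updated" works directly, and the values can be written to logs or config files without calling .value first.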

Now, here's one of the things, there's a lot going on that is amazing here, but one of the things that's really, really remarkable is, remember, I said how bad it is that chat just throws out an example.

It works, it doesn't work, who knows?

How's it even supposed to know?

But this one, it's keeping all of these things as a coherent whole.

It's like, oh, look, I missed an import in CLI, and then, so I've got to go fix that.

What did it change?

Well, we can keep all of them, but it missed this optional probably, right?

Notice down here somewhere it's using optional.

Who knows where?

Like this Optional string; the Ruff style is to say use str | None.

This is actually probably generating a Ruff warning over here, but it's still doing it this way.

Why is it doing that?

Because Michael likes it and it knows I like it that way.

All right, now look, it's updating the readme that we wrote.

This is our original plan that we gave it.

And it's like, all right, we need to update this one.

Let's see what it puts in here.

Look, it's updated it with a description of the tool, the features that we've added that it planned out and we planned out together.

How do you install it here?

Okay, the quick start that gives you a CLI demo.

Let's even look at it in a little nice review.

Notice it needs to install Colorama.

This is interesting, by the way.

Notice that it's using python -m pip.

That's not gonna work.

No module named pip because I use uv.

So let's see how it handles that.

Okay, said, oh, that's right.

Let's see, what did it say?

It didn't describe it, but it's like, that's right.

I remember Michael, Michael doesn't like it that way.

He doesn't want to use pip.

He wants to use uv.

So it's like, all right, we're gonna have to use uv pip to continue.

All right, now let me check the linting issues.

So notice we're using an allow list of options here.

And every new command is like, are you allowing me to CD?

Are you allowing me to run ruff or whatever?

I'm just going to say, run everything.

Never had a problem with that.

The problem is it could go crazy and screw up your project.

But you know what?

We're using Git, right?

We're using Git really well.

So it's not going to mess things up.

Look at this project coming together, folks.

This is insane over here.

There's a lot that's nice that is going on here.

Notice it found six errors with ruff check.

What is it going to do here?

Made a change.

Let's look at that.

All right.

So Ruff said that line was too long, so it's going to wrap it like that.

And you can opt in one at a time like, I'm not sure about that one, but yeah, this one looks good.

And you can sort of approve them.

So I said there are the four levels of saving with Git.

There's also this do, don't do, line by line from cursor, right?

So it pulled this out and put that up there into a message, right?

Instead of inline in it.

So that's kind of like another level.

You can always say, roll back the files you've changed.

Now, up here, notice somewhere a little bit above that we need to install this package as a package so we can run it locally and refer to it.

And it knows that we use uv, not just pip to do it, before it went and it started running some of its behaviors down here.

So this is really, really great.

Another thing that's pretty wild here is it's actually creating temporary Git repositories in a hierarchical directory tree so it can explore the behavior of the application.

Notice down here you can see zero repositories found.

In this one, it said one repository found.

So it's like creating little test environments for it to explore and play to make sure it's actually working.

Now, it found two repositories.

This is kind of the stuff where I'm like, wow, look at it work, look at it go, working locally with everything that it has at its disposal.
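The throwaway-directory trick the agent uses can be reproduced by hand. The sketch below is an assumption-laden simplification: it fakes repositories by creating an empty .git folder rather than running git init, and count_repos is a stand-in for whatever discovery function the real project exposes.

```python
import tempfile
from pathlib import Path


def count_repos(root: Path) -> int:
    """Count folders under root that directly contain a .git folder."""
    return sum(1 for marker in root.rglob(".git") if marker.is_dir())


def make_fake_repo(path: Path) -> None:
    """Create a folder that merely looks like a Git repo (just a .git dir)."""
    (path / ".git").mkdir(parents=True)


def run_scenarios() -> list[int]:
    """Build up a temporary tree step by step and record what is found."""
    counts = []
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        counts.append(count_repos(root))  # empty tree
        make_fake_repo(root / "project_a")
        counts.append(count_repos(root))  # one repository
        make_fake_repo(root / "team" / "project_b")
        counts.append(count_repos(root))  # a second one, nested deeper
    return counts
```

Everything lives inside a temporary directory that is deleted when the with block exits, so experiments like this never touch real projects.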

Now it's running pytest.

Looks like all the tests pass.

And we're done.

We can even go through here and say, just run pytest and see what happens.

Sure enough, looks like it works.

Give this a little summary.

And when it's done here in a second, I'll be able to say, here's this final do undo.

You can say, just keep everything.

Check this out.

It also wrote me a git commit message.

So we got a lot of changes here.

And like, what is going on with all that?

Well, here's a nice commit message.

Did you know your git commit messages can be multi-line by the way?

There's a new feature we implemented GittyUp phase one.

And what was that?

It's this, here's the more detailed steps.

So I'm gonna copy that, paste, commit.

We don't need to go push that yet.

It doesn't matter.

I just want to save it, catalog it, tag it with what was changed there.

All right.

Well, let's go back here and see phase one.

What's happened?

All right.

This is great.

Now let's watch this.

Please indicate that a phase is done, and which parts, when you're finished.

I'll put that there, but also, this one time, I'm going to put it here.

Oh, I don't want it to tell me; I wanted it to update this file accordingly.

Thanks, but no thanks.

Let's do this.

Update instead of this file.

I'll say the plan, design plan.

There we go, look at that.

Phase one complete.

Let's go emoji.

And it tells you how much coverage it got, when it was completed, little check marks.

And I guess it thinks phase two is also complete.

Okay, great.

It's partially complete, partially, sorry.

And we can use this little navigation thing to jump up and see what it added.

Oh, it's got this cool, let's look at it like this.

It's got this cool table of its progress, which I love it.

You know, how far is it done, right?

You know, we could probably even get a graph if we want, but we definitely don't need that.

Okay.

Really, really nice.

It's thinking just a moment longer, should be about done.

All right, let's see, updated design doc, that's a decent commit message.

We can put that in there.

I could keep it simpler, but you know, that's fine.

|

|

|

transcript

|

6:00 |

Now here's another pro tip for you.

We finished phase one.

Remember I told you it's really valuable to write down the plan, so we have it, and down here somewhere is phase two.

In this place right here, right?

I could come over here and say, "Okay, keep going.

Phase two, Cursor, let's go."

But see this 33 percent, this number is creeping up.

And if we go over to my Cursor account, then we go to the dashboard here.

Notice in just the last seven days I've done 20,000 lines of code.

How much have we done?

50,000 lines this month?

A lot less than last month, I'll tell you that.

It's crazy.

But that's not what I want to show you.

This is all interesting.

But what I want to show you is this usage section here.

We go down here.

This request so far, let's see.

These couple of requests that we've done so far for this course, they all kind of start right here.

That 1.3 million tokens.

It's a dollar.

That's how much our plan costs.

Our plan costs a dollar.

Okay.

I think it's a dollar well spent, especially since it's credits already paid.

I'm just redeeming my credits, but still for an entire project to get such a detailed plan, it's amazing.

And then this last one was 31 cents to implement phase one.

Now, if I just type here and say keep going, it's going to cost more and more, because it's only going to add to the amount of tokens and context that are used.

We don't need that.

We have our plan written down.

What I'm going to do is hit command N again, hit a new one, and just say we are ready for phase two with this.

Notice smaller, not zero, but much smaller context.

So it helps it focus on just phase two.

There's plenty for it to read to understand what else is going on.

And it keeps the cost down and it keeps it faster.

There's lots of reasons to do this.

So it's really good to have these plans written down.
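To see why a fresh chat per phase keeps usage down, here is a rough back-of-the-envelope model. It assumes each request resends the whole conversation so far, which is a simplification (real tools may cache or summarize context), and every number in it is made up for illustration rather than taken from the dashboard.

```python
def tokens_billed(base_context: int, tokens_per_phase: list[int], fresh_chat: bool) -> int:
    """Total tokens sent across all phases under a simple resend-everything model."""
    total = 0
    carried = 0  # conversation history carried into the next request
    for phase_tokens in tokens_per_phase:
        total += base_context + carried + phase_tokens
        if not fresh_chat:
            carried += phase_tokens  # history keeps growing in one long chat
    return total


phases = [40_000, 40_000, 40_000]  # made-up work per phase
plan = 10_000                      # made-up size of the written plan

one_long_chat = tokens_billed(plan, phases, fresh_chat=False)
new_chat_each_phase = tokens_billed(plan, phases, fresh_chat=True)
print(one_long_chat, new_chat_each_phase)
```

Under these assumptions the single long chat sends noticeably more tokens, because every later phase pays again for the earlier ones. The written plan is what makes the fresh chat workable.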

All right, let's see what it's doing here.

Working on parallel processing.

Okay, well, it's doing PyYAML configuration files.

Okay, great.

Config file to read that CLI for the number of workers to run in parallel.

Well, looks like it's doing async multiprocessing.

I'm not sure exactly.

Oh, that's not going to work.

It's like, oh, that's right.

We need to use uv.

Okay.

I'll just use uv.

So that's one of those hallucination things like, oh, I'm going to try this.

Oh, it doesn't work for you.

Many AIs would say too bad.

This one just said, oh, that's right.

pip doesn't work.

Let me try uv.

Oh, that's right.

uv is set up for you, right?

There's a lot of self correction.

That's why this stuff works so well.

All right, let's see what it's up to over here.

It's now added to the pyproject requirements that we need to use pyyaml.

Great.

It knows that I like to put that in this other tool as well in this other location.

And it's created a config file parsing tool to read it.

And let's mark these so we can keep going.

There we go.

Its scan config here now knows about the workers, using data classes.

It's updating the CLI here.

Notice how nice Git is, right?

Having this Git window over here, I don't necessarily have to rely on reviewing whatever it says.

I can just bounce around and it shows you real quickly what's changing.

So in this case, the config was included.

That was the change.

So down here.

It's got its workers.

Okay, fine.

But it's got validation, right?

It's checking.

That's part of that professional stuff.

Like don't just take whatever arguments and assume they're good.

No, you need error handling and reporting and all that.
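As a rough illustration of validating arguments instead of trusting them, here is a minimal sketch of a config data class. The `ScanConfig` shape and the `load_config` helper are assumptions made for this example, not the generated code; only the `workers` setting and its default of four come from the walkthrough.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ScanConfig:
    """Hypothetical settings object; only `workers` is mentioned in the walkthrough."""

    workers: int = 4  # default worker count reported in the phase-two summary

    def __post_init__(self) -> None:
        # bool is a subclass of int, so reject it explicitly
        if isinstance(self.workers, bool) or not isinstance(self.workers, int):
            raise TypeError(f"workers must be an integer, got {type(self.workers).__name__}")
        if self.workers < 1:
            raise ValueError(f"workers must be at least 1, got {self.workers}")


def load_config(raw: dict) -> ScanConfig:
    """Build a ScanConfig from already-parsed settings (e.g. a dict read from YAML)."""
    return ScanConfig(workers=raw.get("workers", 4))


print(load_config({"workers": 8}))
try:
    load_config({"workers": 0})
except ValueError as error:
    print(f"Rejected: {error}")
```

Validating in `__post_init__` means a bad value fails at construction time, whether it came from a config file or the command line.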

Look at this, it's built a pull-repositories async function.

We can go check this out and see what's in here.

All right, phase two is complete.

What did we get done here?

So it's been completely done.

Remember, some was done from phase one, but parallel processing: we can do sequential, or we can do N number of workers.

The default is to use workers and that's four.
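One common way to run work in parallel with a fixed number of workers is an `asyncio.Semaphore`. This is a sketch of that general technique, not the implementation the agent generated: `pull_repository` and `pull_all` are hypothetical names, and the pull itself is simulated with a short sleep.

```python
import asyncio


async def pull_repository(name: str) -> str:
    """Stand-in for a real `git pull`; sleeps briefly instead of touching the network."""
    await asyncio.sleep(0.01)
    return f"{name}: up to date"


async def pull_all(names: list[str], workers: int = 4) -> list[str]:
    """Pull every repository, with at most `workers` pulls running at once."""
    limit = asyncio.Semaphore(workers)

    async def limited(name: str) -> str:
        async with limit:
            return await pull_repository(name)

    # gather returns results in the same order as the inputs
    return await asyncio.gather(*(limited(n) for n in names))


results = asyncio.run(pull_all([f"repo-{i}" for i in range(10)], workers=4))
print(len(results))
```

Passing `workers=1` gives the sequential behavior, since only one pull can hold the semaphore at a time.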

We've got config support so we can configure this.

We have our stats that it gave us, new files.

We added a dependency, this is really great.

And let's see, it's given us a really nice Git commit message that we can drop in over here.

And let me push that to Git real quick.

And down here, notice here's the phase two parallel processing.

But if I click on this... actually, let's go back real quick. But even if I hover over it, look how it shows you all the features that went in, right?

And also how many lines changed.

Well, but you can see it's got like this really nice commit message.

The first line is all that shows up in the little Git history window and so on.

But it added all that extra detail that it's keeping.

That's super cool.

|

|

|

transcript

|

4:40 |

We got phase one, phase two complete, and also partially with the others, but there's a couple of nagging issues I'd like to deal with here.

So let's look, first of all, if I run, clear things out a bit, if I run pytest, notice the tests all passed, but there's a warning.

What is that warning?

I could run pytest -v for verbose and track it down.

Let me scroll back and see here that it happens with this Git current branch async success test.

So I don't like this.

I'd like to just have Claude take a look and see what it thinks.

Claude's on it.

So I'll just say, I see there are warnings when I run pytest.

I don't like that.

You can have fun and be a little snarky when it's really, really focused.

All right, it's running.

Oh, I do see an error, it says, or a warning, let's have a look.

I can see there's a runtime warning about a coroutine not being awaited.

That could be a sign of an actual problem, like not awaiting the coroutine is really bad.

But it turns out that it looks like it says, ah, the problem is I set up this async mock and it's doing something not quite right.

So I'm just gonna improve my mock basically.

It's creating a coroutine and not cleaning it up.

So it fixed it, and we can come over to source control and see what it did.

It said, okay, we're going to use a regular mock for our process.kill instead of an async mock, which is great.

All right.

Took away the async mock, added this one, and I can test.

Sure enough, everything's green.

Awesome.

Really nicely done.
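The kind of fix described here can be reproduced in isolation. On a plain `AsyncMock`, every child attribute is also an `AsyncMock`, so calling a method that the code under test treats as synchronous (like `kill()`) creates a coroutine that is never awaited. The sketch below uses hypothetical names (`stop_process`, `make_fake_process`) and is not the project's actual test.

```python
import asyncio
from unittest.mock import AsyncMock, Mock


async def stop_process(process) -> int:
    """Kill a subprocess and wait for it to exit."""
    process.kill()                # synchronous call, never awaited
    return await process.wait()   # coroutine, must be awaited


def make_fake_process() -> AsyncMock:
    """Fake process whose async methods are AsyncMocks and whose kill() is a plain Mock."""
    process = AsyncMock()
    process.wait.return_value = 0
    # Without this line, process.kill() would return an un-awaited coroutine
    # and Python would emit "RuntimeWarning: coroutine ... was never awaited".
    process.kill = Mock()
    return process


process = make_fake_process()
exit_code = asyncio.run(stop_process(process))
process.kill.assert_called_once()
process.wait.assert_awaited_once()
print(exit_code)
```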

So that's one little improvement that I saw that wasn't great.

The other one here, let's look at this.

We go over and look at models, and keep everything.

Keep, keep, keep, keep.

All right, if we go over to the models here and look, you'll see that up at the top we have these classes that are enumerations.

And enumerations, their values are usually integer based, right?

So we have defaults, but it's kind of like it's doing something to store them.

In Python 3.11 or something like that, they added a thing called a string enum, StrEnum, where these values can actually be stored directly as the enumeration value when it's all strings.

So let me close this and not give it a hint where I'm going.

Let's just see how it does.

And also let's reset this.

It's unrelated.

So I see we have some classes that are enum based, but they only have string values.

Now, normally what I would do is say, please make them StrEnum classes.

And why not just change it when I already had it open?

Because maybe in the plan it shows the code for them or talks about them, or it could be talking about them in the tests.

I want it to holistically update the code base to take that idea into account.

Now, normally I would say just make it a StrEnum.

But I'm just kind of leaving a little more open-ended and see if it can figure it out for itself.

Let's see how it does.

I'll help you with those.

Let me search and find them.

It's looking.

It says, okay, where is it looking?

All right.

It needs to read models and maybe constants as well.

I'm not entirely sure.

It's great.

I see that we've got some places.

And since you're using... I think 3.11 is where that got introduced.

Yeah.

Since you're using 3.14, you're good to go.

So let me fix that and use the more modern version.

All right, let's see what it did.

It's not surprising what it changed.

It added StrEnum where it was Enum before.

And yeah, that's exactly what I'd hoped.

So it's given us a nice little message here.

Off it goes.

Okay, so there's a couple of improvements that we made along the way.

And our code is just getting nicer and nicer.